Platformer - Facebook admits its mistakes


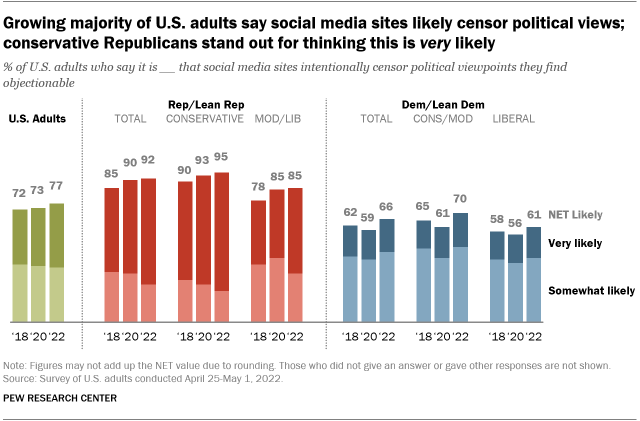

Here’s this week’s free edition of Platformer. I’m able to send it to you because of the small fraction of readers who choose to pay. If you value independent, ad-free journalism that explores the intersection of tech and democracy, I hope you’ll consider contributing and becoming a member today. Join now and you can come hang out with us in our chatty Discord server, where lately we’ve been talking about Elon Musk all day.

Facebook admits its mistakes

What the company's latest enforcement report tells us about the free-speech debate

Today, let’s talk about Facebook’s latest effort to make the platform more comprehensible to outsiders — and how its findings inform our current, seemingly endless debate over whether you can have a social network and free speech, too.

Start with an observation: last week, Pew Research reported that large majorities of Americans — Elon Musk, for example! — believe that social networks are censoring posts based on the political viewpoints they express. Here’s Emily A. Vogels:

One reason I find these numbers interesting is that of course social networks are removing posts based on the viewpoints they express. American social networks all agree, for example, that Nazis are bad and that you shouldn’t be allowed to post on their sites saying otherwise. This is a political view, and to say so should not be controversial.

Of course, that’s not the core complaint of most people who complain about censorship on social networks. Republicans say constantly that social networks are run by liberals, have liberal policies, and censor conservative viewpoints to advance their larger political agenda. (Never mind the evidence that social networks have generally been a huge boon to the conservative movement.)

And so when you ask people, as Pew did, whether social networks are censoring posts based on politics, they’re not answering the question you actually asked. Instead, they’re answering the question: for the most part, do the people running these companies seem to share your politics? And that, I think, more or less explains 100 percent of the difference in how Republicans and Democrats responded.

But whether on Twitter or in the halls of Congress, this conversation almost always takes place only at the most abstract level. People will complain about individual posts that get removed, sure, but only rarely does anyone drill down into the details: what categories of posts are removed, in what numbers, and what the companies themselves have to say about the mistakes they make.

That brings us to a document that has a boring name, but is full of delight for those of us who are nosy and enjoy reading about the failures of artificial-intelligence systems: Facebook’s quarterly community standards enforcement report, the latest of which the company released today as part of a larger “transparency report” for the latter half of 2021.
An important thing to focus on, whether you’re an average user worried about censorship or someone who recently bought a social network promising to allow almost all legal speech, is what kind of speech Facebook removes. Very little of it is “political,” at least in the sense of “commentary about current events.” Instead, it’s posts related to drugs, guns, self-harm, sex and nudity, spam and fake accounts, and bullying and harassment.

To be sure, some of these categories are deeply enmeshed in politics — terrorism and “dangerous organizations,” for example, or what qualifies as hate speech. But for the most part, this report chronicles stuff that Facebook removes because it’s good for business. Over and over again, social products find that their usage shrinks when even a small percentage of the material they host includes spam, nudity, gore, or people harassing each other. Usually social companies talk about their rules in terms of what they’re doing “to keep the community safe.” But the more existential purpose is to keep the community returning to the site at all.

This is what makes Texas’ new social media law, which I wrote about yesterday, potentially so dangerous to platforms: it seemingly requires them to host material that will drive away their users.

At the same time, it’s clear that removing too many posts also drives people away. In 2020, I reported that Mark Zuckerberg told employees that censorship was the No. 1 complaint of Facebook’s user base.

A more sane approach to regulating platforms would begin with the assumption that private companies should be allowed to establish and enforce community guidelines, if only because their companies likely would not be viable without them. From there, we can require platforms to tell us how they are moderating, under the idea that sunlight is the best disinfectant. And the more we understand about the decisions platforms make, the smarter the conversation we can have about what mistakes we’re willing to tolerate.
As the content moderation scholar Evelyn Douek has written: “Content moderation will always involve error, and so the pertinent questions are what error rates are reasonable and which kinds of errors should be preferred.” Facebook’s report today highlights two major kinds of errors: ones made by human beings, and ones made by artificial intelligence systems.

Start with the humans. For reasons that the report does not disclose, between the last quarter of 2021 and the first quarter of this year, its human moderators suffered “a temporary decrease in the accuracy of enforcement” on posts related to drugs. As a result, the number of people requesting appeals rose from 80,000 to 104,000, and Facebook ultimately restored 149,000 posts that had been wrongfully removed.

Humans arguably had a better quarter than Facebook’s automated systems, though. Among the issues with AI this time around:

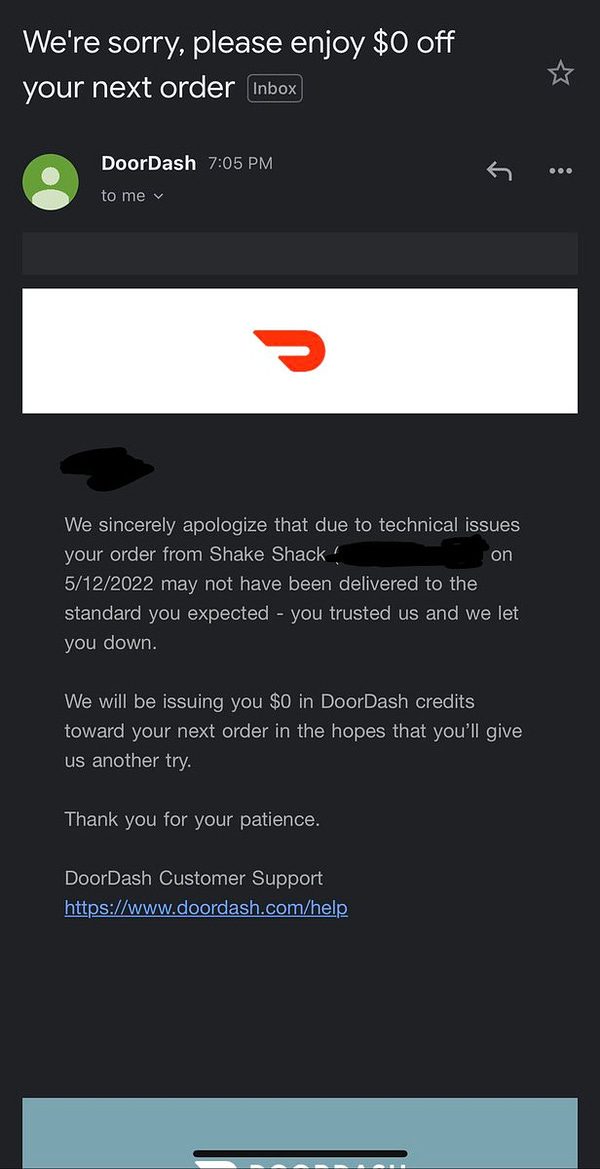

Of course, there was also good evidence that automated systems are improving. Most notably, Facebook took action on 21.7 million posts that violated policies related to violence and incitement, up from 12.4 million the previous quarter, “due to the improvement and expansion of our proactive detection technology.” That raises, uh, more than a few questions about what escaped detection in earlier quarters.

Still, Facebook shares much more about its mistakes than other platforms do; YouTube, for example, shares some information about videos that were taken down in error, but not by category and without any information about the mistakes that were made.

And yet still there’s so much more we would benefit from knowing — from Facebook, YouTube, and all the rest. How about seeing all of this data broken down by country, for example? How about seeing information about more explicitly “political” categories, such as posts removed for violating policies related to health misinformation? And how about seeing it all monthly, rather than quarterly?

Truthfully, I don’t know that any of that would do much to shift the current debate about free expression. Partisans simply have too much to gain politically by endlessly crying “censorship” whenever any decision related to content moderation goes against them.

But I do wish that lawmakers would at least spend an afternoon enmeshing themselves in the details of a report like Facebook’s, which lays out both the business and technical challenges of hosting so many people’s opinions. It underscores the inevitability of mistakes, some of them quite consequential. And it raises questions that lawmakers could answer via regulations that might actually withstand First Amendment scrutiny, such as what rights to appeal a person should have if their post or account is removed in error.

There’s also, I think, an important lesson for Facebook in all that data.
Every three months, according to its own data, millions of its users are seeing their posts removed in error. It’s no wonder that, over time, this has become the top complaint among the user base. And while mistakes are inevitable, it’s also easy to imagine Facebook treating these customers better: explaining the error in detail, apologizing for it, inviting users to submit feedback about the appeals process. And then improving that process.

The status quo, in which those users might see a short automated response that answers none of their questions, is a world in which support for the social network — and for content moderation in general — continues to decline. If only to preserve their businesses, the time has come for platforms to stand up for it.

Musk Reads

Well, let’s see. Elon Musk’s attempt to renegotiate his Twitter deal saw him on Tuesday issuing an ultimatum of sorts, tweeting that “this deal cannot move forward” unless concerns about spam and fake accounts are resolved to his satisfaction. Matt Levine points out that those concerns can never be resolved to his satisfaction, because they are transparently phony concerns intended to drive Twitter back to the negotiating table. Over a deal that he already signed, but now regrets.

Meanwhile, Twitter filed a preliminary proxy statement with the Securities and Exchange Commission — a necessary step on the road to getting shareholder approval for the deal. The most interesting tidbit in there, to me, is that Musk says he asked former CEO Jack Dorsey to remain on the board, but Dorsey declined. This would seem to blow up a lot of theories about how Dorsey had orchestrated this whole disaster as a way to eventually return as CEO.

The document is also, as Levine points out, an extended chronicle of Musk violating US securities laws. For which there are unlikely to be any meaningful penalties.
It seems like there are only two remaining paths forward: one in which Musk says definitively that he won’t buy the company at the price he agreed to, and one in which he attempts to sever ties with Twitter completely. Both are bad for the company, the rule of law, etc.

Finally, would you believe that today three more senior employees quit Twitter?

Governing

Industry

Those good tweets

Talk to me

Send me tips, comments, questions, and widely viewed content: casey@platformer.news.

By design, the vast majority of Platformer readers never pay anything for the journalism it provides. But you made it all the way to the end of this week’s edition — maybe not for the first time. Want to support more journalism like what you read today?
