📝 Guest post: How to Select Better ConvNet Architectures for Image Classification Tasks*

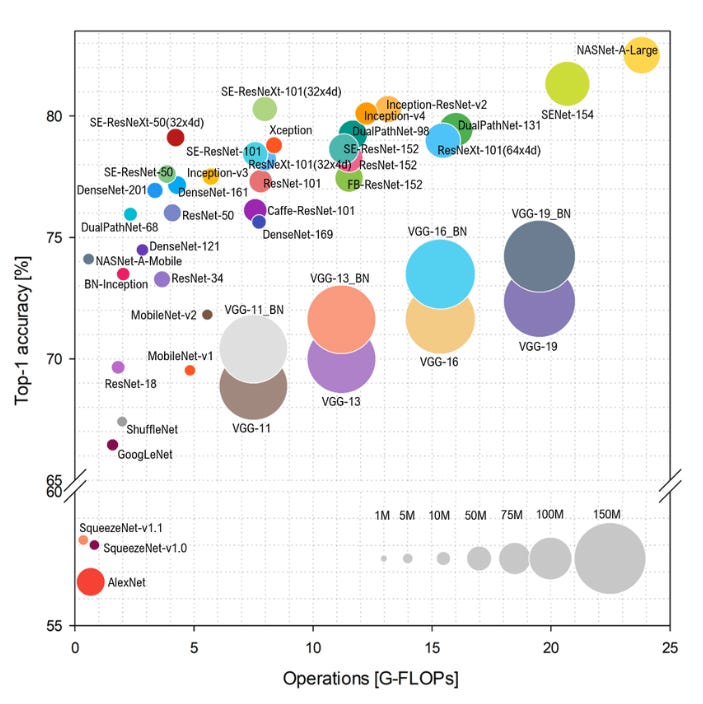

Was this email forwarded to you? Sign up here There's no go-to formula for any ML project. A huge part of improving machine learning systems is experimenting until you find a solution that works well for your use case. However, an overwhelming amount of tools and model architectures are available for computer vision tasks. In this article, Superb AI’s team provides you with a strategy to select a suitable architecture for your image classification project. Image Classification Task OverviewImage Classification is a task that attempts to comprehend an entire image as a whole. The goal is to classify the image by assigning it to a specific label. Image classification can be considered a fundamental problem because it forms the basis for other computer vision tasks like object localization, detection, segmentation, etc. The ImageNet Challenge is the (unofficial) benchmark standard for most computer vision algorithms when it comes to image classification. It is an annual competition that uses subsets from a large dataset of annotated photographs comprising over 1 million images across 1,000 object classes, AKA ImageNet. Historically, machine learning on images had been a challenging task until 2012, when an extensive deep convolutional neural network called AlexNet showed excellent performance on the ImageNet Challenge and caught everyone’s attention. Ever since, convolutional neural networks, or ConvNets, have evolved very fast and surpassed human-level performance on visual tasks. Fast forward to 2022, and Vision Transformer or ViT models are currently topping the charts. Transformer architecture, initially proposed for natural language processing, adopts the self-attention mechanism - having the model focus on some part of the input text sequence when predicting each word. The Vision Transformer model adapts the attention idea to work on images, where the equivalent of words are patches that the input image is broken into. The concept of applying Transformer architecture to images is very interesting and potentially the source of breakthroughs. At the time of writing this blog, ViT models do scale well with datasets but are still problematic in small-scale datasets. We don’t understand them fully yet and are just starting to put ViT models into practice. ConvNets, on the other hand, were put into practice for more than a decade and have become very effective and popular due to the democratization of data, computational power, and abundance of tools and frameworks that followed. And since the goal of this article is to help practitioners to choose the model architecture for real-world computer vision problems, supervised image classification using ConvNets is what we will focus on. Selecting a ConvNet ArchitectureThere are plenty of ConvNet architectures for image classification that you can employ for real-world computer vision projects. However, choosing which model architecture to use is not always an exact science. But we should always start any machine learning project by setting up goals and expectations. Determine Desired PerformanceHow can one determine a reasonable level of performance to expect for your specific use case? Model accuracy and inference speed both matter to ML engineers: • Accuracy - We’d like to use a model that gives us the highest accuracy, which means a better experience for end-users. • Speed of model’s training and inference - We’d want the model to train and generate predictions as fast as possible because, in production, we might have to serve thousands of users and need to retrain the model quickly if any problems occur. Generally, we need to use a deeper (larger) model to get higher accuracy. But with a larger model comes a larger number of parameters that make it slower to execute. To visualize the accuracy/complexity tradeoff among common SOTA architectures in computer vision, you could refer to the Benchmark Analysis of Representative Deep Neural Network Architectures paper (published in 2018) that features a very useful chart. For most deep learning deployments in the industry, we should consider the accuracy level that is necessary for an application to be safe, computationally affordable, and appealing to users. For example, suppose you know that the end goal of your project is to deploy the model on edge devices. In that case, complexity is a more critical concern, and you might have to sacrifice accuracy a bit (MobilNet architecture is a good choice here). On the other hand, if training cost and prediction latency are not a concern for your project, and you are after the highest accuracy, you might want to choose the largest/fanciest model or even consider an ensemble of several model architectures. Once you have an idea of your realistic desired performance, your architecture search decisions will be guided by this goal. In the next section, let’s zoom in on some of the most commonly used SOTA ConvNet architectures. Popular ConvNet ArchitecturesThis section will briefly look at various ConvNet architectures widely used nowadays, i.e., their evolution, advantages, and disadvantages. VGG FamilyTo improve upon AlexNet, researchers started to increase the depth or number of convolutional layers. Adding more layers resulted in better classification accuracy. VGG architecture (proposed in 2014) had a deeper network structure and improved accuracy but also doubled its size and increased runtimes compared to AlexNet. It has 16 convolutional layers (and 19 in the later iteration) and uses 3x3 convolutions exclusively without losing accuracy. Why choose VGG architecture? Its simple architecture makes it relatively easy to understand and implement. It performs well on common image classification scenarios. VGG architectures have also been found to learn hierarchical elements of images like texture and content, making them popular choices for training style transfer models. InceptionThe Inception network came to the world in 2014. The main hallmark of this architecture is building a convolutional neural network while improving the utilization of computing resources. When lining up the convolutional and pooling layers in a network, the designer has multiple choices, and the best decision is not obvious. Instead of deciding beforehand which sequence of layers is the most effective, the Inception module provides several alternatives that the network can choose from based on data and training. Why choose Inception architecture? It’s a deeper CNN than VGG, with fewer parameters and significantly higher accuracy. This results in a shorter training time and smaller size of the trained model. On the other hand, this architecture can be pretty complex to implement from scratch. ResNetResNet or Residual Neural Network, introduced in 2015, continued the trend of increasing the depth of the models. One downside of adding too many layers is that doing so makes the network more prone to overfitting the training data due to the vanishing gradient problem. To solve this, ResNet introduced a novel residual module architecture with skip connections that allow information to flow from earlier layers in the network to later layers, creating an alternative shortcut path for the gradient to flow through. This fundamental breakthrough allowed for the training of extremely deep neural networks with hundreds of layers. The most common variant of ResNet is ResNet50, containing 50 layers, but larger variants can have over 100 layers. Why choose ResNet architecture? ResNet is widely used as it performs really well on classification tasks specifically. On top of that, it uses global average pooling, which accelerates the training (compared to the previous architectures like VGG). If you want something tried and true, this is a good choice. XceptionThe Xception model was proposed by Francois Chollet (creator of Keras) in 2017. This model is an extension of Inception architecture that replaces the inception model with depthwise separable convolutions and ResNet-style skip connections. Why choose Xception architecture? Xception offers a combination of both ResNet and Inception architectural features in a simpler design. This makes architecture easy to define and modify. Linear stack layers make training faster than Inception, and it slightly outperforms Inception in terms of accuracy on ImageNet. MobileNetThe MobileNet architecture was developed by Google to find a neural network suitable for edge devices such as smartphones or tablets. MobileNet architectures introduced two important innovations: depthwise separable convolutions and a width multiplier (hyperparameter). Depthwise, separable convolutions replaced regular convolution layers with fewer parameters and are more computationally efficient. The width multiplier is a parameter that controls how many parameters are used for each convolution layer. This allows for the creation of several networks along a tradeoff curve of size and speed versus accuracy. Why choose MobileNet architecture? An array of models can be created with this architecture so that more powerful devices can receive larger, more accurate models. In contrast, less powerful devices can use smaller, less accurate models. If you need a model that you can deploy on edge devices with scarce computational resources, MobileNet is the way to go. EfficientNetA later iteration of MobileNetV2 by the same team is called EfficientNet. Inverted residual bottlenecks are again the basic building blocks, but the optimization goal was tweaked towards prediction accuracy rather than mobile inference latency. Convolutional architectures have three main ways of scaling: use more layers, use more channels in each layer or use higher-resolution input images. The EfficientNet paper points out that these scaling axes are not independent, and this family of networks is scaled along three scaling axes rather than just one. Why choose EfficientNet architecture? This architecture is a workhorse of many applied ML teams because it offers optimal performance levels for every weight count. If you don’t have size/speed restrictions (inference on auto-scaling cloud system) and want the best/fanciest model, consider EfficientNet. Neural Architecture SearchFor much of the last decade, new state-of-the-art results were achieved with new network architecture manually created by researchers. As with many tasks that rely on human intuition and experimentation, someone eventually asked if a machine could do it better. Neural architecture search (NAS) automates the process of neural network design. Given a goal (e.g., model accuracy) and constraints (network size or inference latency), these methods rearrange composable blocks of layers to form new architectures. Though NAS has found new architectures that outperform their human-designed peers, the process is incredibly computationally expensive. Why choose NAS architecture? Besides being computationally expensive, the network architectures discovered by NAS techniques typically don’t look like those designed by humans. We don’t know why they work well, so we cannot move some ideas to other architectures. Nevertheless, NAS achieves high accuracy, and, in some cases, that’s enough.

Want to discuss your particular use case? Reach out to our team, and we can put you on the right track! *This post was written by Dasha Gurova, community manager at Superb AI, and originally posted here. We thank Superb AI for their ongoing support of TheSequence.You’re on the free list for TheSequence Scope and TheSequence Chat. For the full experience, become a paying subscriber to TheSequence Edge. Trusted by thousands of subscribers from the leading AI labs and universities. |

Older messages

📃🪄🖼 Edge#204: Inside Imagen. Google’s Impressive Text-to-Image Alternative to OpenAI’s DALLE-2

Thursday, June 30, 2022

Imagen provides a simpler architecture able to generate photorealistic images from language inputs

🎂🎉🦾 Happy Birthday TheSequence!

Wednesday, June 29, 2022

50% OFF to celebrate our 2nd anniversary!

🔴🟨 Edge#203: What are Graph Recurrent Neural Networks?

Tuesday, June 28, 2022

+ what GNNs on Dynamic Graphs; and the exploration of DeepMind's Jraph, a GNN Library for JAX.

📝 Guest post: Right-Sizing Training Workloads with NVIDIA A100 and A40 GPUs*

Monday, June 27, 2022

In this article, CoreWeave's team explains how it is helping companies deploy more timely and efficient AI applications by right-sizing projects to optimize training on both NVIDIA A100 and A40 GPU

📹 🤖 Transformers for Video

Sunday, June 26, 2022

Weekly news digest curated by the industry insiders

You Might Also Like

Import AI 399: 1,000 samples to make a reasoning model; DeepSeek proliferation; Apple's self-driving car simulator

Friday, February 14, 2025

What came before the golem? ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

Defining Your Paranoia Level: Navigating Change Without the Overkill

Friday, February 14, 2025

We've all been there: trying to learn something new, only to find our old habits holding us back. We discussed today how our gut feelings about solving problems can sometimes be our own worst enemy

5 ways AI can help with taxes 🪄

Friday, February 14, 2025

Remotely control an iPhone; 💸 50+ early Presidents' Day deals -- ZDNET ZDNET Tech Today - US February 10, 2025 5 ways AI can help you with your taxes (and what not to use it for) 5 ways AI can help

Recurring Automations + Secret Updates

Friday, February 14, 2025

Smarter automations, better templates, and hidden updates to explore 👀 ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

The First Provable AI-Proof Game: Introducing Butterfly Wings 4

Friday, February 14, 2025

Top Tech Content sent at Noon! Boost Your Article on HackerNoon for $159.99! Read this email in your browser How are you, @newsletterest1? undefined The Market Today #01 Instagram (Meta) 714.52 -0.32%

GCP Newsletter #437

Friday, February 14, 2025

Welcome to issue #437 February 10th, 2025 News BigQuery Cloud Marketplace Official Blog Partners BigQuery datasets now available on Google Cloud Marketplace - Google Cloud Marketplace now offers

Charted | The 1%'s Share of U.S. Wealth Over Time (1989-2024) 💰

Friday, February 14, 2025

Discover how the share of US wealth held by the top 1% has evolved from 1989 to 2024 in this infographic. View Online | Subscribe | Download Our App Download our app to see thousands of new charts from

The Great Social Media Diaspora & Tapestry is here

Friday, February 14, 2025

Apple introduces new app called 'Apple Invites', The Iconfactory launches Tapestry, beyond the traditional portfolio, and more in this week's issue of Creativerly. Creativerly The Great

Daily Coding Problem: Problem #1689 [Medium]

Friday, February 14, 2025

Daily Coding Problem Good morning! Here's your coding interview problem for today. This problem was asked by Google. Given a linked list, sort it in O(n log n) time and constant space. For example,

📧 Stop Conflating CQRS and MediatR

Friday, February 14, 2025

Stop Conflating CQRS and MediatR Read on: my website / Read time: 4 minutes The .NET Weekly is brought to you by: Step right up to the Generative AI Use Cases Repository! See how MongoDB powers your