Study: Ritalin Works, But School Isn't Worth Paying Attention To

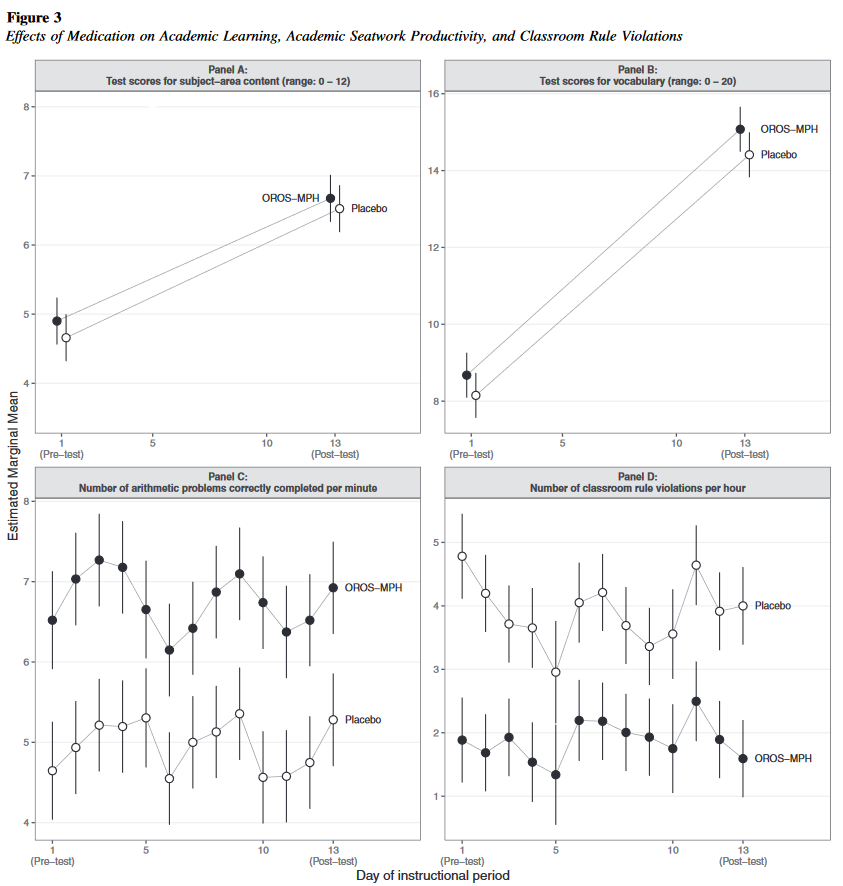

Recent study, Pelham et al: The Effect Of Stimulant Medication On The Learning Of Academic Curricula In Children With ADHD. It’s gotten popular buzz as “scientists have found medication has no detectable impact on how much children with ADHD learn in the classroom” and “Medication alone has no impact on learning”. This probably comes as a surprise if you’ve ever worked with stimulants, ADHD patients, or classrooms, so let’s take a look.

173 kids, mostly Hispanic boys age 7-12, were in a “therapeutic ADHD summer camp” intended to help them learn focusing and attention-directing techniques (style tip: do not call this a “concentration camp”). The kids had two short classes each day, one on vocabulary and one on a grab bag of different subject matters. For the first three weeks, half the kids got Concerta (ie long-acting Ritalin) and the other half didn’t, then they switched for the next three weeks. As an additional test, there was a ten minute period each day when the kids were asked to do math problems as fast as possible.

Here are the results:

The bottom left graph is how many math problems kids completed per minute. Kids on Concerta complete about 50% more math problems per minute, and this difference is significant. Bottom right is number of classroom rule violations per hour. Kids on Concerta only cause trouble about half as often, and this is significant too. So the Concerta’s clearly doing something, and I think it would be fair to describe that thing as “making kids pay more attention”.

The top two graphs are the test scores. The Concerta kids do slightly better on the tests; this is just above the significance threshold for vocabulary, and just below it for other subjects, but probably in real life it’s having a small positive effect in both cases. But there’s no test score x condition interaction.
That is, having been on Concerta throughout the course doesn’t make kids do better on the post-test, beyond the small boost it gives to test-taking ability in general. In other words, it doesn’t look like it helped them learn more during the course.

(to be clear, the problem isn’t that this is a bad curriculum that doesn’t help kids learn. The kids did do better on the post-test than the pre-test. It’s just that paying more attention to the curriculum didn’t improve that learning compared to paying less attention)

This matches my general impression of the rest of the stimulant/ADHD literature, see eg this old review by Swanson and this newer one. Stimulants often raise grades, usually by improving students’ ability to concentrate on tests, or their likelihood of finishing homework on time. But if you take care to separate out how much people are learning, it usually doesn’t change by very much.

I’m not sure this says anything bad about Concerta. Concerta’s only claim was that it helps people pay attention better, and this study bears that out. Kids who take Concerta do better on tests, complete a homework analogue faster, and cause less trouble in class. But it does say something bad, or at least weird, about the role of attention in school. For some reason, paying attention better doesn’t (always) mean you learn more. Why not?

Maybe classroom instruction is redundant? That is, teachers say everything a few times, so if you miss it once, it doesn’t matter? But this doesn’t fit well with overall retention being pretty poor. The average score on the subject tests was around 50%; on the vocab, 75%.

Maybe all students heard the instruction enough times for it to sink in, and their limit was innate intelligence - their ability to comprehend complicated concepts. Maybe some “subject area” questions were on more complicated topics than others, but this doesn’t make sense for the vocabulary tests.
Surely all vocabulary words are equally complicated, and you just have to hear them enough times for them to sink in? But if this were true, we would have proven that it’s impossible for a student to get a score other than 100% or 0% on a test of novel vocabulary words that they spent equal amounts of time learning. But I took vocab tests in school - usually twenty words taught together as a single lesson, where we drilled each of them the same amount - and often got scores other than 100% or 0%.

So maybe even among seemingly similar words, some are arbitrarily easier to remember than others, maybe because they sound like another word that provides a natural mnemonic, or because they seem unusually mellifluous, or have especially relevant definitions. So maybe there’s some hidden “difficulty level” of each word for a particular student, and if a word is below your difficulty threshold, then it takes very few repetitions to sink in, and if above, more repetitions won’t help.

This is reminding me of an old post I wrote, Why Do Test Scores Plateau? It looked at student doctors (mostly surgeons), who took a test in their first, second, third, fourth, and fifth years of residency. The surgeons did much better in their second compared to their first year, a little better in their third compared to their second year, and then plateaued. The effect was so strong that there was only a 52% chance that a randomly-selected fifth-year surgeon would outperform a randomly-selected fourth-year surgeon. Yet there were no ceiling effects - smart fifth-year surgeons outperformed dumb fifth-year surgeons, and everyone was well short of 100%. So why weren’t they learning anything in their fifth year?

At the time, I guessed that maybe intelligence determines how detailed a branching-structure of facts your brain can support. Difficult and detailed surgery facts build on simpler, more fundamental facts, but at some point you saturate your brain’s resolution and stop adding more details.
(another way to think of this is via the Homer Simpson Effect - the tendency for learning one thing to make you forget other, similar things, presumably because your brain can’t consistently keep the two thoughts distinct and keeps the newer one rather than having them both interfere with each other all the time)

Something like this must be true if we assume that it takes a certain intelligence level to learn surgery - or quantum physics, or whatever. Otherwise you could get a very dumb person, keep teaching them a little more quantum physics every day for twenty years, and eventually expect them to know as much as a smart person would after getting a four-year degree. I’ve never heard of someone formally trying this, but I predict it wouldn’t work.

This is already irresponsibly speculative, so no harm in making even wilder leaps - I wonder if there’s a human equivalent of AI scaling laws. These are the rules about how AI performance increases when you add more parameters, training data, or compute. I don’t claim to understand the exact functions (see link for details), but the short version is that high performance requires not being bottlenecked on any of them.

Continuing to be irresponsible: are there human equivalents of these? Parameter count might be related to intelligence (which probably has at least a little to do with literal neuron number; the remainder might be how good your neurons are at maintaining patterns that don’t interfere with each other). Training data is how much you’ve learned about a subject, and compute is spending time reviewing and re-reviewing things until you’ve mastered them.

But how would you differentiate this for learning a single vocabulary word? There’s just one piece of relevant data - a flashcard with the word and its definition. All that’s left is how many times you review it - and maybe how much attention you’re paying each time? But this study suggests the latter has pretty small effects in a naturalistic setting.
These students must be bottlenecked by some other resource.
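
For anyone who wants to poke at the “hidden difficulty level” idea from the vocabulary discussion, here is a minimal toy sketch. Everything in it is an assumption made up for illustration, not anything from the Pelham study: the word list, the difficulty numbers, the threshold, the `reps_needed` parameter, and the choice to model “attention” as the number of repetitions that actually register.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Word:
    name: str
    difficulty: float  # hypothetical per-student difficulty, arbitrary units


def words_learned(words, threshold, effective_reps, reps_needed=2):
    """Count words that sink in under the toy threshold model.

    A word is learned only if it is below the student's difficulty
    threshold AND it was reviewed at least `reps_needed` times.
    Repetitions beyond `reps_needed` add nothing.
    """
    if effective_reps < reps_needed:
        return 0
    return sum(1 for w in words if w.difficulty < threshold)


# Made-up word list and difficulties, purely for illustration.
VOCAB = [
    Word("mellifluous", 0.9),
    Word("arid", 0.2),
    Word("candid", 0.3),
    Word("obdurate", 0.8),
    Word("frugal", 0.4),
]

THRESHOLD = 0.5  # assumed student-specific threshold

# "Attention" is modeled as how many repetitions actually register.
inattentive = words_learned(VOCAB, THRESHOLD, effective_reps=3)
attentive = words_learned(VOCAB, THRESHOLD, effective_reps=6)

print(f"inattentive: {inattentive}/{len(VOCAB)}")
print(f"attentive:   {attentive}/{len(VOCAB)}")
```

Under these assumptions both students land on the same score, which is neither 0% nor 100%: doubling the repetitions that register changes nothing once the minimum is met. That is only what the toy model says by construction; it is a way of stating the hypothesis precisely, not evidence for it.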
