Astral Codex Ten - Absurdity Bias, Neom Edition

Alexandros M expresses concern about my post on Neom. My post mostly just makes fun of Neom. My main argument against it is absurdity: a skyscraper the height of WTC1 and the length of Ireland? Come on, that’s absurd! But isn’t the absurdity heuristic a cognitive bias? Didn’t lots of true things sound absurd before they turned out to be true (eg evolution, quantum mechanics)? Don’t I specifically believe in things many people have found self-evidently absurd (eg the multiverse, AI risk)? Shouldn’t I be more careful about “this sounds silly to me, so I’m going to make fun of it”?

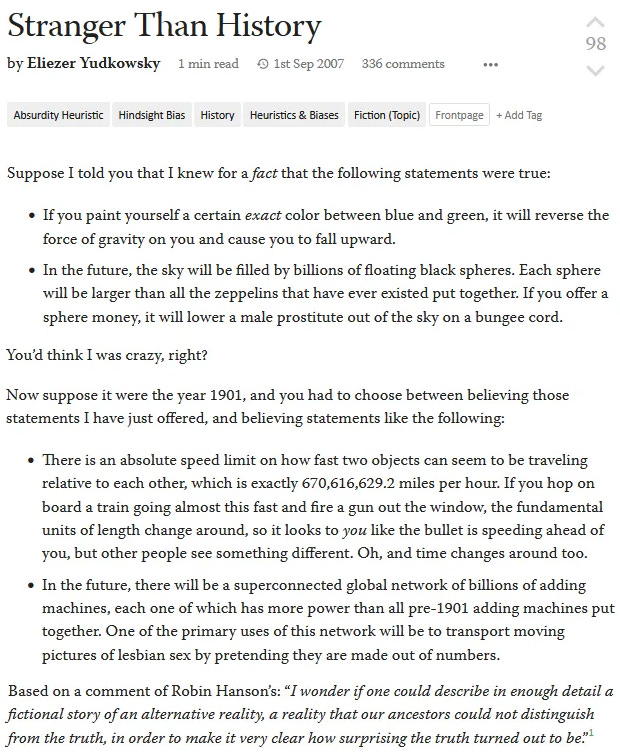

Here’s a possible argument why not: everything has to bottom out in absurdity arguments at some level or another.

Suppose I carefully calculated that, with modern construction techniques, building Neom would cost 10x more than its allotted budget. This argument contains an implied premise: “and the Saudis can’t construct things 10x cheaper than anyone else”. How do we know the Saudis can’t construct things 10x cheaper than anyone else? The argument itself doesn’t prove this; it’s just left as too absurd to need justification.

Suppose I did want to address this objection. For example, suppose I carefully researched existing construction projects in Saudi Arabia, checked how cheap they were, calculated how much they could cut costs using every trick available to them, and found it was less than 10x. My argument still contains the implied premise “there’s no Saudi conspiracy to develop amazing construction technology and hide it from the rest of the world”. But this is another absurdity heuristic - I have no argument beyond that such a conspiracy would be absurd. I might eventually be able to come up with an argument supporting this, but that argument, too, would have implied premises depending on absurdity arguments.

So how far down this chain should I go? One plausible answer is “just stop at the first level where your interlocutors accept your absurdity argument”.

Anyone here think Neom’s a good idea? No? Even Alexandros agrees it probably won’t work. So maybe this is the right level of absurdity.

If I were pitching my post towards people who mostly thought Neom was a good idea, then I might try showing that it would cost 10x more than its expected budget, and see whether they agreed with me that Saudis being able to construct things 10x cheaper than anyone else was absurd. If they did agree with me, then I’ve hit the right level of argument. And if they agree with me right away, before I make any careful calculations, then it was fine for me to just point to it and gesture “That’s absurd!”

I think this is basically the right answer for communications questions, like how to structure a blog post. When I criticize communicators for relying on the absurdity heuristic too much, it’s because they’re claiming to adjudicate a question with people on both sides, but then retreating to absurdity instead. When I was young a friend recommended me a pseudoscience book on ESP, with lots of pseudoscientific studies proving ESP was real. I looked for skeptical rebuttals, and they were all “Ha ha! ESP? That’s absurd, you morons!” These people were just clogging up Google search results that could have been giving me real arguments.

But if nobody has ever heard of Neom, and I expect my readers to immediately agree that Neom is absurd, then it’s fine (in a post describing Neom rather than debating it) to stop at the first level.

(I do worry that it might be creating an echo chamber; people start out thinking Neom is a bad idea for the obvious reasons, then read my post and think “and ACX also thinks it’s a bad idea” is additional evidence; I think my obligation here is to not exaggerate the amount of thought that went into my assessment, which I hope I didn’t.)

But the absurdity bias isn’t just about communication. What about when I’m thinking things through in my head, alone? I’m still going to be asking questions like “is Neom possible?” and having to decide what level of argument to stop at.

To put it another way: which of your assumptions do you accept vs. question? Question none of your assumptions, and you’re a closed-minded bigot. Question all of your assumptions, and you get stuck in an infinite regress. The only way to escape (outside of a formal system with official axioms) is to just trust your own intuitive judgment at some point. So maybe you should just start out doing that.

Except that some people seem to actually be doing something wrong. The guy who hears about evolution and says “I know that monkeys can’t turn into humans, this is so absurd that I don’t even have to think about the question any further” is doing something wrong. How do you avoid being that guy?

Some people try to dodge the question and say that all rationality is basically a social process. Maybe on my own, I will naturally stop at whatever level seems self-evident to me. Then other people might challenge me, and I can reassess. But I hate this answer. It seems to be preemptively giving up and hoping other people are less lazy than you are. It’s like answering a child’s question about how to do a math problem with “ask a grown-up”. A coward’s way out!

Eliezer Yudkowsky gives his answer here:

This is all true as far as it goes, but it’s still just rules for the rare situations when your intuitive judgments of absurdity are contradicted by clear facts that someone else is handing you on a silver platter. But how do you, pondering a question on your own, know when to stop because a line of argument strikes you as absurd, vs. to stick around and gather more facts and see whether your first impressions were accurate? I don’t have a great answer here, but here are some parts of a mediocre answer:
