The Diff - Snowflake, Revisited

This is the weekly free edition of The Diff. Today, we’re talking about Snowflake—what it is today, and where it’s going. Subscribers-only posts last week included:

Snowflake, Revisited

Plus! Microsoft's Antitrust Edge; The Free Time Dividend; Feedback Loops; The News Business; Ending the Traffic Jam; Diff Jobs

Welcome to the free weekly edition of The Diff! This newsletter goes out to 45,086 readers, up 427 since last week. In this issue:

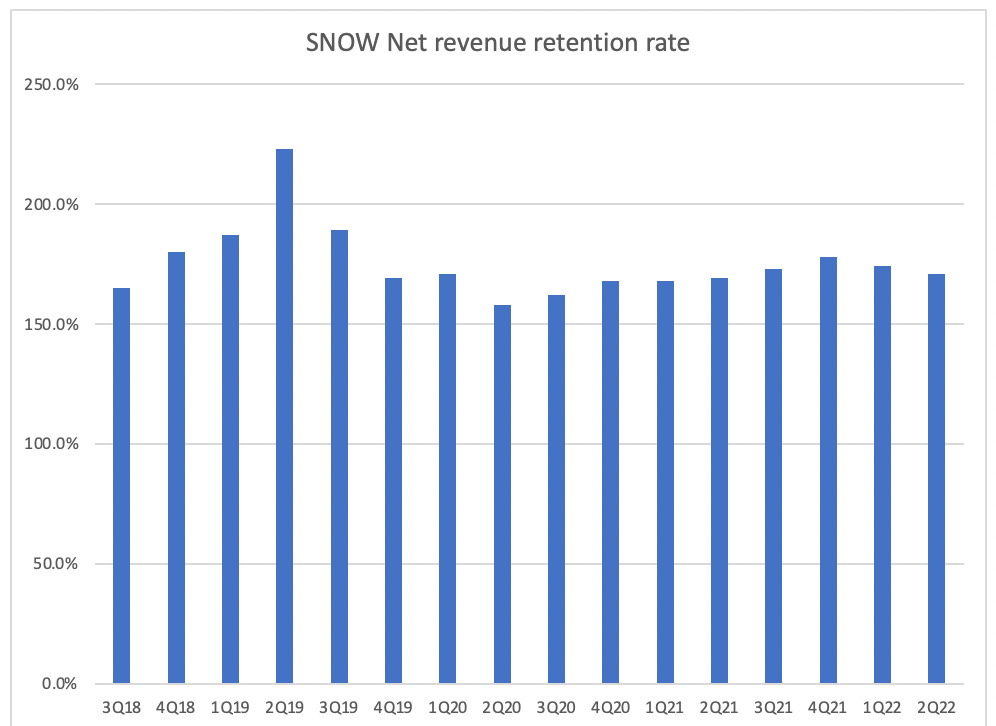

When Snowflake went public in 2020, it had the kind of valuation that leads commentators to reach for terms more extreme than “nosebleed”—177x trailing sales, which is the kind of multiple companies can get when they’re just testing out a new product, but one that almost never applies with a revenue run-rate in the hundreds of millions. In the two years since then, Snowflake has experienced both the good kind of multiple compression (their revenue run-rate was $532m at IPO and is $1,989m as of last quarter) and the bad kind (the stock is down by a third).

Snowflake still trades at a valuation that would make a growth investor circa 2018 blanch. It’s priced at 32x last year’s sales. Before 2020, ServiceNow, previously the canonical example of a growth company that earned a premium multiple at a mature state, rarely cracked 20x.

But Snowflake is also a business with extraordinary topline metrics. Not only has revenue grown, but the dominant factor in that revenue growth is net dollar retention. In general, enterprise companies that can hit 130% net dollar retention—i.e. for every $100 spent by customers last year, revenue from those customers alone is $130 this year, inclusive of customers whose spending dropped to $0—are in a good position and get a premium valuation. Snowflake’s average dollar retention has been around 175% from mid-2018 to present, and its decline has been gradual. The company does expect dollar retention to decline eventually, as more of their customers mature, but it hasn’t happened just yet. Put another way, if you take that net dollar retention and compound it out on a quarterly basis, a customer who did $100k in revenue as of the second quarter of 2018 would be producing $933k in revenue as of last quarter.¹

Understanding this growth means understanding what, exactly, Snowflake is selling.
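The compounding arithmetic above can be sketched in a few lines. This is a minimal illustration, not the calculation behind the $933k figure: it assumes a flat 175% annual net dollar retention (the approximate average cited above) held for 16 quarters, whereas the real figure was compounded from reported numbers that vary by quarter. The function name and the horizon are hypothetical.

```python
def compound_quarterly(start_revenue, annual_ndr_by_quarter):
    """Compound revenue one quarter at a time.

    Each entry is the trailing-annual net dollar retention in effect for
    that quarter (1.75 means 175%); its fourth root is the quarterly factor.
    """
    revenue = start_revenue
    for annual_ndr in annual_ndr_by_quarter:
        revenue *= annual_ndr ** 0.25
    return revenue


# Assumed input: a flat 175% for 16 quarters (four years).
flat_path = [1.75] * 16
projected = compound_quarterly(100_000, flat_path)
print(f"${projected:,.0f}")
```

Under these simplifying assumptions, a $100k customer ends up at roughly $938k, in the same neighborhood as the figure above. Swapping in a declining retention path shows how sensitive the result is to when the decline begins.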
The company describes it as a “data cloud”: they’re offering ways for customers to store and query all of their data on top of existing cloud services like AWS, Azure, and Google Cloud Platform. Within that category, they’re solving several problems at once: multiple inconsistent data sources that don’t talk to each other, manual processes for scaling storage and compute resources, and rules for determining who can access what. Not to mention the problem of querying terabytes or even petabytes of customer data in a performant and reliable way. This means that Snowflake’s growth happens in at least four dimensions:

And these can compound. A company that’s figured out more ways to use data will look for more opportunities to collect it, and organizations that can quickly react to new information will tend to grow faster. Snowflake’s abstract bet is that the companies that produce more bits of data per dollar of revenue will, over time, produce more dollars of revenue, and that collecting an implicit tax on every bit will lead to sustainable growth.

The Product

Snowflake’s current core product is a database engine, supporting data pipelines, business intelligence, and data applications. They also have governance and security services, so customers can control access both internally and externally. The last point is important because it leads to network effects: a Snowflake user can share a subset of data with their customers, while restricting it to just what the customer needs access to. Data marketplaces are a harder business than data products—a “marketplace” is not the ideal forum for selling something whose value is so dependent on the user and on who else buys the data—but Snowflake’s overall ecosystem makes such a product easier to offer.

Keeping all of a company’s data in one place creates a mild intra-company network effect: since it’s easier to query across different datasets, there are more queries to run. And scaling in the background means that it’s easy to start tracking something whether or not it’s useful just yet. The company leaned in to this kind of thinking: initially, they charged a markup on both storage and compute, but switched to breaking even on storage since it’s a cheap complement.² As more data lives on Snowflake, this slowly tilts the equation towards doing more analysis there, too: even if it’s slightly cheaper elsewhere, it may be more expensive to move the data there.
And as many cloud companies have learned over time, a project that’s born on a platform because it’s convenient can turn into a nice annuity if it gets mission-critical before it gets moved somewhere cheaper.

One subtle force that benefits Snowflake is that it’s cheaper to query data on Snowflake with Snowflake than with Databricks (or any other offering, even considering the markup on compute). This resembles a toll on data exiting the platform, which grows in proportion with the amount of data being moved out of the platform.

There’s always a tendency for big vendors to capture dollars from smaller ones—the existence of Teams makes some companies reluctant to keep paying for Zoom and Slack. (Though the experience of using Teams may make them think twice.) Companies that store data, like Snowflake, get a disproportionately strong benefit from this friction reduction. Not only because it’s easier to pay one vendor than two, but because there’s a cost to moving data between vendors or redundantly storing it at both.

There are physical limits to sharing data that are expressed in public cloud pricing. From a C-level executive’s perspective, the data cloud is the data cloud whether your counterparty is in AWS us-east-1 (Virginia) or Google Cloud us-central1 (Iowa), but from an engineering perspective, there’s a pretty big penalty for querying data in another public cloud region. For example, if I need to query an entire 10 TB table that’s stored in a Snowflake account in us-west-2 (Oregon) from my Snowflake account in us-east-1 (Virginia), that’s going to cost $200 ($20 per TB times 10). There’s a coordination problem, especially between organizations, because most customers are trying to co-locate their Snowflake workloads with their other cloud workloads, but also might want to co-locate their Snowflake workloads with other Snowflake customers’.
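The cross-region arithmetic above is simple enough to sketch. The snippet below assumes a flat per-terabyte transfer rate, using the $20 per TB figure from the example; real cloud pricing varies by provider, region pair, and volume tier. The function name and the daily-scan workload are hypothetical.

```python
def transfer_cost_usd(table_size_tb, rate_per_tb_usd=20.0):
    """Cost of pulling a full table's worth of data across regions."""
    if table_size_tb < 0:
        raise ValueError("table size must be non-negative")
    return table_size_tb * rate_per_tb_usd


# The example above: a 10 TB table queried in full from another region.
single_query = transfer_cost_usd(10)

# An assumed workload: repeating that full scan daily for a 30-day month.
monthly = 30 * single_query

print(single_query, monthly)
```

A one-off $200 charge is easy to ignore, but the same scan run on a schedule becomes a recurring line item, which is why the choice of region turns into a coordination problem.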
As Snowflake usage in organizations becomes more decentralized and customers access more datasets on the marketplace, data transfer will become a larger segment of customer spending while slowing down queries, absent any creative technical solutions. Snowflake might subsidize data transfer costs, particularly on data marketplace queries, or purchase their own connection between popular regions.

Another friction reducer for Snowflake is that queries are written in SQL, so the software that interfaces with legacy storage options is fairly portable, and has a wide base of potential users. Snowflake has taken pains to maximize the compatibility of their SQL dialect with other offerings, such as Oracle and MS SQL, evident in redundant keywords or ones that are syntactically supported but non-functional. The idea is that it’s better for some queries to silently fail in mostly harmless ways than to fail with an error, which requires human intervention.

There are two good ways to model one company’s steady-state usage of a product like Snowflake. One option is incremental—running the same analysis on every batch of customer interactions, for example. The other is running backwards-looking processes on all accumulated data, for tasks like training machine learning models. The nice thing about the latter use case, from Snowflake’s perspective, is that a) it’s growing as a share of all data analysis, and b) the compute involved grows as a function of the cumulative data an organization has accumulated. It’s potentially a fifth dimension to their growth.

In June, Snowflake announced native applications hosted on Snowflake that query Snowflake datasets; these could be shared and monetized on the data marketplace, as opposed to sharing only raw data. Making the product more polyglot is useful both as a way to increase developers’ willingness to use it and as a way to directly compete with Databricks, which supports Python, Scala, R, Java, and SQL.
However, these types of programs could have much lower performance than Snowflake’s execution engine, so paying Snowflake a premium to run these programs might not make sense. Snowflake’s execution engine is written in C++, which generally performs much better than JavaScript or Python.

Better support for more frequently-updated tables, or for streaming data, will mean that more data is first stored in Snowflake and is always analyzed there, which further increases usage and makes it harder to churn.

And the data marketplace is a potential source of even more upside. Snowflake doesn’t yet fit Bill Gates’s strict definition of a platform: “A platform is when the economic value of everybody that uses it, exceeds the value of the company that creates it. Then it’s a platform.”³ If Snowflake makes the market for data more liquid, it’s possible that many labor-intensive manual intermediaries will go away, and that consulting firms will arise to answer business questions by browsing the data marketplace, shopping for a few unique datasets, and putting them together to reach a conclusion. It’s also possible that companies that don’t have a data edge, but compete with someone who does, will buy it secondhand—a chain that doesn’t have a customer loyalty program might still be able to acquire aggregate data elsewhere. There’s also room for a marketplace in analysis—maybe instead of rolling your own attribution model to tease out how much email, brand advertising, and search ads drive sales, you’ll be able to buy one, plug in data, and get a decent answer.

The data marketplace could make using Snowflake more of a strategic decision than an engineering one, which would give Snowflake greater pricing power on their core offering and potentially overwhelm engineering concerns with a wholesale migration. This is already the case for some companies with potential to add a listing, but as more listings get added to the marketplace the pressure will increase.
Companies are starting to worry about the vendor lock-in implications of Snowflake owning their data (or, perhaps, of Snowflake owning the data and renting it back to customers), so Snowflake is going to start supporting open table formats. This could mean that if table Z is derived from tables X and Y, all of them could be accessible in Snowflake, but the pipeline that creates Z from X and Y is on Databricks or open source tools. However, Snowflake can apply arbitrary rules on sharing these tables or any view derived from them, which is a trump card they might play. They had a strong anti-lock-in pitch when they were tiny and the cloud platforms they used were relatively huge, but as they’ve grown, that’s become a harder case to make.

The Business

An interesting metaphor Snowflake uses internally is that right now, Snowflake is like a cell phone, but as the data marketplace and application development capabilities expand it will be like the iPhone. Snowflake’s short-term business cycle is:

It would be easy to look at their current profitability and assume that they’re sloppy on costs. Their operating margin was -43% in the last four quarters, and their cumulative loss since inception was $2.3bn. But a look at their CEO’s track record and public writings shows that they’re obsessed with costs, since internal inefficiency slows down their growth model. Since those money-losing deals they land expand so fast, they’d have to spend aggressively just to keep up with 70% per-customer revenue growth, but their approach is reasonable right now. (If they kept marketing and R&D spend at current levels, and never landed another customer, 158% dollar retention—the worst they’ve ever reported—would get them to breakeven by the end of 2024.) Their CEO, Frank Slootman, has written about the question of how to set the spending cadence at a growth company with favorable unit economics:

Importantly, he didn’t write this to justify Snowflake’s strategy: this is from TAPE SUCKS, the story of his time at Data Domain. (I’ve kept the title in all caps both because that’s how it appears on the cover and because Slootman seems to be the kind of person who frequently speaks in all caps.) He continues:

One way to view the long-term upside case is that, in a very abstract sense, Snowflake is Enterprise Google. Not in the sense that it has the same kinds of products or sells them in the same way, but in the sense that it’s accomplishing the same kind of mission and has the same sorts of self-reinforcing loops. Google wants to organize the world’s information, but some information wants to stay hidden, because its value diminishes if everyone has it. Google is an information-aggregating institution whose business model is to auction off attention, and to finely measure and grade that attention to ensure that it’s going to the high bidder. Snowflake is also aggregating information, and is selling compute used to query that information, but it’s also a platform for sharing and selling data. Snowflake wants to make it possible to analyze the world’s information, and to extend that possibility to whoever owns the information in question or can afford it. And while Google has created a truly valuable public good as well as a phenomenal business, it’s likely that the majority of the monetizable data in the world is the property of one big company or another. And as Snowflake makes it easier to realize that value, both by analyzing the data and selling it, they’ll become a big company, too.

Further Reading

Snowflake’s current CEO has the wonderful habit of writing a book about each company he runs. The aforementioned TAPE SUCKS talks through Data Domain (one highlight: it is possible to sell your company to a competitor you’ve trash-talked, especially if some other company is bidding for it), and The Rise of the Data Cloud talks about Snowflake itself.

This piece was coauthored by Max Conradt, a data engineer working in the climate risk analytics space.

A Word From Our Sponsors

Think of the last time you had to import a spreadsheet. Did it work on the first try? Of course not.
Importing data via a CSV can be highly repetitive, time consuming, and difficult to automate because, well, it just never works right. Each customer has their own, slightly different processes for managing information about their business. So each time you onboard a new customer, you’ve got to adapt the data they bring in files to your system. It’s tedious, for you and them, and it’s certainly not fun. Flatfile is the product that takes this complexity away. Our embeddable drag and drop CSV importer gives you the ability to jump seamlessly from closing a deal to a clean dataset in just minutes. Learn more about how Flatfile can help your onboarding process today!

Elsewhere

Microsoft's Antitrust Edge

The Information has a nice profile of Rima Alaily, Microsoft's top antitrust lawyer ($), who has helped the company dodge some of the rhetoric (and deal risk) that's assailed other big tech companies, while still pushing through mergers like Nuance and (probably) Activision. Real 90s kids know this is basically the template to a joke, but it's true: part of the bull case for Microsoft is that it's avoided the same antitrust concerns the rest of Big Tech worries about. And it turns out that when a company makes understanding competitive regulation a key part of how it operates, it doesn't just need to use that tool defensively.

The Free Time Dividend

The American Time Use Survey shows that workers in 2020 spent an average of five hours less in the office, with about half of that free time going to leisure at home and much of the rest split between working from home and sleeping. It will be a long time before we get comprehensive data, but given how quickly GDP normalized this implies that productivity at home was higher than productivity at the office.
The distribution of how in-person work affects productivity is a tricky one: the two strongest use cases are 1) crisis situations where high-bandwidth communication and the ability to interrupt other people so they re-prioritize is valuable, and 2) over long periods, when in-person interactions percolate into new ideas.

Feedback Loops

Stuff I Thought About This Week has a nice variant view on the recent ProPublica story about how landlords are using software that encourages them to raise rents: "The increased use of AI tools/software add-ons will have one tangible impact: a significant increase in the amplitude of feedback loops in the economy." That's true at the scale of individual industries: if everyone uses the same analytical tools, they'll reach similar conclusions, and may chase each other's pricing decisions around. But it also means that industry cycles will be somewhat decoupled from the broader macro cycle if they're more driven by industry-specific forces. Recessions get bad when everything is falling apart at once—when laid-off workers can't switch to new careers because nobody else is hiring, either, and when investors can't move capital from dying industries to growing ones because there isn't much growth. Adding interference at the industry level will reduce the amplitude of swings at the macro level, since short and extreme cycles will mean that even in the worst recession, there's more likely to be at least one industry that's suddenly and rapidly recovering from its own mini-recession that started a bit earlier.

The News Business

Facebook parent Meta is unhappy with Canada's proposed Online News Act, which would require Facebook to pay news publishers for links users post to their stories. In their post, the company threatens to block users from posting news stories entirely rather than pay a tax. It's an interesting comment on how sticky perceptions of companies can be.
Facebook used to host lots of news and news discussion, but has discovered that "talking about current events on Facebook" is, rather than being a feature, a good summary of what drives people to delete the app and never use the service again. So they've tried to deemphasize news over other kinds of content. The users who fled made the site more partisan; if young people leave and middle-aged people stay, the demographic will get more conservative and the news stories that still get shared will reflect this. And that makes Facebook's tilt somewhat self-reinforcing; the business that gets the most news coverage relative to its size is the news business, so the narrative keeps moving forward even as the underlying facts change. Facebook says news-related content is less than 3% of what Canadian users view on the service. Since the company is not exactly unfamiliar with tweaking its ranking algorithm, the remaining news on the site is presumably net beneficial to them, at least as far as their metrics can manage. But probably not if they have to pay for it.

Ending the Traffic Jam

The longstanding traffic jam at the ports of Los Angeles and Long Beach has now basically ended ($, WSJ), partly due to faster turnaround and mostly due to lower demand. Supply chain problems were an early driver of the uptick in inflation that started in spring 2021, though it's hard to get a good read on where prices will settle. Since companies lost money from having insufficient inventory at some points in the pandemic and then from having too much later on, they may respond to lower certainty by aiming for higher margins. One way to look at the long-term disinflationary trend in consumer durables is that it was partly driven by faster inventory turnover; if a given level of sales requires less warehouse space and less shelf space, it makes products cheaper.
If that lower inventory requirement was partly illusory, then even a reset to normal supply chain conditions won't mean a reset to historical prices.

Diff Jobs

Companies in the Diff network are actively seeking talent! If you're interested in exploring growth opportunities at unique companies, get in touch!

And if you're thinking about your next role but not sure when, please feel free to reach out; we're happy to chat so we know what to keep an eye out for. Interested in hiring through the Diff network? Let's talk!

1 Of course, net dollar retention is an average figure that consists of some customers ramping up spending fast, some who have done all the Snowflake deployment they expect to and will just grow based on their internal data production and usage, and some who have died off completely. But net dollar retention is a figure calculated from aggregate revenue, not on a per-customer basis, so if it’s skewed by smaller customers routinely tripling their annual spend, there need to be lots of them to offset a bigger customer growing at a slower rate.

2 They could take this further and actually subsidize storage, but that would attract customers who wanted to arbitrage cheap storage. Since that would only be worthwhile at scale, the few abuses that happened would be big ones. It’s possible that Snowflake, given its knowledge of customer behavior, could still be earning something even with ostensibly zero markup, if they’re compressing data that’s going to be stored for longer periods.

3 Except in the degenerate case where you treat every output that requires some input as participating in a platform. In that case, a steel mill is a “platform” if buildings and cars are worth more than their scrap value. To make it specific, you’d have to restrict to businesses that are built entirely on the product.

4 Snowflake’s markup has expanded over time. A reseller like Snowflake has two ways to think about buying from cloud companies. They can reserve compute capacity to get a discount, although this capacity must be used within a fixed period. They can also negotiate a volume discount for compute. This is an interesting dynamic because cloud providers know how much Snowflake is charging for this capacity whereas Snowflake probably does not know the cost basis of the cloud providers. So they’re leaking information to competitors when they try to control what will eventually be their biggest cost. Fortunately for them, the bigger the discount they can justify—the more usage they expect to get—the stronger their claim that they’re winning the market. They do disclose in their 10-K that they’re making volume commitments, and that their economics will be much worse if they don’t hit those numbers and still have to pay. Snowflake will also be constrained by the availability of computing resources on the cloud providers. Popular instance types in popular regions / availability zones are often not available. Snowflake could get around this by reserving capacity, but as noted above this requires them to forecast demand and then forfeit unused capacity. It’s very likely that Snowflake uses a single instance type and will be particularly sensitive to fluctuations in its availability.
