Astral Codex Ten - Who Predicted 2022?

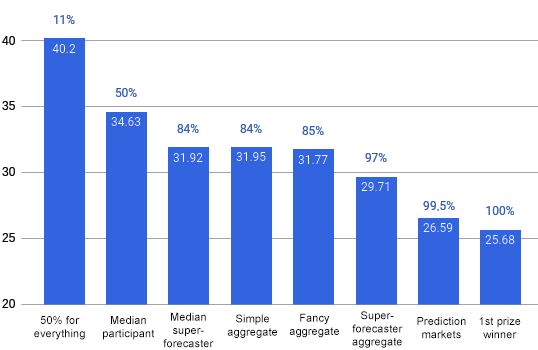

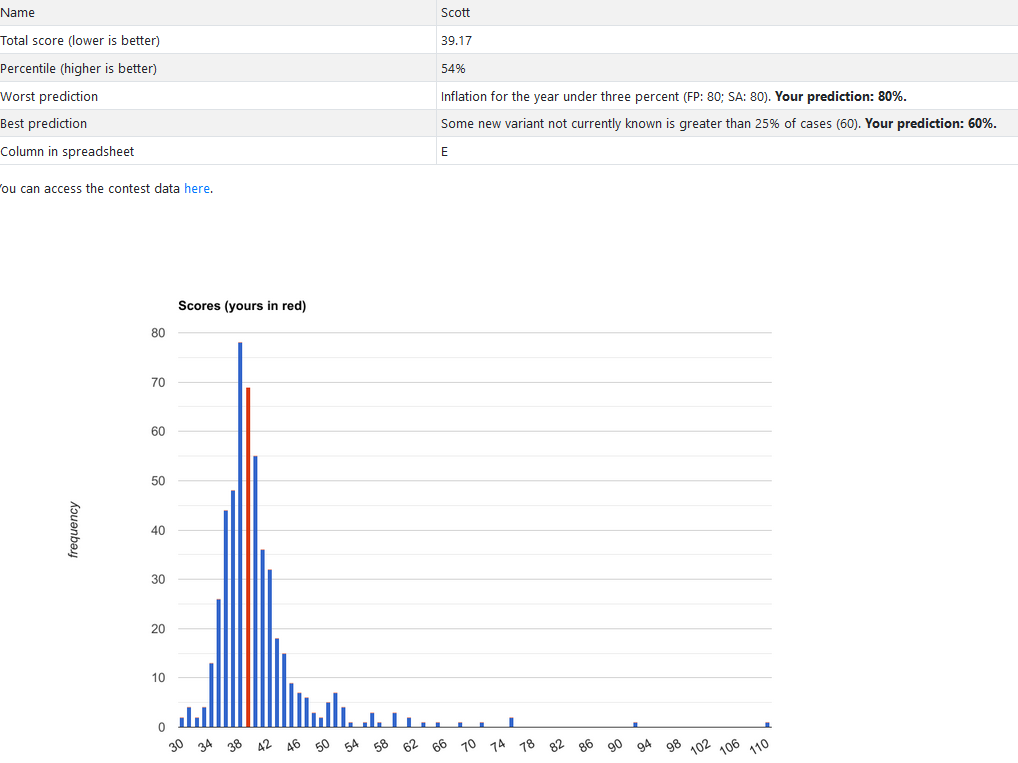

Last year saw surging inflation, a Russian invasion of Ukraine, and a surprise victory for Democrats in the US Senate. Pundits, politicians, and economists were caught flat-footed by these developments. Did anyone get them right? In a very technical sense, the single person who predicted 2022 most accurately was a 20-something data scientist at Amazon’s forecasting division. I know this because last January, along with amateur statisticians Sam Marks and Eric Neyman, I solicited predictions from 508 people. This wasn’t a very creative or free-form exercise - contest participants assigned percentage chances to 71 yes-or-no questions, like “Will Russia invade Ukraine?” or “Will the Dow end the year above 35000?” The whole thing was a bit hokey and constrained - Nassim Taleb wouldn’t be amused - but it had the great advantage of allowing objective scoring. Our goal wasn’t just to identify good predictors. It was to replicate previous findings about the nature of prediction. Are some people really “superforecasters” who do better than everyone else? Is there a “wisdom of crowds”? Does the Efficient Markets Hypothesis mean that prediction markets should beat individuals? Armed with 508 people’s predictions, can we do math to them until we know more about the future (probabilistically, of course) than any ordinary mortal? After 2022 ended, Sam and Eric used a technique called log-loss scoring to grade everyone’s probability estimates. Lower scores are better. The details are hard to explain, but for our contest, guessing 50% for everything would give a score of 40.2¹, and complete omniscience would give a perfect score of 0. Here’s how the contest went: As mentioned above: guessing 50% corresponds to a score of 40.2. This would have put you in the eleventh percentile (yes, 11% of participants did worse than chance). Philip Tetlock and his team have identified “superforecasters” - people who seem to do surprisingly well at prediction tasks, again and again. Some of Tetlock’s picks kindly agreed to participate in this contest and let me test them. The average superforecaster outscored 84% of other participants. The “wisdom of crowds” hypothesis says that averaging many ordinary people’s predictions produces a “smoothed-out” prediction at least as good as experts. That proved true here. An aggregate created by averaging all 508 participants’ guesses scored at the 84th percentile, equaling superforecaster performance. There are fancy ways to adjust people’s predictions before aggregating them that outperformed simple averaging in the previous experiments. Eric tried one of these methods, and it scored at the 85th percentile, barely better than the simple average. Crowds can beat smart people, but crowds of smart people do best of all. The aggregate of the 12 participating superforecasters scored at the 97th percentile. Prediction markets did extraordinarily well during this competition, scoring at the 99.5th percentile - ie they beat 506 of the 508 participants, plus all other forms of aggregation. But this is an unfair comparison: our participants were only allowed to spend five minutes max researching each question, but we couldn’t control prediction market participants; they spent however long they wanted. That means prediction markets’ victory doesn’t necessarily mean they’re better than other aggregation methods - it might just mean that people who can do lots of research beat people who do less research.² Next year's contest will have some participants who do more research, and hopefully provide a fairer test. The single best forecaster of our 508 participants got a score of 25.68. That doesn’t necessarily mean he’s smarter than aggregates and prediction markets. There were 508 entries, ie 508 lottery tickets to outperform the markets by coincidence. Most likely he won by a combination of skill and luck. Still, this is an outstanding performance, and must have taken extraordinary skill, regardless of how much luck was involved. And The Winners Are . . .I planned to recognize the top five of these 508 entries. After I sent out prize announcement emails, a participant pointed out flaws in our resolution criteria³. We decided to give prizes to people who won under either resolution method. 1st and 2nd place didn't change, but 3rd, 4th, and 5th did - so seven people placed in the top five spots. They are:

Here are some other interesting people who did exceptionally well and scored near the top of the 508 entries:

And thanks to our question-writers:

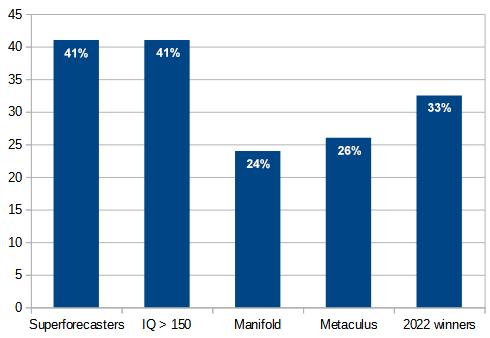

Along with looking at individuals, we also tried to figure out which groups did the best - whether there were any demographic characteristics that reliably predicted good forecasting. Not really. Liberals didn’t outperform conservatives, old people didn’t outperform young people, nothing like that⁶. Users of the website Less Wrong, which tries to teach a prediction-focused concept of rationality, didn’t significantly outperform others, which disappointed me. But users with self-reported IQ > 150 may have outperformed everyone else. This result needs many caveats: it was sensitive to cutoff points, self-reported IQs are unreliable, and sample sizes were low. Still, these people did almost as well as superforecasters, an impressive performance.⁷ Towards 2023: Pushing Back The VeilGiven what we’ve learned, can we predict 2023? Sam, Eric and I are running a repeat version of the contest for 2023. We have about 3500 entries so far, answering questions like “Will there be a lasting cease-fire in Ukraine?” I’ve peeked at the submissions, and - spoiler - everyone disagrees. This is the human condition. The future is murky. Nobody knows for sure what will happen. Get 3500 people, and there will be 3500 views. What’s new is that we have methods that can, with high levels of certainty, mediate these disagreements. Last year, superforecaster aggregation beat 97% of individual predictors. This finding has been replicated in other experiments. Probably it will hold true this year too. By running a simple algorithm on the data I have, I can beat 3400 of these 3500 participants. Or maybe the prediction market results will hold. One market (Manifold) and another market-like site (Metaculus) are joining the contest this year. If they do as well as last year, they’ll beat all but 15 of the 3500 entries. If things go very well, maybe we’ll discover new ways of aggregating their results that can beat every individual predictor, at least most of the time. If this seems boring and technical to you, I maintain it should instead sound mystical, maybe even blasphemous. It is not given for humans to see the future. But it’s not impossible to see the future either - for example, I confidently predict that the sun will rise tomorrow. This project is about discovering the limits of that possibility. A 40% vs. 41% chance of a cease-fire in Ukraine next year might feel like a minor difference. But this is the sort of indeterminate portentious future event it feels impossible to know anything about at all, and here we are slightly shaving off layers of uncertainty, one by one. A person who estimates a 99.99999% chance of a cease-fire in Ukraine next year is clearly more wrong than someone who says a 41% chance. Maybe it’s possible to say with confidence that a 41% chance to be better than a 40% chance, and for us to discover this, and to hand it to policy-makers charting plans that rely on knowing what will happen. None of these aggregation methods are the most accurate source of forecasts on a Ukraine cease-fire - that would be Putin and Zelenskyy, who could have secret plans they haven’t announced. These methods might not even be the most accurate public source - maybe that’s someone with an International Relations PhD who’s studied the region their whole life. But we talk about fuel efficiency for cars. This is about knowledge efficiency. Given certain resources - the information about Ukraine available to the general public, a few minutes of reflection - how much accuracy can we wring out of those? There must be some limit. But we haven’t found it yet. And every new algorithm pushes back the veil a little bit further, very slightly expanding what mortals may know about what is to come. If you entered the contest, you can check your score using this website. Special thanks to Sam Marks and Eric Neyman for running the contest. Sam is nominally a math grad student at Harvard, but actually spends most of his time thinking about AI alignment and developing the educational programming for the Harvard and MIT AI safety student groups. Eric is nominally a grad student at Columbia studying algorithms for elicitation and aggregation of knowledge, but he's on a leave of absence to do research in AI alignment. Eric blogs at Unexpected Values, and you can read some of his more formal work on prediction aggregation here. You can access the anonymized contest dataset here. 1 This number and the following graph include only the 61 out of 71 questions that we had prediction market estimates for, in order to keep the prediction market results commensurable with the other results. The additional ten questions were added back in before awarding prizes. 2 But Sam and Eric object that prediction markets were also handicapped this year - most of the markets they took their numbers from were very small Manifold markets with only a single-digit number of participants, just a few months after Manifold started existing at all. They say the most likely reason prediction markets did so well was because only the most knowledgeable people will bet on a certain question, whereas our contest encouraged everyone to predict each question (technically you could opt out, but most people didn’t). Plausibly this coming year, when we have multiple big prediction markets for each question, the markets will totally blow away all other participants. 3 Matt Yglesias scored his question “Will Nancy Pelosi announce plans to retire?” as TRUE, but technically she only announced that she would retire as Democratic leader, not as a Congresswoman, so the question is false as written. Vox Future Perfect scored their question “Will the Biden administration set a social cost of carbon at > $100” as PARTIALLY RIGHT, because they announced plans to maybe do this, but they have not done it yet and the question is false as written. We originally resolved “Will a Biden administration Cabinet-level official resign?” as FALSE, but Eric Lander, a science advisor who resigned, was technically Cabinet-level and the question as written is true. These changes didn’t affect headline results much, but they shifted some people up or down a few places, and shifted a few people with very high confidence on these questions up or down a few dozen places. 4 I asked the winners for their forecasting advice. Ryan wrote:

Skerry wrote:

Johan wrote:

Haakon wrote:

5 But also, I was the first person to enter, everyone else got to see my results, and anyone who chose not to answer a specific question defaulted to my results. This gave other people an advantage over me. This coming year we’ve changed the rules in a way that gives me less of a disadvantage, so we’ll see how I do. 6 Actually, if you analyze raw scores, liberals did outperform conservatives, and old people did outperform young people. But Eric made a strong argument that raw scores were too skewed and we should be comparing percentile ranks instead. That is, some people did extremely badly, so their raw scores could be extreme outliers and unfairly skew the correlations, but nobody can have a percentile rank lower than100th. When you analyze percentile ranks, these effects disappeared. 7 If you analyze raw scores, IQ correlates with score pretty well. But when you analyze percentile ranks, you need to group people into <150 and >150 to see any effect. You're currently a free subscriber to Astral Codex Ten. For the full experience, upgrade your subscription. |

Older messages

Open Thread 260

Sunday, January 22, 2023

...

ACX Survey Results 2022

Friday, January 20, 2023

...

Highlights From The Comments On The Media Very Rarely Lying

Friday, January 20, 2023

...

Conspiracies of Cognition, Conspiracies Of Emotion

Friday, January 20, 2023

...

2023 Subscription Drive + Free Unlocked Posts

Friday, January 20, 2023

...

You Might Also Like

Rocket’s $1.75B deal to buy Redfin amps up competition with Zillow

Monday, March 10, 2025

GeekWire Awards: Vote for Next Tech Titan | Amperity names board chair ADVERTISEMENT GeekWire SPONSOR MESSAGE: A limited number of table sponsorships are available at the 2025 GeekWire Awards: Secure

🤑 Money laundering for all (who can afford it)

Monday, March 10, 2025

Scammers and tax evaders get big gifts from GOP initiatives on crypto, corporate transparency, and IRS enforcement. Forward this email to others so they can sign up 🔥 Today's Lever story: A bill

☕ Whiplash

Monday, March 10, 2025

Amid tariff uncertainty, advertisers are expecting a slowdown. March 10, 2025 View Online | Sign Up Marketing Brew Presented By StackAdapt It's Monday. The business of sports is booming! Join top

☕ Splitting hairs

Monday, March 10, 2025

Beauty brand loyalty online. March 10, 2025 View Online | Sign Up Retail Brew Presented By Bloomreach Let's start the week with some news for fans of plant milk. A new oat milk, Milkadamia Flat

Bank Beliefs

Monday, March 10, 2025

Writing of lasting value Bank Beliefs By Caroline Crampton • 10 Mar 2025 View in browser View in browser Two Americas, A Bank Branch, $50000 Cash Patrick McKenzie | Bits About Money | 5th March 2025

Dismantling the Department of Education.

Monday, March 10, 2025

Plus, can someone pardoned of a crime plead the Fifth? Dismantling the Department of Education. Plus, can someone pardoned of a crime plead the Fifth? By Isaac Saul • 10 Mar 2025 View in browser View

Vote now for the winners of the Inbox Awards!

Monday, March 10, 2025

We've picked 18 finalists. Now you choose the winners. ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

⚡️ ‘The Electric State’ Is Better Than You Think

Monday, March 10, 2025

Plus: The outspoken rebel of couch co-op games is at it again. Inverse Daily Ready Player One meets the MCU in this Russo Brothers Netflix saga. Netflix Review Netflix's Risky New Sci-Fi Movie Is

Courts order Trump to pay USAID − will he listen?

Monday, March 10, 2025

+ a nation of homebodies

Redfin to be acquired by Rocket Companies in $1.75B deal

Monday, March 10, 2025

Breaking News from GeekWire GeekWire.com | View in browser Rocket Companies agreed to acquire Seattle-based Redfin in a $1.75 billion deal that will bring together the nation's largest mortgage