A Taxonomy to Understand Federated Learning

Classifying different types of federated learning methods, Meta AI research about highly scalable and asynchronous federated learning pipelines, and Microsoft's FLUTE framework.

💡 ML Concept of the Day: A Taxonomy to Understand Federated Learning

One of the most common mistakes around federated learning is to think about it as a single type of architecture. Even though the principles of federated learning are distinctive enough to merit their own space within deep learning, different architectures implement those principles in diverse ways. While there is no consistent taxonomy to study federated learning, some categorization schemes have proven to be quite useful. The following taxonomy can help in understanding the different variations of federated learning architectures:

By ML Construct: This categorization scheme divides federated learning architectures based on the ML artifacts that are being distributed.

By Federation Scheme: This criterion is based on the architecture of the federation.

By Data Partition: This criterion focuses on the feature and data partition models used in the federation.

In the next few editions of this series, we will dive into each of these categories.

🔎 ML Research You Should Know About: Faster and More Scalable Federated Learning

In Federated Learning with Buffered Asynchronous Aggregation, Meta AI proposes an asynchronous method for scaling the training of federated learning models.

The objective: Meta AI's method addresses some of the fundamental scalability challenges of federated learning models.

Why is it so important? Scaling federated learning across many devices is a monumental challenge for most organizations. Meta AI's technique showcases the advantages of asynchronous methods for scaling federated learning models.

Diving Deeper: Most of the mainstream methods for training federated learning models rely on synchronous processes. In the synchronous architecture, nodes download the current model and cooperate to send updates to a centralized server. While synchronous communication is simpler and has strong privacy guarantees, it has major scalability limitations. Effectively, synchronous federated learning is only as fast as the slowest device or node in the network. This is known as the straggler problem.

In their paper, Meta AI provides an asynchronous method that addresses this limitation of synchronous federated learning techniques. The core idea behind Meta AI's method is relatively simple. The architecture uses a larger number of nodes than typically needed and regularly drops the slowest ones in each training round. For instance, a training round might start with 1500 nodes and end with 1200 because the 300 slowest nodes were removed from the federation. When a node is available for training, it downloads the model, computes the update and sends it to the server, just like in a synchronous scenario. However, the server simply accumulates the incoming updates until it has met its prerequisites, such as a minimum number of buffered updates, and only then distributes the aggregated update to the nodes.
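To make the buffering idea concrete, here is a minimal sketch of a server that only takes a step once enough client updates have arrived. It is a toy illustration under stated assumptions, not Meta AI's implementation: the class name `BufferedAsyncServer`, the `buffer_size` prerequisite, the plain averaging of deltas and the `1 / sqrt(1 + staleness)` down-weighting of old updates are all choices made for this example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BufferedAsyncServer:
    """Toy server that applies client updates only once its buffer is full."""

    weights: List[float]
    buffer_size: int       # hypothetical prerequisite: updates needed per server step
    server_lr: float = 1.0
    version: int = 0       # incremented on every server step
    _buffer: List[List[float]] = field(default_factory=list)

    def receive(self, delta: List[float], client_version: int) -> bool:
        """Buffer one client delta; return True if a server step was taken."""
        # Staleness = number of server steps since the client downloaded the model.
        staleness = self.version - client_version
        # Assumption for this sketch: older updates count for less.
        scale = 1.0 / (1.0 + staleness) ** 0.5
        self._buffer.append([scale * d for d in delta])

        if len(self._buffer) < self.buffer_size:
            return False  # keep waiting; no client is blocked by this

        # Average the buffered deltas coordinate by coordinate and apply them.
        n = len(self._buffer)
        avg = [sum(col) / n for col in zip(*self._buffer)]
        self.weights = [w + self.server_lr * a for w, a in zip(self.weights, avg)]
        self._buffer.clear()
        self.version += 1
        return True


server = BufferedAsyncServer(weights=[0.0, 0.0], buffer_size=2)
first = server.receive([1.0, 1.0], client_version=0)    # buffered only
second = server.receive([3.0, -1.0], client_version=0)  # buffer full, server steps
print(first, second, server.weights, server.version)
```

The point of the design is that no client ever waits for another one: fast nodes fill the buffer, the server moves on, and a late update is still accepted but scaled down according to how many server steps it missed.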
The slowest nodes might still send their updates to the server, but those updates might be based on stale information. Meta AI tested this technique with federated learning architectures consisting of 100 million Android devices. On average, the training processes were about 5x faster than synchronous alternatives.

🤖 ML Technology to Follow: FLUTE is a Platform for High Performance Federated Learning Simulations

Why Should I Know About This: FLUTE is one of the first frameworks that allows data scientists to run multi-agent federated learning scenarios.

What is it: Federated Learning Utilities and Tools for Experimentation (FLUTE) was designed to enable researchers to rapidly prototype offline simulations of federated learning scenarios without incurring high computational costs. The framework includes a core series of capabilities that enable that goal:

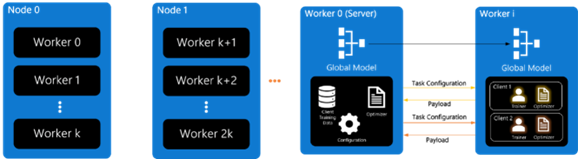

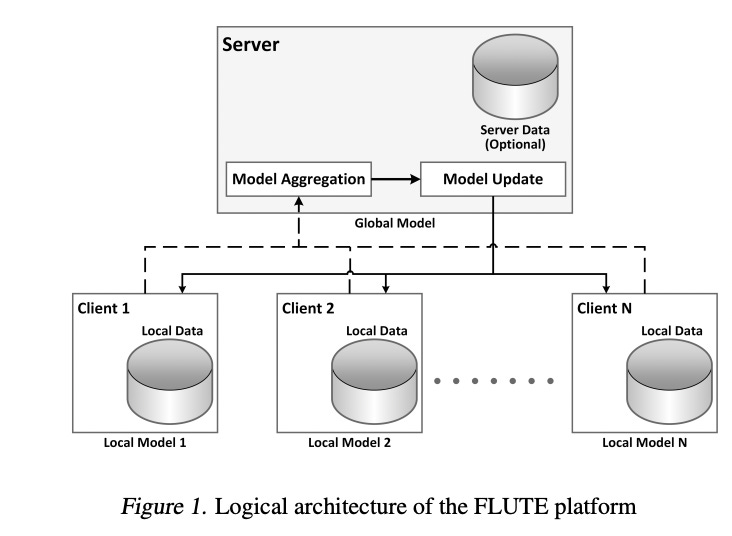

A typical FLUTE architecture consists of a number of nodes, which are physical or virtual machines that each execute a number of workers. One of the nodes acts as an orchestrator, distributing the model and tasks to the different workers. Each worker processes its tasks sequentially, calculates the model delta and sends the resulting gradients back to the orchestrator, which federates them into the centralized model. Extrapolating this workflow to a large number of clients, we get something like the following architecture. In this architecture, a federated learning workflow is based on the following steps (from the research paper):

The initial version of FLUTE is based on PyTorch, which makes it interoperable with a large number of deep learning architectures. The communication protocols are implemented using OpenMPI, which provides high levels of performance and scalability.

How Can I Use it: FLUTE is open source and available at https://github.com/microsoft/msrflute.
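The orchestrator and worker roles described earlier can be imitated in a few lines of plain Python. The sketch below does not use FLUTE's API: `local_delta`, `worker` and `orchestrate` are hypothetical names, the model is a single slope `w` fitted to `y = w * x`, tasks are assigned round-robin, and deltas are combined by plain averaging. All of these are assumptions made to keep the example small.

```python
from typing import List, Tuple

Client = List[Tuple[float, float]]  # (x, y) samples held by one simulated client


def local_delta(w: float, data: Client, lr: float = 0.1, steps: int = 5) -> float:
    """Run a few gradient steps on y ~ w * x and return the change in w."""
    local_w = w
    for _ in range(steps):
        # Gradient of the mean squared error with respect to the slope.
        grad = sum(2 * (local_w * x - y) * x for x, y in data) / len(data)
        local_w -= lr * grad
    return local_w - w


def worker(w: float, tasks: List[Client]) -> List[float]:
    """A worker processes its assigned clients one after another."""
    return [local_delta(w, data) for data in tasks]


def orchestrate(w: float, clients: List[Client], n_workers: int, rounds: int) -> float:
    """Distribute the model and tasks, collect deltas, fold them into the model."""
    for _ in range(rounds):
        # Round-robin assignment of client tasks to workers (an assumption).
        assignments = [clients[i::n_workers] for i in range(n_workers)]
        deltas = [d for tasks in assignments for d in worker(w, tasks)]
        w += sum(deltas) / len(deltas)  # plain averaging of the deltas
    return w


# Three simulated clients whose data all follow y = 2 * x.
clients = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)], [(0.5, 1.0), (1.5, 3.0)]]
w = orchestrate(0.0, clients, n_workers=2, rounds=20)
print(round(w, 3))  # approaches the true slope 2.0
```

In a real simulation the workers would run in separate processes and exchange tensors over a communication backend, but the control flow of distributing the model, processing tasks sequentially and aggregating deltas stays the same.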
