Astral Codex Ten - MR Tries The Safe Uncertainty Fallacy

The Safe Uncertainty Fallacy goes:

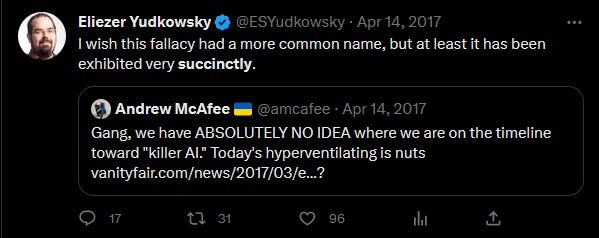

You’re not missing anything. It’s not supposed to make sense; that’s why it’s a fallacy.

For years, people used the Safe Uncertainty Fallacy on AI timelines:

Since 2017, AI has moved faster than most people expected; GPT-4 sort of qualifies as an AGI, the kind of AI most people were saying was decades away. When you have ABSOLUTELY NO IDEA when something will happen, sometimes the answer turns out to be “soon”.

Now Tyler Cowen of Marginal Revolution tries his hand at this argument. We have absolutely no idea how AI will go, it’s radically uncertain:

Therefore, it’ll be fine:

Look. It may well be fine. I said before my chance of existential risk from AI is 33%; that means I think there’s a 67% chance it won’t happen. In most futures, we get through okay, and Tyler gently ribs me for being silly.

Don’t let him. Even if AI is the best thing that ever happens and never does anything wrong and from this point forward never even shows racial bias or hallucinates another citation ever again, I will stick to my position that the Safe Uncertainty Fallacy is a bad argument.

Normally this would be the point where I try to steelman Tyler and explain in more detail why the strongest version of his case is wrong. But I’m having trouble figuring out what the strong version is. Here are three possibilities:

1) The base rate for things killing humanity is very low, so we would need a strong affirmative argument to shift our estimate away from that base rate. Since there’s so much uncertainty, we don’t have strong affirmative arguments, and we should stick with our base rate of “very low”.

Suppose astronomers spotted a 100-mile-long alien starship approaching Earth. Surely this counts as a radically uncertain situation if anything does; we have absolutely no idea what could happen. Therefore - the alien starship definitely won’t kill us and it’s not worth worrying about? Seems wrong.

What’s the base rate for alien starships approaching Earth killing humanity? We don’t have a base rate, because we’ve never been in this situation before. What is the base rate for developing above-human-level AI killing humanity? We don’t . . . you get the picture.

You can try to fish for something sort of like a base rate: “There have been a hundred major inventions since agriculture, and none of them killed humanity, so the base rate for major inventions killing everyone is about 0%”.

But I can counterargue: “There have been about a dozen times a sapient species has created a more intelligent successor species: australopithecus → homo habilis, homo habilis → homo erectus, etc - and in each case, the successor species has wiped out its predecessor. So the base rate for more intelligent successor species killing everyone is about 100%”.

The Less Wrongers call this game “reference class tennis”, and insist that the only winning move is not to play. Thinking about this question in terms of base rates is just as hard as thinking of it any other way, and would require arguments for why one base rate is better than another. Tyler hasn’t made any.

2) There are so many different possibilities - let’s say 100! - and dying is only one of them, so there’s only a 1% chance that we’ll die.

This is sort of how I interpret:

Alien time again! Here are some possible ways the hundred-mile-long starship situation could end:

Therefore, there’s no need to worry about the giant alien ship. The chance that it kills us is only 1%! If I’m even cleverer at generating scenarios, I can get it down below 0.5%!

You can’t reason this way in real life, sorry. It relies on a fake assumption that you’ve parceled out scenarios of equal likelihood and equal specificity (does “the aliens have founded a religion that requires them to ritually give gingerbread cookies to one civilization every 555 galactic years, and so now they’re giving them to us” count as “one scenario” in the same way “the aliens want to study us” counts as “one scenario”?).

3) If you can’t prove that some scenario is true, you have to assume the chance is 0; that’s the rule.

No it isn’t! I’ve tried to make this argument again and again, for example in The Phrase No Evidence Is A Red Flag For Bad Science Communication. The way it worked there was - someone would worry that the new Wuhan coronavirus could spread from human to human. Doctors would look through the literature, find nobody had done a study on this topic, and say “Relax! There is no evidence that the coronavirus can spread between humans! If you think it can, you’re a science denier! Go back to middle school and learn that you need evidence to support hypotheses!”

If you asked the follow-up question “Is there any evidence that the coronavirus can’t spread between humans?”, they would say you don’t need evidence, that’s the null hypothesis. Then they would shout down all attempts at quarantine or safety procedures, because “trust the science”. Then it would turn out the coronavirus could spread between humans just fine, and they would always look so betrayed. How could they have known? There was no evidence.

If you can’t prove something either way, you need to take a best guess. Usually you’ll use base rates. If there’s no evidence a drug cures cancer, I suspect it doesn’t, because most things don’t cure cancer.
If there’s no evidence an alien starship is going to kill humanity, I’m not sure which base rate to use, but I’m not going to immediately jump to “zero percent chance, come back when you have proof”.

In order to generate a belief, you have to do epistemic work. I’ve thought about this question a lot and predict a 33% chance AI will cause human extinction; other people have different numbers. What’s Tyler’s? All he’ll say is that it’s only a “distant possibility”. Does that mean 33%? Does it mean 5-10% (as Katja’s survey suggests the median AI researcher thinks)? Does it mean 1%? Or does Tyler not have a particular percent in mind, because he wants to launder his bad argument through a phrase that sort of sounds like it means “it’s not zero, you can’t accuse me of arrogantly dismissing this future in particular” but also sort of means “don’t worry about it”, without having to do the hard work of checking whether any particular number fills both criteria at once?

If you have total uncertainty about a statement (“are bloxors greeblic?”), you should assign it a probability of 50%. If you have any other estimate, you can’t claim you’re just working off how radically uncertain it is. You need to present a specific case. I look forward to reading Tyler’s, sometime in the future.

He ends by saying:

This is, of course, nonsense. We designed our society for excellence at strangling innovation. Now we’ve encountered a problem that can only be solved by a plucky coalition of obstructionists, overactive regulators, anti-tech zealots, socialists, and people who hate everything new on general principle. It’s like one of those movies where Shaq stumbles into a situation where you can only save the world by playing basketball. Denying 21st century American society the chance to fulfill its telos would be more than an existential risk - it would be a travesty.