Hello and thank you for tuning in to Issue #490.

Once a week we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

If you find this useful, please consider becoming a paid subscriber here:

https://datascienceweekly.substack.com/subscribe

If you don’t find this useful, unsubscribe here.

Hope you enjoy it!

:)

And now, let's dive into some interesting links from this week:

Building LLM applications for production

This post consists of three parts: a) Part 1 discusses the key challenges of productionizing LLM applications and the solutions that I’ve seen, b) Part 2 discusses how to compose multiple tasks with control flows (e.g. if statement, for loop) and incorporate tools (e.g. SQL executor, bash, web browsers, third-party APIs) for more complex and powerful applications, c) Part 3 covers some of the promising use cases that I’ve seen companies building on top of LLMs and how to construct them from smaller tasks...

R Without Statistics, a forthcoming book (draft)

R Without Statistics will show ways that R can be used beyond complex statistical analysis. Readers will learn about a range of uses for R, many of which they have likely never even considered. Each chapter will, using a consistent format, cover one novel way of using R…

Water Data Science in 2021

It is an exciting time to be a data science practitioner in environmental science. In the last five years, we’ve seen massive data growth, modeling improvements, new, more inclusive definitions of “impact” in science, and new jobs and duties. The title of “data scientist” has even been formally added as a job role by the U.S. federal government and there are all kinds of data science needs spelled out in the new USGS science plan. As 2021 progresses, I felt compelled to write up a few of the activities we are focusing on right now, as well as share some ideas we are exploring for the future…

Track every customer interaction in real-time and gain a deep understanding of your customers’ behavior

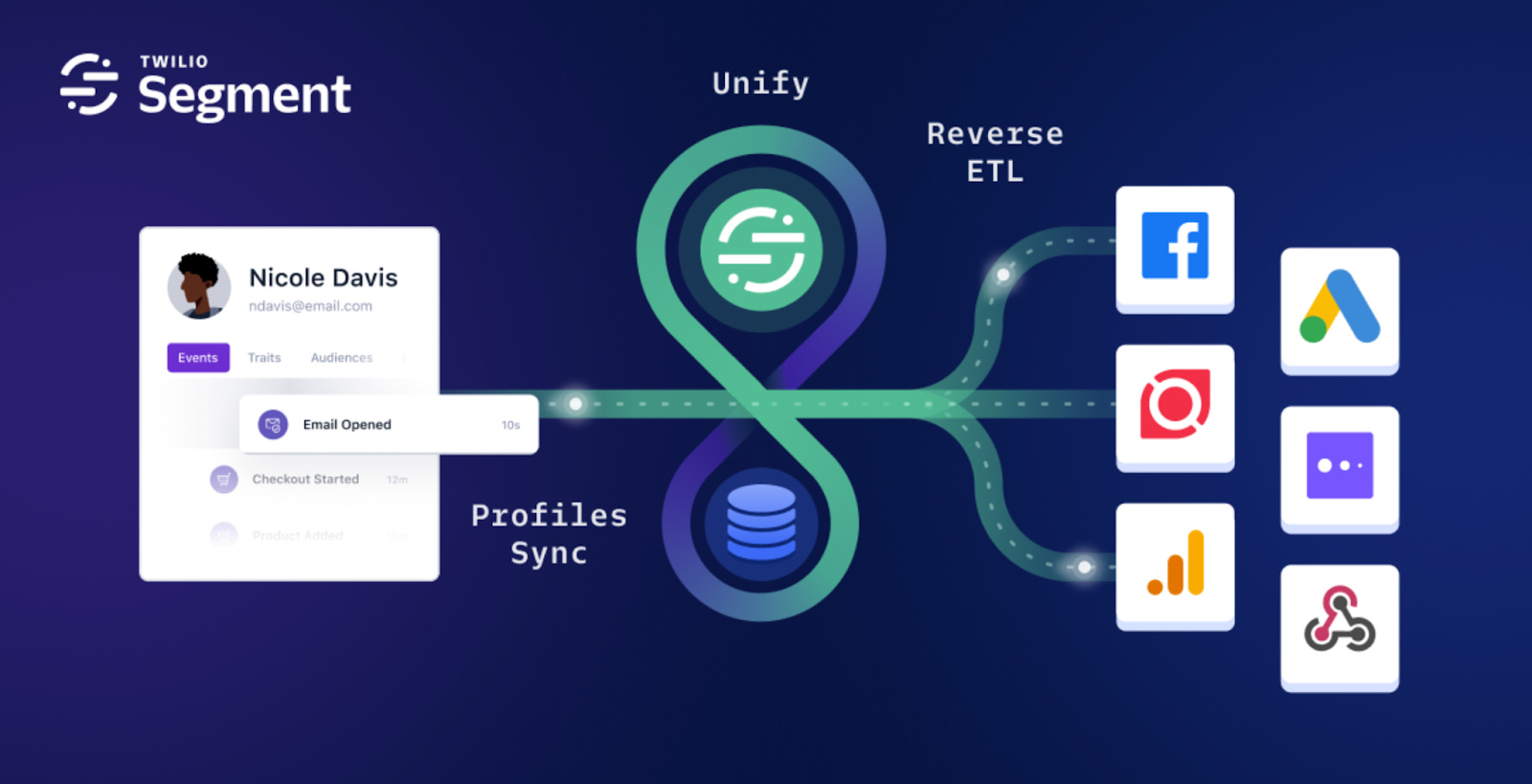

Segment Unify allows you to unite online and offline customer data in real-time across every platform and channel. Use Segment Profiles Sync to send identity resolved customer profiles to your data warehouse, where they can be used for advanced analytics and enhanced with valuable data-at-rest. Then use Segment Reverse ETL to immediately activate your ‘golden’ profiles across your CX tools of choice.

Want to sponsor the newsletter? Email us for details --> team@datascienceweekly.org

Want to work on Generative AI?! [Twitter Thread]

So you want to work on Generative AI! Lots of amazing teams are hiring, but there's a lot of hype and noise right now, and it can be tough to find the good ones. 🚩Here are a few red/yellow flags to look for that indicate that a company doesn't know what they're talking about…

SQL Is All You Need

I strongly believe that our lives would be way easier if SQL was everything (or almost) we needed when it comes to data. In this article, I want to play with the idea of building a machine learning algorithm by just using SQL and ClickHouse. Hence the title, which is a clear reference to the Attention Is All You Need paper…

Build Reliable Machine Learning Pipelines with Continuous Integration

After spending months fine-tuning a model, you discover one with greater accuracy than the original. Excited by your breakthrough, you create a pull request to merge your model into the main branch…Unfortunately, because of the numerous changes, your team takes over a week to evaluate and analyze them, which ultimately impedes project progress. Furthermore, after deploying the model, you identify unexpected behaviors resulting from code errors, causing the company to lose money…In retrospect, automating code and model testing after submitting a pull request would have prevented these problems and saved both time and money…

Introducing `askgpt`: a chat interface that helps you to learn R!

When I talked to my colleagues Mariken and Kasper the other day about how to make teaching R more engaging and how to help students overcome their problems, it is no big surprise that the conversation eventually found its way to the large language model GPT-3.5 by OpenAI and the chat interface ChatGPT…So I got to work implementing a few of the functionalities I wish I had available when I first started with R…The resulting package was just released on CRAN and I wanted to write this post to highlight a few of the ways you can use it to make learning or teaching easier…

Time-Series Forecasting: Deep Learning vs Statistics — Who Wins?

This article aims to clear the confusion and provide an unbiased view, using reliable data and sources from both academia and industry. Specifically, we will cover: a) The pros and cons of Deep Learning and Statistical Models, b) When to use Statistical models and when Deep Learning, c) How to approach a forecasting case, and d) How to save time and money by selecting the best model for your case and dataset...Let’s dive in...

pyobsplot: Observable Plot in Jupyter notebooks and Quarto documents

pyobsplot lets you use Observable Plot to create charts in Jupyter notebooks, VSCode notebooks, Google Colab and Quarto documents. Plots are created from Python code with a syntax as close as possible to the JavaScript one…

Equality of Odds: Measuring and Mitigating Bias in Machine Learning

In this article, we will review a well-known fairness criterion, called 'Equalized Odds' (EO). EO aims to equalize the error a model makes when predicting categorical outcomes for different groups, here: [circle] and [triangle]. EO takes the merit different groups of people have into account by considering the underlying ground truth distribution of the labels. This ensures the errors across outcomes and groups are similar, i.e. fair…
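To make the criterion concrete, here is a minimal sketch of our own (not code from the linked article). It assumes the simplest case, binary labels and predictions, and measures how far a model is from Equalized Odds by comparing true-positive and false-positive rates per group. The function names `rates_by_group` and `equalized_odds_gap` and the toy data are hypothetical, chosen only for illustration.

```python
def rates_by_group(y_true, y_pred, groups):
    """Return {group: (true_positive_rate, false_positive_rate)} for binary labels."""
    counts = {}
    for yt, yp, g in zip(y_true, y_pred, groups):
        c = counts.setdefault(g, {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
        if yt == 1:
            c["tp" if yp == 1 else "fn"] += 1
        else:
            c["fp" if yp == 1 else "tn"] += 1

    rates = {}
    for g, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        # A rate is undefined (NaN) if a group has no examples of that class.
        tpr = c["tp"] / positives if positives else float("nan")
        fpr = c["fp"] / negatives if negatives else float("nan")
        rates[g] = (tpr, fpr)
    return rates


def equalized_odds_gap(rates):
    """Largest between-group difference in TPR or FPR; 0.0 means the rates match."""
    tprs = [tpr for tpr, _ in rates.values()]
    fprs = [fpr for _, fpr in rates.values()]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


# Toy data (made up): four people in each of the two groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
groups = ["circle"] * 4 + ["triangle"] * 4

rates = rates_by_group(y_true, y_pred, groups)
gap = equalized_odds_gap(rates)
print(rates, gap)
```

A gap near zero means both groups see similar error rates for each true outcome, which is the condition the criterion asks for. Taking the maximum of the two differences is one common way to summarize the gap, not the only one.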

Practical considerations for active machine learning in drug discovery

This review recapitulates key findings from previous active learning studies to highlight the challenges and opportunities of applying adaptive machine learning to drug discovery. Specifically, considerations regarding implementation, infrastructural integration, and expected benefits are discussed. By focusing on these practical aspects of active learning, this review aims at providing insights for scientists planning to implement active learning workflows in their discovery pipelines…

An Introduction to a Powerful Optimization Technique: Simulated Annealing

Simulated annealing is an optimization technique that tries to find the global optimum for a mathematical optimization problem. It is a great technique and you can apply it to a wide range of problems. In this post we’ll dive into the definition, strengths, weaknesses and use cases of simulated annealing…
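For readers who have not seen the technique before, here is a minimal sketch of our own (not taken from the linked post). It assumes a one-dimensional problem, a geometric cooling schedule, and the usual Metropolis acceptance rule. The toy cost function `bumpy`, the move generator `step`, and all parameter values are hypothetical choices for illustration.

```python
import math
import random


def simulated_annealing(cost, neighbor, x0, t0=1.0, cooling=0.995, steps=5000, seed=0):
    """Minimize `cost` starting from x0; returns (best_x, best_cost)."""
    rng = random.Random(seed)
    x, fx = x0, cost(x0)
    best, fbest = x, fx
    t = t0
    for _ in range(steps):
        cand = neighbor(x, rng)
        fc = cost(cand)
        delta = fc - fx
        # Always accept improvements; accept worse moves with probability
        # exp(-delta / t), which shrinks as the temperature t cools.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = cand, fc
            if fx < fbest:
                best, fbest = x, fx
        t *= cooling  # geometric cooling schedule (an assumption of this sketch)
    return best, fbest


def bumpy(x):
    """Toy cost with several local minima."""
    return x * x + 10 * math.sin(x)


def step(x, rng):
    """Propose a nearby candidate by a small random move."""
    return x + rng.uniform(-1.0, 1.0)


best_x, best_cost = simulated_annealing(bumpy, step, x0=8.0)
print(best_x, best_cost)
```

Occasionally accepting a worse candidate is what lets the search climb out of local minima early on; as the temperature drops, it behaves more and more like plain greedy descent.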

Programming Beyond Cognitive Limitations with AI

Our natural processing power is limited, and leveraging AI for assistance can help us to use it more efficiently, especially when it comes to reading and understanding code…AI can help us to work around some of these natural limits: by comprehending and understanding code faster, we can learn more efficiently and dedicate our focus to higher-level coding tasks…Let’s talk about how AI can augment our processing power by tying cognitive science concepts to specific programming tasks we can offload…

Using Planetary Forensics To Visualize Historic Drought In The Horn Of Africa

Understanding the ebb and flow of water in the Horn of Africa can help to gauge the severity of drought and get ahead of widespread famine. The current multi-year drought coincides with the La Niña climate system, which typically reduces rainfall in the Horn of Africa while increasing it elsewhere like Southeast Asia…

We’re looking for engineers with a background in machine learning & artificial intelligence to improve our products and build new capabilities. You'll be driving fundamental and applied research in this area. You’ll be combining industry best practices and a first-principles approach to design and build ML models and infrastructure that will improve Figma’s design and collaboration tool.

What you’ll do at Figma:

Drive fundamental and applied research in ML/AI, with Figma product use cases in mind

Formulate and implement new modeling approaches both to improve the effectiveness of Figma’s current models as well as enable the launch of entirely new AI-powered product features

Work in concert with other ML researchers, as well as product and infrastructure engineers to productionize new models and systems to power features in Figma’s design and collaboration tool

Explore the boundaries of what is possible with the current technology set and experiment with novel ideas.

Apply here

Want to post a job here? Email us for details --> team@datascienceweekly.org

* Based on unique clicks.

** Find last week's issue #489 here.

Thanks for joining us this week :)

All our best,

Hannah & Sebastian

P.S.,

Please consider becoming a paid subscriber here: https://datascienceweekly.substack.com/subscribe

:)

Copyright © 2013-2023 DataScienceWeekly.org, All rights reserved.