Platformer - The AI is eating itself

Here’s your free edition of Platformer for this week: a look at the lessons we’re learning from the prolonged revolt of Reddit users, and what it means for the future of social networks. Paid subscribers get the most out of Platformer. Most recently, we broke the news that Twitter is stiffing Google on its cloud computing bills. We’d love to send you scoops like these — and we’d love for you to support our work. Just upgrade your subscription and we’ll start sending them your way.

Today let’s look at some early notes on the effect of generative artificial intelligence on the broader web, and think through what it means for platforms. At The Verge, James Vincent surveys the landscape and finds a dizzying number of changes to the consumer internet over just the past few months. He writes:

The rapid diffusion of text generated by large language models around the web cannot be said to come as any real surprise. In December, when I first covered the promise and the perils of ChatGPT, I led with the story of Stack Overflow getting overwhelmed with the AI’s confident bullshit. From there, it was only a matter of time before platforms of every variety began to experience their own version of the problem.

To date, these issues have been covered mostly as annoyances. Moderators of various sites and forums are seeing their workloads increase, sometimes precipitously. Social feeds are becoming cluttered with ads for products generated by bots. Lawyers are getting in trouble for unwittingly citing case law that doesn’t actually exist. For every paragraph that ChatGPT instantly generates, it seems, it also creates a to-do list of facts that need to be checked, plagiarism to be considered, and policy questions for tech executives and site administrators.

When GPT-4 came out in March, OpenAI CEO Sam Altman tweeted: “it is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it.” The more we all use chatbots like his, the more this statement rings true. For all of the impressive things it can do — and if nothing else, ChatGPT is a champion writer of first drafts — there also seems to be little doubt that it is corroding the web.

On that point, two new studies offered some cause for alarm. (I discovered both in the latest edition of Import AI, the indispensable weekly newsletter from Anthropic co-founder and former journalist Jack Clark.)

The first study, which had an admittedly small sample size, found that crowd-sourced workers on Amazon’s Mechanical Turk platform increasingly admit to using LLMs to perform text-based tasks.
Studying the output of 44 workers using “a combination of keystroke detection and synthetic text classification,” researchers from EPFL write that they “estimate that 33–46% of crowd workers used LLMs when completing the task.” (The task here was to summarize the abstracts of medical research papers — one of the things today’s LLMs are supposed to be relatively good at.)

Academic researchers often use platforms like Mechanical Turk to conduct research in social science and other fields. The promise of the service is that it gives researchers access to a large, willing, and cheap body of potential research participants. Until now, the assumption has been that they would answer truthfully based on their own experiences.

In a post-ChatGPT world, though, academics can no longer make that assumption. Given the mostly anonymous, transactional nature of the assignment, it’s easy to imagine a worker signing up to participate in a large number of studies and outsourcing all their answers to a bot. This “raises serious concerns about the gradual dilution of the ‘human factor’ in crowdsourced text data,” the researchers write.

“This, if true, has big implications,” Clark writes. “It suggests the proverbial mines from which companies gather the supposed raw material of human insights are now instead being filled up with counterfeit human intelligence.” He adds that one solution here would be to “build new authenticated layers of trust to guarantee the work is predominantly human generated rather than machine generated.” But surely those systems will be some time in coming.

A second, more worrisome study comes from researchers at the University of Oxford, University of Cambridge, University of Toronto, and Imperial College London. It found that training AI systems on data generated by other AI systems — synthetic data, to use the industry’s term — causes models to degrade and ultimately collapse.
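The degradation described by the second study can be illustrated with a deliberately tiny toy. It is a sketch of the general idea, not the researchers’ actual experimental setup. Here each generation’s “model” is just a bell curve (a Gaussian) fitted to a handful of samples drawn from the previous generation’s model. The function `simulate_generations` and its parameters (`samples_per_gen`, `real_fraction`) are hypothetical names chosen for illustration. Because each fit sees only a small, finite sample, it tends to underestimate the spread of the data, and those errors compound from one generation to the next, so the fitted spread (`sigma`) drifts toward zero. Mixing a share of fresh human data into every generation via `real_fraction` is one simple, assumed way to model using synthetic data sparingly.

```python
import random
import statistics


def simulate_generations(generations: int, samples_per_gen: int,
                         real_fraction: float = 0.0, seed: int = 0) -> list[float]:
    """Toy model-collapse loop (hypothetical): each generation's 'model' is a
    Gaussian fitted to data drawn partly from the previous generation's model."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the true, human-data distribution N(0, 1)
    history = [sigma]
    n_real = round(samples_per_gen * real_fraction)
    for _ in range(generations):
        # Fresh human-generated data always comes from the true distribution.
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        # The rest is synthetic: sampled from the previous generation's model.
        synthetic = [rng.gauss(mu, sigma) for _ in range(samples_per_gen - n_real)]
        data = real + synthetic
        # "Train" the next model by maximum-likelihood fitting of a Gaussian.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        history.append(sigma)
    return history


# Purely synthetic chain vs. a chain where half of each generation is fresh human data.
collapsed = simulate_generations(100, 10)
anchored = simulate_generations(100, 10, real_fraction=0.5)
print(f"purely synthetic: {collapsed[-1]:.2e}  half human: {anchored[-1]:.2f}")
```

In runs like this, the purely synthetic chain’s spread tends to shrink by orders of magnitude, while the anchored chain stays far from zero; the exact values depend on the seed and parameters.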
While the decay can be managed by using synthetic data sparingly, researchers write, the idea that models can be “poisoned” by feeding them their own outputs raises real risks for the web. And that’s a problem, because — to bring together the threads of today’s newsletter so far — AI output is spreading to encompass more of the web every day.

“The obvious larger question,” Clark writes, “is what this does to competition among AI developers as the internet fills up with a greater percentage of generated versus real content.”

When tech companies were building the first chatbots, they could be certain that the vast majority of the data they were scraping was human-generated. Going forward, though, they’ll be ever less certain of that — and until they figure out reliable ways to identify chatbot-generated text, they’re at risk of breaking their own models.

What we’ve learned about chatbots so far, then, is that they make writing easier to do — while also generating text that is annoying and potentially disruptive for humans to read. Meanwhile, AI output can be dangerous for other AIs to consume — and, the second group of researchers predicts, will eventually create a robust market for data sets that were created before chatbots came along and began to pollute the models.

At The Verge, Vincent argues that the current wave of disruption will ultimately bring some benefits, even if it’s only to unsettle the monoliths that have dominated the web for so long. “Even if the web is flooded with AI junk, it could prove to be beneficial, spurring the development of better-funded platforms,” he writes. “If Google consistently gives you garbage results in search, for example, you might be more inclined to pay for sources you trust and visit them directly.”

Perhaps. But I also worry the glut of AI text will leave us with a web where the signal is ever harder to find in the noise.
Early results suggest that these fears are justified — and that everyone on the internet, no matter their job, may soon find themselves having to exert ever more effort seeking signs of intelligent life.

Industry

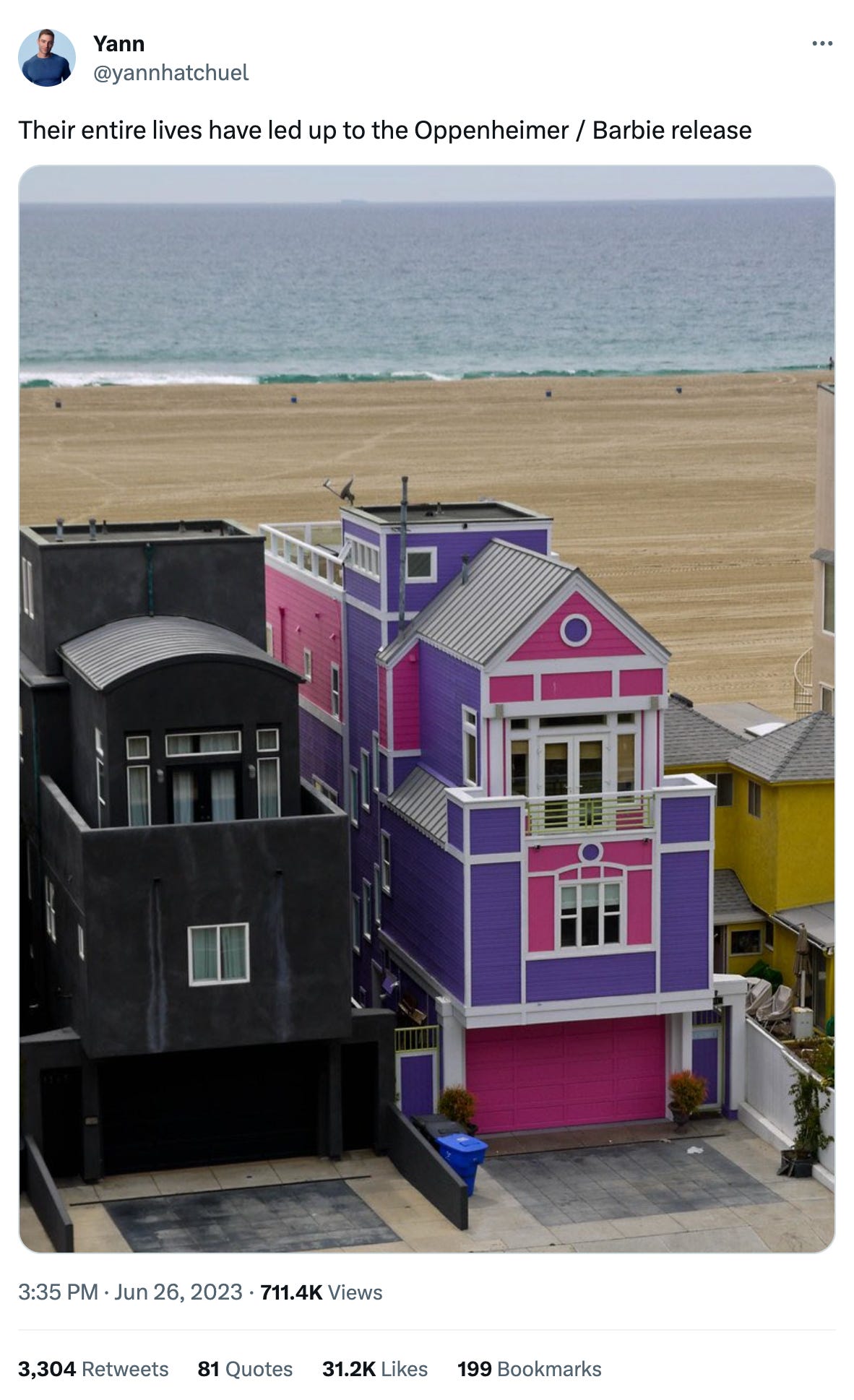

Talk to us

Send us tips, comments, questions, and AI-free copy: casey@platformer.news and zoe@platformer.news.