Hello and thank you for tuning in to Issue #505.

Once a week we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

Seeing this for the first time? Subscribe here:

Want to support us? Become a paid subscriber here.

If you don’t find this email useful, please unsubscribe here.

And now, let's dive into some interesting links from this week:

:)

Large language models, explained with a minimum of math and jargon

The goal of this article is to make a lot of knowledge accessible to a broad audience. We’ll aim to explain what’s known about the inner workings of these models without resorting to technical jargon or advanced math. We’ll start by explaining word vectors, the surprising way language models represent and reason about language. Then we’ll dive deep into the transformer, the basic building block for systems like ChatGPT. Finally, we’ll explain how these models are trained and explore why good performance requires such phenomenally large quantities of data…

Real-Real-World Programming with ChatGPT

I wanted to create a well-scoped project to test how well AI handles a realistic yet manageable real-world programming task. Here’s how I worked on it: I subscribed to ChatGPT Plus and used the GPT-4 model in ChatGPT to help me with design and implementation. I also installed the latest VS Code with GitHub Copilot and the experimental Copilot Chat plugins, but I ended up not using them much. I found it easier to keep a single conversational flow within ChatGPT rather than switching between multiple tools. Lastly, I tried not to search for help on Google, Stack Overflow, or other websites, which is what I would normally be doing while programming. In sum, this is me trying to simulate the experience of relying as much as possible on ChatGPT to get this project done…

Building and operating a pretty big storage system called S3

What I’d really like to share with you more than anything else is my sense of wonder at the storage systems that are all collectively being built at this point in time, because they are pretty amazing. In this post, I want to cover a few of the interesting nuances of building something like S3, and the lessons learned and sometimes surprising observations from my time in S3…

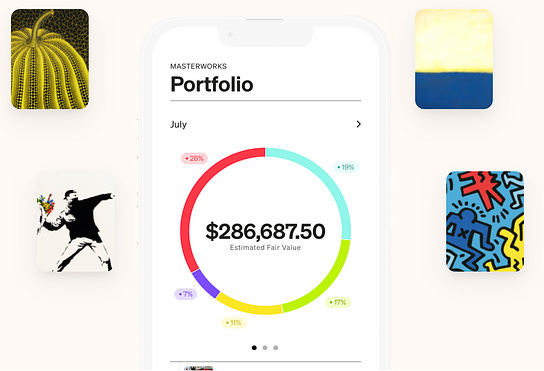

100% true. Research has proven that a portfolio with just 5% invested in contemporary art has historically driven not only higher returns, but also a better risk-adjusted appreciation rate versus a “traditional” portfolio of 60% stocks and 40% bonds.

Translation: more money in your bank account, with fewer ups and downs.

But how is the average person supposed to get access to an asset that has been the exclusive domain of the ultra-rich for centuries? The answer is Masterworks, an award-winning platform for investing in fractionalized works of art.

Beyond being easy to use, Masterworks has completed 14 exits on its artwork, all of them profitable, with the three most recent sales realizing annualized net returns of 17.8%, 21.5%, and 35% for investors.

Today, Data Science Weekly readers can get priority access to its latest offerings by skipping the waitlist with this exclusive link.

See important disclosures at masterworks.com/cd

Want to sponsor the newsletter? Email us for details --> team@datascienceweekly.org

Could a purely self-supervised Foundation Model achieve grounded language understanding?

Is it possible for language models to achieve language understanding? My current answer is, essentially, “Well, we don’t currently have compelling reasons to think they can’t.” This video/post is mainly devoted to supporting this answer by critically reviewing prominent arguments that language models are intrinsically limited in their ability to understand language…

Royal Statistical Society publishes new data visualisation guide

We are pleased to announce the publication of our new guide, ‘Best Practices for Data Visualisation’, containing insights, advice, and examples (with code) to make data outputs more impactful. The guide begins with an overview of why we visualise data, and then discusses the core principles and elements of data visualisations – including the structure of charts and tables, and how those structures can be refined to aid readability…

Accelerating PyTorch Model Training

Last week, I gave a talk on "Scaling PyTorch Model Training With Minimal Code Changes" at CVPR 2023 in Vancouver… For future reference, and for those who couldn't attend, I wanted to try a little experiment and convert the talk into a short article, which you can find below!… So, this article delves into how to scale PyTorch model training with minimal code changes. The focus here is on leveraging mixed-precision techniques and multi-GPU training paradigms, not low-level machine optimizations. We will use a simple Vision Transformer (ViT) trained to classify images as our base model…

Rewarding Chatbots for Real-World Engagement with Millions of Users

This work investigates the development of social chatbots that prioritize user engagement to enhance retention…The proposed approach uses automatic pseudo-labels collected from user interactions to train a reward model that can be used to reject low-scoring sample responses generated by the chatbot model at inference time. Intuitive evaluation metrics, such as mean conversation length (MCL), are introduced as proxies to measure the level of engagement of deployed chatbots. A/B testing on groups of 10,000 new daily chatbot users on the Chai Research platform shows that this approach increases the MCL by up to 70%, which translates to a more than 30% increase in user retention for a GPT-J 6B model…
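The two ideas in that abstract, rejecting low-scoring samples at inference time and tracking mean conversation length, can be sketched in a few lines. This is only an illustration under stated assumptions: `best_of_n`, `mean_conversation_length`, and `toy_reward` are hypothetical names, the toy scoring rule stands in for the trained reward model, and conversation length is assumed to be counted in messages.

```python
from statistics import mean


def best_of_n(candidates, reward_fn):
    """Keep the highest-scoring candidate response; the others are rejected."""
    if not candidates:
        raise ValueError("need at least one candidate response")
    return max(candidates, key=reward_fn)


def mean_conversation_length(conversations):
    """Average number of messages per conversation (assumed unit: messages)."""
    return mean(len(conversation) for conversation in conversations)


def toy_reward(response):
    # Hypothetical stand-in for a trained reward model: favors longer
    # replies (capped at 20 words) and replies that end with a question.
    score = min(len(response.split()), 20) / 20
    if response.rstrip().endswith("?"):
        score += 0.5
    return score


# Sample several candidate replies, then keep only the best-scoring one.
candidates = ["ok", "That sounds fun! What did you do next?", "I see."]
chosen = best_of_n(candidates, toy_reward)
```

In a deployed system the candidates would be sampled from the chatbot model and the reward function would be a learned model, so the scoring rule here should be read as a placeholder only.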

Urbanity: automated modelling and analysis of multidimensional networks in cities

In this work, we introduce Urbanity, a network-based Python package to automate the construction of feature-rich urban networks anywhere and at any geographical scale. We discuss data sources, the features of our software, and a set of data representing the networks of five major cities around the world. We also test the usefulness of added context in our networks by classifying different types of connections within a single network. Our findings extend accumulated knowledge about how spaces and flows within city networks work, and affirm the importance of contextual features for analyzing city networks…

The “percentogram”—a histogram binned by percentages of the cumulative distribution, rather than using fixed bin widths

I wondered if you have seen this type of thing before, and what you think? – basically, it is like a histogram or density plot in that it shows the overall shape of the distribution, but what I find nice is that each bar is made to have the same area and to specifically represent a chosen percentage. One could call it a “percentogram.” Hence, it is really easy to assess how much of the distribution is falling in particular ranges. You can also specifically color code the bars according to e.g., particular quantiles, deciles etc…
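For readers who want to try the idea, here is a minimal sketch of how the bars could be computed, using only the Python standard library. The function name `percentogram` and its `percent` parameter are our own assumptions, not taken from the linked post: bin edges sit at evenly spaced quantiles, and each bar's height is chosen so that its area equals the chosen share of the data.

```python
from statistics import quantiles


def percentogram(values, percent=10):
    """Return (left, right, height) bars that each cover `percent`% of the data.

    Assumes `percent` is an integer that divides 100 evenly and that
    `values` contains at least two points.
    """
    if 100 % percent != 0:
        raise ValueError("percent must divide 100 evenly")
    n_bins = 100 // percent
    data = sorted(values)
    # Interior bin edges are the quantile cut points; the "inclusive"
    # method keeps them inside the observed range of the data.
    cuts = quantiles(data, n=n_bins, method="inclusive")
    edges = [data[0], *cuts, data[-1]]
    share = percent / 100
    bars = []
    for left, right in zip(edges, edges[1:]):
        width = right - left
        # Density height: width * height equals `share`, so all bars have
        # equal area. Tied edges give zero width, reported as infinite height.
        height = share / width if width > 0 else float("inf")
        bars.append((left, right, height))
    return bars


# Ten bars, each holding 10% of the observations.
bars = percentogram(range(101), percent=10)
```

Narrow, tall bars then mark regions where the data are dense, while wide, short bars mark sparse regions, and every bar still represents the same fraction of observations.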

Barclays Capital Inc. seeks Assistant Vice President, Data Scientist in New York, NY (multiple positions available):

* Write Extract, Transform, Load (ETL) code to read from our data sources, and load data for analysis using source control (git; bitbucket) to version-control code contributions

* Encapsulate analysis code built on the ETL code to make work reusable by the team

* Automate analysis processes using Spark, Python, Pandas, SpaCy, Tensorflow, Keras, PyTorch, and other open-source large-scale computing and statistical software

* Create and maintain a Reddit data pipeline, with ad hoc maintenance to serve requests

* Review other coworkers’ contributions to our shared repository

* Telecommuting benefits permitted

Apply here

Want to post a job here? Email us for details --> team@datascienceweekly.org

What We Know About LLMs (Primer)

The madness of crowds aside, it is worth reflecting on what we concretely know about LLMs at this point in time and how these insights sparked the latest AI fervor. This will help put into perspective the relevance of current research efforts and the possibilities that abound…

LLM-Reading-List

Just helping myself keep track of LLM papers that I’m reading, with an emphasis on inference and model compression.

Factor models and Synthetic Control

First, I'll share what a factor model is and then I'll circle back to how it connects to synthetic controls. As an important disclaimer: the synthetic control estimator is consistent under a wide variety of data-generating processes. However, the factor model is a leading case and it is pedagogically useful…

* Based on unique clicks.

** Find last week's issue #504 here.

Thanks for joining us this week :)

All our best,

Hannah & Sebastian

P.S.,

If you found this newsletter helpful, consider supporting us by becoming a paid subscriber here: https://datascienceweekly.substack.com/subscribe :)

Copyright © 2013-2023 DataScienceWeekly.org, All rights reserved.