📝 Guest Post: Build Trustworthy LLM Apps With Rapid Evaluation, Experimentation and Observability*

In this guest post, Vikram Chatterji, CEO and co-founder of Galileo, introduces us to their LLM Studio. It provides a powerful evaluation, experimentation and observability platform across the LLM application development lifecycle (prompting with RAG, fine-tuning, production monitoring) to detect and minimize hallucinations through a suite of evaluation metrics. You can learn more about Galileo LLM Studio through their webinar on Oct 4. Let’s dive in.

With large language models (LLMs) increasing in size and popularity, we, as a data science community, have seen new needs emerge. LLM-powered apps have a different development lifecycle than traditional NLP-powered apps: prompt experimentation, testing multiple LLM APIs, RAG, and LLM fine-tuning. In speaking with practitioners across financial services, healthcare, and AI-native companies, it has become clear that LLMs require a new development toolchain. Namely, three big challenges facing LLM developers stand out:

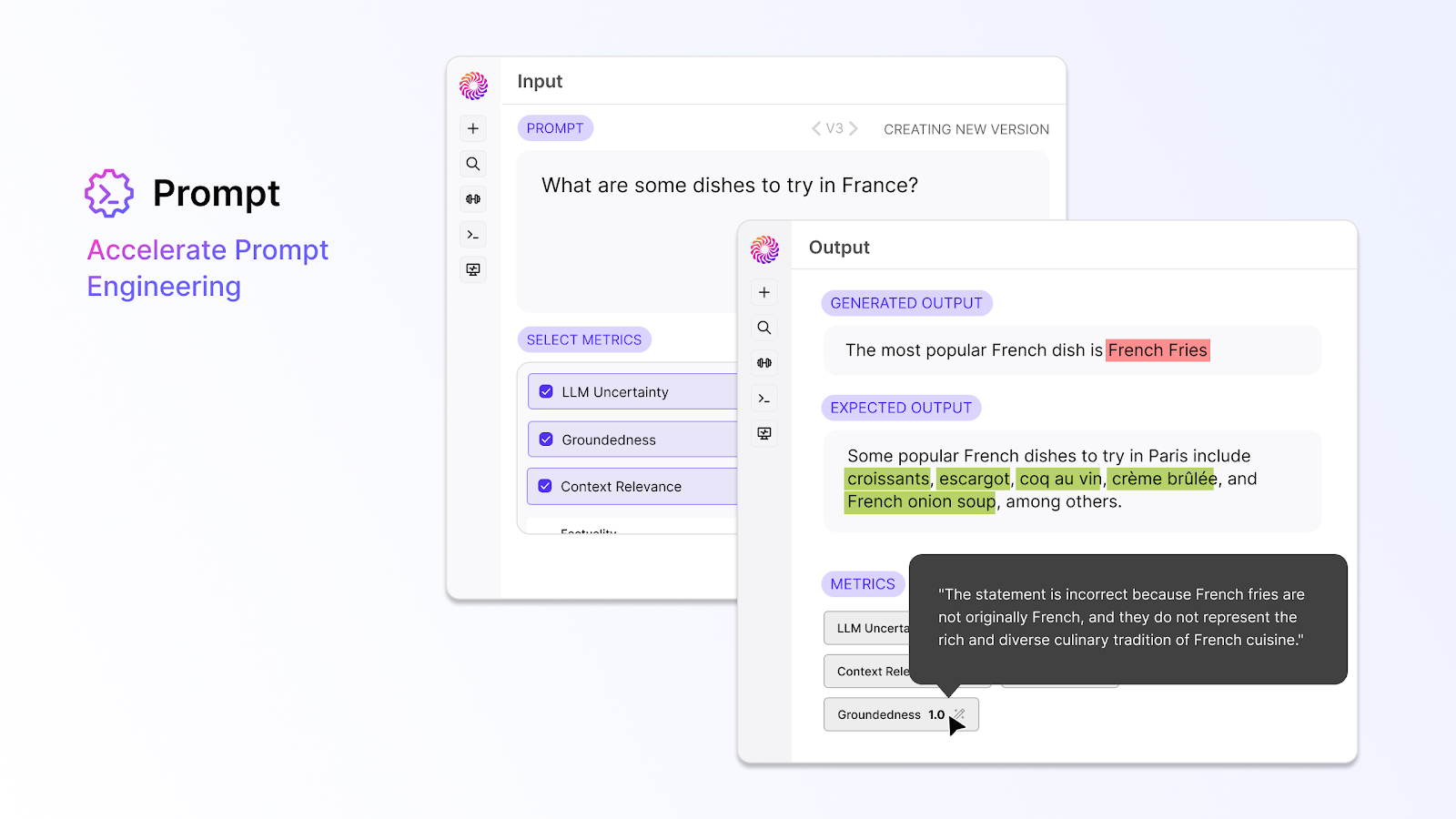

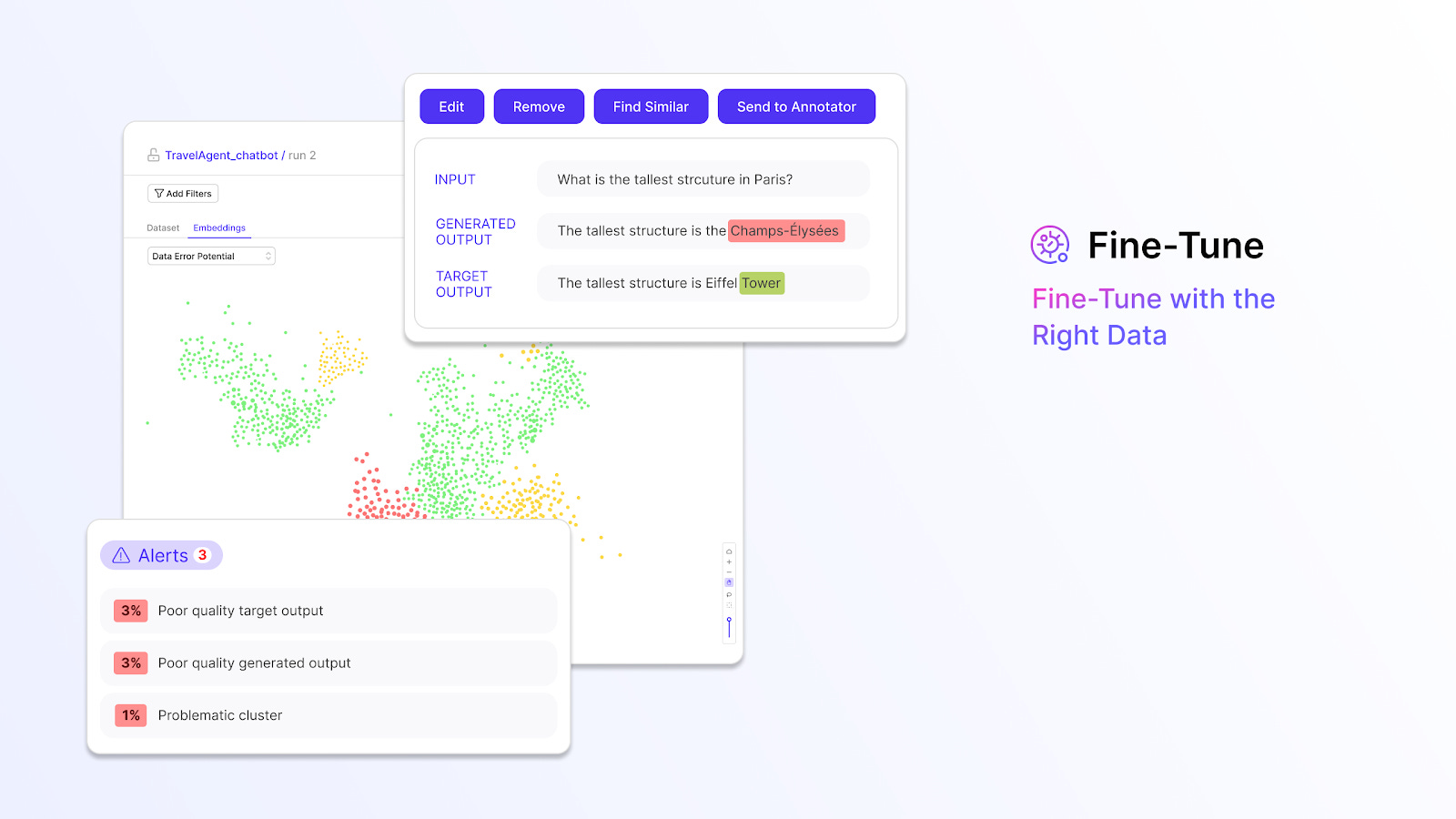

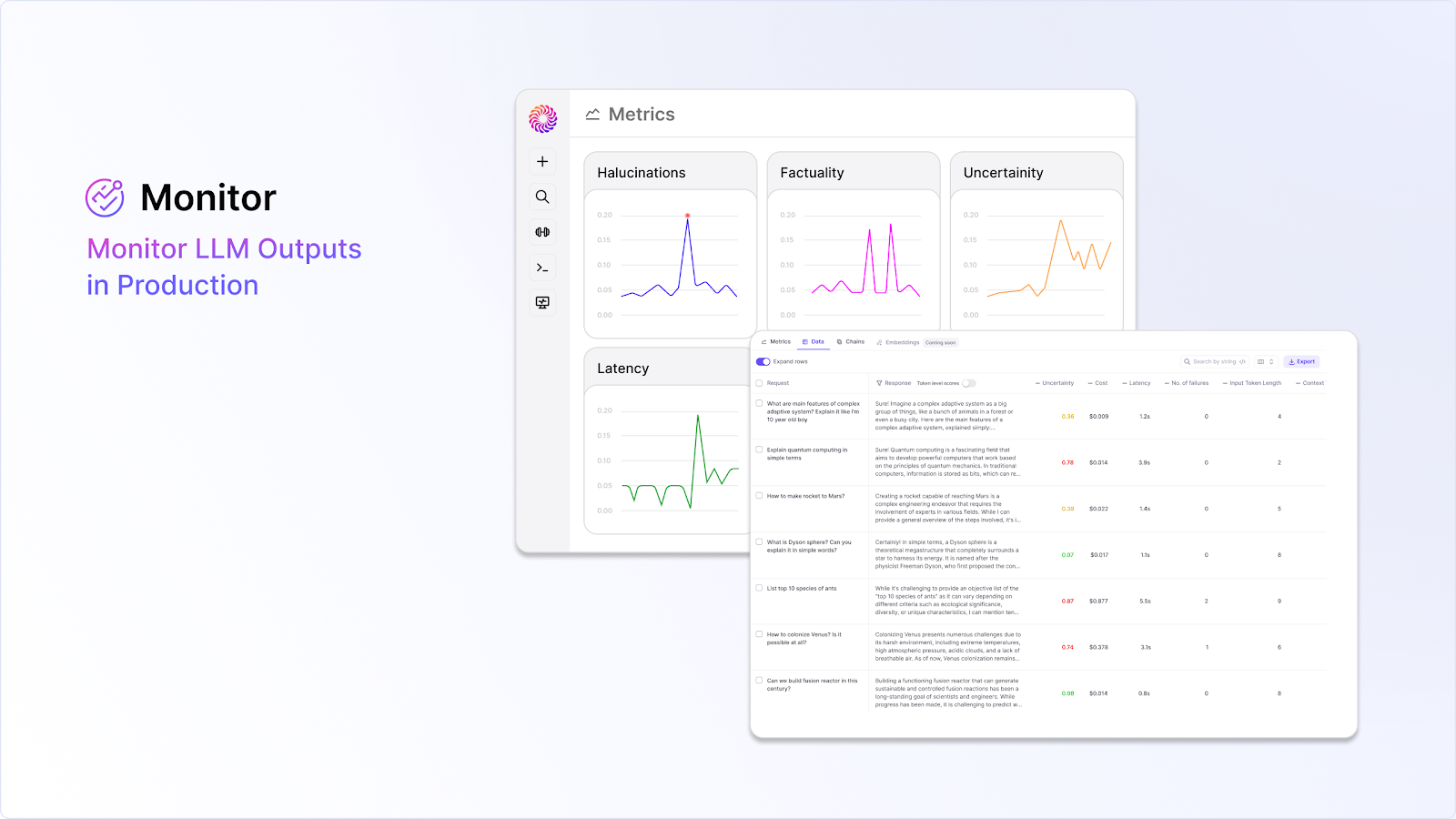

Introducing Galileo LLM Studio

LLM Studio helps you develop and evaluate LLM apps in hours instead of days. It is designed to help teams across the application development lifecycle, from evaluation and experimentation during development to observability and monitoring once in production. LLM Studio offers three modules (Prompt, Fine-Tune, and Monitor), so whether you’re using RAG or fine-tuning, LLM Studio has you covered.

1. Prompt

Prompt engineering is all about experimentation and root-cause analysis. Teams need a way to experiment with multiple LLMs, their parameters, prompt templates, and context from vector databases. The Prompt module helps you systematically experiment with prompts in order to find the best combination of prompt template, model, and parameters for your generative AI application. We know prompting is a team sport, so we’ve built features to enable collaboration with automatic version controls. Teams can use Galileo’s powerful suite of evaluation metrics to evaluate outcomes and detect hallucinations.

2. Fine-Tune

When fine-tuning an LLM, it is critical to leverage high-quality data. However, data debugging is painstaking, manual, and needs a bunch of iterations; moreover, leveraging labeling tools here balloons cost and time. The Fine-Tune module is an industry-first product built to automatically identify the most problematic training data for the LLM: incorrect ground truth, regions of low data coverage, low-quality data, and more. Coupled with collaborative experiment tracking and 1-click similarity search, Fine-Tune is perfect for data science teams and subject matter experts to work together towards building high-quality custom LLMs.

3. Monitor

Prompt engineering and fine-tuning are half the journey. Once an application is in production and in end-customers’ hands, the real work begins. Generative AI builders need governance frameworks in place to minimize the risk of LLM hallucinations in a scalable and efficient manner. This is especially important as generative AI is still in the early innings of winning end-user trust. To help with this, we’ve built Monitor, an all-new module that gives teams a common set of observability tools and evaluation metrics for real-time production monitoring. Apart from the usual tracing available, Monitor ties application metrics like user engagement, cost, and latency to ML metrics used to evaluate models and prompts during training, like Uncertainty, Factuality, and Groundedness. Teams can set up alerts so they can be notified and conduct root-cause analysis the second something seems off.

A Unified Platform to Drive Continuous Improvement

While each of these modules provides value in its own right, the greatest value-unlock comes from these modules operating on a single fully integrated platform. A core principle for building AI-powered apps should be ‘Evaluation First’: everything starts and ends with the ability to evaluate and inspect your application. This is why Galileo offers a Guardrail Metrics Store, equipped with a common set of research-backed evaluation metrics that users can use across Prompt, Fine-Tune, and Monitor. Our Guardrail Metrics include powerful new metrics from Galileo's in-house ML Research Team (e.g. Uncertainty, Factuality, Groundedness). You can also define your own custom evaluation metrics. Together, these metrics help teams minimize the risk of LLM hallucinations and bring more trustworthy applications to market.

Building LLM-powered applications can be tricky. Poor quality prompts, context, data or LLMs can quickly lead to hallucinatory responses. To find out more about how you can perform metrics-powered evaluation and experimentation across the LLM app development lifecycle, sign up for our upcoming webinar here!

*This post was written by the Galileo team exclusively for TheSequence. We thank Galileo for their ongoing support of TheSequence.

