The Most Obvious Secret in AI: Every Tech Giant Will Build Its Own Chips

Sundays, The Sequence Scope brings a summary of the most important research papers, technology releases and VC funding deals in the artificial intelligence space.

Next Week in The Sequence:

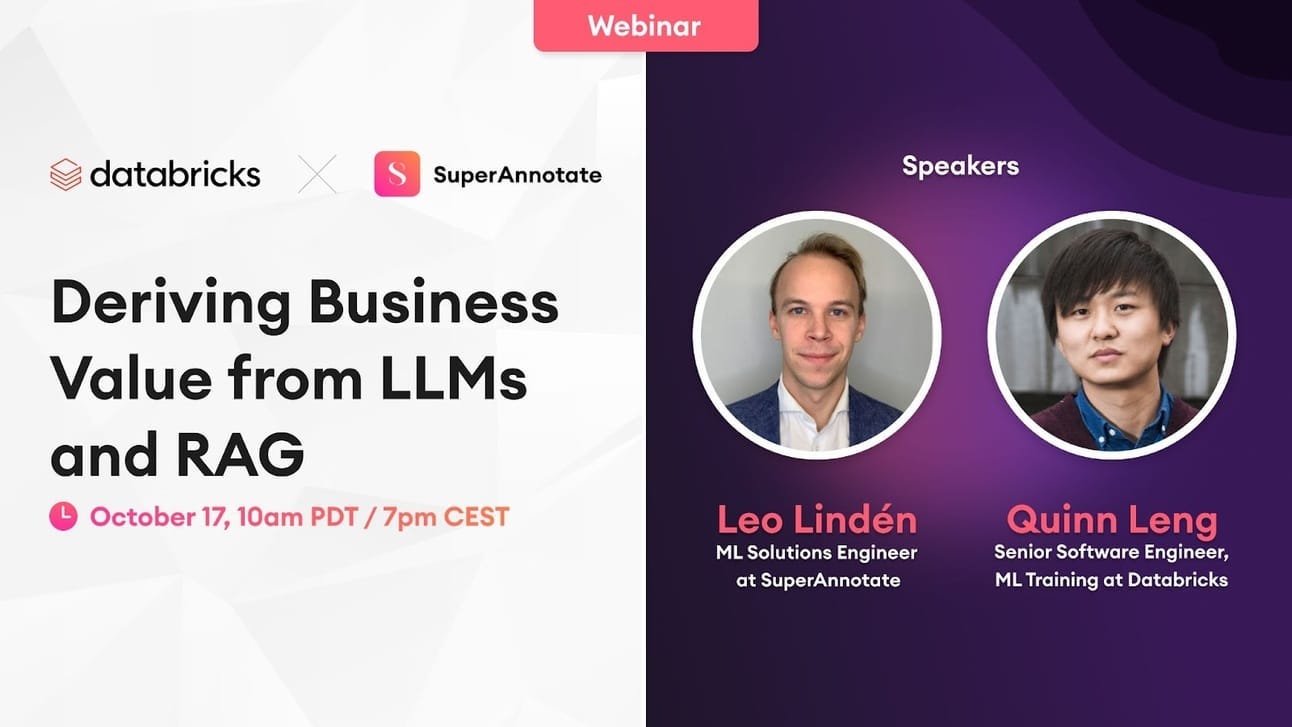

📝 Editorial: The Most Obvious Secret in AI: Every Tech Giant Will Build Its Own Chips

NVIDIA reigns as the undisputed king of the AI hardware market, a position that has propelled the company to a market capitalization of roughly one trillion dollars. That dominance has created an unwelcome dependency for AI platform providers, one that often constrains their products. Even tech giants such as Microsoft and Amazon have run into GPU shortages when pretraining or fine-tuning their massive foundation models. The dependency is even harder on AI startups, which are forced into multi-year leases of GPU infrastructure as a defensive competitive move.

Reducing the reliance on NVIDIA GPUs is a natural step in the evolution of generative AI, and the most obvious path is for tech incumbents to develop their own AI chips. Google is the primary example of this trend: the search giant is ushering in a new generation of its tensor processing unit (TPU) technology, which is widely used in Google Cloud. Just last week, reports surfaced that OpenAI is exploring options to develop its own AI chips. Similarly, Microsoft has been working on its own AI chip for a while and is expected to debut it next month. Amazon has released its Inferentia AI chip, which is particularly interesting given the company's supply chain expertise. It can even be argued that companies like AMD might become attractive acquisition targets for incumbents seeking a competitive alternative to NVIDIA.

The current generation of foundation models has not yet hit any limits in terms of scaling laws. This means that, contrary to the beliefs of some skeptics, these models will continue to grow in size for the foreseeable future. Building these larger models will require massive amounts of computing power, and NVIDIA need not be the only source of it.
Within a few months, we can expect every major cloud platform incumbent to start manufacturing its own AI chips.

📌 Webinar: Deriving Business Value from LLMs and RAG

Date: October 17th, 10 am PDT / 7 pm CEST

We are excited to support an upcoming webinar with Databricks and SuperAnnotate on how to derive business value from Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG). In this webinar, Leo and Quinn will delve into these capabilities and show how to assess these models for alignment with your objectives. Join us for a knowledge-packed session that offers actionable insights for companies, big or small, looking to leverage the might of LLMs and RAG. Equip yourself with the information to drive strategic AI decisions. Secure your spot today. It’s free (of course).

🔎 ML Research

DALL-E 3

OpenAI published a paper covering some of the technical details behind DALL-E 3. It describes elements of the DALL-E 3 readiness process, including expert red teaming, evaluation and safety -> Read more.

Llama Ecosystem

Meta AI Research published an analysis of the adoption of its Llama 2 model. The writeup also covers some of Llama's future areas of focus, including multimodality -> Read more.

Scaling Learning for Different Robots

Google DeepMind published a paper and dataset detailing Open X-Embodiment, a dataset for robotics training. The paper also discusses a robotics transformer model that can transfer skills across different robot embodiments -> Read more.

Contrastive Learning for Data Representation

Amazon Science published two papers proposing contrastive learning techniques that can improve data representations in ML models. The first paper proposes a training function that creates useful representations while keeping memory and training costs manageable. The second paper proposes geometric constraints on representations that make them more useful for downstream tasks -> Read more.
Text2Reward

Researchers from Microsoft Research, CMU, University of Hong Kong and others published a paper discussing Text2Reward, a method that can generate a reinforcement learning reward function from natural language inputs. The method takes a goal described in language as input and generates a dense reward function based on a representation of the environment -> Read more.

🤖 Cool AI Tech Releases

LMSYS-Chat-1M

LMSYS, the organization behind Vicuna and Chatbot Arena, open sourced a dataset containing one million real-world conversations with LLMs -> Read more.

PyTorch 2.1

PyTorch released a new version with interesting updates in areas such as tooling, audio generation and acceleration -> Read more.

🛠 Real World ML

Embeddings at LinkedIn

LinkedIn discusses the embedding architectures used to power its job search capabilities -> Read more.

Meta Contributions to Python’s New Version

Meta outlines some of its recent contributions to Python 3.12 -> Read more.

📡 AI Radar


