📝 Guest Post: Do We Still Need Vector Databases for RAG with OpenAI's Built-In Retrieval?

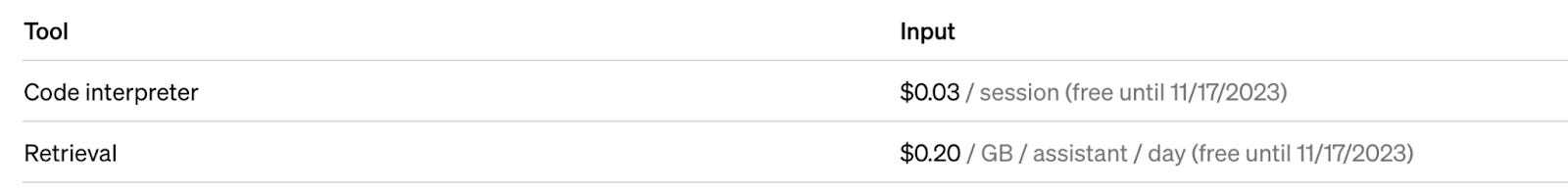

In this guest post, Jael Gu, an algorithm engineer at Zilliz, delves into the constraints of OpenAI's built-in retrieval and walks you through creating a customized retriever using Milvus, an open-source vector database.

OpenAI once again made headlines with a slew of releases during its DevDay, unveiling the GPT-4 Turbo model, the new Assistants API, and a range of enhancements. The Assistants API is a powerful tool for crafting bespoke AI applications tailored to specific needs. It also lets developers tap into additional knowledge, longer prompts, and tools for various tasks.

While OpenAI Assistants come with an integrated retrieval feature, it is not perfect: it restricts data scale and offers little room for customization. This is precisely where a custom retriever steps in. By harnessing OpenAI's function calling capabilities, developers can integrate a customized retriever, expanding the amount of additional knowledge available and better fitting diverse use cases. Let's dive in!

Limitations of OpenAI's retrieval in Assistants and the role of custom retrieval solutions

OpenAI's built-in Retrieval feature represents a leap beyond the model's inherent knowledge, enabling users to augment it with extra data such as proprietary product information or user-provided documents. However, it has notable limitations.

Scalability constraint

OpenAI Retrieval imposes per-file and total storage limits that may fall short for extensive document repositories:

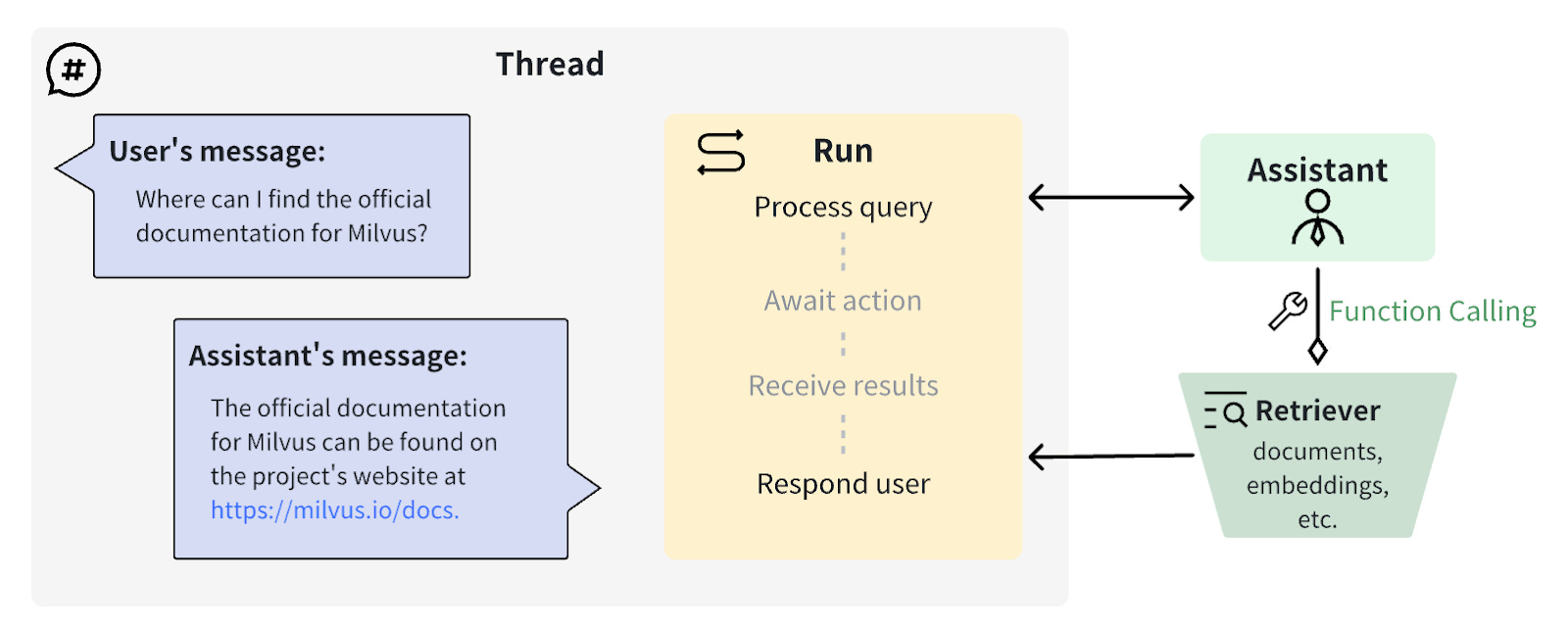

For organizations with extensive data repositories, these limits pose real challenges: they need a solution that scales without hitting storage ceilings. Integrating a custom retriever powered by a vector database such as Milvus or Zilliz Cloud (the managed Milvus service) works around the file limitations inherent in OpenAI's built-in Retrieval.

Lack of customization

While OpenAI's Retrieval offers a convenient out-of-the-box solution, it cannot align with every application's specific needs, especially regarding latency and customization of the search algorithm. A third-party vector database gives developers the flexibility to configure and optimize the retrieval process to meet production requirements and improve overall efficiency.

Lack of multi-tenancy

Retrieval is a built-in feature of OpenAI Assistants that supports only individual use. If you are a developer aiming to serve millions of users with both shared documents and each user's private information, the built-in feature cannot help. Replicating shared documents into every user's Assistant escalates storage costs, while having all users share one Assistant makes it hard to support user-specific private documents. The following graph shows that storing documents in OpenAI Assistants is expensive ($6 per GB per month; for reference, AWS S3 charges $0.023 per GB per month), which makes storing duplicate documents on OpenAI extremely wasteful.

For organizations with extensive datasets, a scalable, efficient, and cost-effective retriever that meets their operational demands is essential. Fortunately, with OpenAI's flexible function calling capability, developers can integrate a custom retriever with OpenAI Assistants. This lets businesses use OpenAI's AI capabilities while keeping the scalability and flexibility their unique needs require.
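To put the multi-tenancy storage prices quoted above in perspective, here is a back-of-the-envelope calculation. It uses only the two prices from the post ($6 and $0.023 per GB per month); the workload of 1 GB of shared documents and 1,000 users is a hypothetical example, and the S3 figure is only a reference point, not the cost of running a vector database such as Milvus.

```python
"""Back-of-the-envelope storage cost comparison using the prices quoted in the post."""

# Monthly storage prices quoted in the post, in USD per GB per month.
OPENAI_ASSISTANTS_PER_GB = 6.00
AWS_S3_PER_GB = 0.023


def per_user_copies_cost(shared_gb: float, users: int, price_per_gb: float) -> float:
    """Every user's Assistant stores its own copy of the shared documents."""
    return shared_gb * users * price_per_gb


def single_copy_cost(shared_gb: float, price_per_gb: float) -> float:
    """The shared documents are stored once and served to all users."""
    return shared_gb * price_per_gb


if __name__ == "__main__":
    # Hypothetical workload: 1 GB of shared documents, 1,000 users.
    shared_gb, users = 1.0, 1_000
    duplicated = per_user_copies_cost(shared_gb, users, OPENAI_ASSISTANTS_PER_GB)
    once_openai = single_copy_cost(shared_gb, OPENAI_ASSISTANTS_PER_GB)
    once_s3 = single_copy_cost(shared_gb, AWS_S3_PER_GB)
    print(f"Duplicated in OpenAI:   ${duplicated:,.2f}/month")   # $6,000.00/month
    print(f"One copy in OpenAI:     ${once_openai:,.2f}/month")  # $6.00/month
    print(f"One copy at S3 pricing: ${once_s3:,.3f}/month")      # $0.023/month
```

The duplication cost grows linearly with the number of users, so at the "millions of users" scale mentioned above, per-user copies of even a small shared corpus dominate the bill; storing shared documents once in an external retriever removes that multiplier.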
Leveraging Milvus for customized OpenAI retrieval

Milvus is an open-source vector database that can store and search billions of vectors within milliseconds. It is also highly scalable, so it can keep pace with rapidly growing business needs. Its rapid scaling and ultra-low latency make Milvus one of the top choices for building a highly scalable, efficient retriever for your OpenAI assistant.

Building a custom retriever with OpenAI function calling and the Milvus vector database

Let's start building the custom retriever and integrate it with OpenAI by following the step-by-step guide.
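As a rough orientation, here is a minimal, dependency-free sketch of the pieces such a retriever involves, under stated assumptions: the in-memory store stands in for a Milvus collection, `toy_embed` stands in for a real embedding model, and the names `search_knowledge_base`, `InMemoryRetriever`, and `handle_tool_call` are hypothetical. It shows the retriever described as a function tool and a handler that turns the model's JSON arguments into passages, with a per-user filter for the multi-tenancy case discussed earlier.

```python
"""Toy custom retriever exposed through function calling (all names hypothetical).

The in-memory store plays the role Milvus would play; `toy_embed` replaces a real embedding model.
"""
import json
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical fixed vocabulary for the toy bag-of-words embedding.
VOCAB = ["milvus", "vector", "database", "refund", "policy", "invoice", "assistant", "pricing"]


def toy_embed(text: str) -> list[float]:
    """Count vocabulary words in the text (placeholder for a real embedding model)."""
    words = [w.strip(".,?!").lower() for w in text.split()]
    return [float(words.count(term)) for term in VOCAB]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Chunk:
    text: str
    embedding: list[float]
    owner: Optional[str] = None  # None marks a document shared by all users


class InMemoryRetriever:
    """Brute-force stand-in for a vector database collection with a tenant filter."""

    def __init__(self) -> None:
        self.chunks: list[Chunk] = []

    def add(self, text: str, owner: Optional[str] = None) -> None:
        self.chunks.append(Chunk(text, toy_embed(text), owner))

    def search(self, query: str, user_id: str, top_k: int = 2) -> list[str]:
        q = toy_embed(query)
        # Each user sees the shared documents plus only their own private ones.
        scored = [(cosine(q, c.embedding), c.text)
                  for c in self.chunks if c.owner in (None, user_id)]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        # Drop passages with no similarity at all to the query.
        return [text for score, text in scored[:top_k] if score > 0]


# Function tool definition in the JSON-schema shape used by OpenAI function calling.
RETRIEVER_TOOL = {
    "type": "function",
    "function": {
        "name": "search_knowledge_base",  # hypothetical tool name
        "description": "Search shared and user-private documents for relevant passages.",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "Natural-language search query."},
            },
            "required": ["query"],
        },
    },
}


def handle_tool_call(arguments: str, user_id: str, retriever: InMemoryRetriever) -> str:
    """Run the retriever for one tool call and return the output string to submit back.

    `arguments` is the JSON string produced by the model; `user_id` comes from the
    application's own session, never from the model.
    """
    args = json.loads(arguments)
    passages = retriever.search(args["query"], user_id=user_id)
    return json.dumps({"passages": passages})


if __name__ == "__main__":
    store = InMemoryRetriever()
    store.add("Our refund policy allows returns within 30 days.")  # shared
    store.add("Invoice 42 for alice is due in March.", owner="alice")
    store.add("Invoice 7 for bob is overdue.", owner="bob")
    print(handle_tool_call('{"query": "invoice status"}', "alice", store))
    # prints {"passages": ["Invoice 42 for alice is due in March."]}
```

The key design choice is that the model only supplies the query, while the tenant identity is injected by the application, so one Assistant can serve many users without exposing private documents. In a real integration with the openai Python SDK, the tool definition would go in the Assistant's `tools` list, and when a run reaches the `requires_action` status, each tool call's `function.arguments` would be passed to a handler like this and the results returned via `client.beta.threads.runs.submit_tool_outputs`. Swapping in Milvus would mean storing the owner as a scalar field and applying it as a filter at search time; treat those details as assumptions to check against the Milvus documentation.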
