📝 Guest Post: How to Build the Right Team for Generative AI*

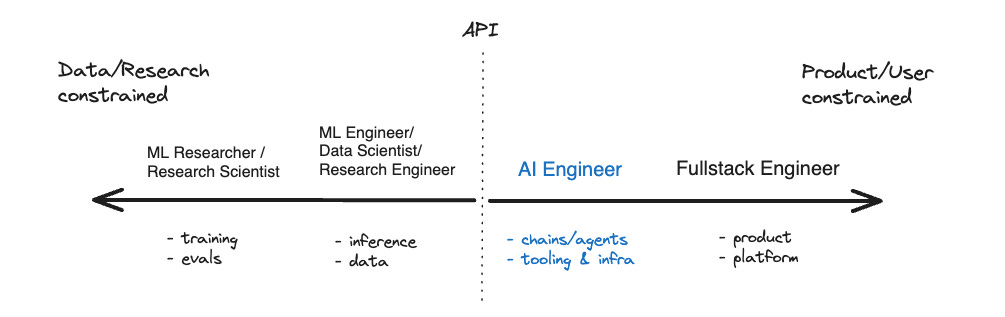

Generative AI and Large Language Models (LLMs) are new to most companies. If you are an engineering leader building Gen AI applications, it can be hard to know what skills and types of people you need. In this post, Raza Habib, CEO & Co-founder at Humanloop, shares what they have learned about the skills needed to build a great AI team while helping hundreds of companies put LLMs into production.

You probably don’t need ML engineers

In the last two years, the technical sophistication needed to build with AI has dropped dramatically. At the same time, the capabilities of AI models have grown. This creates an incredible opportunity for more companies to adopt AI, because you probably already have most of the talent you need in-house.

ML engineers used to be crucial to AI projects because you needed to train custom models from scratch. Training your own fully bespoke ML models requires stronger mathematical skills, an understanding of data science concepts, and proficiency with ML tools such as TensorFlow or PyTorch.

Large Language Models like GPT-4, or open-source alternatives like LLaMa, come pre-trained with general knowledge of the world and language. Much less sophistication is needed to use them. With traditional ML, you needed to collect and manually annotate a dataset before designing an appropriate neural network architecture and then training it from scratch. With LLMs, you start with a pre-trained model and can customize that same model for many different applications via a technique called "prompt engineering".

Prompt engineering is a key skillset

“Prompt engineering” is simply the skill of articulating very clearly in natural language what you want the model to do and ensuring that the model is provided with all the relevant context. These natural language instructions, or “prompts”, become part of your application’s codebase and replace the annotated dataset that you previously had to collect to build an AI product.

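
To make the idea of a prompt living in the codebase concrete, here is a minimal sketch in Python. The prompt wording, the `SALES_EMAIL_PROMPT` name, and the `build_prompt` helper are all hypothetical and chosen only for illustration; they are not taken from Humanloop or any customer. The point is simply that the instructions are plain language a domain expert can edit, while the surrounding code supplies the context.

```python
from string import Template

# Hypothetical prompt for an illustrative sales-email feature. The wording and
# placeholder names are assumptions made for this sketch.
SALES_EMAIL_PROMPT = Template(
    "You are an assistant that helps salespeople write clear, friendly emails.\n"
    "Product: $product\n"
    "Recipient role: $recipient_role\n"
    "Write a short outreach email of at most $max_words words."
)


def build_prompt(product: str, recipient_role: str, max_words: int = 120) -> str:
    """Fill the template with the context the model needs."""
    return SALES_EMAIL_PROMPT.substitute(
        product=product,
        recipient_role=recipient_role,
        max_words=max_words,
    )


if __name__ == "__main__":
    # The resulting string is what would be sent to whichever model you use.
    print(build_prompt("an invoicing tool", "finance manager"))
```

Keeping the instructions in one template means a non-engineer can change the wording without touching the code that gathers the context.
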
Prompt engineering is now one of the key skills in AI application development. To be good at prompt engineering, you need excellent written communication, a willingness to experiment, and a familiarity with the strengths and weaknesses of modern AI models. You don’t typically need any specific mathematical or technical knowledge. The people best suited to prompt engineering are the domain experts who best understand the needs of the end user; often these are the product managers.

Product managers and domain experts are increasingly important

Product managers and domain experts have always been vital for building excellent software, but their role is typically one step removed from actual implementation. LLMs change this. They make it possible for non-technical experts to directly shape AI products through prompt engineering. This saves expensive engineering time and also shortens the feedback loop from deployment to improvement.

We see this in action with Humanloop customers like Twain, which uses LLMs to help salespeople write better emails. The engineers at Twain build the majority of the application, but they are not well placed to understand how to write good sales emails because they lack domain knowledge. As a result, they are not the right people to customize the AI models. Instead, Twain employs linguists and salespeople as prompt engineers.

Another example is Duolingo, which has built several AI features powered by LLMs. Software engineers are not experts in language learning and would struggle to write good prompts for this situation. Instead, the engineers at Duolingo build the skeleton of the application that lives around the AI model, and a team of linguists is responsible for prompt development.

Generalist full-stack engineers can outperform AI specialists

Most of a typical AI application is still traditional code. Only the pieces that require complex reasoning are delegated to AI models.

The engineering team still builds the majority of the application, orchestrates model calls, establishes the infrastructure for prompt engineering, integrates data sources to augment the model's context, and optimizes performance.

When it comes to optimizing LLM performance, there are two common techniques your team will need to know: “fine-tuning” and “retrieval-augmented generation” (RAG). Fine-tuning means slightly adjusting the parameters of a pre-trained AI model using example data. RAG means augmenting a generative AI model with traditional information retrieval so that the model has access to private data.

Full-stack engineers with a broad understanding of different technologies, and the ability to learn quickly, should be able to implement both RAG and fine-tuning. They do not need deep machine-learning knowledge, because most models can now be accessed via API and there are increasingly specialist developer tools that make fine-tuning and RAG straightforward to implement. Compared to machine learning specialists, full-stack engineers tend to be more comfortable moving across the stack and are often more product-minded. In fact, there is a new job title emerging for generalist engineers who have a strong familiarity with LLMs and the tools around them: "The AI Engineer".

Product and engineering teams need to work closely together

One of the challenges of generative AI is that there are a lot of new workflows and most companies lack appropriate tooling. For AI teams to work well, there needs to be an easy way for domain experts to iterate on prompts. However, prompts affect your applications as much as code does, so they need to be versioned and evaluated with the same level of rigor as code. Traditional software tools like Git are not a good solution because they alienate the non-technical domain experts who are critical to success.

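
As a rough illustration of what versioning and evaluating prompts could look like, the sketch below gives each prompt an identifier derived from its text and scores it against a small set of test cases. Everything here is an assumption made for the example: `PromptVersion`, `evaluate`, the substring-based pass criterion, and `fake_model`, which stands in for a real LLM call so the code runs offline. It is not a description of how Humanloop or any other tool works.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class PromptVersion:
    """A prompt whose identifier changes whenever its text changes."""

    text: str

    @property
    def version_id(self) -> str:
        # Content hash: any edit to the prompt text yields a new identifier.
        return hashlib.sha256(self.text.encode("utf-8")).hexdigest()[:8]


def evaluate(
    prompt: PromptVersion,
    model: Callable[[str, str], str],
    cases: Sequence[Tuple[str, str]],
) -> float:
    """Return the fraction of cases whose output contains the expected phrase."""
    passed = 0
    for user_input, expected in cases:
        output = model(prompt.text, user_input)
        if expected.lower() in output.lower():
            passed += 1
    return passed / len(cases)


def fake_model(prompt_text: str, user_input: str) -> str:
    # Hypothetical stand-in for a real LLM call, used only to keep this runnable.
    greeting = "Dear" if "formal" in prompt_text else "Hey"
    return f"{greeting} {user_input}, thanks for your time."


if __name__ == "__main__":
    casual = PromptVersion("Write a casual greeting.")
    formal = PromptVersion("Write a formal greeting.")
    cases = [("Ms. Lee", "Dear"), ("Mr. Okafor", "Dear")]
    for version in (casual, formal):
        print(version.version_id, evaluate(version, fake_model, cases))
```

In practice the test cases would come from domain experts, and the pass criterion would usually be richer than a substring match, but the shape stays the same: every prompt edit gets its own identifier and its own score.
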
Often, teams end up using a mixture of stitched-together tools like the OpenAI playground, Jupyter notebooks, and complex Excel spreadsheets. The process is error-prone and leads to long delays. Building custom internal tools can be very expensive, and because the field of AI is evolving so rapidly, they are difficult to maintain.

Humanloop can help here by solving the most critical workflows around prompt engineering and evaluation. The platform gives companies an interactive environment where their domain experts, PMs, and engineers can work together to iterate on prompts. Coupled with this are tools for rigorously evaluating the performance of AI features, using both user feedback and automated evaluations. By providing the right tooling, Humanloop makes it much easier for your existing product teams to become your AI teams.

*This post was written by Raza Habib, CEO & Co-founder at Humanloop, specially for TheSequence. We thank Humanloop for their insights and ongoing support of TheSequence.
