Nvidia aims to become the world's first AI foundry - Weekly News Roundup - Issue #459

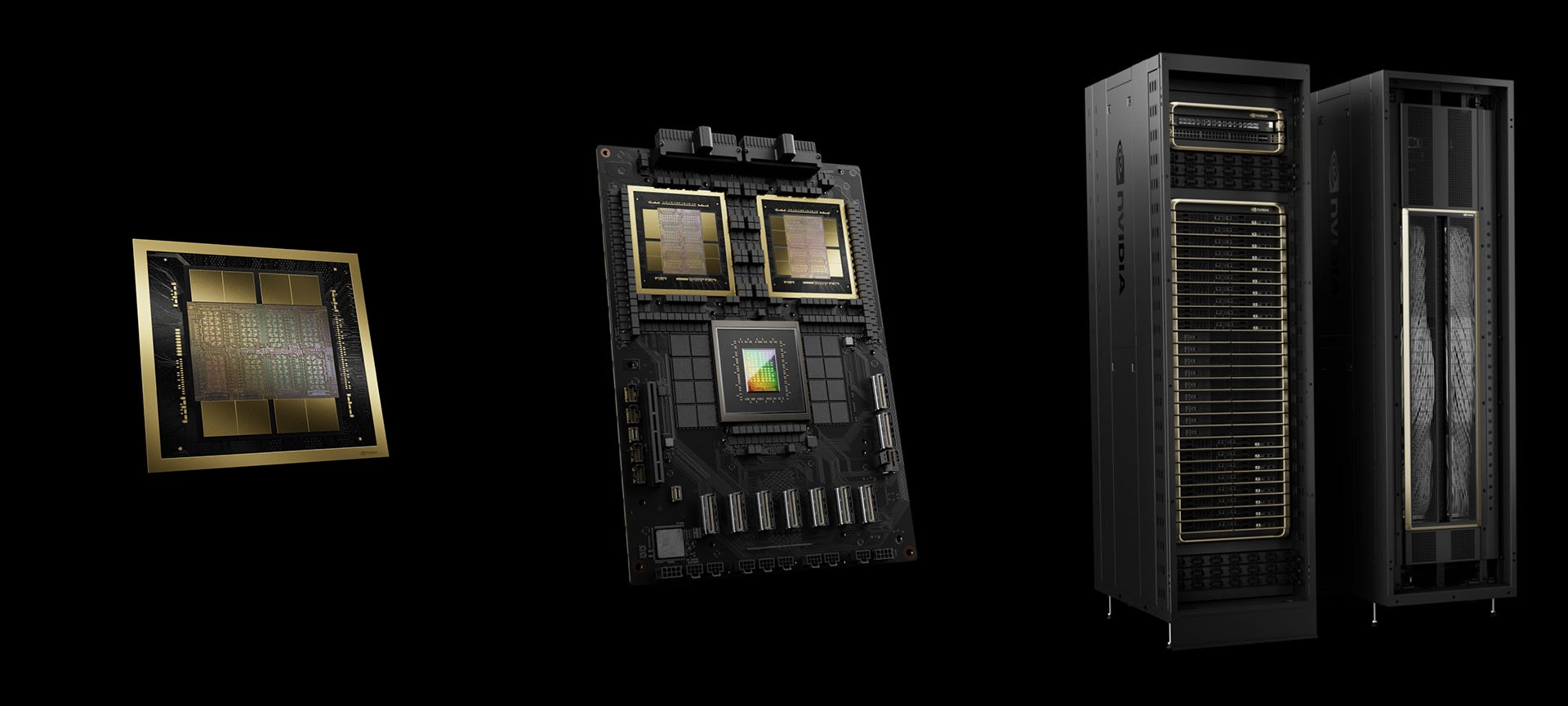

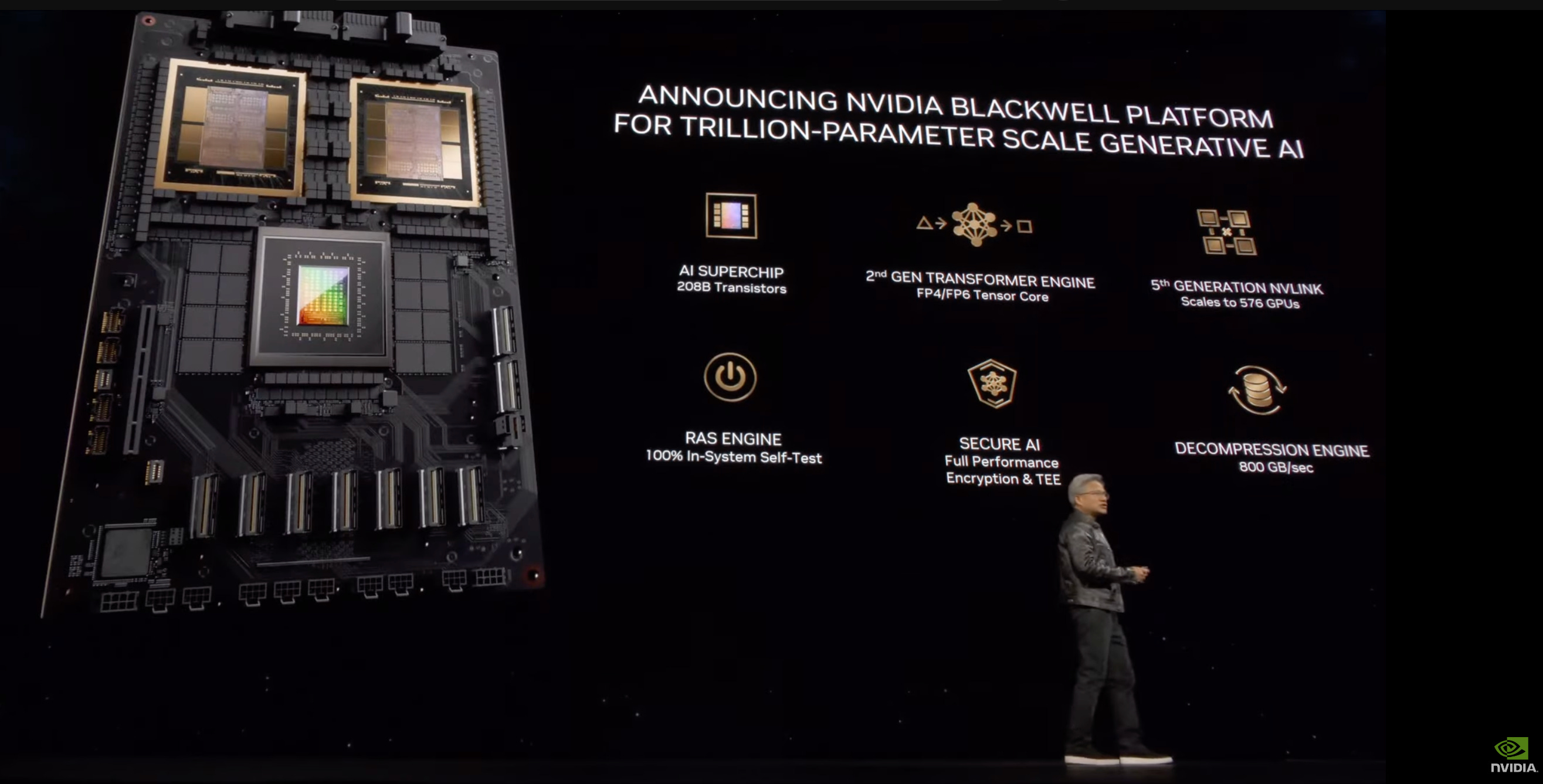

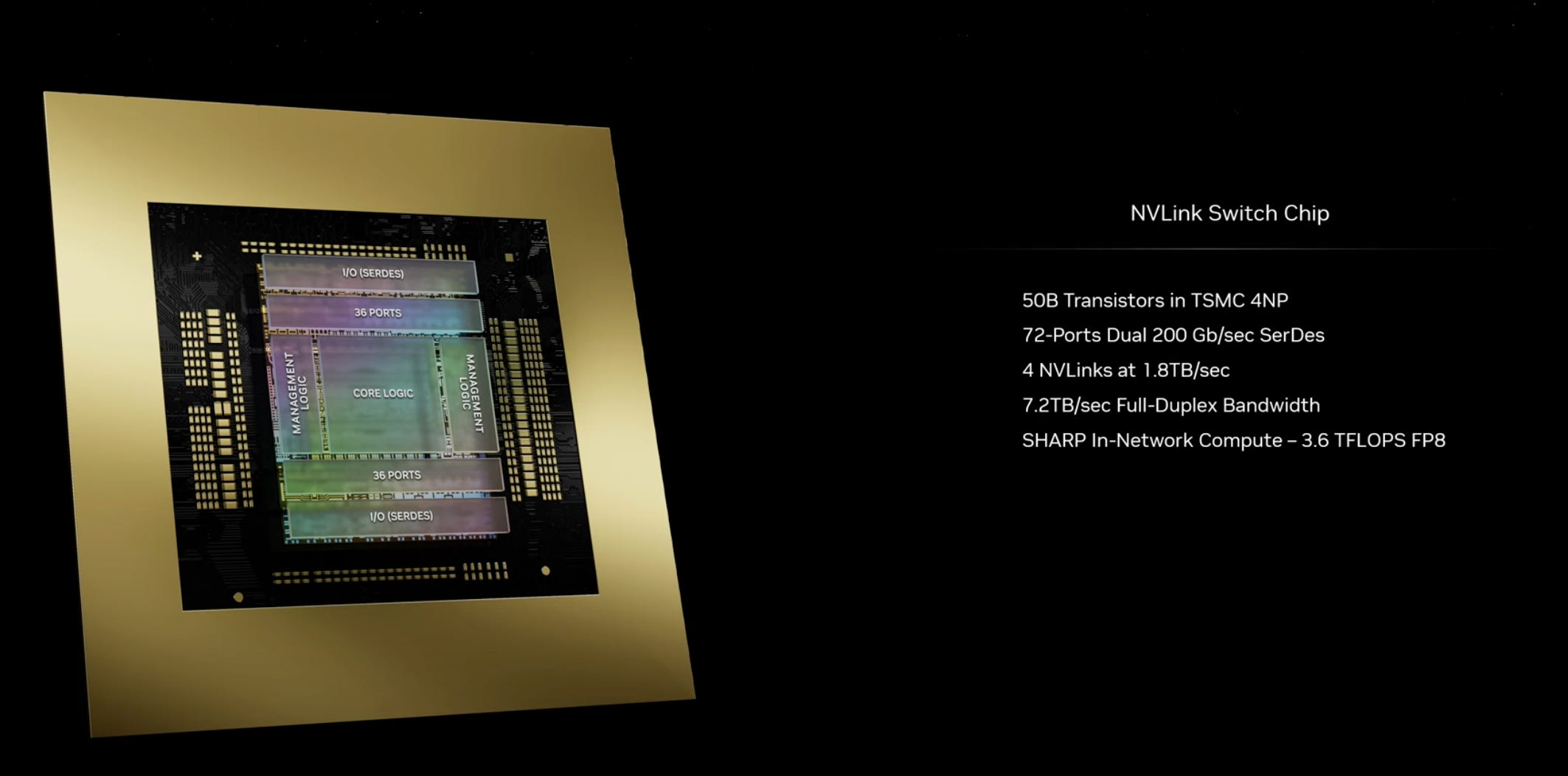

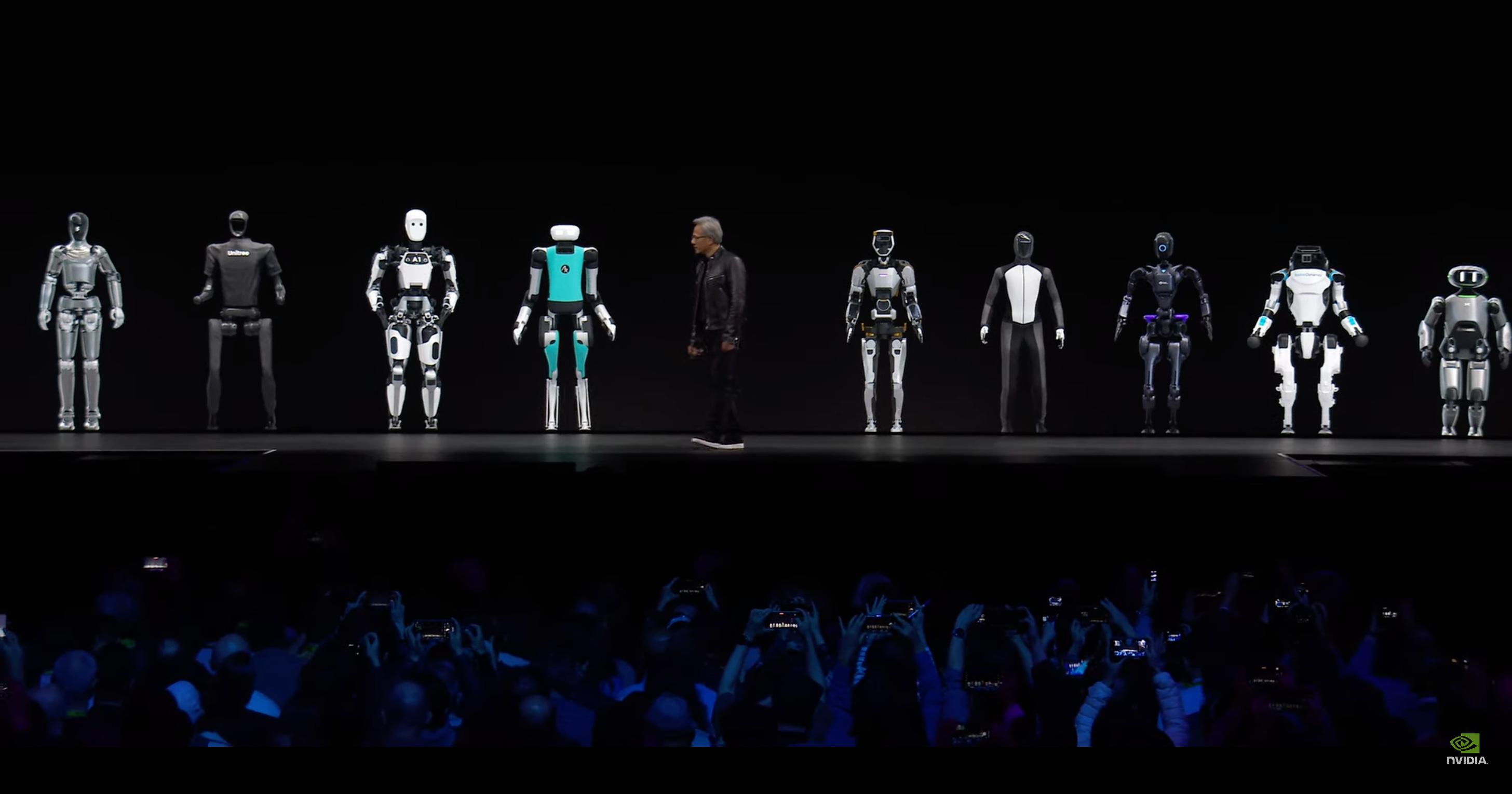

Plus: Microsoft + Inflection AI; Apple MM1; Grok is open source; a human with a Neuralink implant plays chess and Civilization; employees at top AI labs fear safety is an afterthought; and more!

Hello and welcome to Weekly News Roundup Issue #459. This week was dominated by Nvidia’s GTC event, during which Nvidia announced numerous new AI products and services. However, that was not the only notable development this week. In the world of AI, the founders of Inflection joined Microsoft, Elon Musk open-sourced Grok, and employees at top AI labs fear safety is an afterthought. Apple demonstrated its multimodal language model and discussed the potential use of Gemini with Google. Meanwhile, Neuralink introduced the first human with its neural implant, who showed how he can play games using the Neuralink device.

Before we dive into this week’s news roundup, I want to acknowledge that this issue is being released later than usual. A combination of events has prevented me from delivering not only the Weekly News Roundup on time but also the in-depth articles. However, that is always an opportunity to review my processes and put new ones in place to minimise the chances of this happening again.

I hope you'll enjoy this week's issue.

2023 was a great year for Nvidia. Every AI company, from large players like OpenAI and Microsoft to newly founded startups, was vying for Nvidia’s top H100 or at least A100 GPUs. Riding the AI wave, the company exceeded expectations quarter after quarter in 2023, became a key supplier of highly sought-after GPUs for training top AI models, and joined the elite club of companies valued at over one trillion dollars. All just in time for the company to celebrate the 30th anniversary of its founding.
With this in the background, Nvidia held GTC this week, inviting everyone to see what new products and services the company is releasing this year. The most anticipated release was the new generation of high-end GPUs based on the new Blackwell architecture, but Nvidia also surprised with some other interesting products and services.

I noticed two themes after watching Jensen Huang’s two-hour-long, densely packed keynote (which also contained Jensen’s bad jokes and occasional cringe). First, on the hardware side, Nvidia decided to go bigger: bigger chips, bigger computers, more memory and more computing power to train even bigger AI models. Second, Nvidia is refocusing itself on combining hardware and software to become what Jensen Huang called the world’s first AI foundry.

“We need bigger GPUs”

The star of the show was Blackwell, Nvidia’s newest GPU architecture. Unlike previous generations of Nvidia GPUs, a Blackwell GPU has two chips connected to make one massive chip, making it Nvidia’s first multi-die chip. Each chip has 104 billion transistors, for a total of 208 billion transistors. For comparison, the previous Hopper-based H100 has 80 billion transistors. According to Huang, the two chips are connected in such a way that they think they are one big chip, not two separate chips. In total, a Blackwell GPU offers 20 petaFLOPS of AI performance and 192 GB of HBM3e memory with 8 TB/s of memory bandwidth.

Next, two Blackwell GPUs can be combined with one Grace CPU to create a GB200 superchip. Two GB200 superchips go inside a Blackwell Compute Node which, together with Nvidia’s NVLinks, is the basic building block of the Nvidia DGX SuperPOD, which offers a total of 11.5 exaFLOPS of AI performance (with FP4 numbers). These DGX SuperPODs can then be scaled up even further to create the “full datacenter” with 32,000 Blackwell GPUs offering 645 exaFLOPS of AI performance, 13 petabytes of memory and superfast networking connecting all these chips.
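To make the scaling figures above easier to check, here is a rough back-of-the-envelope sketch in Python. It only uses the numbers quoted above; the function names are my own, and it assumes the 20 petaFLOPS per GPU figure is quoted at the same FP4 precision as the SuperPOD and datacenter figures, which the keynote summary does not state explicitly.

```python
# Back-of-the-envelope check of the Blackwell scaling figures quoted above.
# All constants come from the text; helper names are illustrative only.

TRANSISTORS_PER_CHIP = 104_000_000_000  # each of the two chips in a Blackwell GPU
CHIPS_PER_GPU = 2
PFLOPS_PER_GPU = 20                     # petaFLOPS of AI performance per GPU (assumed FP4)
PFLOPS_PER_EXAFLOPS = 1_000


def total_transistors(per_chip=TRANSISTORS_PER_CHIP, chips=CHIPS_PER_GPU):
    """Transistor count of one Blackwell GPU made of `chips` connected chips."""
    return per_chip * chips


def aggregate_exaflops(gpu_count, pflops_per_gpu=PFLOPS_PER_GPU):
    """Naive peak performance of `gpu_count` GPUs, ignoring any scaling losses."""
    return gpu_count * pflops_per_gpu / PFLOPS_PER_EXAFLOPS


def implied_gpu_count(exaflops, pflops_per_gpu=PFLOPS_PER_GPU):
    """How many GPUs a quoted exaFLOPS figure implies at the per-GPU rate."""
    return exaflops * PFLOPS_PER_EXAFLOPS / pflops_per_gpu


if __name__ == "__main__":
    print(f"Transistors per GPU: {total_transistors():,}")
    print(f"32,000 GPUs: {aggregate_exaflops(32_000)} exaFLOPS")
    print(f"GPUs implied by 11.5 exaFLOPS: {implied_gpu_count(11.5)}")
```

Under these assumptions, 32,000 GPUs multiply out to 640 exaFLOPS, close to the quoted 645, and the 11.5 exaFLOPS SuperPOD figure implies roughly 575 GPUs. The small gaps suggest the quoted totals are rounded or measured slightly differently, so treat this as a sanity check rather than a specification.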
According to Nvidia, the DGX SuperPOD is ready for processing trillion-parameter models.

That’s a lot of numbers and names thrown at you. Tera this, peta that. This part of the keynote nicely explains visually how Nvidia scales from a single Blackwell chip up to the full datacenter.

To better illustrate what kind of jump in performance Blackwell is bringing to the table, Jensen Huang shared that training what was labelled as GPT-MoE-1.8T on one of the slides (which is almost certainly GPT-4, thus confirming the rumoured structure of OpenAI’s current top model) required 90 days and 8,000 H100 GPUs. With Blackwell, the same task would need only 2,000 GPUs in the same timeframe. Moreover, a Blackwell-based supercomputer would also be more energy-efficient: instead of the 15 MW consumed by the Hopper-based system, a Blackwell-based system would require just 4 MW.

The Blackwell system introduces several interesting features. With the RAS Engine, the GB200 superchip can monitor the system, perform self-tests and diagnostics, and notify users if something is about to go wrong, thus improving the utilization of the entire supercomputer. The GB200 superchip also introduces a second-generation Nvidia Transformer Engine, which can automatically recast numbers into lower-precision 4-bit FP4 numbers, speeding up calculations. Additionally, it includes built-in security features to encrypt data at rest, in transit, and during computation.

Even though Blackwell was the star of the show, Nvidia also showcased interesting developments in chip-to-chip communication. Modern AI models are too large to fit into a single GPU and need to be distributed across multiple GPUs. However, these GPUs must communicate with each other and exchange vast amounts of data, creating a bottleneck that limits the entire system's performance.
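To see why a model of this size cannot live on one GPU, here is a small illustrative calculation. It takes the 1.8 trillion parameters from the GPT-MoE-1.8T label and the 192 GB of memory per Blackwell GPU, and counts weights only. Activations, optimizer state and caches are ignored, gigabytes are treated as 10^9 bytes, and the helper names are mine, so real deployments would need noticeably more GPUs than this lower bound.

```python
import math

# Weights-only memory estimate for a 1.8-trillion-parameter model.
# Figures come from the text; everything else is a simplifying assumption.

PARAMS = 1_800_000_000_000      # from the "GPT-MoE-1.8T" label
GPU_MEMORY_GB = 192             # HBM3e per Blackwell GPU
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def weights_gb(params, bytes_per_param):
    """Size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def min_gpus(params, bytes_per_param, gpu_memory_gb=GPU_MEMORY_GB):
    """Lower bound on GPUs needed just to hold the weights."""
    return math.ceil(weights_gb(params, bytes_per_param) / gpu_memory_gb)


if __name__ == "__main__":
    for name, size in BYTES_PER_PARAM.items():
        print(f"{name}: {weights_gb(PARAMS, size):,.0f} GB of weights, "
              f"at least {min_gpus(PARAMS, size)} GPUs")
```

Even at 4-bit precision the weights alone come to about 900 GB, several times the memory of a single GPU. Spreading them across many GPUs is exactly what makes inter-GPU communication the bottleneck.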
To solve this bottleneck, Nvidia built the NVLink Switch Chip, which allows every GPU in the system to communicate with any other GPU, effectively turning the entire system into one big GPU.

For more details on Nvidia’s new Blackwell architecture and how it compares to previous generations, I recommend checking out this deep dive from AnandTech, which has all the numbers compared alongside excellent in-depth technical analysis.

NIMs

In addition to accelerating the AI industry with hardware, Nvidia also aims to support the industry with software. Setting up AI models can be challenging, especially for companies lacking in-house AI expertise. To address this issue and simplify the deployment of AI models, Nvidia introduced NIMs.

NIMs, short for Nvidia Inference Microservices, are pre-trained models ready to use in various tasks. The goal is to package easy-to-use AI models as containers that enterprises can use straight away or as a base for custom AI workflows. NIM currently supports models from Nvidia, AI21, Adept, Cohere, Getty Images, and Shutterstock, as well as open models from Google, Hugging Face, Meta, Microsoft, Mistral AI, and Stability AI. Nvidia is collaborating with Amazon, Google, and Microsoft to make these NIM microservices available on their platforms. Additionally, NIMs will be integrated into frameworks like Deepset, LangChain, and LlamaIndex.

No one expected humanoid robots

One development I didn't anticipate was Nvidia jumping onto the humanoid robotics hype train. Humanoid robots are just around the corner, with some already being tested by companies like Amazon, BMW, and Mercedes-Benz. Nvidia sensed an opportunity here and introduced Project GR00T, a foundation model for humanoid robots. GR00T (short for "Generalist Robot 00 Technology" and spelt with zeroes to avoid any copyright issues with Disney) is intended as a starting point for these robots.
According to Nvidia, robots equipped with GR00T are designed to understand natural language and emulate human movements by observation, quickly acquiring the coordination, dexterity, and other skills necessary to navigate, adapt, and interact with the real world.

In addition to GR00T, Nvidia announced a new computing platform named Jetson Thor, designed specifically for humanoid robots. The company states that this new platform, based on the Nvidia Blackwell architecture, can perform complex tasks and interact safely and naturally with both people and machines. It features a modular architecture optimized for performance, power, and size.

The Robot Report has a very good in-depth article covering everything robotics-related announced at GTC 2024, including GR00T. If you're interested in learning more about what Nvidia has to offer for robotics, this is a great resource.

Nvidia as an AI Foundry

The generative AI revolution (or bubble, depending on your perspective) propelled Nvidia to become the third most valuable company in the world, valued at $2.357 trillion, trailing only Apple and Microsoft.

Nvidia sees its future in AI. The company has effectively become the sole provider of hardware used to train the world's most advanced models and continues to deliver even more powerful hardware for training and deploying these sophisticated models. However, Nvidia does not want to stop at hardware. It also aims to introduce its own proprietary software solutions to help businesses fully leverage AI. By combining AI hardware and software, Nvidia aspires to become the world’s first AI foundry.

One thing is for certain: the new hardware presented at GTC 2024 will enable the training and deployment of even more powerful AI models. Who knows, maybe there are already a couple of thousand Blackwell GPUs in Azure data centres crunching numbers for the upcoming GPT-5.

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work? Consider becoming a paying subscriber to support it.

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is generous support for the work put into this newsletter.
Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

- Watch Neuralink’s First Human Subject Demonstrate His Brain-Computer Interface
- How one streamer learned to play video games with only her mind
- The quest to legitimize longevity medicine
- First Genetically Engineered Pig Kidney Transplanted into Living Patient

🧠 Artificial Intelligence

- Mustafa Suleyman, DeepMind and Inflection Co-founder, joins Microsoft to lead Copilot
- Grok is open-source now
- Apple’s MM1 AI Model Shows a Sleeping Giant Is Waking Up
- Apple Is in Talks to Let Google Gemini Power iPhone AI Features
- Employees at Top AI Labs Fear Safety Is an Afterthought, Report Says
- India asks tech firms to seek approval before releasing 'unreliable' AI tools
- Google DeepMind’s new AI assistant helps elite soccer coaches get even better
- ‘A landmark moment’: scientists use AI to design antibodies from scratch

If you're enjoying the insights and perspectives shared in the Humanity Redefined newsletter, why not spread the word?

🤖 Robotics

- Autonomous auto racing promises safer driverless cars on the road
- A snake-like robot designed to look for life on Saturn's moon

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"

