Astral Codex Ten - Asterisk/Zvi on California's AI Bill

California’s state senate is considering SB1047, a bill to regulate AI. Since OpenAI, Anthropic, Google, and Meta are all in California, this would affect most of the industry. If the California state senate passed a bill saying that the sky was blue, I would start considering whether it might be green, or colorless, or maybe not exist at all. And people on Twitter have been saying that this bill would ban open-source AI - no, all AI! - no, all technology more complicated than a toaster! So I started out skeptical. But Zvi Mowshowitz (summary article in Asterisk, long FAQ on his blog) has looked at it more closely and found:

The bill applies to “frontier models” trained on > 10^26 FLOPs - in other words, models a bit bigger than any that currently exist. GPT-4 doesn’t qualify, but GPT-5 probably will. It also covers any model equivalent to these, i.e. anything that uses clever new technology to be as intelligent as a 10^26 FLOPs model without actually using that much compute. It places three¹ types of regulation on these models: First, companies have to train and run them in a secure environment where “advanced persistent threats” (e.g. China) can’t easily hack in and steal them². Second, as long as the model is on company computers, the company has to be able to shut it down quickly if something goes wrong. Third, companies need to test whether the model can be used to do something really bad. The bill’s three categories of really bad things are:

1. Helping create chemical, biological, radiological, or nuclear weapons that cause mass casualties.
2. Causing at least $500 million in damage through cyberattacks on critical infrastructure, such as the power grid.
3. Causing at least $500 million in damage by acting autonomously, in a way that would be a crime if a human did it.³
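To make the 10^26 FLOPs threshold a little more concrete: a widely used rule of thumb (an assumption here, not something the bill or this post specifies) estimates the training compute of a dense transformer as roughly 6 × parameters × training tokens. The minimal sketch below applies that estimate to two made-up training runs; the function names and model sizes are hypothetical illustrations, not figures for any real model.

```python
# Back-of-the-envelope check of whether a training run crosses the bill's
# 10^26 FLOP threshold. ASSUMPTION: training compute is estimated with the
# common "6 * N * D" rule of thumb for dense transformers; the bill itself
# does not prescribe this formula. All names here are hypothetical.

THRESHOLD_FLOPS = 1e26  # the compute threshold discussed in the post


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute in FLOPs: about 6 FLOPs per parameter
    per training token (~2 for the forward pass, ~4 for the backward pass)."""
    return 6 * params * tokens


def is_covered_by_compute(params: float, tokens: float) -> bool:
    """True if estimated training compute exceeds the threshold.
    Does NOT model the separate "equivalent capability" (benchmark) test."""
    return training_flops(params, tokens) > THRESHOLD_FLOPS


if __name__ == "__main__":
    # Hypothetical runs chosen to land on either side of the line;
    # they are not estimates for any real model.
    runs = {
        "hypothetical 100B params, 10T tokens": (100e9, 10e12),
        "hypothetical 1T params, 30T tokens": (1e12, 30e12),
    }
    for name, (n, d) in runs.items():
        flops = training_flops(n, d)
        print(f"{name}: ~{flops:.1e} FLOPs, covered: {is_covered_by_compute(n, d)}")
```

Under this rough estimate, the first run comes to about 6 × 10^24 FLOPs (well under the line) and the second to about 1.8 × 10^26 FLOPs (over it). Because the bill also covers models that match a 10^26 FLOPs model's capabilities with less compute, falling under the compute line would not by itself settle whether a model is covered.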

If the tests show that the model can do these bad things, the company has to demonstrate that it won’t, presumably by safety-training the AI and showing that the training worked. The kind of training AIs already have - the kind that prevents them from saying naughty words or whatever - would count here, as long as “the safeguards . . . will be sufficient to prevent critical harms.” So the bill isn’t about regulating deepfakes or misinformation or generative art. It’s just about nukes and hacking the power grid.

There are some good objections and some dumb objections to this bill. Let’s start with the dumb ones.

Some people think this would literally ban open-source AI. After all, doesn’t it say that companies have to be able to shut down their models? And isn’t that impossible if they’re open-source? No. The bill specifically says⁴ this only applies to the copies of the AI still in the company’s possession⁵. The company is still allowed to open-source it, and it doesn’t have to worry about shutting down other people’s copies.

Other people think this would make it prohibitively expensive for individuals and small startups to tinker with open-source AIs. But the bill says that only companies training giant foundation models have to worry about any of this. So if Facebook trains a new LLaMA bigger than GPT-5, they’ll have to spend some trivial-in-comparison-to-training-costs amount to test it in-house and make sure it can’t make nukes before they release it. But after they do that, third-party developers can do whatever they want to it - re-training, fine-tuning, whatever - without doing any further tests.

Other people think all the testing and regulation would make AIs prohibitively expensive to train, full stop. That’s not true either. All the big companies except Meta already do testing like this (Anthropic, Google, and OpenAI have each published their testing frameworks), and that testing already approximates what the bill requires. Training a new GPT-5 level AI is so expensive - hundreds of millions of dollars - that the safety testing probably adds less than 1% to the cost. No company rich enough to train a GPT-5 level AI is going to be turned off by the cost of asking it “hey can you create super-Ebola?” and putting the answer into a nice legal-looking PDF. This isn’t the “create a moat for OpenAI” bill that everyone’s scared of⁶.

Other people are freaking out over the “certification under penalty of perjury”. In some cases, developers have to certify under penalty of perjury that they’re complying with the bill. Isn’t this crazy? Doesn’t it mean that if you make a mistake about your AI, you could go to jail? This badly misunderstands how law works. Perjury means deliberately lying, which is hard to prove and so rarely prosecuted. More to the point, half of the stuff I do in an average day as a medical doctor is certified under penalty of perjury - filling out medical leave forms is the first one to come to mind. This doesn’t mean I go to jail if my diagnosis is wrong. It’s just the government’s way of saying “it’s on the honor system”.

What are some of the reasonable objections to this bill?

Some people think the requirement to prove the AI safe is impossible or nearly so. This is Jessica Taylor’s main point, which is certainly correct for a literal meaning of “prove”. Zvi points out that the bill only asks for “reasonable assurance”, which is a legal term for “you jumped through the right number of hoops”. In this case, the right number of hoops is probably doing the same kind of testing that OpenAI/Anthropic/Google are currently doing, or that the AI safety testing organization METR recommends. The bill gestures at the National Institute of Standards and Technology a few times here, and NIST just named one of METR’s founders as its AI safety czar, so I would be surprised if things didn’t end up going in this direction. METR’s tests are possible to pass, and many AI models have successfully passed earlier versions of them.

Other people are worried about a weird rule that you can’t train an AI if you think it’s going to be unsafe. After some simple points about having a safety policy set up before training, the bill adds that you should:

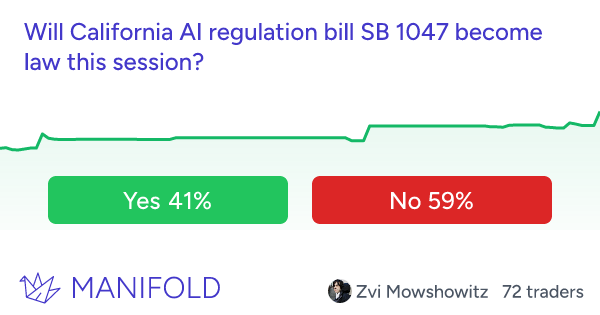

This makes less sense than all the other rules. You can test a model post-training to see if it’s harmful, but this seems to suggest you should know something before it’s trained. Is this a fully general “if something bad happens, we can get angry at you” clause? I agree this part should be clarified.

Other people think the benchmarking clause is too vague. The law applies to models trained with > 10^26 FLOPs, or any model that uses advanced technology to be just as good despite using less compute. Just as good how? According to benchmarks. Which benchmarks? The law doesn’t say. But it does say that the Department of Technology will hire some bureaucrats to give guidance on this. I think this is probably the only way to do it: if the law named any specific benchmark, it would be too easy to game. Every AI company already compares its models to every other company’s models on a series of benchmarks anyway, so this isn’t demanding they create some new institution. It’s just “use common sense, ask the bureaucrats if you’re in a gray area, and a judge will interpret it if it comes to trial”. This is how every law works.

Other people complain that any numbers in the bill that make sense now may one day stop making sense. Right now 10^26 FLOPs is a lot. But in thirty years, it might be trivial - within the range that an academic consortium or scrappy startup might spend to train some cheap ad hoc AI. Then this law would be unduly restrictive to academics and scrappy startups. I assume the numbers will be updated when they no longer make sense - California’s minimum wage was originally $0.15 per hour - but if you think the government will drop the ball on this, it might hamper AI development thirty years from now - if we’re still around.

Other people note that this will *eventually* make open source impossible. Someday AIs really will be able to make nukes or pull off $500 million hacks. At that point, companies will have to certify that their model has been trained not to do this, and that it will stay trained. But if it were open-source, then anyone could easily untrain it. So once models become capable of making nukes or super-Ebola, companies won’t be able to open-source them anymore without some as-yet-undiscovered technology to prevent end users from using these capabilities. Sounds . . . good? I don’t know if even the most committed anti-AI-safetyist wants a provably-super-dangerous model out in the wild.

Still, what happens after that? No cutting-edge open-source AIs ever again? I don’t know. In whatever future year foundation models can make nukes and hack the power grid, maybe the CIA will have better AIs capable of preventing nuclear terrorism, and the power company will have better AIs capable of protecting its grid. The law seems to leave open the possibility that in this situation, the AIs wouldn’t technically be capable of doing these things, and could be open-sourced. (Or you could base your Build-A-Nuke-Kwik AI company in some state other than California.)

Finally: last week I discussed Richard Hanania’s The Origins Of Woke, which claimed that although the original Civil Rights Act was good and well-bounded and included nothing objectionable, courts gradually re-interpreted it to mean various things much stronger than anyone wanted at the time. This bill tells the Department of Technology to offer guidance on what kind of tests AI companies should use. I assume their first guidance will be “the kind of safety testing that all companies except Meta are currently doing”, because those are good tests, and the same AI safety people who helped write those tests probably also helped write this bill. But Hanania’s book, and the process of reading this bill, highlight how vague and complicated all laws can be. The same bill could be excellent or terrible, depending on whether it’s interpreted effectively by well-intentioned people or poorly by idiots. That’s true here too.

The best response I can offer to this objection is that this bill seems better-written than most. Many of the objections to its provisions seem not to understand how law works in general (cf. the perjury section): the things they attack as impossible or insane or incomprehensibly vague are much easier and clearer than their counterparts in (let’s say) medicine or aerospace.

Future AIs stronger than GPT-4 seem like the sorts of things which - like bad medicines or defective airplanes - could potentially cause damage. This sort of weak regulation, which exempts most models and carves out a space for open-sourcing, seems like a good compromise between basic safety and protecting innovation. I join people like Yoshua Bengio and Geoffrey Hinton in supporting it.

Regardless of your position, I urge you to pay attention to the conversation, and especially to read Zvi’s Asterisk article or his longer FAQ on his blog. I think Zvi provides pretty good evidence that many people are outright lying about - or at least heavily misrepresenting - the contents of the bill, in a way that you can easily confirm by reading the bill itself. There will be many more fights over AI, and some of them will be technical and complicated. Best to figure out who’s honest now, when it’s trivial to check!

If you disagree, I’m happy to make bets on various outcomes, for example:

1 It also demands that compute clusters implement some Know Your Customer requirements, and creates an official State Compute Cluster for California. I’m ignoring these because nobody has expressed much of an opinion on them. The State Compute Cluster for California isn’t on anyone’s AI safety list, and I assume it’s part of some bargaining with some other interest group.

2 And, incidentally, where the AI can’t break out.

3 The difference between (2) and (3) is that (2) triggers if a malicious human tells the AI to do the hack, but (3) only triggers if the AI becomes sentient or something and commits the crime itself.

4 “Full shutdown means the cessation of operation of a covered model, including all copies and derivative models, on all computers and storage devices within custody, control, or possession of a person”, where “person” is elsewhere defined to include corporations. I agree this is a little ambiguous, but Asterisk talked to the state senator’s office and they confirmed that they meant the less-restrictive, pro-open-source meaning.

5 Is this a loophole that makes the law useless? I think no - as we’ll see later, companies won’t be able to open-source certain models that are proven to have very dangerous capabilities. This part is probably aimed at those.

6 AFAICT OpenAI and other big labs haven’t expressed a position on this bill, and I can’t guess what their position is.
