| | | Good Morning. In case you don’t follow Roaring Kitty on Twitter, he posted again for the first time in years … and all of a sudden, meme stocks seem to be back. (Roaring Kitty is credited with the rise of the meme stock way back in 2021.) | And OpenAI made its first big product unveil in a while (and Google tried its best to steal OpenAI’s thunder). | In today’s newsletter: | 🤝 Apple & OpenAI partnership is a ‘done deal’ 📱 Your Instagram posts power Meta’s AI models 📄 New Paper: AI and its risk to democracy 💬 OpenAI is making AI ‘from the movies’

| | Google @Google One more day until #GoogleIO! We’re feeling 🤩. See you tomorrow for the latest news about AI, Search and more. May 13, 2024 1.68K Likes 353 Retweets 121 Replies |

| | Apple & OpenAI partnership is a ‘done deal’ |  | Image Source: Apple |

| Bloomberg’s Mark Gurman reported over the weekend that Apple and OpenAI are “finalizing terms” for a partnership that would see OpenAI’s tech integrated into iOS 18. Apple has reportedly held similar talks with Google regarding its Gemini tech; these discussions remain ongoing. | Then, on Monday, Wedbush’s tech team referred to the pending partnership as a “done deal.” | Wedbush tech analyst Dan Ives expects Apple to officially unveil the partnership (and an OpenAI-enhanced Siri) on June 10, during Apple’s Worldwide Developers Conference. “Ultimately, an OpenAI partnership is much more significant and foundational for Cook's AI strategy than a Google Gemini deal,” Ives said.

| | Mark Gurman @markgurman This is the Apple AI strategy: A) On-device LLM (in house) B) Cloud-powered LLM (in house) C) Chatbot (OpenAI for sure, Google maybe). Apple isn’t building its own chatbot but knows the market wants it so it’s going elsewhere for it. It’s the same playbook as search. Dare W @darewecan @markgurman So is Apple abandoning their “ajax” ai & going all in with OpenAI or is OpenAI a stop gap until they catch up with their own AI? May 12, 2024 1.47K Likes 139 Retweets 56 Replies |

| Both Ives and Deepwater’s Gene Munster said Apple stands to make a lot of money from such a deal. Munster likened it to the Apple-Google search deal (Google pays Apple 36% of its search advertising revenue from Safari). | Apple did not respond to my request for comment. | | Your Instagram posts are giving Meta’s AI an edge |  | Image source: Meta |

| Meta’s Chief Product Officer Chris Cox, speaking at the Bloomberg Tech Summit last week, said that Meta has one big edge when it comes to its AI image generators: Instagram. | The company’s advantage is the “public images that have been shared on Facebook and Instagram,” Cox said. “We don’t train on private stuff, we don't train on stuff that people share with their friends; we do train on things that are public.” | Cox said that the sheer quantity and quality of Instagram as a dataset, with everything from photos of fashion to photos of people, is why Meta’s AI image generators are of such “amazing quality.” | Meta’s privacy policy: | Meta’s privacy policy states that it gets AI training data from a few sources: public information on the internet, licensed content and “information from Meta’s products and services.” It is unclear if photos posted on private accounts are included in the training data; Meta did not reply to a request for comment on this point.

| One more thing: | For a while now, our data has been scraped, packaged, and sold as a cost of admission to the internet. For years, we scrolled to the bottom of terms of service and clicked “I agree,” because if we didn’t agree, we couldn’t use Twitter, or YouTube, or insert any other app/website here. | Over the past 12 months, that system has gradually evolved into one where our content, in addition to our data, is being scraped as an increased cost of admission for using these platforms. They got us in; then they changed the rules. And at this point, I don’t think too many people are going through updates to Instagram’s terms of service. | | Are you okay with companies training their AI models on your social media content? | | | Together with Bright Data | Unlock the web and collect web data at scale with Bright Data, your ultimate web scraping partner. | | Access complex sites with the most advanced JavaScript frameworks with our robust web scraping solutions. | Award-Winning Proxy Network - Bypass IP blocks & CAPTCHAs Advanced Web Scrapers - From low-code environments to high-level programming Ready-Made and Custom Datasets - Access extensive datasets directly or request customized collections AI and LLM Ready - Equip your AI and language models with the diverse data they require

| Experience the Unstoppable – Try Bright Data Today | | New Paper: AI & the epistemic risk it poses to democracy |  | Image Source: Unsplash |

| The proliferation of AI that we are currently experiencing will have more than a few impacts: we might soon see fleets of cars that can drive themselves; we might soon see novel drugs that could be life-changing for countless people; and we are on the cusp of a world in which machines could become the middle man (thing?) between humans and information. | John P. Wihbey, an associate professor of media innovation and tech at Northeastern University, argued in a recent paper that an environment where human knowledge and information become “mediated by machines” is one that poses an epistemic risk to democracy. | Read the paper here. | Some highlights: | “The very structure of AI, namely the building of predictive models based on past data, is fundamentally in conflict with basic aspects of democratic life, which is inherently forward-looking.” He argues that the question at hand concerns the potential loss of societal knowledge as “machines begin assuming the tasks of refereeing public conversations.” The thing that he says will be lost is a touch of humanity: “what people think, how they argue and the preferences they reveal as they debate.”

| He says there must remain a space to “preserve human cognitive resources that are not substantially shaped by AI-mediating technologies.” |

| | 💰AI Jobs Board: | Applied Scientist: Microsoft · United States · Multiple locations, Hybrid · Full-time · (Apply here) Technology Consultant - Data & AI: Microsoft · United States · Multiple locations, Hybrid · Full-time · (Apply here) Applied Scientist - Artificial General Intelligence: Amazon · United States · Multiple Locations · Full-time · (Apply here)

| | 🗺️ Events: * | Ai4, the world’s largest gathering of artificial intelligence leaders in business, is coming to Las Vegas — August 12-14, 2024.

| | 🌎 The Broad View: | Microsoft and Amazon are collectively investing $5.6 billion in cloud/AI infrastructure in France (CNBC). The U.S. and China will meet in Geneva to discuss the risks of AI (Reuters). Apple & Google partner up to fight location stalking (Apple).

| *Indicates a sponsored link |

| Together with Superhuman | | Superhuman was already the fastest email experience in the world, designed to help customers fly through their inbox twice as fast as before. | We’ve taken it even further with Superhuman AI — an inbox that automatically summarizes email conversations, proactively fixes spelling errors, translates email into different languages and even automatically pre-drafts replies for you, all in your voice and tone. | Superhuman customers save 4 hours every week, and with Superhuman AI, they get an additional hour back to focus on what matters to them. | Get 1 month free of Superhuman |

| | OpenAI is making AI ‘from the movies’ |  | Created with AI by The Deep View. |

| The moment you’ve all been waiting for: it’s not Search, it’s not GPT-5 … It’s GPT-4o, an omni-modal evolution of ChatGPT that, according to Sam Altman, feels like the AI from the movie Her. The model can receive text, image and audio input and can produce similarly multi-modal output (through vocal conversations with users). | Key points: | GPT-4o will be rolled out for free to ALL users. The model, which Sam Altman called the “best model in the world,” is twice as fast as GPT-4 Turbo and half the cost in the API. During the demo, the new model appeared to interpret an OpenAI employee’s emotion based on a selfie. The demo also featured live, instantaneous language translation. Though OpenAI acknowledged the new safety challenges posed by the audio component of the model, it said that it has established (vague) safety guardrails to protect against misuse and is shipping the product (with voice limitations) anyway. A desktop app is also incoming.

| | OpenAI @OpenAI Say hello to GPT-4o, our new flagship model which can reason across audio, vision, and text in real time: Text and image input rolling out today in API and ChatGPT with voice and video in the coming weeks. openai.com/index/hello-gp… May 13, 2024 24.4K Likes 5.45K Retweets 1.11K Replies |

| AGI or just a lot of training data? | GPT-4o appears to be another step toward the appearance of synthetic reasoning. | We still don’t know the specifics of OpenAI’s training set, which limits scientists’ ability to understand just how powerful (or not) its models are, and which also raises questions about data privacy and copyright violation. As software engineer Grady Booch said: “By the very nature of its architecture, no LLM can reason.” “One might see dim reflections of inductive and deductive reasoning only in so far as the training data set contains such examples, but abductive reasoning, indeed any color of reasoning that requires the production of new abstractions, is unreachable…” “To think this is an AGI or even a path to AGI is folly.”

| A couple of thoughts here: | The first thing that occurred to me is that this is a demo; often, demos don’t translate too well to everyday use. I’m very curious to see just how usable this is as it rolls out (let me know if you start messing around with it). | The other first thing that occurred to me revolves around hallucinations, which are a reality of the LLM architecture. This multimodal expansion seems to create a series of new avenues for incorrect information to make its way to people, e.g. through translation, or through using ChatGPT as a math tutor. | Selling reliability on a potentially unreliable tool seems like something other than the best path forward. That said, yeah, the demo was impressive, specifically the speech synthesis; very fast and very smooth. | “If OpenAI had GPT-5, they would have shown it,” cognitive scientist Gary Marcus said. “They don’t have GPT-5 after 14 months of trying … evidence that we may have reached a phase of diminishing returns.”

| Which image is real? | Image 1 | Image 2 |

| | Yoodli: An AI tool to improve your speaking skills. Gryzzly: A tool to simplify tracking project time. Emma AI: A tool to create a custom AI assistant.

| Have cool resources or tools to share? Submit a tool or reach us by replying to this email (or DM us on Twitter). |

| SPONSOR THIS NEWSLETTER | The Deep View is currently one of the world’s fastest-growing newsletters, adding thousands of AI enthusiasts a week to our incredible family of over 200,000! Our readers work at top companies like Apple, Meta, OpenAI, Google, Microsoft and many more. | If you want to share your company or product with fellow AI enthusiasts before we’re fully booked, reserve an ad slot here. |

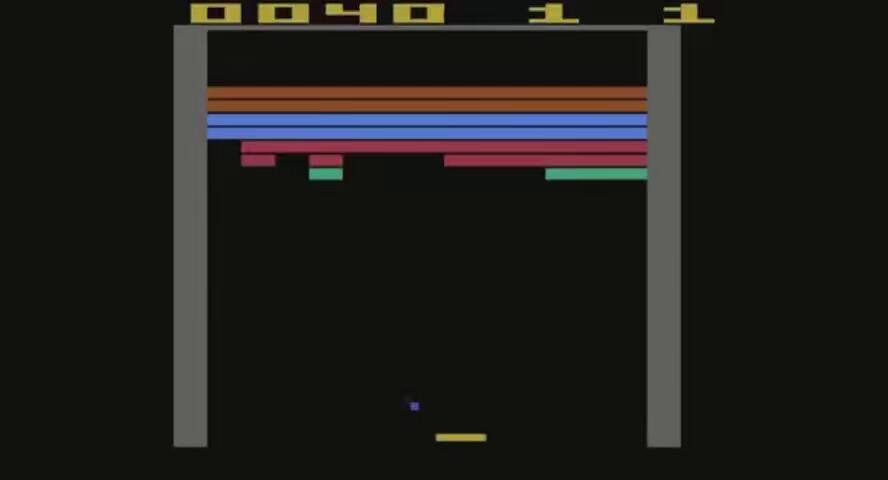

| One last thing👇 | Jon Erlichman @JonErlichman On this day in 1976: Breakout was released. Atari engineer Steve Jobs asked friend Steve Wozniak to help design a game. Wozniak, who worked at HP, created it during his spare time. It was a hit. And it inspired Woz to build a computer. May 13, 2024 163 Likes 30 Retweets 9 Replies |

| That's a wrap for now! We hope you enjoyed today’s newsletter :) | What did you think of today's email? | | We appreciate your continued support! We'll catch you in the next edition 👋 | -Ian Krietzberg, Editor-in-Chief, The Deep View |
