Humanity Redefined - Let's look at GPT-4o without the hype

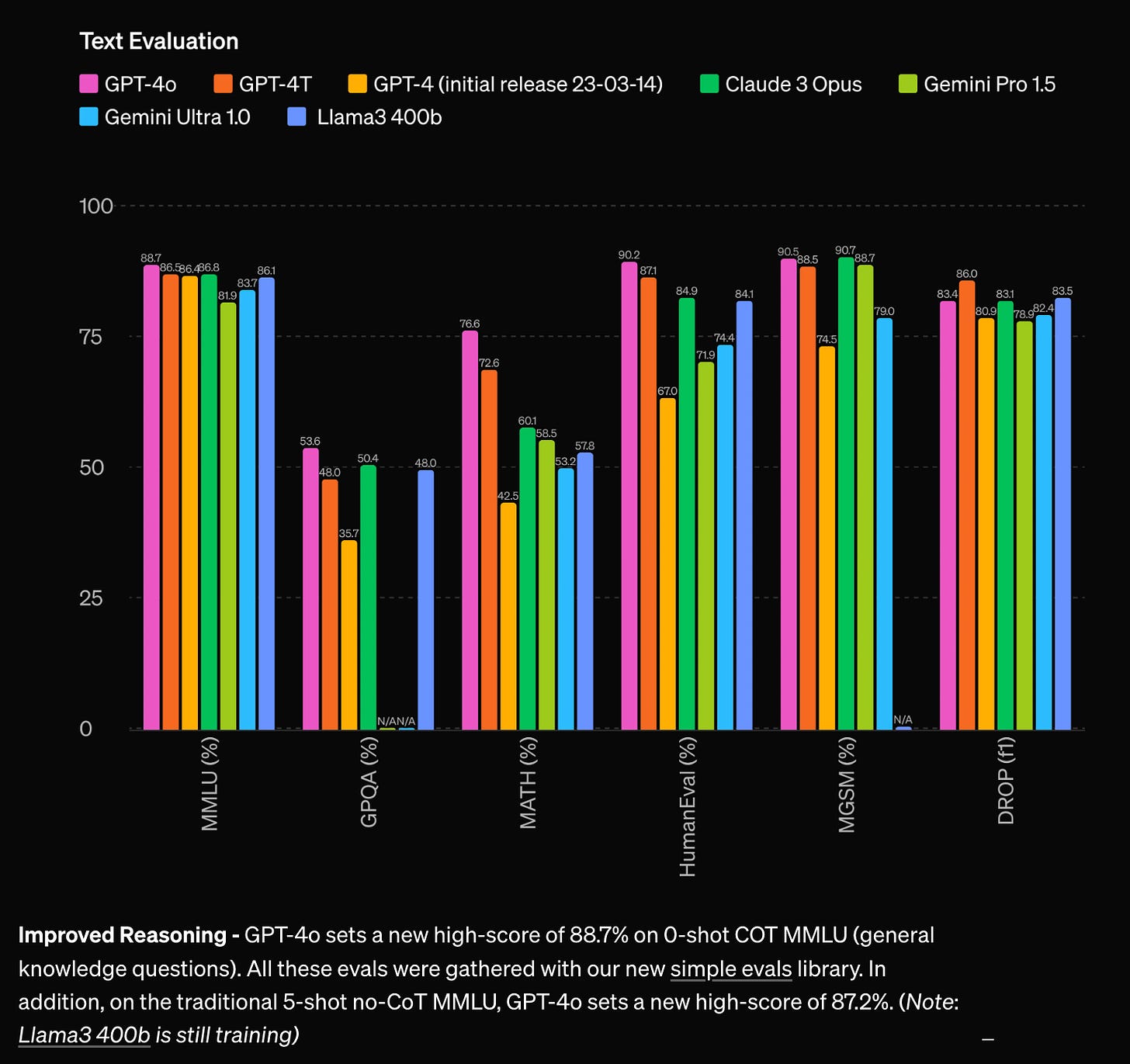

OpenAI released its new flagship model and redefined what "open" in OpenAI means

OpenAI has been stirring the pot recently. At the beginning of May, a mysterious new model named GPT-2 Chatbot appeared out of nowhere and climbed to the top of the LMSYS Arena benchmark. All signs indicated that this model had links to OpenAI. Next, rumours began circulating about OpenAI working on an AI-powered search engine to challenge Google’s dominance in search. Lastly, OpenAI announced its Spring Update, scheduled just a day before the Google I/O event. But instead of launching a new search engine and spoiling Google’s big event, OpenAI revealed GPT-4o, its new best model.

Let’s take a closer look at what OpenAI brings to the table, how the new model compares to its competitors, and how the release of GPT-4o redefines what “open” in OpenAI means.

The “o” in GPT-4o stands for “omni,” which means "all" or "every." It is a good choice of word, as GPT-4o is OpenAI’s first natively multimodal model and joins Google Gemini in this category. Unlike previous models, where image or audio processing modules are attached to the text model, GPT-4o combines all those modules into one model. The new model can not only understand and generate text but also analyze images, generate code, and take in video input, for example from a smartphone camera, and answer questions about it.

During the live stream, OpenAI employees showcased what the new model is capable of with demos mostly focused on voice and video interactions with the model, asking it to answer questions in natural language, describe what it sees through a smartphone camera, solve math problems, and analyze code. What impressed me was the speed at which GPT-4o returned answers in those demos. OpenAI says GPT-4o responds to audio input in 320 milliseconds on average. For comparison, GPT-3.5 and GPT-4 in voice mode take about 2.8 seconds and 5.4 seconds on average, respectively, to respond.
What OpenAI showed looks impressive. GPT-4o feels like a true AI assistant from sci-fi movies and immediately evoked the chatbot from the movie Her, which seems to be a big inspiration for people at OpenAI. Thanks to the improvements in response time, conversations with GPT-4o feel more natural. The AI replies quickly, making it feel like you are speaking to a human. You can also interrupt the AI mid-sentence: it then stops talking and listens to what you have to say. What also helps sell this illusion of talking to a human is the fact that ChatGPT has a personality. It replies in a helpful, cheerful, and upbeat voice. It also sings, jokes, and laughs.

GPT-4o is OpenAI’s new best model

Alongside demos and example GPT-4o responses, OpenAI published benchmark results comparing its newest model to GPT-4 Turbo, the original GPT-4, and its competitors from Google, Meta, and Anthropic. Overall, the picture that emerges from these results is that GPT-4o is the new best large language model, but it is not a generational leap like the jump from GPT-3.5 to GPT-4. GPT-4o is an improvement over GPT-4, but the competitor models are not that far behind.

In text evaluation, GPT-4o scores better than GPT-4 Turbo and its competitors, Anthropic’s Claude 3 Opus and Google’s Gemini 1.5 Pro, in almost all tests. Only in the DROP test, which measures a model’s reasoning abilities with reading comprehension, did GPT-4o not emerge as the best model. Furthermore, the difference between GPT-4o and GPT-4 Turbo is not that significant in some tests.

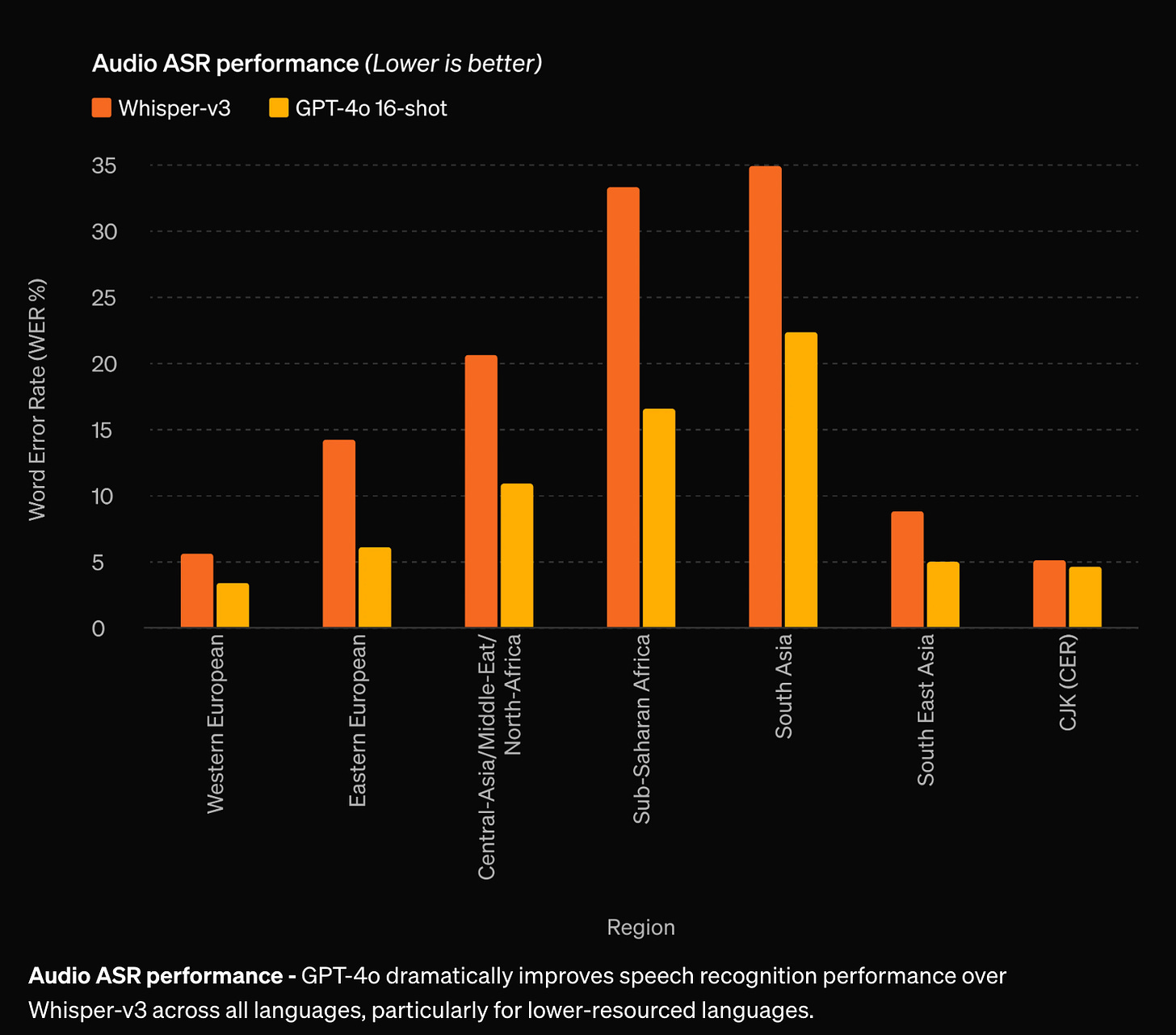

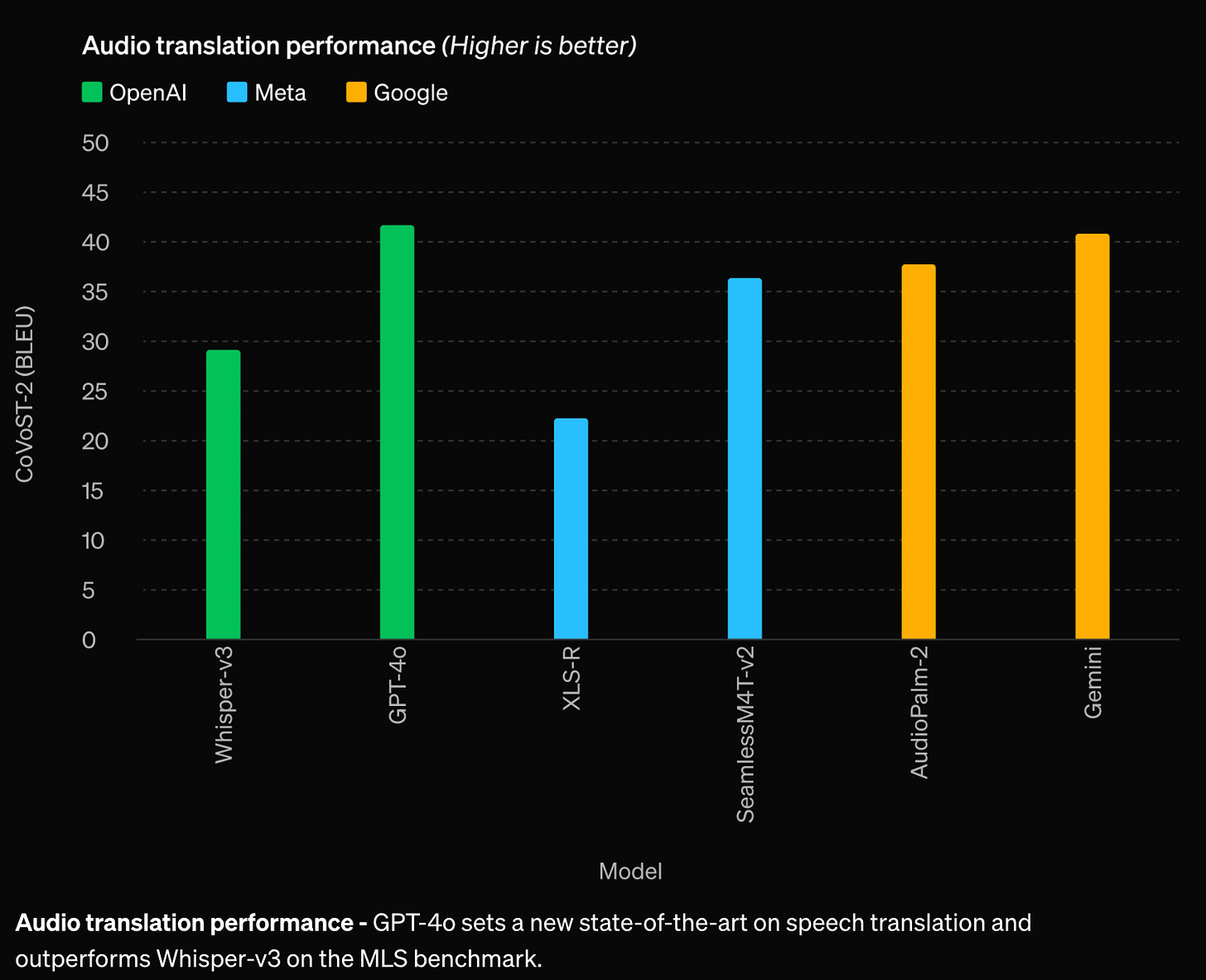

In audio tests, GPT-4o is better than OpenAI’s speech-to-text model Whisper-v3 and a bit better than Google’s Gemini.

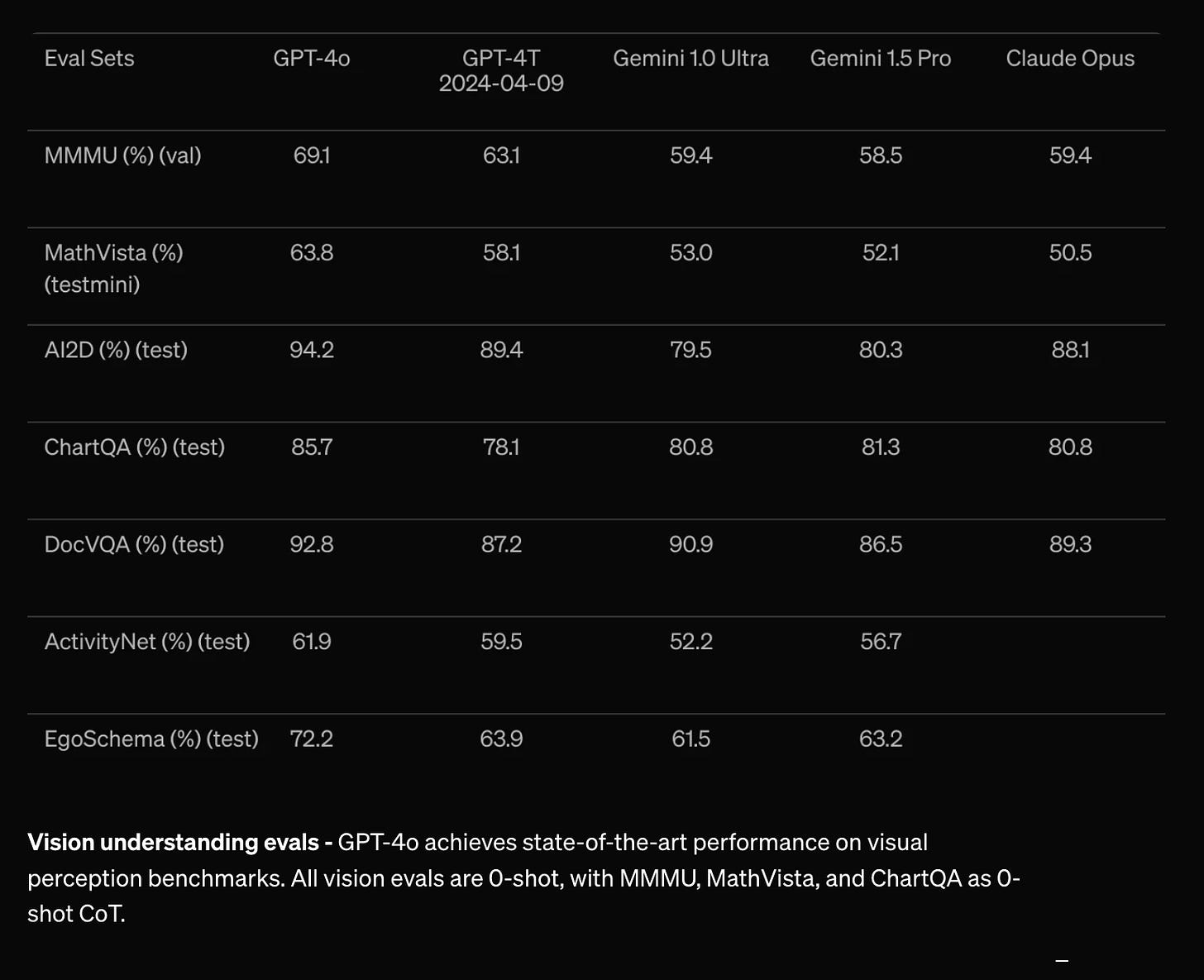

In visual understanding evals, GPT-4o beats every model, according to the benchmark results provided by OpenAI.

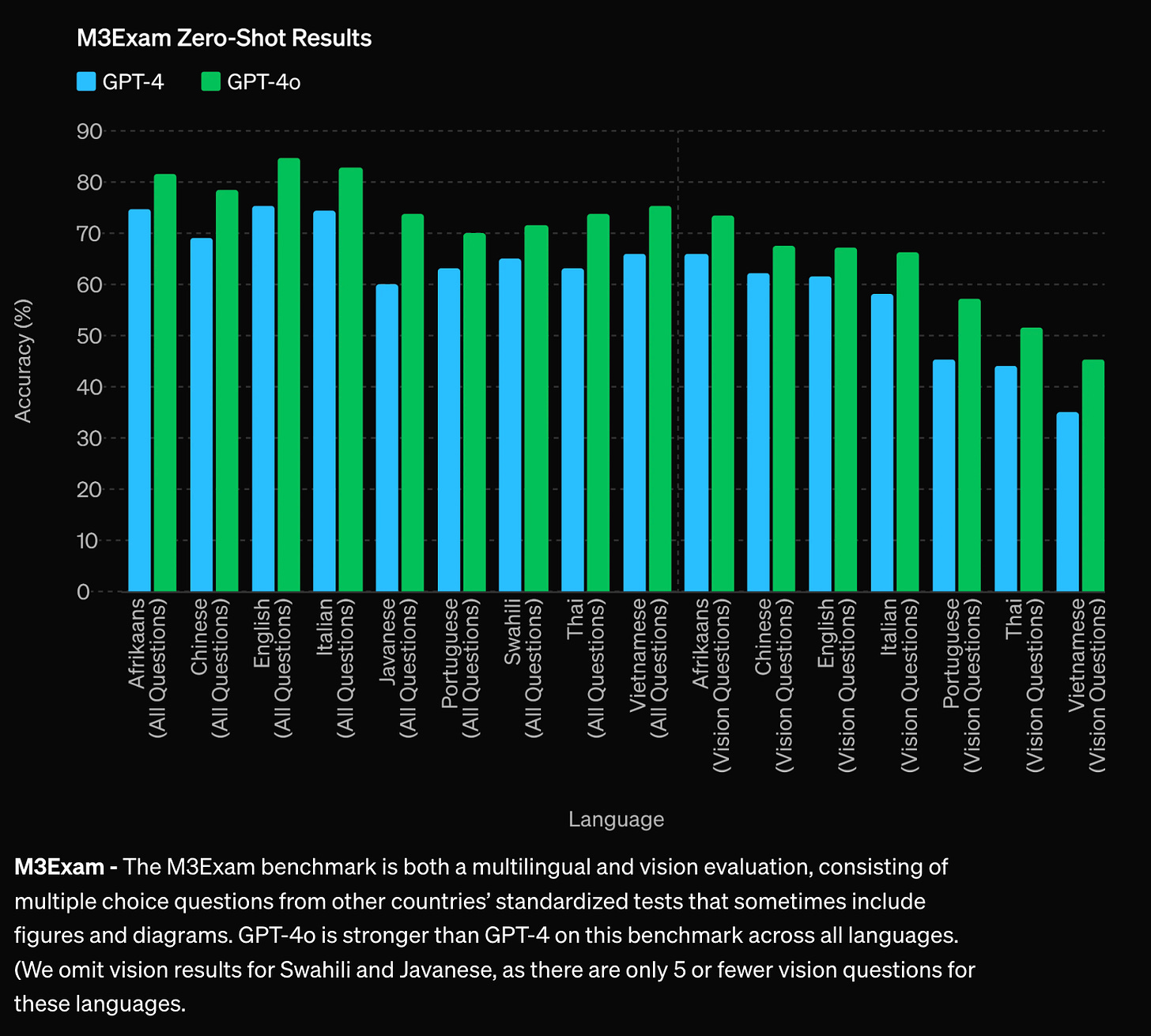

When it comes to multilingual capabilities, GPT-4o scores better than GPT-4. The new model introduces a new tokenizer which, according to OpenAI, requires fewer tokens for many languages, such as Gujarati, Hindi, Arabic, and Chinese. This should make conversations in non-English languages not only cheaper but also faster.

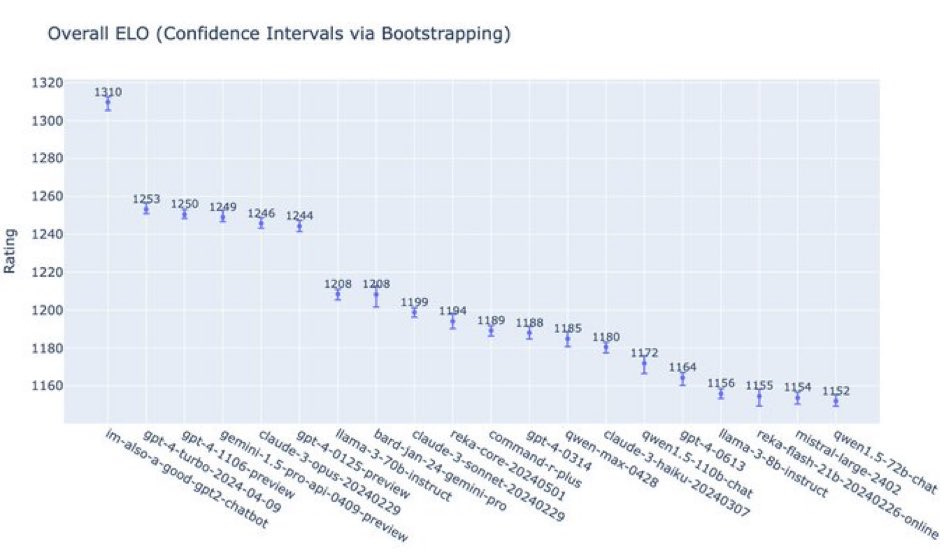

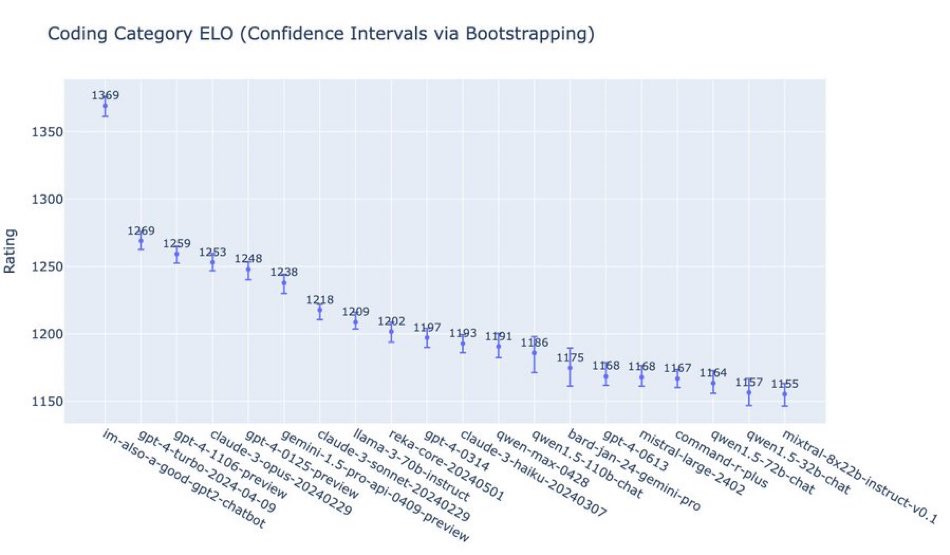

In the post announcing GPT-4o, OpenAI mentions that the new model is twice as fast as GPT-4 Turbo in the OpenAI API. The price for the API has also dropped by 50%, which might mean the new model is more efficient and cheaper to run. The performance improvements were somewhat expected with the introduction of a natively multimodal model. Large language models tend to perform better when new modalities are added. Another factor contributing to the improved performance could be the hardware running GPT-4o. At the end of the keynote, Mira Murati thanked Jensen Huang and Nvidia for providing “the most advanced GPUs to make this demo possible.”

Sam Altman and some OpenAI employees have also shared the graphs below on X. These graphs show how GPT-4o (then hiding as the “gpt2-chatbot” model) compares to other models in the LMSYS Arena benchmark. LMSYS Arena works by presenting a human evaluator with two models, and the human’s task is to choose which model’s output they prefer. The models are then ranked using the Elo ranking system. A 100-point difference in Elo points between model A and model B means that when pitted against each other, model A is expected to win in about 64% of matches.
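To see where the 64% figure comes from, here is a minimal sketch of the standard Elo expected-score formula. It assumes the conventional base-10 logistic curve with a scale of 400 points; the function name and the example ratings are made up for illustration and are not taken from the LMSYS leaderboard.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected win probability of A against B under the standard Elo formula.

    Assumes the conventional 400-point scale and base 10.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Hypothetical ratings, chosen only to illustrate different rating gaps.
for gap in (0, 50, 100, 200):
    probability = elo_expected_score(1000 + gap, 1000)
    print(f"gap of {gap:>3} points -> A expected to win {probability:.1%} of matches")
```

With a 100-point gap the formula gives roughly 0.64, which matches the figure quoted above. Note that the expected score depends only on the rating difference, not on the absolute ratings.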

In my own tests, I noticed that GPT-4o indeed generates answers faster compared to GPT-4. For some text inputs, GPT-4 sometimes paused a little when producing tokens. I haven’t seen similar behaviour with GPT-4o yet. The quality of answers is good for both models, but the ones from GPT-4o look better.

What does “open” in OpenAI now mean?

The release of GPT-4o has also clarified, or redefined, what “open” in OpenAI means. When OpenAI was founded in 2015, “open” in OpenAI meant openness in sharing research results. This ethos of scientific openness stood in contrast to other AI labs that preferred to keep the details of their work to themselves. However, in recent years, OpenAI started to move away from its original values. In 2019, OpenAI refused to release GPT-2 to the public, saying it was “too dangerous.” Then the company gradually stopped disclosing the details of its models. The paper describing GPT-3 still mentioned the size and other details about the model, but the paper for GPT-4 did not contain information about the size of the model or its architecture. We now know the size of GPT-4 and roughly its architecture, but that information was not revealed by OpenAI; it was casually dropped by Jensen Huang, the CEO of Nvidia, in a keynote introducing Blackwell GPUs. The papers describing the AI models started to contain less and less information about the models and started to look more like marketing material disguised as research papers. The paper for GPT-4o has not been released so far.

With the gradual transition from an open AI research lab to a more closed-off tech company, the word “open” in OpenAI started to be questioned. Some even called for the company to rename itself to ClosedAI to better reflect what it has become. OpenAI has found itself in a situation where it had to justify the use of the word “open” in its name. “Open” in OpenAI now means something different than it did when the company was founded.
On his blog, Sam Altman writes that a key part of OpenAI’s mission is “to put very capable AI tools in the hands of people for free (or at a great price)”. Being “open” does not mean open source, open weights or open research anymore. Being “open” now means giving access to state-of-the-art AI models to as many people as possible.

OpenAI democratises access to advanced AI for everyone

With this new definition of what “open” in OpenAI means, OpenAI has chosen to make GPT-4o available for everyone for free. Of everything announced during the Spring Update, this will probably have the biggest impact on the AI landscape. Previously, OpenAI was giving access only to GPT-3.5 in the free version of ChatGPT. The more advanced GPT-4 was kept behind a monthly subscription. Other companies, like Google and Anthropic, adopted the same approach: tease everyone with a free but weaker model and keep the stronger model behind a paywall. With the release of GPT-4o, OpenAI is throwing that business model out of the window. Everyone with a free ChatGPT account will have access to GPT-4o, the most powerful model OpenAI has to offer, including the ability to generate code and upload photos and files to ask questions about them in multiple languages. Free users will also get access to GPTs and the GPT Store. In other words, free users are getting everything ChatGPT Plus users were paying for. The only benefit of having ChatGPT Plus is a message limit up to five times higher than that of free users, with Team and Enterprise users getting even higher limits.

The prices for using GPT-4o through the OpenAI API have also dropped substantially. For using GPT-4 Turbo, OpenAI charged $10 per 1 million input tokens and $30 per 1 million output tokens. GPT-4o costs half of that: $5 per 1 million input tokens and $15 per 1 million output tokens. The new API prices are lower than what Anthropic charges for accessing Claude 3 Opus via API: $15 per 1 million input tokens and $75 per 1 million output tokens.
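To make these per-million-token prices more tangible, the sketch below converts them into the cost of a single request. The prices are the ones quoted above; the dictionary keys are just labels for this example rather than official API model identifiers, and the request size of 2,000 input tokens and 500 output tokens is an arbitrary assumption.

```python
# Prices in USD per 1 million tokens as (input, output), as quoted in the text.
# The keys are illustrative labels, not necessarily the official API model names.
PRICES_PER_MILLION = {
    "gpt-4-turbo": (10.00, 30.00),
    "gpt-4o": (5.00, 15.00),
    "claude-3-opus": (15.00, 75.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of one request for the given token counts."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical request: 2,000 input tokens and 500 output tokens.
for model in PRICES_PER_MILLION:
    print(f"{model}: ${request_cost(model, 2_000, 500):.4f}")
```

Because output tokens are priced several times higher than input tokens for all three models, the gap between providers widens for workloads that generate long responses.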
However, both models are still more expensive than Google’s Gemini 1.5 Pro, which costs $3.50 per 1 million input tokens and $10.50 per 1 million output tokens. Even though Gemini might be the weakest of these three models, it offers a massive 1 million token context window (soon to be 2 million), while OpenAI and Anthropic offer 128k and 200k, respectively.

The question I have here is why OpenAI is giving away GPT-4o for free. In the post announcing GPT-4o, OpenAI writes its mission “includes making advanced AI tools available to as many people as possible”. However, as Sam Altman writes in the blog post I mentioned earlier, “we [OpenAI] are a business”. Allowing everyone to experience GPT-4 level intelligence aligns with OpenAI’s mission, but it is hard for me to believe they did it for altruistic reasons. Apart from messing with the competitors, I see two reasons why OpenAI opened GPT-4o to everyone.

The first reason is to grow the number of monthly active users. When ChatGPT was released at the end of 2022, it experienced massive growth and public interest. The service reached 100 million users in two months, setting the record for the fastest-growing user base at that time. OpenAI is proud of that and Mira Murati mentioned this during the keynote. However, the number of active users who use ChatGPT on a regular basis is most likely much, much lower. By making GPT-4o available in the free version of ChatGPT, OpenAI hopes to bring as many people as possible back to using ChatGPT as well as attract new users who have never before used an AI chatbot. The goal is, as Altman writes, to deliver “outstanding AI service to (hopefully) billions of people”.

The second reason could be that a better model is coming soon, one that justifies subscribing to ChatGPT Plus and paying $20 per month to access it.

What’s next?

OpenAI promised to roll out GPT-4o and everything that was presented during the demos in the next few weeks.
At the time of writing, I have access to GPT-4o on my ChatGPT Plus account, but I don’t see the new model on my free test account. Also, I wasn’t able to recreate some of the voice interactions presented during the Spring Update, such as interrupting the model mid-sentence. By the time you are reading this, the situation might have changed.

There is also a new and official ChatGPT desktop app. At the moment, it is only available for macOS and for ChatGPT Plus users. A Windows app is scheduled to be released later this year.

With GPT-4o out, the question now is: what’s next for OpenAI? As Mira Murati said at the end of the Spring Update, OpenAI will soon share an update on its progress towards “the next big thing”. The obvious next big thing would be GPT-5, which is rumoured to be released sometime around the end of this year or early 2025. There are also rumours that GPT-5 could be partially released ahead of the full release in something that might be called GPT-4.5. Although GPT-4o is a step up compared to GPT-4 Turbo, I don’t think the jump was big enough for the newly revealed model to deserve the name GPT-4.5.

While top AI researchers tirelessly work on the next breakthrough to deliver a new level of performance, changes like improving the response time to the point where conversation with the AI feels like talking to a human may have the biggest impact on the usability and public perception of GPT-4o and ChatGPT. As Charlie Guo writes over on Artificial Ignorance in his article about GPT-4o, we are on the brink of the perception of AGI. GPT-4o is not leagues ahead of other models in terms of performance, but it feels better. It feels like the AI assistants we have seen in sci-fi movies.
Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support! My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"
