Humanity Redefined - Apple Intelligence is different

An in-depth look into Apple Intelligence and what Apple is promising with "AI for the rest of us"

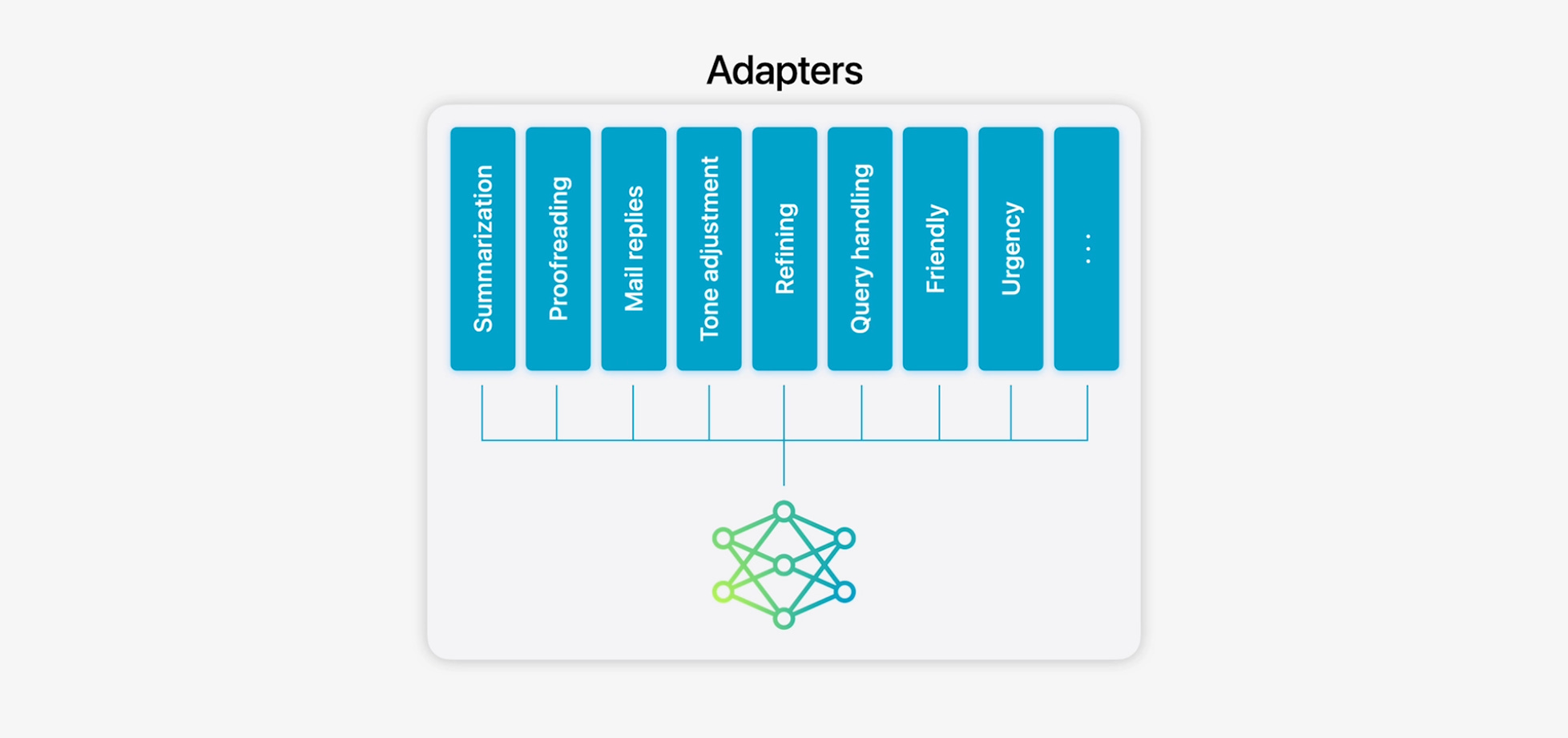

When OpenAI was busy experiencing explosive growth and Google was trying to get itself together in 2023, Apple was quiet. That, however, does not mean Apple was doing nothing. Behind the scenes, Apple was making moves. In 2023, Apple acquired 32 AI startups, more than any other big tech company. Then leaks started to emerge hinting that Apple was cooking something up at its headquarters in Cupertino. The closer we got to WWDC 2024, the more certain it became that Apple was getting seriously into AI.

In this article, we will take a closer look at what Apple brings to the generative AI revolution with Apple Intelligence. We will also put everything that Apple announced in context and explain how it could impact the AI industry.

Apple already makes heavy use of machine learning and AI algorithms in its products. Every photo taken with an iPhone goes through a complex computational pipeline to enhance the image. Machine learning is also found in noise-cancelling AirPods and in Apple Health, where it integrates data from multiple sensors and presents it in an easy-to-digest form. Every time iOS suggests a word or corrects spelling, a machine learning algorithm is behind it.

Unlike other tech companies, Apple does not like to throw technical details and jargon at people. Instead, it focuses on experiences or coins its own words and phrases. The same pattern was visible in the first hour of the WWDC 2024 opening keynote, where Apple presented new features coming soon to iOS, iPadOS and macOS. In the first half of the keynote, the words “AI” or “artificial intelligence” were not said even once. However, AI and machine learning were featured over and over, if you knew what to look for. Math Notes, the feature where you can handwrite equations in the new Calculator app and have the app solve them: that’s machine learning. New features that make handwriting a little bit tidier: that’s machine learning.
Removing objects from photos, page summaries in Safari, and voice isolation in AirPods: that’s machine learning, too. After all the updates to iOS, iPadOS, and macOS were done, over an hour into the keynote, Tim Cook said the words “artificial intelligence” for the first time and introduced Apple Intelligence, “AI for the rest of us.”

Apple Intelligence: AI for the rest of us

Apple Intelligence is not a single AI-powered app like ChatGPT. Instead, it is a suite of AI tools and features sprinkled around iOS, iPadOS and macOS. Apple designed and built its personal intelligence system to be powerful, intuitive, integrated, personal and private.

At the heart of Apple Intelligence is the new Siri. Even though Siri, released in 2011 with the iPhone 4S, was the first mainstream AI assistant on a smartphone, it did not live up to the high expectations. When more intelligent assistants started to show up, first Amazon’s Alexa and later OpenAI’s ChatGPT, the gap between Siri and its competitors became massive. With Apple Intelligence, Siri gets a long-overdue makeover and becomes smarter. Apple says that thanks to the Apple On-Device model (more on that later), Siri is now much better at understanding human language. Additionally, Siri can now see and understand what is on the screen. It can also pull data from notes, emails, calendars, text messages, and more, and use all of that information to do useful things.

In addition to the new Siri, Apple Intelligence brings new tools and features to the Apple ecosystem. Writing Tools help proofread, summarise and perform other actions on text. Smart Reply and Priority Messages will help manage and reply to emails, messages and notifications. Apple Intelligence also introduces image generators, such as Image Playground, which can generate images from text or remix existing images in one of three styles: animation, illustration, or sketch (although the quality of these images is far behind what Midjourney, DALL·E, or Imagen can do).
Interestingly, Apple does not allow the generation of photorealistic images. This might be a deliberate choice by Apple or due to the model’s limitations, and we will see if and how that changes in the future.

Apple is famous for joining major tech trends late. The iPod, iPhone, iPad, Apple Watch, and the recently released Apple Vision Pro were not the first products in their respective categories. But when Apple enters a new domain, it brings something new, something different. Apple Intelligence is no exception.

From what Apple has shown so far, Apple Intelligence is shaping up to be the best consumer AI on the market. It looks like the best-executed integration of AI features we’ve seen so far. It feels convenient and polished. Apple knows how to hide technical complexity behind intuitive interfaces. With deep integration into the Apple ecosystem and access to personal data, it might even prove to be useful. And Apple Intelligence will be available for free (assuming you have a supported device, which means an iPhone 15 Pro, an iPhone 15 Pro Max, or an iPad or Mac with an M1 chip or later).

It remains to be seen how well Apple Intelligence will perform when real people get their hands on it. But if it does perform well, and if Apple delivers what it promised, it will give millions of people access to generative AI tools and to a capable AI assistant.

The models powering Apple Intelligence

Apple briefly touched upon what Apple Intelligence looks like under the hood during the WWDC 2024 opening keynote and revealed more details during the Platform State of the Union and in a post over at Machine Learning at Apple. Apple Intelligence is a three-tier system consisting of on-device foundation models, larger models running on Private Cloud Compute, and third-party models.

Apple On-Device

Let’s start with the Apple On-Device model. Apple engineers were given a challenging task: to fit a powerful and energy-efficient foundation model into an iPhone.
Apple wanted to ensure privacy, low latency, and a good user experience, which meant that as many operations as possible had to be performed on the device. Apple engineers developed a new foundation model with about 3 billion parameters, called Apple On-Device, which includes an interesting feature they call adapters. With only 8GB of RAM available, they had to be clever. Adapters are a smart solution. Instead of keeping a separately fine-tuned copy of the model for every task, the On-Device model keeps one shared set of base weights in memory and plugs small, task-specific adapters into it. One adapter excels at summarising, another at proofreading, and so on. Adapters can be loaded and swapped on the fly, so the model only needs the small adapter for the task at hand on top of its base weights.

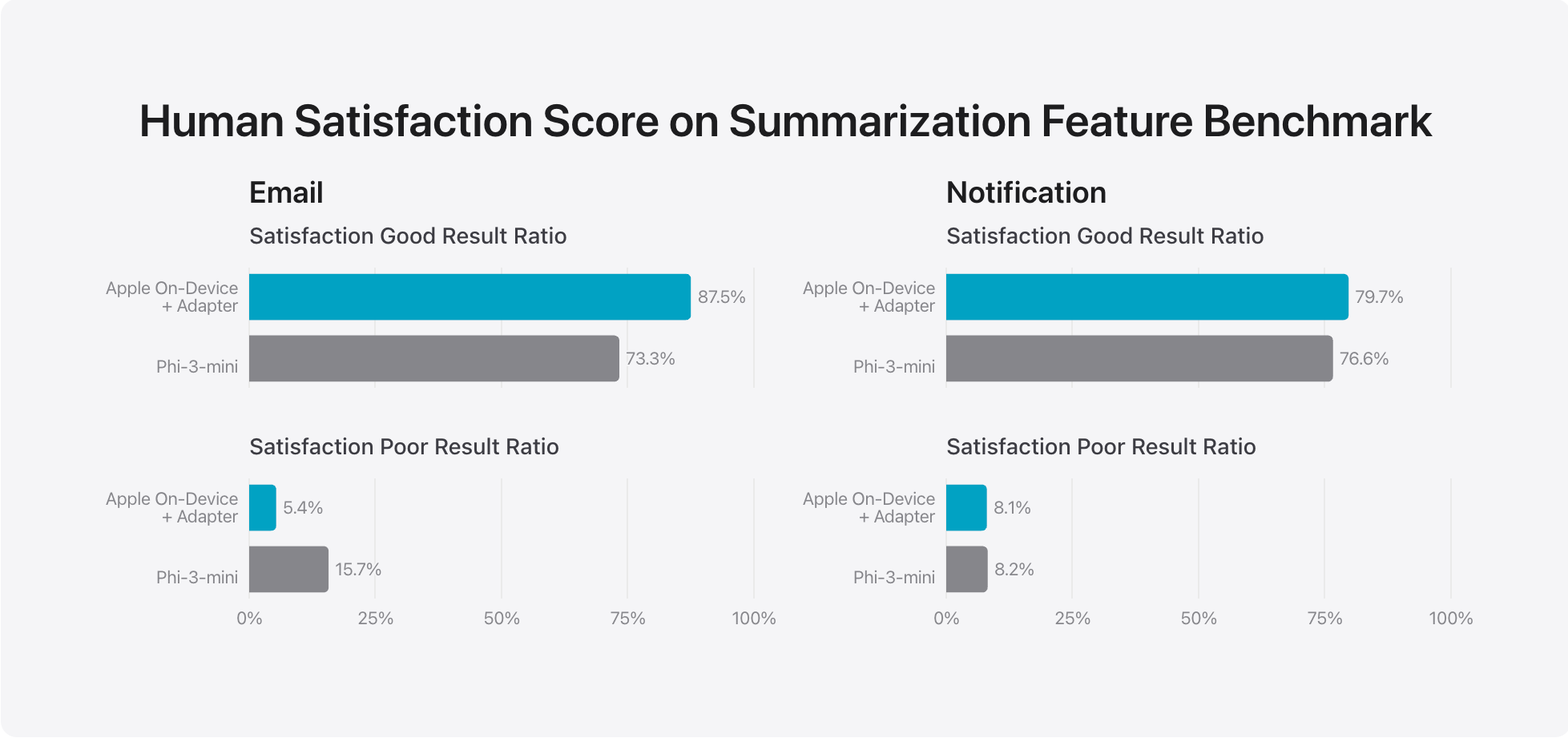

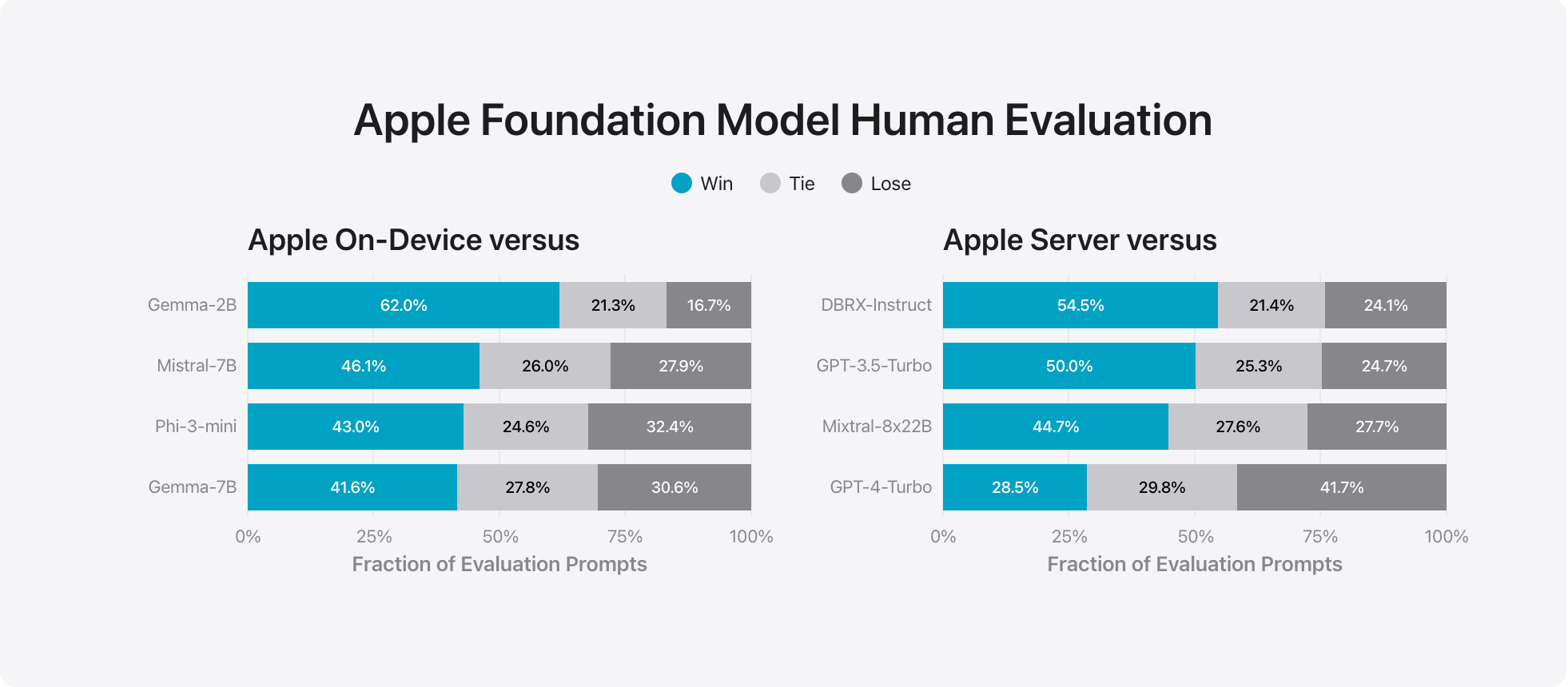

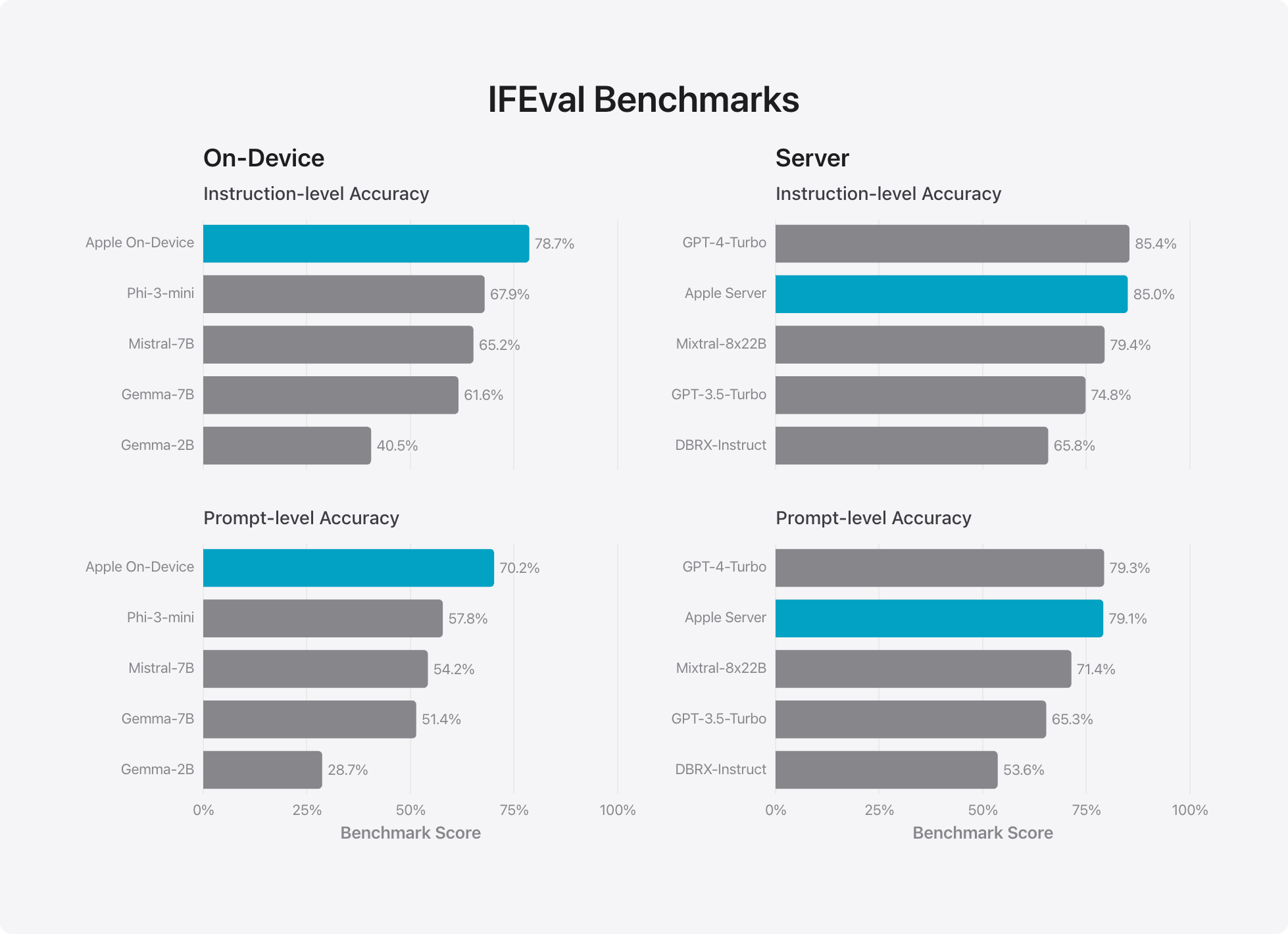

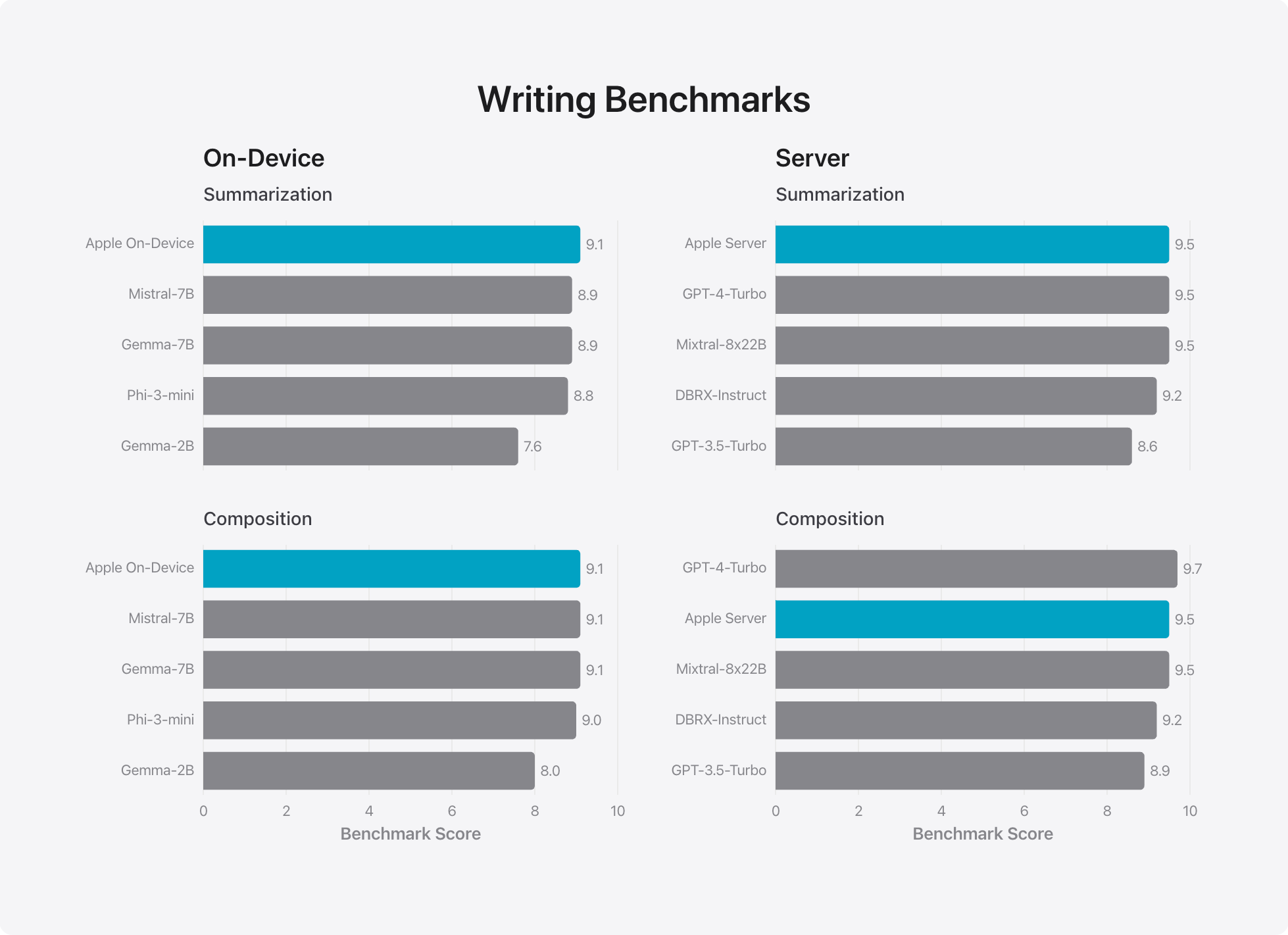

With this approach, the On-Device model can quickly specialise for a given task, which Apple says lets it outperform comparable models. After further optimisation, the On-Device model running on the iPhone 15 Pro achieved a time-to-first-token latency of about 0.6 milliseconds per prompt token and a generation rate of 30 tokens per second. Those are quite impressive numbers for a model running on an iPhone, rather than on a machine learning workstation with a dedicated GPU or on a server.

Apple has not stated it clearly anywhere, but judging by the post introducing Apple’s foundation models and the tests that Apple published (more on them later), it is almost certain that Apple On-Device is a text-only model. And since only English will be supported when Apple Intelligence is released this autumn, it is fair to assume it is not a multilingual model yet. Additionally, we don’t know how large its context window is. It is also unknown how much of OpenELM, Apple’s open-source model, is integrated into Apple On-Device.

The On-Device model is designed to handle simple tasks, so I don’t expect it to be able to summarise pages upon pages of text. More demanding tasks will need to be processed off the device by a larger and more capable model, on a server running Apple’s Private Cloud Compute.

Private Cloud Compute and Apple Server

Private Cloud Compute (PCC) is Apple’s dedicated cloud infrastructure designed to serve Apple’s larger AI models. PCC was built from the ground up with privacy and security in mind to run the Apple Server model. It runs on servers with Apple Silicon chips and custom software instead of industry-standard tools. Apple says its custom tools are designed to prevent privileged access, such as via a remote shell, that could expose user data. I hope Apple can back these claims up. My experience as a software engineer tells me that these kinds of statements often become invalid sooner or later.
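Apple has not said how Apple Intelligence decides where a request runs. The sketch below only illustrates the three-tier flow described in this article: use the on-device model when possible, fall back to the larger model on Private Cloud Compute, and hand off to a third-party model only after the user explicitly agrees. The `complexity` score, the thresholds, and every name here are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Tier(Enum):
    ON_DEVICE = "on-device model"
    PRIVATE_CLOUD = "Private Cloud Compute"
    THIRD_PARTY = "third-party model"
    DECLINED = "not processed"


@dataclass(frozen=True)
class Request:
    text: str
    # Hypothetical difficulty score in [0, 1]; Apple has not said how it estimates this.
    complexity: float


# Hypothetical cut-offs, chosen only for illustration.
ON_DEVICE_LIMIT = 0.3
PRIVATE_CLOUD_LIMIT = 0.7


def route(request: Request, ask_user: Callable[[str], bool]) -> Tier:
    """Send a request to the most local tier that can handle it."""
    if not 0.0 <= request.complexity <= 1.0:
        raise ValueError("complexity must be between 0 and 1")
    if request.complexity <= ON_DEVICE_LIMIT:
        return Tier.ON_DEVICE  # data never leaves the device
    if request.complexity <= PRIVATE_CLOUD_LIMIT:
        return Tier.PRIVATE_CLOUD  # larger model on Apple-run servers
    # Only requests beyond Apple's own models reach a third party,
    # and only after the user explicitly agrees.
    if ask_user("Do you want to send this request to ChatGPT?"):
        return Tier.THIRD_PARTY
    return Tier.DECLINED


def always_agree(prompt: str) -> bool:
    """Stand-in for the confirmation dialog; a real UI would ask the user."""
    return True


if __name__ == "__main__":
    examples = [
        ("Proofread this sentence", 0.1),
        ("Summarise this long report", 0.5),
        ("Plan a two-week trip itinerary", 0.9),
    ]
    for text, score in examples:
        print(text, "->", route(Request(text, score), always_agree).value)
```

The important design choice is that the consent callback is consulted only on the last branch, so requests that Apple’s own models can handle never trigger a prompt and never leave Apple’s infrastructure.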
With PCC, Apple is going above and beyond to ensure every aspect of cloud AI computing is private, safe, and secure. All connections between Apple devices and PCC are end-to-end encrypted. Once a request reaches PCC, Apple promises that the user’s data will be kept only for as long as it takes to complete the request. Once the request is complete and the response is sent to the user’s device, the user’s data will be removed entirely. Apple goes even further to ensure that no personal data is retained on PCC by removing metadata such as logs and debugging information. Typically, engineers log various events in case something goes wrong and they need to figure out what happened. Apple engineers won’t have those logs. In fact, Apple says it won’t be able to access the data on PCC even during active processing. Furthermore, Apple is making virtual images of every production build of PCC publicly accessible for inspection by independent security researchers, so they can verify that Apple keeps its promises.

I am impressed by what Apple is doing with PCC. This level of commitment to protecting user privacy and security is something you won’t find at Google, Amazon, or Microsoft.

However, custom software and custom hardware are not cheap. Other companies also write custom software and use custom hardware for their cloud services, but they offer those services to customers through AWS, Azure, or Google Cloud Platform and make money from them. Apple’s cloud services will have only one customer: Apple itself. Other companies also allow themselves to use industry-standard tools, which can make building and maintaining large server farms cheaper. Additionally, other companies benefit from training and inference performance improvements as Nvidia, AMD, and others release new, more efficient GPUs. While Apple’s training and inference costs should also come down over time, its reliance on custom hardware based on Apple Silicon may result in slower cost reductions than its competitors enjoy.
But if you are one of the most valuable companies in the world, worth over $3 trillion, you can afford such things. If you want to learn more about PCC and the detailed privacy and safety measures Apple is implementing, I recommend reading Apple’s security report on PCC.

Just like the On-Device model, the Apple Server model appears to be a text-only model that only understands English. We don’t know how big the model is or any details about its architecture. One thing worth pointing out is that by ruthlessly pursuing privacy, Apple is denying itself additional data to train the next generation of its models. Other companies, like OpenAI and Google, retain conversations and may use them to train their models.

Third-party models

When a request is too complex for Apple’s own models to handle, the user will be asked if they want it to be processed by a third-party model. Currently, Apple Intelligence will ask if it can pass the request to ChatGPT, thanks to a partnership with OpenAI, which we will discuss in detail shortly.

Even with third-party models, Apple prioritises privacy. At the moment, these rules apply only to OpenAI, but it is very likely that every company wanting to have its model available on Apple devices will need to adhere to the same rules. When requests are sent to ChatGPT, users’ IP addresses are hidden from the third-party model provider. Additionally, third-party providers are forbidden from storing requests coming from Apple. However, if a user connects their ChatGPT, Google, or any other AI model provider account to Apple Intelligence, then the third-party provider’s policies apply.

Benchmarks

Apple has also shared internal benchmarks comparing both the On-Device model and the Server model with other popular large language models, both proprietary and open-source. The picture Apple paints with these results is that the Apple On-Device and Apple Server models match or exceed similar models.
Since these results are provided by Apple, I’d recommend treating them as marketing and approaching them with a healthy dose of scepticism until independent test results are published.

I find it interesting what kind of test results Apple has made public. Usually, you’d see a set of commonly used tests assessing how good a model is at solving math problems, reasoning, and text comprehension. Not in this case. Apple opted to use internal benchmarks to compare its models against the competition. The only non-Apple benchmark used was IFEval. Some people might be disappointed not to get tables and graphs showing how many points Apple’s models scored on industry-standard benchmarks like MMLU, HELM or LMSYS Chatbot Arena. Apple’s goal was not to challenge for the top spot in benchmarks, as its models are not there yet and may never be. Instead of chasing ever-higher benchmark numbers, Apple focused on its own needs and goals. Apple’s goal with the On-Device and Server models was to create good-enough models that provide responses people like and find useful. Judging by the results below, it looks like Apple succeeded in that regard.

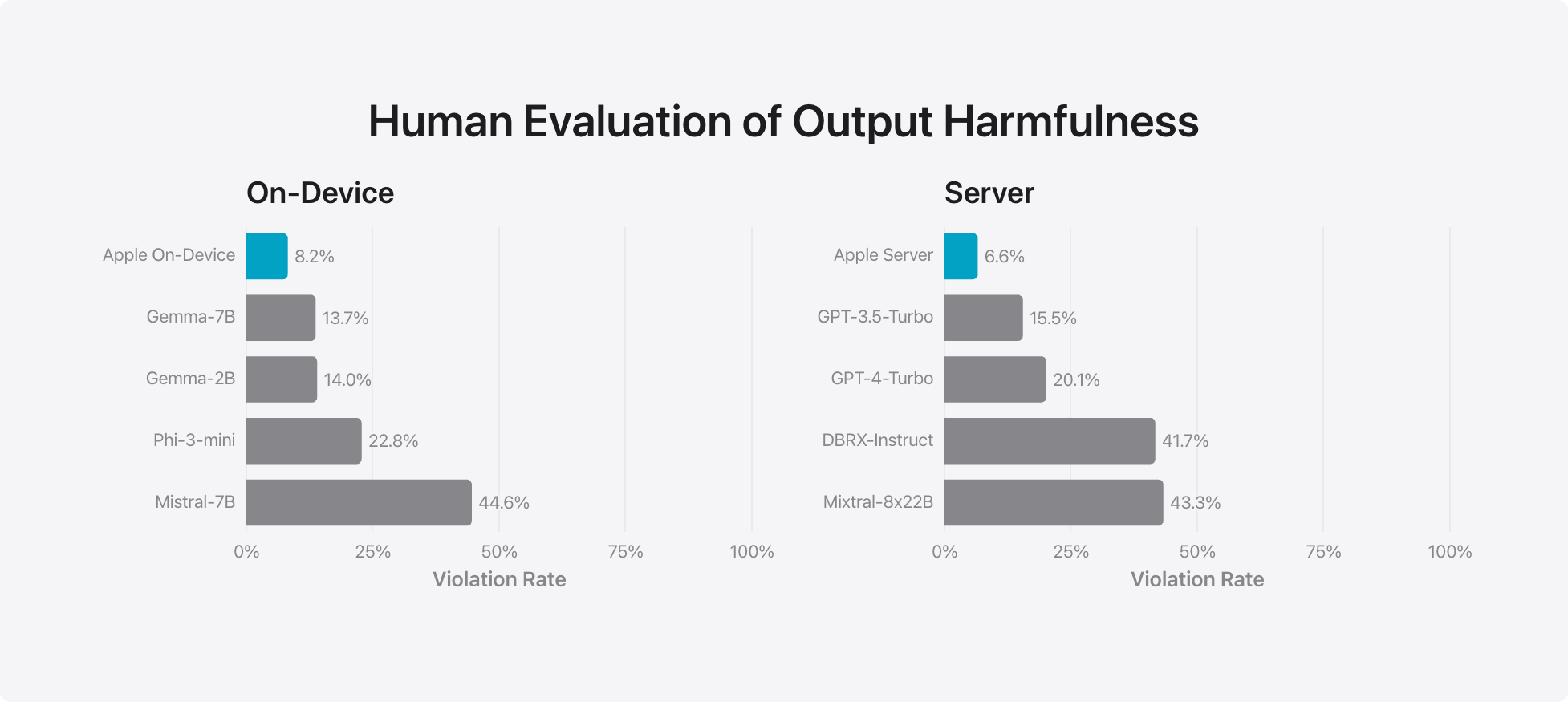

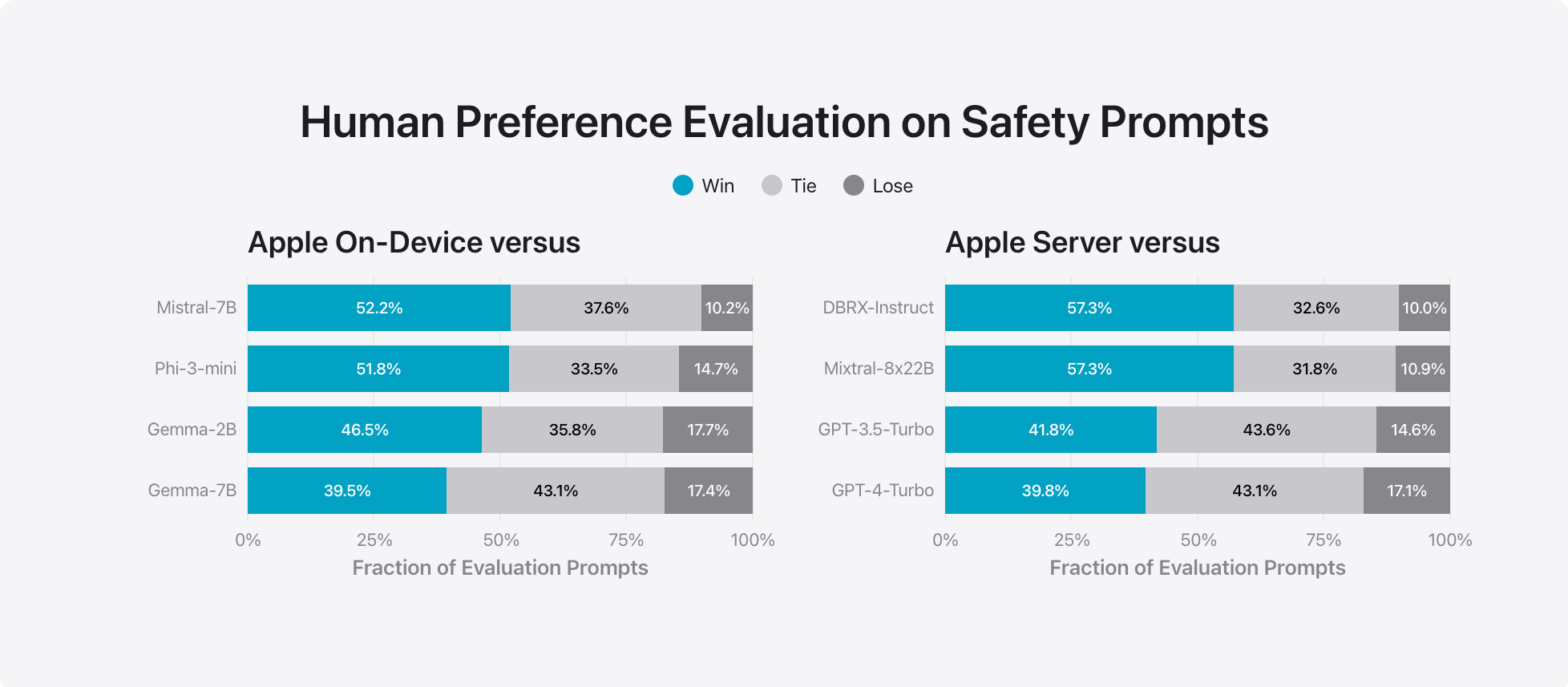
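Much of what Apple published is framed as side-by-side human preference: graders compare responses from two models and record whether Apple’s model won, tied, or lost. Assuming the charts report the share of comparisons in each category, the tally itself is a simple proportion, as in this sketch. The grades used here are made up for illustration and are not Apple’s data.

```python
from collections import Counter

LABELS = ("win", "tie", "loss")


def preference_rates(grades: list[str]) -> dict[str, float]:
    """Share of side-by-side comparisons graded as a win, tie, or loss for model A."""
    unknown = set(grades) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected grades: {sorted(unknown)}")
    if not grades:
        raise ValueError("no grades to summarise")
    counts = Counter(grades)
    total = len(grades)
    # Counter returns 0 for labels that never appear, so every label is reported.
    return {label: counts[label] / total for label in LABELS}


if __name__ == "__main__":
    made_up_grades = ["win", "win", "tie", "loss", "win", "tie"]
    print(preference_rates(made_up_grades))
```

A high win rate only says graders preferred Apple’s responses on Apple’s chosen prompts, which is another reason to wait for independent evaluations.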

Safety

In terms of evaluating the safety of Apple’s foundation models, I think Apple has done the minimum of what is required and expected. Those looking for extensive safety evaluations and safety test results will be disappointed. According to Apple’s own safety tests, both the Apple On-Device and Apple Server models are as safe as, if not safer than, the competition.

Is the partnership with Apple a win for OpenAI?

One of the most rumoured and discussed topics around Apple’s AI services was which company would be powering all these new AI features. Initially, it seemed that Apple would choose Google. However, as WWDC 2024 drew closer, it became more likely that OpenAI would power the new Siri. Eventually, Apple chose to integrate OpenAI’s ChatGPT with Apple Intelligence. This might be seen as a big win for OpenAI, scoring another significant customer after Microsoft. However, the deal between Apple and OpenAI is not as straightforward as one might initially think.

First, Apple will not offload everything to OpenAI. Privacy is one of the biggest selling points of Apple products and services, and Apple plans to keep as many requests as possible within the ecosystem it controls. Most requests will be processed on the device or by the models on Apple’s servers. Only if a request is too complicated for Apple’s models to handle will Siri suggest sending it to ChatGPT, and the user needs to confirm before the request is sent. Additionally, OpenAI is not allowed to store the conversations on its servers. Apple also masks users’ IP addresses so OpenAI cannot build a shadow profile from their metadata.

Moreover, OpenAI does not have an exclusive partnership with Apple. Apple plans to introduce more third-party AI models in the future. “We’re looking forward to doing integrations with other models, including Google Gemini, for instance, in the future,” Craig Federighi said, as quoted by TechCrunch.

And Apple is not paying OpenAI for those services. As Mark Gurman writes for Bloomberg, neither side expects to generate meaningful revenue, at least initially. It appears to be a quid pro quo deal between Apple and OpenAI, in which Apple gains access to one of the most advanced AI models on the market, hoping to convince more people to buy or upgrade to devices that support Apple Intelligence.
Meanwhile, OpenAI benefits from increased exposure and hopes that some Apple users will sign up for its paid subscription, ChatGPT Plus.

By partnering with Apple, OpenAI is playing an interesting (and somewhat risky) game. It will gain more exposure and traffic by being pushed to millions of Apple devices. However, OpenAI will have to pay to serve that traffic, which is mostly free for users and expensive to serve. If an Apple user decides to subscribe to ChatGPT Plus, Apple will take a cut of the monthly subscription.

Additionally, ChatGPT will not be the only AI model offered to Apple users. Soon, Google Gemini will be added, and Anthropic’s Claude 3, Meta’s Llama 3 and other models could follow. Apple may even build a more capable model of its own. There is also a possibility that Apple will offer local models in certain countries. For example, in China, Apple may have to offer models from Baidu or Alibaba. In either case, ChatGPT will face competition on Apple devices, and switching from one model to another may be just one or two clicks away.

OpenAI’s deal with Apple also changes the dynamics between OpenAI and Microsoft. Microsoft is OpenAI’s biggest supporter and, with over $13 billion invested, its largest investor. OpenAI’s models run on Microsoft Azure servers. Microsoft benefited from the exclusive right to offer OpenAI models on Azure and to use them to power the various copilots it offers. Now, OpenAI has gained another big tech company as a customer, and Satya Nadella is reportedly not happy about it.

When the rumours about Apple choosing OpenAI started to emerge, I saw some outlets calling OpenAI the “new king of Silicon Valley.” However, if the reported details of the deal between OpenAI and Apple are true, then I don’t think OpenAI scored a win here. If anyone comes out on top in this deal, it is Apple. And to add one more piece of evidence that Apple is dictating the terms of the game, OpenAI was mentioned only briefly during the WWDC keynote.
Sam Altman, the CEO of OpenAI, was present at Apple headquarters for WWDC, but he wasn’t invited on stage next to Tim Cook or Craig Federighi. If this had been a Microsoft event, Altman would have been standing next to Satya Nadella on stage. I think this says a lot about the power dynamics in the relationship between Apple and OpenAI.

Apple Intelligence is a good start

Apple Intelligence is a good start, but there is still a lot of work ahead to catch up with OpenAI and Google. In terms of development, I’d say Apple Intelligence is around the level of GPT-3.5. The Apple On-Device and Apple Server models are not natively multimodal like Gemini or GPT-4o. While Apple is catching up on multimodality, OpenAI and Google are working on the next thing: AI agents.

The market seems to really like what Apple is bringing to the generative AI table. Apple shares went up by 7% to an all-time high shortly after WWDC and are still higher than they were before the event. Additionally, the release of Apple Intelligence might spark an upgrade “supercycle” and push more people to upgrade to the latest iPhone 15 Pro or the soon-to-be-released iPhone 16 models to access Apple’s AI features. Recently, iPhone sales, which are Apple’s main source of revenue, have been dropping. Apple Intelligence could help reverse that trend in the next few months.

Apple’s commitment to privacy stands in stark contrast to what Google, Microsoft, and OpenAI are doing. If independent security researchers confirm Apple’s promises around Private Cloud Compute, then Apple will have something other big tech companies don’t have: trust. This gap in focus on privacy and security is even more visible when you look at Microsoft and the mess that is Windows Recall. Even though the new Siri, like Windows Recall, will see the user’s screen and have access to their private data, it has not received the same negative feedback, thanks to Apple’s long-standing commitment to privacy and security.
When choosing between Apple and its competitors, the knowledge that personal data stays private could be the deciding factor for buying an iPhone instead of an Android phone. I wonder if Google and Microsoft will now add new slides explaining the privacy and security of their AI systems at the next Google I/O or Microsoft Build conferences.

Additionally, Apple has effectively killed (or sherlocked) all startups working on personal AI assistants, along with a number of other startups. None of them will be able to integrate a user’s personal data as deeply as Apple can. The only company in the West that can challenge Apple in this area is Google.

I’m also curious how the integration of third-party models, like Google Gemini, will unfold and how far Apple will go as a distributor of AI models. Having an AI model easily accessible on millions of devices could be a game changer for many companies and for the AI industry as a whole.

But the question I have is: what will Apple do next? Is there a roadmap and a multiyear vision for Apple Intelligence? Will Apple commit to further developing its in-house models? Or is Apple Intelligence’s only purpose to catch up with competitors, tick a checkbox to sell more iPhones, and make investors happy?

What Apple has shown with Apple Intelligence looks good. Now let’s see how it works in practice. Apple Intelligence will be released this autumn for free in beta as part of iOS 18, iPadOS 18, and macOS Sequoia, and will initially be available only in US English. You will also need a supported device. On the iPhone side, Apple Intelligence will be available only on the iPhone 15 Pro and iPhone 15 Pro Max. It remains to be seen whether the upcoming standard iPhone 16 models will support Apple Intelligence or whether it will be reserved for the more expensive Pro and Pro Max versions. Any iPad or Mac with an M1 chip or later will be able to run Apple Intelligence.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.
Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human. A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support! My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"
