Hello!

Once a week, we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

And now…let's dive into some interesting links from this week.

The State of Chinese AI

AI progress from Chinese labs has received significantly less attention than the model race between the biggest US labs. Commentary tends to be written from the standpoint of ‘who’s winning?’ and ‘are sanctions backfiring?’ and look to find a one-word answer…We’ve made no secret of both our concerns about the Chinese Communist Party’s AI ambitions and our belief in the responsibility of the technology sector to contribute to defense and national security. However, that doesn’t make the answers to these questions straightforward. As we start gathering material for the State of AI Report, we’re sharing our assessment on some of these points. Spoiler alert: the answers are complicated…

Data Flywheels for LLM Applications

Over the past few months, I have been thinking a lot about workflows to automatically and dynamically improve LLM applications using production data. This stems from our research on validating data quality in LLM pipelines and applications—which is starting to be productionized in both vertical AI applications and LLMOps companies…In this post, I’ll outline a three-part framework for approaching this problem…

Kernel Trick I - Deep Convolutional Representations in RKHS

In this blog post, we focus on the Convolutional Kernel Network (CKN) architecture proposed in End-to-End Kernel Learning with Supervised Convolutional Kernel Networks [1] and present its guiding principles and main components. The CKN opened a new line of research in deep learning by demonstrating the benefits of the kernel trick for deep convolutional representations. The goal of this blog is to provide a high-level view of the CKN architecture while explaining how to implement it from scratch without relying on modern Deep Learning frameworks…

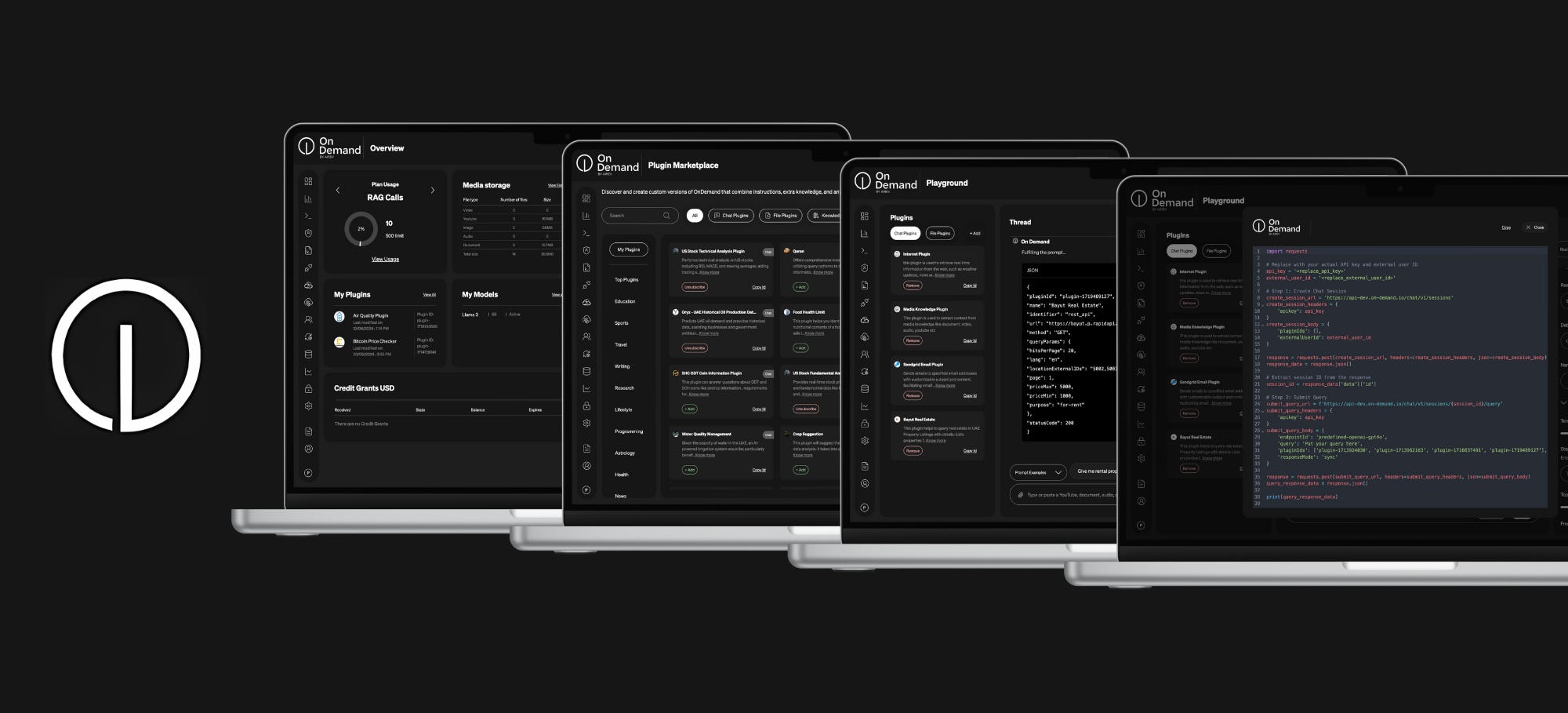

Unlock the future of AI with OnDemand!

Our cutting-edge platform offers exclusive tools and insights to streamline businesses of any size, boosting productivity and efficiency. Gain early access to groundbreaking AI solutions and stay ahead of the curve. Whether you're a startup or an established enterprise, OnDemand provides the solutions you need to succeed. Don't miss out on this opportunity to transform your business by joining right now free of charge!

AI Awaits You: The future is here, and it's OnDemand.

Sign up now and be among the first to explore our cutting-edge AI platform

* Want to sponsor the newsletter? Email us for details --> team@datascienceweekly.org

The explosion in time series forecasting packages in data science

There have been a series of sometimes jaw-dropping developments in data science in the last few years, with large language models by far the most prominent (and with good reason). But another story has been the huge explosion in time series packages…In the rest of this post, we’ll look at the new(ish) time series packages that are around, who built them, and what they might be good for…

Data Scientist Handbook 2024

Curated resources (Free & Paid) to help data scientists learn, grow, and break into the field of data science…

Questions about ARC Prize

ARC Prize is an intelligence benchmark intended to be hard for AI and easy for humans. With a grand prize of $1,000,000, it is currently the most popular contest on Kaggle. Most AI systems (e.g. GPT4) perform much worse than human children on the benchmark…

Questionable practices in machine learning

Evaluating modern ML models is hard. The strong incentive for researchers and companies to report a state-of-the-art result on some metric often leads to questionable research practices (QRPs): bad practices which fall short of outright research fraud. We describe 43 such practices which can undermine reported results, giving examples where possible. Our list emphasizes the evaluation of large language models (LLMs) on public benchmarks. We also discuss "irreproducible research practices", i.e. decisions that make it difficult or impossible for other researchers to reproduce, build on or audit previous research…

What is Gradient Descent

Gradient Descent, or Stochastic Gradient Descent, is a machine learning algorithm that optimizes parameter estimates for a model by (slowly) adjusting the estimates according to gradients (partial derivatives) and some loss function. The 7 “steps” of gradient descent, according to Jeremy Howard’s fastai course on deep learning, are…
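
To make the idea concrete, here is a minimal sketch of plain (full-batch) gradient descent fitting a straight line with a mean squared error loss. The toy data, learning rate, step count, and function names are all assumptions chosen for illustration; they are not taken from the linked post or from the fastai course, and a stochastic variant would compute the gradients on random subsets of the data instead.

```python
# Toy data generated from y = 2x + 1 (hypothetical, for illustration only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def mse_and_grads(w, b):
    """Return the mean squared error and its partial derivatives w.r.t. w and b."""
    n = len(xs)
    errors = [(w * x + b) - y for x, y in zip(xs, ys)]
    loss = sum(e * e for e in errors) / n
    grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_b = 2.0 * sum(errors) / n
    return loss, grad_w, grad_b


def gradient_descent(lr=0.05, steps=2000):
    """Start from zero and repeatedly step against the gradient."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        _, grad_w, grad_b = mse_and_grads(w, b)
        w -= lr * grad_w  # small step downhill in w
        b -= lr * grad_b  # small step downhill in b
    return w, b


w, b = gradient_descent()
final_loss, _, _ = mse_and_grads(w, b)
print(f"w={w:.4f}, b={b:.4f}, loss={final_loss:.2e}")
```

With these settings the estimates should settle close to the slope and intercept used to generate the data; a learning rate that is too large would make the updates diverge instead.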

Annotated area charts with plotnine

The `plotnine` visualisation library brings the Grammar of Graphics to Python. This blog post walks through the process of creating a customised, annotated area chart of coal production data…

A Sanity Check on ‘Emergent Properties’ in Large Language Models

One of the often-repeated claims about Large Language Models (LLMs), discussed in our ICML’24 position paper, is that they have ‘emergent properties’. Unfortunately, in most cases the speaker/writer does not clarify what they mean by ‘emergence’. But misunderstandings on this issue can have big implications for the research agenda, as well as public policy. From what I’ve seen in academic papers, there are at least 4 senses in which NLP researchers use this term…

Machine Learning Engineering Open Book

This is an open collection of methodologies, tools and step by step instructions to help with successful training of large language models and multi-modal models. This is technical material suitable for LLM/VLM training engineers and operators. That is, the content here contains lots of scripts and copy-n-paste commands to enable you to quickly address your needs…

Machine Learning Engineering Chapter: Network Debug

The intention is to help non-network engineers to figure out how to resolve common problems around multi-GPU and multi-node collectives networking - it's heavily NCCL-biased at the moment. Will extend with RCCL and others when I get access to those…Often you don't need to be a network engineer to figure out networking issues. Some of the common issues can be resolved by reading the following notes…

Pragmatic data science checklists with Peter Bull

Doctors use checklists before they do surgery because it prevents a lot of failures. What if we did the same in Machine Learning? To help answer this question we've interviewed Peter Bull from DrivenData on our podcast…A lot of things can (and have) gone wrong when folks tried to apply data science projects. So how might we prevent that? Maybe what we need to do is to look at the medical profession and their practice of checklists before surgery…

Things you learned embarrassingly late? [Reddit]

yaml is just more readable dictionaries/unserialized json. I've been reading and writing yaml files for years and I knew that they were just key/value pairs but I had never made the mental connection between the indentation levels, nested dictionaries, and "-" denoting a list. I just kind of..... knew how to write yaml based on vibes…
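
The connection described in that post can be sketched as follows. The YAML snippet and its keys are hypothetical, made up for illustration; the dictionary beneath it is the hand-written equivalent. The check against a real parser uses PyYAML's `yaml.safe_load` and only runs if PyYAML happens to be installed, which is an assumption rather than a requirement.

```python
import json

# A small YAML document (hypothetical config, for illustration only).
yaml_text = """\
model:
  name: baseline
  layers:
    - 64
    - 32
tags:
  - demo
"""

# The same structure as nested Python dictionaries and lists:
# indentation -> nesting, "key: value" -> dict entry, "- item" -> list element.
as_dict = {
    "model": {"name": "baseline", "layers": [64, 32]},
    "tags": ["demo"],
}

# The equivalent JSON serialization of that structure.
as_json = json.dumps(as_dict, indent=2)

try:
    import yaml  # PyYAML, optional third-party package

    parsed = yaml.safe_load(yaml_text)
except ImportError:
    parsed = as_dict  # no parser available; fall back to the hand-written form

print(as_json)
print(parsed == as_dict)
```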

What happened to BERT & T5? On Transformer Encoders, PrefixLM and Denoising Objectives

The people who worked on language and NLP about 5+ years ago are left scratching their heads about where all the encoder models went. If BERT worked so well, why not scale it? What happened to encoder-decoders or encoder-only models?…Today I try to unpack all that is going on, in this new era of LLMs. I hope this post will be helpful…

Forecasting: Principles and Practice

This textbook is intended to provide a comprehensive introduction to forecasting methods and to present enough information about each method for readers to be able to use them sensibly. We don’t attempt to give a thorough discussion of the theoretical details behind each method, although the references at the end of each chapter will fill in many of those details…

Introduction to R with Tidyverse: A 2-day introduction to the wonders of R (free)

This two-day workshop is designed to equip PhD students, academics, and professional researchers across various disciplines with the essential skills to leverage the power of R and Tidyverse for their academic research. The workshop begins with a gentle introduction to the user-friendly RStudio interface and the basics of the R coding language, or syntax. This makes it ideal for anyone with little or no prior coding experience, or those looking for a refresher of the basics. Attendees will learn how to manipulate, transform, and clean data efficiently, and how to create compelling visualizations to communicate their findings effectively…

Density and Likelihood: What’s the Difference?

It’s another installment in Data Q&A: Answering the real questions with Python…Here’s a question from the Reddit statistics forum. I’m a math graduate and am partially self taught. I am really frustrated with likelihood and probability density, two concepts that I personally think are explained so disastrously that I’ve been struggling with them for an embarrassingly long time. Here’s my current understanding and what I want to understand…

* Based on unique clicks.

** Find last week's issue #555 here.

Looking to get a job? Check out our “Get A Data Science Job” Course

It is a comprehensive course that teaches you everything related to getting a data science job based on answers to thousands of emails from readers like you. The course has 3 sections: Section 1 covers how to get started, Section 2 covers how to assemble a portfolio to showcase your experience (even if you don’t have any), and Section 3 covers how to write your resume.

Promote yourself/organization to ~63,000 subscribers by sponsoring this newsletter. 35-45% weekly open rate.

Thank you for joining us this week! :)

Stay Data Science-y!

All our best,

Hannah & Sebastian