It's Strawberry Summer at OpenAI - Weekly News Roundup - Issue #476

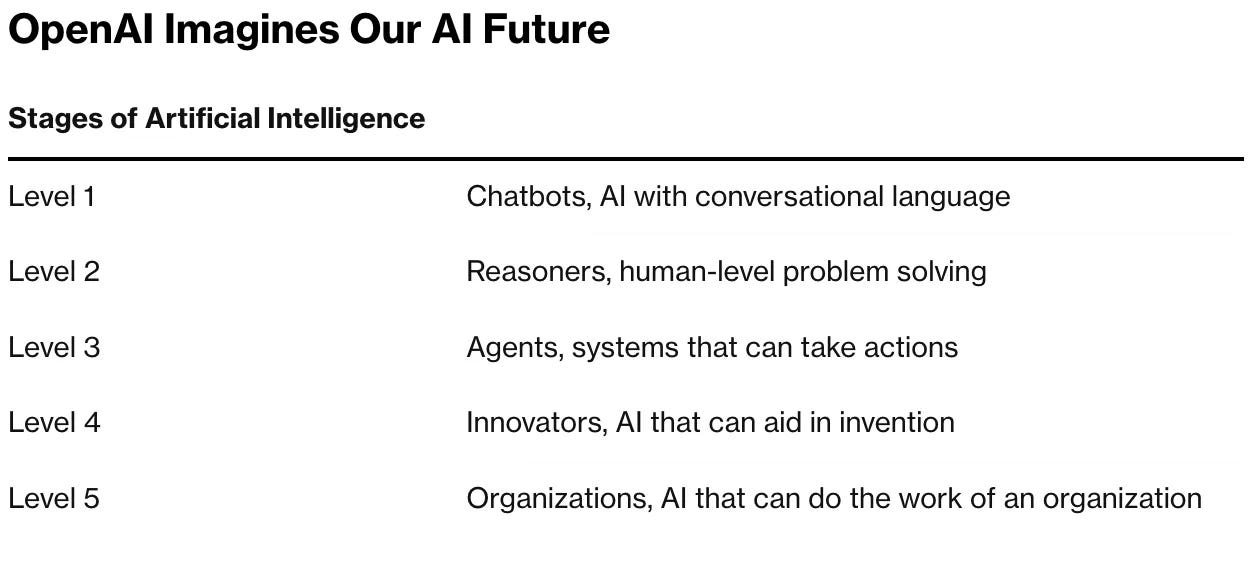

I hope you enjoy this free post. If you do, please like ❤️ or share it, for example by forwarding this email to a friend or colleague. Writing this post took around eight hours. Liking or sharing it takes less than eight seconds and makes a huge difference. Thank you!

Plus: GPT-4o-mini; first Miss AI contest sparks controversy; lab-grown meat for pets approved in the UK; Tesla delays robotaxi reveal until October; 'Supermodel granny' drug extends life in animals

Hello and welcome to Weekly News Roundup Issue #476. This week, we will focus on recent leaks from OpenAI hinting at a new and powerful AI model known internally as Strawberry.

In other news, scientists have extended the lifespan of mice by nearly 25% and given them a youthful appearance. Over in AI, OpenAI released a new model, GPT-4o-mini, while Apple, Nvidia, and Anthropic have been caught using captions from videos of popular YouTubers to train their models. We also have the story of Graphcore, one of the first AI hardware startups to reach unicorn status, and what caused its downfall. In robotics, the reveal of Tesla’s robotaxi service has been delayed until October, and we will learn how much it would cost to hire a Digit humanoid. We will finish this week’s news roundup with UK regulators approving lab-grown meat for pets and with a startup that makes butter using CO2 and water.

Enjoy!

It's Strawberry Summer at OpenAI

Creating artificial general intelligence (AGI) is the explicit goal of many AI companies. OpenAI is one of them, describing its mission as “to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity.” However, to know if AGI has been achieved, we first need to be clear on what AGI is.
Modern AI systems would have been considered AGI five or ten years ago, but no one calls them that today, which creates a need to clearly define what AGI is and what it is not. Google DeepMind has already proposed its definition of AGI, and now it is OpenAI’s turn to do the same.

OpenAI introduces Five Stages of AI

In November 2023, researchers from Google DeepMind published a paper titled Levels of AGI in which they attempted to rigorously define what AGI is. They analysed nine existing definitions of AGI, along with their strengths and weaknesses, and then promptly proposed a tenth. Their definition combines performance, the depth of an AI system’s capabilities, with generality, the breadth of those capabilities.

To better communicate the capabilities of an AI system, the paper also introduces Levels of AGI, a six-tier matrixed levelling system to classify AI on the path to AGI, similar to what the car industry uses to describe the extent of self-driving cars’ autonomous capabilities.

According to that system, we have already created narrow AI systems at the highest, Superhuman level, in which AI outperforms all humans. This category includes systems such as AlphaFold, AlphaZero or Stockfish. However, the picture looks different for general AI, where we have reached Level 1, Emerging AGI, with systems such as ChatGPT or Gemini.

Last week, during an all-hands meeting with employees, OpenAI introduced its own framework to track progress towards building AGI using a five-level system. At Level 1 we have Chatbots: AI systems that are good at understanding and using conversational language but lack human-level reasoning and problem-solving skills. The next level, Reasoners, defines AI systems with enhanced reasoning capabilities. After Reasoners, we have Agents (systems that can take actions), then Innovators (systems that can aid in invention), and finally Organisations, where AI systems can perform the work of an entire organisation.

According to this framework, current AI systems are on the first level. However, OpenAI executives believe we are on the cusp of reaching the second level, Reasoners, Bloomberg reports. Reuters later followed up with its own report, saying that OpenAI is already working on and testing a Level 2 system, a system displaying human-level reasoning skills.

Strawberry: OpenAI’s new mysterious AI model

This new AI system is known internally under the codename Strawberry and was formerly known as Q*. We first learned about Q* in November last year, when it was rumoured to be the AI model that prompted some OpenAI board members to remove Sam Altman from his position as CEO.

According to two sources quoted by Reuters, some OpenAI employees have seen Q* in action, saying it was capable of answering tricky science and math questions out of reach of today’s commercially available AI models. Another source said one of OpenAI’s internal projects produced an AI system that scored over 90% on the MATH dataset, a benchmark of championship math problems. Additionally, during the same meeting that introduced OpenAI’s stages of AI framework, employees saw a demo of a new AI system with new human-like reasoning skills.

However, it is unclear whether these were different models or one and the same model. It is also unknown how close this new model or models are to being released to the public. Previous reports pointed at the second half of 2024 as a possible release date for GPT-5, which might incorporate Project Strawberry or some parts of it.

Either way, OpenAI is cooking something, and the recent releases of GPT-4o and GPT-4o-mini (more on that one later) might play a role in creating reliable and powerful AI models capable of reasoning like a human.
OpenAI’s questionable safety practices

While OpenAI is developing a new generation of AI models, the company is also dealing with whistleblowers who accuse it of placing illegal restrictions on how employees can communicate with government regulators. The whistleblowers have filed a complaint with the U.S. Securities and Exchange Commission, calling for an investigation into the company's allegedly restrictive non-disclosure agreements, according to Reuters.

Questions about restrictive non-disclosure agreements at OpenAI were already raised a couple of weeks ago, when the company was dealing with the implosion of its Superalignment team and the departure of people associated with the team, including OpenAI’s co-founder, Ilya Sutskever. Vox reported in May that OpenAI could take back vested equity from departing employees if they did not sign non-disparagement agreements. Sam Altman responded in a tweet saying the company has “never clawed back anyone's vested equity, nor will we do that if people do not sign a separation agreement (or don't agree to a non-disparagement agreement).”

Additionally, OpenAI has recently been plagued by safety concerns. The implosion of the Superalignment team and Sutskever’s departure were the highlights that gathered public attention, but there were other things happening, too. Earlier this year, two other people working on safety and governance left OpenAI. One of them wrote on his LessWrong profile that he quit OpenAI "due to losing confidence that it would behave responsibly around the time of AGI." Jan Leike, a key OpenAI researcher and the leader of the Superalignment team, announced his departure from OpenAI shortly after the news of Sutskever’s departure broke.

There are also reports of OpenAI prioritising quick product releases over proper safety tests. The Washington Post shares a story of the company celebrating the launch of GPT-4o in May before safety tests were complete. “They planned the launch after-party prior to knowing if it was safe to launch,” one of the OpenAI employees told The Washington Post. “We basically failed at the process.”

I hope that when GPT-5 is released, with Strawberry or not, OpenAI has sorted out its safety practices and protocols. I don’t think anyone wants to experience an AI meltdown on the scale of the Google AI Overview disaster, but with an AI system that is more capable than the best models available today.

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work? Consider becoming a paying subscriber to support it.

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is a generous support towards the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

'Supermodel granny' drug extends life in animals

Tiny implant fights cancer with light

🧠 Artificial Intelligence

GPT-4o mini: advancing cost-efficient intelligence

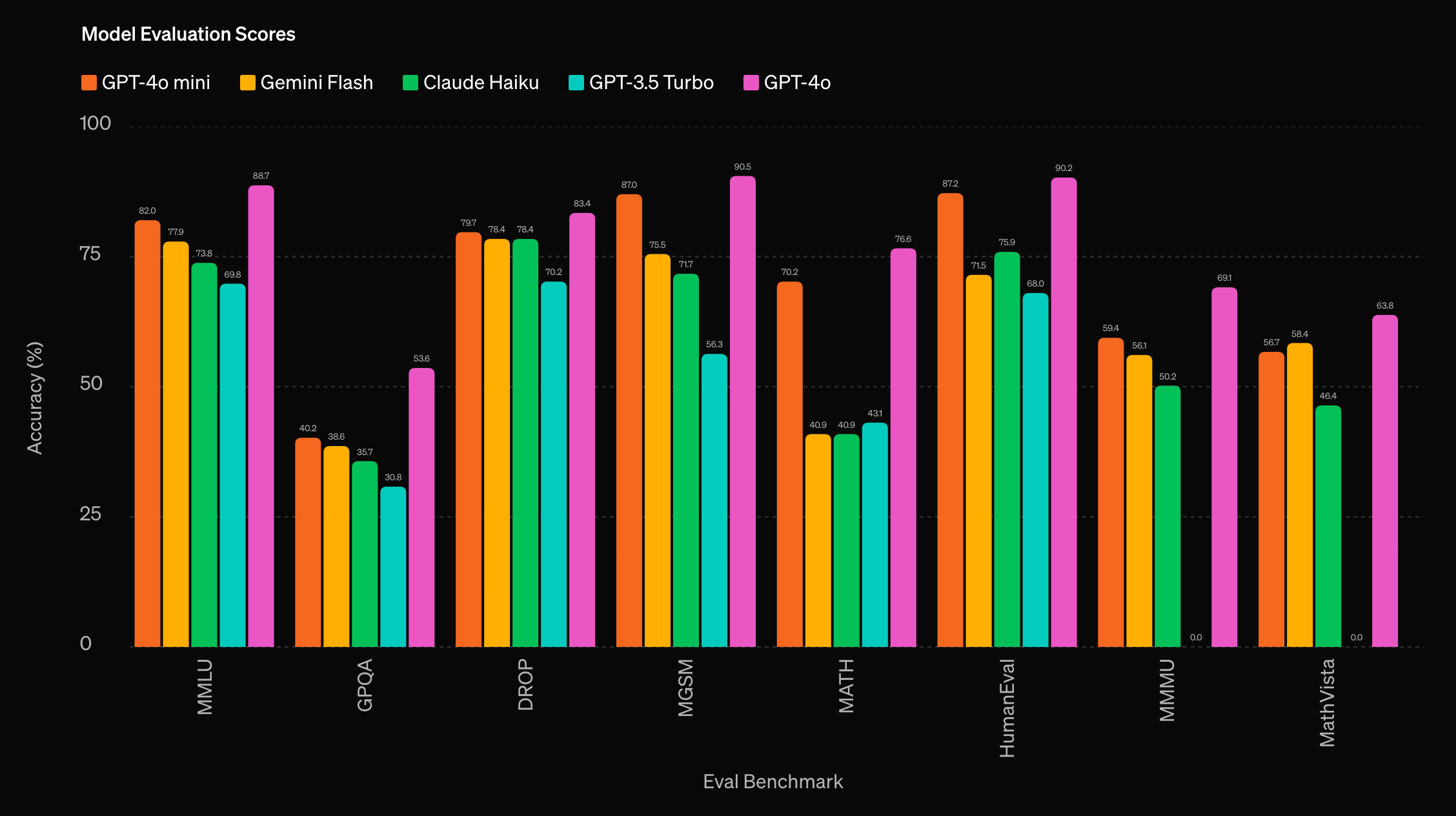

OpenAI has released a new small model named GPT-4o-mini. According to OpenAI, GPT-4o-mini matches the performance level of the original GPT-4 model released over a year ago while also outperforming similar models in its class, such as Google’s Gemini Flash and Anthropic’s Claude 3 Haiku. OpenAI says that the new model is the “most cost-efficient small model” and offers “superior textual intelligence and multimodal reasoning.” GPT-4o-mini is available through the OpenAI API and in ChatGPT, where it replaces GPT-3.5 Turbo as the model suited for everyday tasks.

Meta won't offer future multimodal AI models in EU

Apple, Nvidia, Anthropic Used Thousands of Swiped YouTube Videos to Train AI

▶️ The Downfall of AI Unicorns: Graphcore exits to Softbank (15:27)

In this video, Dr Ian Cutress tells the story of Graphcore, one of the first AI hardware startups to reach unicorn status, and analyses what caused its downfall and eventual acquisition by Softbank a week ago. Additionally, the video contains an analysis of AI hardware startups, how the downfall of Graphcore could affect the scene, and answers the question of whether this is the first sign of market consolidation.

Text to Image AI Model & Provider Leaderboard

Andrej Karpathy is starting Eureka Labs - an AI+Education company

First “Miss AI” contest sparks ire for pushing unrealistic beauty standards

“Superhuman” Go AIs still have trouble defending against these simple exploits

Google DeepMind’s AI Rat Brains Could Make Robots Scurry Like the Real Thing

If you're enjoying the insights and perspectives shared in the Humanity Redefined newsletter, why not spread the word?

🤖 Robotics

Here’s what it could cost to hire a Digit humanoid

Tesla reportedly delaying its robotaxi reveal until October

Google says Gemini AI is making its robots smarter

U.S. Marine Corps testing autonomy system for helicopters

Xiaomi's self-optimizing autonomous factory will make 10M+ phones a year

Robot Dog Cleans Up Beaches With Foot-Mounted Vacuums

🧬 Biotechnology

Lab-Grown Meat for Pets Was Just Approved in the UK

Fats from thin air: Startup makes butter using CO2 and water

Where's the Synthetic Blood?

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"

