📝 Guest Post: Local Agentic RAG with LangGraph and Llama 3*

In this guest post, Stephen Batifol from Zilliz discusses how to build agents capable of tool-calling using LangGraph with Llama 3 and Milvus. Let's dive in.

LLM agents use planning, memory, and tools to accomplish tasks. Here, we show how to build agents capable of tool-calling using LangGraph with Llama 3 and Milvus.

Agents can empower Llama 3 with important new capabilities. In particular, we will show how to give Llama 3 the ability to perform a web search and call custom user-defined functions.

Tool-calling agents with LangGraph use two nodes. An LLM node decides which tool to invoke based on the user input and outputs the tool name and tool arguments. These are passed to a tool node, which calls the tool with the specified arguments and returns the result to the LLM.

Milvus Lite allows you to use Milvus locally without Docker or Kubernetes. It will store the vectors you generate from the different websites we will navigate to.

Introduction to Agentic RAG

Language models can't take actions themselves; they just output text. Agents are systems that use LLMs as reasoning engines to determine which actions to take and which inputs to pass to them. After an action is executed, its result can be fed back into the LLM to determine whether more actions are needed or whether it is okay to finish.

Agents can be used to perform actions such as searching the web, browsing your emails, correcting RAG by adding self-reflection or self-grading on retrieved documents, and many more.

Setting things up

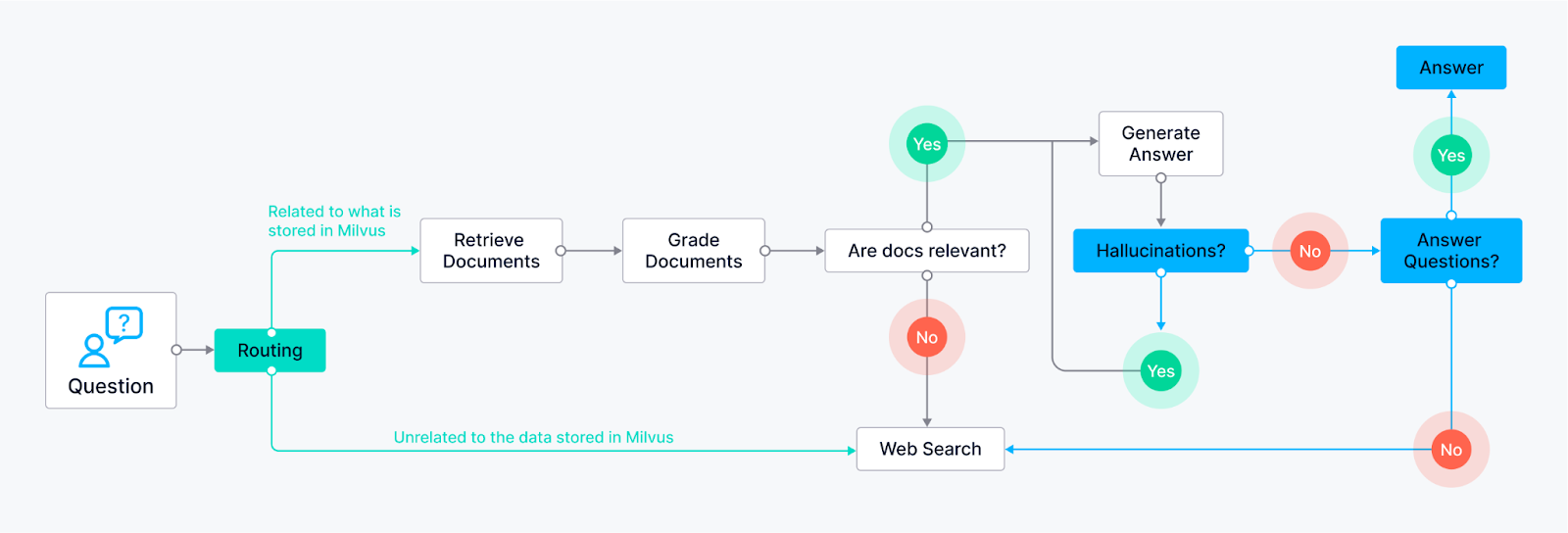
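The two-node tool-calling loop described in the introduction can be sketched in plain Python, without LangGraph or a real model. This is only an illustration under stated assumptions: `fake_llm` stands in for Llama 3, the tools `web_search` and `multiply` are hypothetical, and the JSON decision format (`tool`/`args` or `answer`) is invented for this sketch rather than taken from the post.

```python
import json
from typing import Callable, Dict, List


# Hypothetical tools; names and behavior are illustrative only.
def web_search(query: str) -> str:
    return f"(stub) top result for: {query}"


def multiply(a: float, b: float) -> str:
    return str(a * b)


TOOLS: Dict[str, Callable[..., str]] = {"web_search": web_search, "multiply": multiply}


def fake_llm(messages: List[dict]) -> str:
    """Stand-in for Llama 3: asks for a tool first, then answers once a tool result exists."""
    last = messages[-1]
    if last["role"] == "tool":
        return json.dumps({"answer": f"The result is {last['content']}"})
    return json.dumps({"tool": "multiply", "args": {"a": 6, "b": 7}})


def llm_node(messages: List[dict]) -> dict:
    """LLM node: decides which tool to call (name + arguments) or returns a final answer."""
    return json.loads(fake_llm(messages))


def tool_node(decision: dict) -> str:
    """Tool node: calls the named tool with the specified arguments."""
    return TOOLS[decision["tool"]](**decision["args"])


def run_agent(question: str, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = llm_node(messages)
        if "answer" in decision:
            return decision["answer"]
        # Feed the tool result back to the LLM node for the next decision.
        messages.append({"role": "tool", "content": tool_node(decision)})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What is 6 times 7?"))
```

The `max_steps` cap is a design choice of this sketch: it keeps a model that never produces a final answer from looping forever.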

Using LangGraph and Milvus

We use LangGraph to build a custom, local Llama 3-powered RAG agent that combines several approaches, each implemented as a control flow in LangGraph.

General ideas for Agents

Examples of Agents

To showcase the capabilities of our LLM agents, let's look into two key components: the Hallucination Grader and the Answer Grader. While the full code is available at the bottom of this post, these snippets will provide a better understanding of how these agents work within the LangChain framework.

Hallucination Grader

The Hallucination Grader addresses a common challenge with LLMs: hallucinations, where the model generates answers that sound plausible but lack factual grounding. This agent acts as a fact-checker, assessing whether the LLM's answer aligns with a provided set of documents retrieved from Milvus.
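As a rough sketch of the idea, a hallucination grader can be reduced to a prompt plus a strict parser for a yes/no verdict. The prompt wording, the `{"score": ...}` JSON format, and the names `build_hallucination_prompt`, `grade_hallucination`, and `stub_llm` are assumptions made for illustration; the stub replaces a real call to Llama 3 and is not the code from the post.

```python
import json
from typing import Callable, List


def build_hallucination_prompt(documents: List[str], generation: str) -> str:
    """Assemble a grading prompt; the wording is illustrative, not the post's prompt."""
    facts = "\n\n".join(documents)
    return (
        "You are a grader assessing whether an answer is grounded in a set of facts.\n"
        'Reply with JSON containing a single key "score" set to "yes" or "no".\n\n'
        f"Facts:\n{facts}\n\nAnswer:\n{generation}"
    )


def grade_hallucination(
    llm: Callable[[str], str], documents: List[str], generation: str
) -> bool:
    """Return True only if the grader clearly says the answer is grounded."""
    raw = llm(build_hallucination_prompt(documents, generation))
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return False  # Unparseable output is treated as "not grounded".
    if not isinstance(parsed, dict):
        return False
    return str(parsed.get("score", "")).strip().lower() == "yes"


def stub_llm(prompt: str) -> str:
    """Crude stand-in for the model: says "yes" if the answer appears verbatim in the prompt's facts."""
    facts, answer = prompt.split("\n\nAnswer:\n")
    return json.dumps({"score": "yes" if answer in facts else "no"})


docs = ["Milvus Lite runs locally without Docker."]
print(grade_hallucination(stub_llm, docs, "Milvus Lite runs locally without Docker."))
print(grade_hallucination(stub_llm, docs, "Milvus requires Kubernetes."))
```

Treating malformed model output as a failed check is a conservative choice: the agent then retries or falls back rather than passing an unverified answer through.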

Answer Grader

Following the Hallucination Grader, another agent steps in. This agent checks another crucial aspect: ensuring the LLM's answer directly addresses the user's original question. It uses the same LLM but with a different prompt, specifically designed to evaluate the answer's relevance to the question.
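One way the two checks might be combined is sketched below: the answer grader reuses the same yes/no parsing with a different prompt, and a small function turns both verdicts into a routing label. The prompt text, the function names, and the labels `"useful"`, `"not useful"`, and `"not supported"` are assumptions for this sketch, and `llm` is any callable that maps a prompt to text.

```python
import json
from typing import Callable


def parse_binary_score(raw: str) -> bool:
    """Parse a {"score": "yes" | "no"} reply; anything else counts as "no"."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and str(parsed.get("score", "")).strip().lower() == "yes"


def grade_answer(llm: Callable[[str], str], question: str, generation: str) -> bool:
    """Ask the model whether the answer addresses the question (illustrative prompt)."""
    prompt = (
        "You are a grader assessing whether an answer resolves a question.\n"
        'Reply with JSON containing a single key "score" set to "yes" or "no".\n\n'
        f"Question:\n{question}\n\nAnswer:\n{generation}"
    )
    return parse_binary_score(llm(prompt))


def decide_next_step(grounded: bool, addresses_question: bool) -> str:
    """Map both grader verdicts to a routing label (labels are hypothetical)."""
    if not grounded:
        return "not supported"  # Hallucination check failed: regenerate.
    return "useful" if addresses_question else "not useful"


# Usage with a stub model that always approves the answer.
relevant = grade_answer(lambda prompt: '{"score": "yes"}', "What is Milvus Lite?", "A local Milvus.")
print(decide_next_step(grounded=True, addresses_question=relevant))
```

Checking grounding before relevance means an unsupported answer is never reported as useful, even if it happens to address the question.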

In both graders, the LLM serves as a classifier, and we check its predictions.

Compiling the LangGraph graph

This compiles all the agents that we defined and makes it possible to use different tools in your RAG system.
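To make the compile step more concrete, here is a toy, plain-Python stand-in for a graph of nodes and conditional edges. It is not LangGraph itself: `MiniGraph` is a hypothetical class whose method names only loosely mirror LangGraph's `StateGraph` (`add_node`, `add_edge`, `add_conditional_edges`, `set_entry_point`, `compile`), and the `retrieve`, `generate`, and `check` functions are stubs invented for this sketch.

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]
END = "__end__"  # Sentinel name for the terminal step in this sketch.


class MiniGraph:
    """Toy stand-in for a state graph: nodes update state, edges pick the next node."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.edges: Dict[str, Callable[[State], str]] = {}
        self.entry: Optional[str] = None

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def set_entry_point(self, name: str) -> None:
        self.entry = name

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = lambda state: dst

    def add_conditional_edges(
        self, src: str, router: Callable[[State], str], mapping: Dict[str, str]
    ) -> None:
        self.edges[src] = lambda state: mapping[router(state)]

    def compile(self) -> Callable[..., State]:
        """Validate the wiring once, then return a callable that runs the graph."""
        if self.entry not in self.nodes:
            raise ValueError("entry point is not a registered node")
        missing = [name for name in self.nodes if name not in self.edges]
        if missing:
            raise ValueError(f"nodes without outgoing edges: {missing}")

        def app(state: State, max_steps: int = 20) -> State:
            current, steps = self.entry, 0
            while current != END:
                if steps >= max_steps:
                    raise RuntimeError("graph did not reach END within max_steps")
                state = {**state, **self.nodes[current](state)}  # Merge node output into state.
                current = self.edges[current](state)
                steps += 1
            return state

        return app


# Stub nodes: retrieval returns a fixed document; generation is "grounded" on the second try.
def retrieve(state: State) -> State:
    return {"documents": ["Milvus Lite runs locally."]}


def generate(state: State) -> State:
    attempts = int(state.get("attempts", 0)) + 1
    return {"attempts": attempts, "generation": f"draft {attempts}", "grounded": attempts >= 2}


def check(state: State) -> str:
    return "supported" if state["grounded"] else "not supported"


graph = MiniGraph()
graph.add_node("retrieve", retrieve)
graph.add_node("generate", generate)
graph.set_entry_point("retrieve")
graph.add_edge("retrieve", "generate")
graph.add_conditional_edges("generate", check, {"supported": END, "not supported": "generate"})
app = graph.compile()
final = app({"question": "What is Milvus Lite?"})
print(final["generation"], final["attempts"])
```

Validating the wiring inside `compile` rather than at run time surfaces a missing edge before any model call is made.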

Conclusion

In this blog post, we showed how to build a RAG system using agents with LangChain/LangGraph, Llama 3, and Milvus. These agents give LLMs planning, memory, and tool-use capabilities, which can lead to more robust and informative responses.

Feel free to check out the code available in the Milvus Bootcamp repository. If you enjoyed this blog post, consider giving us a star on GitHub, and share your experiences with the community by joining our Discord.

This post is inspired by the GitHub repository from Meta with recipes for using Llama 3.

*This post was written by Stephen Batifol and originally published on Zilliz.com. We thank Zilliz for their insights and ongoing support of TheSequence.
