
Hello! Once a week, we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

And now…let's dive into some interesting links from this week.

AI prompt engineering: A deep dive

Some of Anthropic's prompt engineering experts—Amanda Askell (Alignment Finetuning), Alex Albert (Developer Relations), David Hershey (Applied AI), and Zack Witten (Prompt Engineering)—reflect on how prompt engineering has evolved, practical tips, and thoughts on how prompting might change as AI capabilities grow…

Thoughts on Research Impact in AI

Grad students often reach out to talk about structuring their research, e.g. how do I do research that makes a difference in the current, rather crowded AI space? Too many feel that long-term projects, proper code releases, and thoughtful benchmarks are not incentivized — or are perhaps things you do quickly and guiltily to then go back to doing 'real' research. This post distills thoughts on impact I've been sharing with folks who ask. Impact takes many forms, and I will focus only on making research impact in AI via open-source work through artifacts like models, systems, frameworks, or benchmarks. Because my goal is partly to refine my own thinking, to document concrete advice, and to gather feedback, I'll make rather terse, non-trivial statements…

Column-Stores vs. Row-Stores: How Different Are They Really?

Recent years have seen the introduction of a number of column oriented database systems, including MonetDB…and C-Store…The authors of these systems claim that their approach offers order of magnitude gains on certain workloads, particularly on read-intensive analytical processing workloads, such as those encountered in data warehouses…

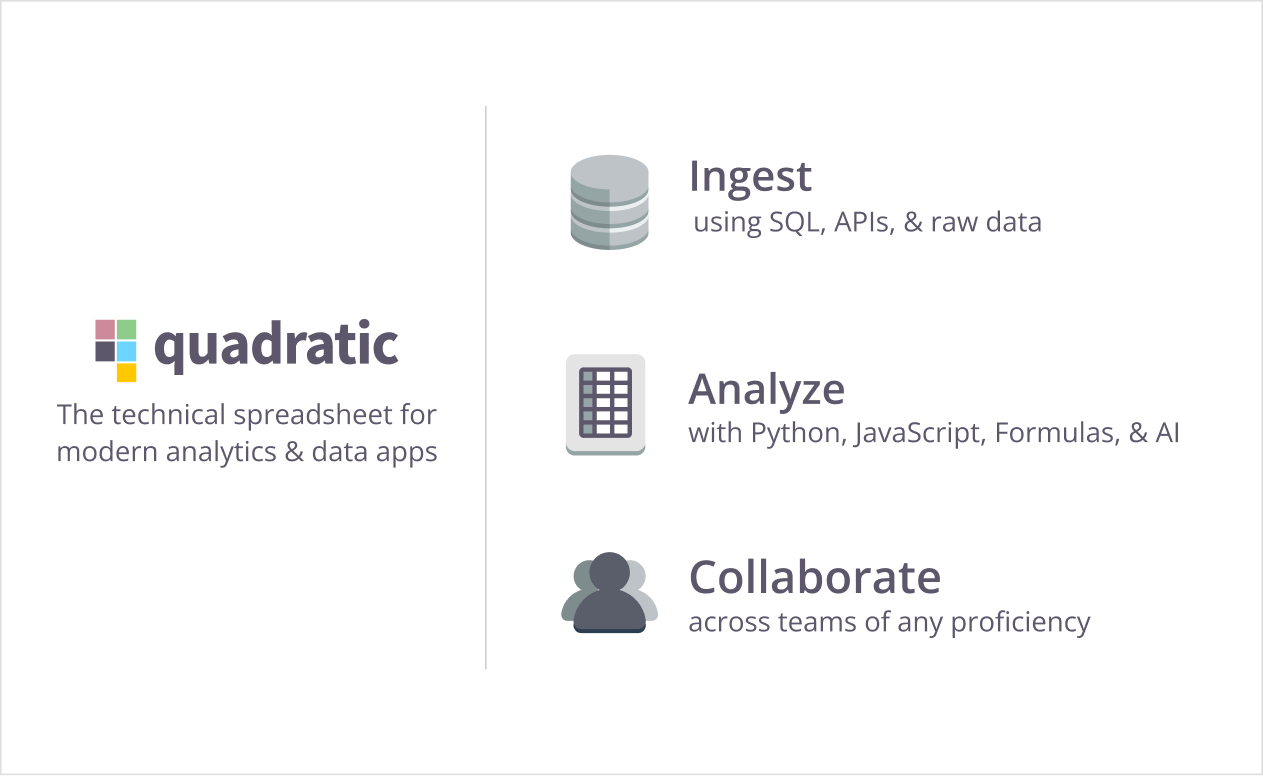

With Quadratic, combine the spreadsheets your organization asks for with the code that matches your team’s code-driven workflows. Powered by code, you can build anything in Quadratic spreadsheets with Python, JavaScript, or SQL, all approachable with the power of AI.

Use the data tool that actually aligns with how your team works with data, from ad-hoc to end-to-end analytics, all in a familiar spreadsheet. Level up your team’s analytics with Quadratic today.

* Want to sponsor the newsletter? Email us for details --> team@datascienceweekly.org

ggpubfigs - colorblind friendly color palettes and ggplot2 extensions

ggpubfigs is a ggplot2 extension that helps create publication ready figures for the life sciences. Importantly, ggpubfigs implements themes and color palettes that are both aesthetically pleasing and colorblind friendly…

Generative AI Use Case: Using LLMs to Score Customer Conversations

We recently spoke with Killian Farrell, Principal Data Scientist at insurance startup AssuranceIQ, to learn how his team built an LLM-based product to structure unstructured data and score customer conversations for developing sales and customer support teams…Read on to find out what they did, and what they learned!…

No Priors Podcast With Andrej Karpathy from OpenAI and Tesla

Andrej Karpathy joins Sarah and Elad in this week of No Priors. Andrej, who was a founding team member of OpenAI and the former Tesla Autopilot leader, needs no introduction. In this episode, Andrej discusses the evolution of self-driving cars, comparing Tesla's and Waymo’s approaches, and the technical challenges ahead. They also cover Tesla’s Optimus humanoid robot, the bottlenecks of AI development today, and how AI capabilities could be further integrated with human cognition. Andrej shares more about his new mission Eureka Labs and his insights into AI-driven education and what young people should study to prepare for the reality ahead…

How serious is your org about Data Quality? [Reddit Discussion]

I’m trying to get some perspective on how you’ve convinced your leadership to invest in data quality. In my organization everyone recognizes data quality is an issue, but very little is being done to address it holistically. For us, there is no urgency, no real tangible investments made to show we are serious about it. Is it just 2024, when everyone’s budgets and resources are tied up, or are we just unique in not prioritizing data quality? I’m interested in learning if you are seeing the complete opposite. That might signal I might be in the wrong place…

A simple recipe for model error analysis

Error analysis is a powerful tool in machine learning that we don’t talk about enough. Every prediction model makes errors. The idea of error analysis is to analyze the pointwise errors and identify error patterns. If you find error patterns, it can help improve and debug the model and better understand uncertainty….
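As a rough illustration of what "analyzing pointwise errors" can look like, here is a minimal sketch that averages absolute errors per group to surface an error pattern. It is not the linked post's recipe: the `segment` column, the toy numbers, and the helper name `mean_abs_error_by_group` are all hypothetical.

```python
from collections import defaultdict


def mean_abs_error_by_group(records, group_key):
    """Return the mean absolute error for each value of `group_key`."""
    totals = defaultdict(lambda: [0.0, 0])  # group -> [sum of errors, count]
    for row in records:
        error = abs(row["y_true"] - row["y_pred"])  # pointwise error
        totals[row[group_key]][0] += error
        totals[row[group_key]][1] += 1
    return {group: total / count for group, (total, count) in totals.items()}


# Hypothetical toy predictions, for illustration only.
records = [
    {"segment": "new", "y_true": 10.0, "y_pred": 14.0},
    {"segment": "new", "y_true": 12.0, "y_pred": 18.0},
    {"segment": "returning", "y_true": 20.0, "y_pred": 21.0},
    {"segment": "returning", "y_true": 22.0, "y_pred": 21.0},
]

errors = mean_abs_error_by_group(records, "segment")
worst = max(errors, key=errors.get)  # group with the largest average error
```

In this toy data the `new` segment has a much larger average error than `returning`, which is the kind of pattern that would prompt a closer look at the model or the data for that group.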

supertree - Interactive Decision Tree Visualization

supertree is a Python package designed to visualize decision trees in an interactive and user-friendly way within Jupyter Notebooks, Jupyter Lab, Google Colab, and any other notebooks that support HTML rendering. With this tool, you can not only display decision trees, but also interact with them directly within your notebook environment. Key features include: ability to zoom and pan through large trees, collapse and expand selected nodes, explore the structure of the tree in an intuitive and visually appealing manner…

Your guide to AI: September 2024

Welcome to the latest issue of your guide to AI, an editorialized newsletter covering the key developments in AI policy, research, industry, and startups over the last month…

R advantages over python

R has many advantages over python that should be taken into consideration when choosing which language to do DS with. When compiling them in this repo I try to avoid: Too subjective comparisons. E.g. function indentation vs curly braces closure. Issues that one can get used to after a while like python indexing (though the fact it starts from 0, or that object[0:2] returns only the first 2 elements still throws me off once in a while)….
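For readers less familiar with the indexing behavior mentioned above, here is a small sketch in Python (the list `letters` is just an illustrative example). Python indexes from 0 and slices exclude the end position, whereas in R indexing starts at 1 and `x[1:2]` includes both endpoints.

```python
letters = ["a", "b", "c", "d"]

first = letters[0]        # indexing starts at 0, so this is the first element
first_two = letters[0:2]  # the end index is exclusive: positions 0 and 1 only
```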

Data checks for estimators

Before an estimator in scikit-learn learns from data it first checks to see if it is appropriate for the model. Different estimators have different requirements which is why scikit-learn ships with some utilities to help double-check these properties. This video dives into that because it might also help you write better custom estimators yourself…

Misrepresented Technological Solutions in Imagined Futures: The Origins and Dangers of AI Hype in the Research Community

The dangers of technological hype are particularly relevant in the rapidly evolving space of AI. Centering the research community as a key player in the development and proliferation of hype, we examine the origins and risks of AI hype to the research community and society more broadly and propose a set of measures that researchers, regulators, and the public can take to mitigate these risks and reduce the prevalence of unfounded claims about the technology…

Five ways to improve your chart axes

A poor choice of axes for your chart can make it more difficult to understand, and in some cases, suggest misleading conclusions. In this blog post, we'll look at five ways to make better choices about your axes and stop relying on default settings…

Postgres Materialized Views from Parquet in S3 with Zero ETL

Data pipelines for IoT applications often involve multiple different systems. First, raw data is gathered in object storage, then several transformations happen in analytics systems, and finally results are written into transactional databases to be accessed by low latency dashboards. While a lot of interesting engineering goes into these systems, things are much simpler if you can do everything in Postgres…In this blog post, we’ll have a look at how you can easily set up a sophisticated database pipeline in PostgreSQL in a few easy steps…

* Based on unique clicks.

** Find last week's issue #562 here.


Learning something for your work? Reply to this email to find out how we can work 1-on-1 with you to speed up your learning.

Looking to get a job? Check out our “Get A Data Science Job” Course

It is a comprehensive course that teaches you everything related to getting a data science job based on answers to thousands of emails from readers like you. The course has 3 sections: Section 1 covers how to get started, Section 2 covers how to assemble a portfolio to showcase your experience (even if you don’t have any), and Section 3 covers how to write your resume.

Promote yourself/organization to ~63,000 subscribers by sponsoring this newsletter. 35-45% weekly open rate.

Thank you for joining us this week! :) Stay Data Science-y!

All our best,

Hannah & Sebastian
