The Sequence Chat: Lewis Tunstall, Hugging Face, On Building the Model that Won the AI Math Olympiad

Details about NuminaMath, its architecture, training process and even things that didn't work.

Bio: Lewis Tunstall is a Machine Learning Engineer in the research team at Hugging Face and is the co-author of the bestselling book “NLP with Transformers”. He has previously built machine learning-powered applications for start-ups and enterprises in the domains of natural language processing, topological data analysis, and time series. He holds a PhD in Theoretical Physics, was a 2010 Fulbright Scholar and has held research positions in Australia, the USA, and Switzerland. His current work focuses on building tools and recipes to align language models with human and AI preferences through techniques like reinforcement learning.

I currently lead the post-training team at Hugging Face, where we focus on providing the open-source community with robust recipes to fine-tune LLMs through libraries like TRL. In a previous life, I was a theoretical physicist researching the strong nuclear force and its connection to dark matter. I accidentally stumbled into AI via my colleagues in experimental physics, who were very excited about applying a (new at the time) technique called “deep learning” to particle collisions at the Large Hadron Collider. I was surprised to learn that around 100 lines of TensorFlow could train a neural net to extract new signals from collision data, often much better than physics-derived features. This prompted me to take part in a Kaggle competition with a few physics friends, and I’ve been hooked on AI ever since!

🛠 ML Work

This project was a collaboration between Hugging Face and Numina, a French non-profit that was inspired by the AI Math Olympiad (AIMO) to create high-quality datasets and tools for the open-source community. Although there are several open-weight models for mathematics, the training datasets are rarely, if ever, made public. We teamed up with Numina to bridge this gap by tackling the first AIMO progress prize with a large-scale dataset of around 850,000 math problem-solution pairs that Numina had been developing prior to the competition. We saw winning the AIMO competition as a great way to show the community the power of high-quality datasets, and I’m very happy to see that it worked out well!

That’s a great question, and I suspect the answer will depend on how straightforward it is to integrate multiple external verifiers like Lean4 and Wolfram Alpha for models to check their proofs. The current systems are trained to interface with a single external solver, but I expect the next iteration of DeepMind’s approach will involve a generalist model that can use multiple solvers, like how LLMs currently use tools for function calling.
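To make “checking a proof” concrete, here is a minimal Lean 4 illustration of our own (not taken from any of the systems mentioned): each statement is accepted only because Lean's kernel verifies the proof term, so a model that emitted a wrong proof would get a compile error rather than a silently accepted mistake.

```lean
-- Checked by evaluation: both sides reduce to the same natural number.
theorem demo_two_add_two : 2 + 2 = 4 := rfl

-- Checked by appealing to an existing lemma from Lean 4 core.
theorem demo_add_comm (a b : Nat) : a + b = b + a := Nat.add_comm a b

-- A false claim such as `2 + 2 = 5 := rfl` would be rejected at compile time.
```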

This model was largely chosen due to the constraints of the competition: on the one hand, only pretrained models that were released before February 2024 could be used, and on the other hand, each submission had to run on 2 T4 GPUs in under 9 hours (not easy for LLMs!). Together, these constraints made DeepSeekMath 7B the best choice at the time, although there are now much better math models, like those from Qwen, which would likely score even better in the competition.
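The hardware constraint goes a long way towards explaining the model size. Below is a back-of-the-envelope sketch under assumptions that are ours, not the interview's: a nominal 16 GB per T4, fp16 weights at 2 bytes per parameter, a nominal 7 billion parameters, and a hypothetical test set of 50 problems sharing the 9-hour budget evenly.

```python
# Back-of-the-envelope budget for the AIMO hardware constraint (2 x T4, < 9 hours).
# Assumptions (not from the interview): nominal 16 GB per T4, fp16 weights
# (2 bytes per parameter), a nominal 7e9 parameters, and 50 problems per run.

T4_MEMORY_GB = 16            # nominal memory of one NVIDIA T4
NUM_GPUS = 2
TIME_LIMIT_HOURS = 9
NUM_PARAMS = 7_000_000_000   # "7B", nominal
BYTES_PER_PARAM = 2          # fp16
NUM_PROBLEMS = 50            # assumed number of problems per submission


def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def seconds_per_problem(time_limit_hours: float, num_problems: int) -> float:
    """Average wall-clock budget per problem if time is split evenly."""
    return time_limit_hours * 3600 / num_problems


weights = weight_memory_gb(NUM_PARAMS, BYTES_PER_PARAM)
total = T4_MEMORY_GB * NUM_GPUS
headroom = total - weights  # left for the KV cache, activations and CUDA overhead
budget = seconds_per_problem(TIME_LIMIT_HOURS, NUM_PROBLEMS)

print(f"weights: {weights:.1f} GB of {total} GB, headroom {headroom:.1f} GB")
print(f"time per problem: {budget:.0f} s (~{budget / 60:.1f} min)")
```

By the same arithmetic, a 13B model would need about 26 GB for its fp16 weights alone, leaving little of the 32 GB for the KV cache during long generations, which suggests why a model around 7B was a natural fit.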

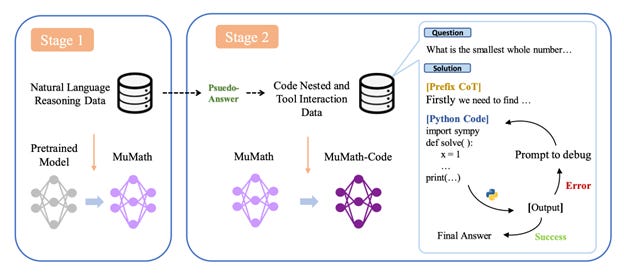

We experimented with various fine-tuning recipes, but the one that gave the best results was based on an interesting paper called MuMath-Code. This paper combines two insights that have been shown to improve the reasoning capabilities of LLMs: Chain-of-Thought (CoT) templates that provide the intermediate steps needed to obtain the correct answer, and tool-integrated reasoning (TIR), where the LLM is given access to a tool like Python to run computations. Prior research had explored each one independently, but MuMath-Code showed that you get the best results by performing two-stage training: first on the CoT data (to learn how to solve problems step by step), followed by training on the TIR data (to learn how to outsource part of the problem to Python). We applied this approach with DeepSeekMath 7B to the datasets Numina created and found it worked really well on our internal evals and the Kaggle leaderboard!
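Tool-integrated reasoning relies on a loop in which the model writes Python, the code is executed, and the printed result is fed back into the context before generation continues. Here is a minimal sketch of one such step; the fencing convention (a python fence for the model's code, an output fence for the result) and all function names are our assumptions, not the exact NuminaMath or MuMath-Code format.

```python
import re
import subprocess
import sys
from typing import Optional

FENCE = "`" * 3  # triple backtick, built up so it never closes a surrounding fence
# Assumed convention: model code sits in a python fence, results go back in an output fence.
CODE_BLOCK = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)


def extract_last_code_block(text: str) -> Optional[str]:
    """Return the last Python code block in the model's output, if any."""
    blocks = CODE_BLOCK.findall(text)
    return blocks[-1] if blocks else None


def run_python(code: str, timeout: float = 10.0) -> str:
    """Execute code in a separate interpreter and capture what it prints.

    A subprocess with a timeout is only a minimal safeguard; running
    model-generated code for real calls for proper sandboxing.
    """
    try:
        result = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return "TimeoutError"
    # Errors are surfaced to the model too, so it can try to fix its code.
    return result.stdout.strip() if result.returncode == 0 else result.stderr.strip()


def tir_step(model_output: str) -> str:
    """Run the latest code block and append its output for the next generation step."""
    code = extract_last_code_block(model_output)
    if code is None:
        return model_output  # nothing to execute; the model answered in prose
    output = run_python(code)
    return f"{model_output}\n{FENCE}output\n{output}\n{FENCE}\n"
```

The extended text is then passed back to the model, which either writes more code or states its final answer; the CoT stage teaches the step-by-step reasoning, and the TIR stage teaches when to hand a computation to this loop.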

Our training recipe was tailored to competitive mathematics, where one has a known answer for each problem. For competitive physics or chemistry problems, I suspect the method would generalise provided one integrates the relevant tools for the domain. For example, I suspect that in chemistry one requires access to a broad range of tools in order to solve challenging problems.
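Having a known answer for each problem is what makes automatic evaluation straightforward. Here is a minimal sketch of such a check. It assumes that solutions put their final answer in LaTeX `\boxed{...}`, a common convention for math datasets that the interview does not state. It also uses a deliberately simple exact-match comparison rather than full mathematical equivalence.

```python
from typing import Optional


def extract_boxed_answer(solution: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a solution, handling nested braces."""
    marker = "\\boxed{"
    start = solution.rfind(marker)
    if start == -1:
        return None
    begin = start + len(marker)
    depth = 1
    for j in range(begin, len(solution)):
        if solution[j] == "{":
            depth += 1
        elif solution[j] == "}":
            depth -= 1
            if depth == 0:
                return solution[begin:j].strip()
    return None  # unbalanced braces: treat as no answer


def is_correct(solution: str, reference: str) -> bool:
    """Exact-match check against the known answer, ignoring spaces."""
    predicted = extract_boxed_answer(solution)
    if predicted is None:
        return False
    return predicted.replace(" ", "") == reference.replace(" ", "")
```

For other domains, the same pattern holds as long as there is a reference answer to compare against; the harder part is the domain-specific tooling mentioned above.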

Our winning recipe was quite simple in the end and involved applying two rounds of supervised fine-tuning (SFT) to the DeepSeekMath 7B model: first on the CoT data, then on the TIR data.

For tools, we used the TRL library to train our models on a single node of 8 x H100 GPUs. For evaluations and the Kaggle submissions, we used vLLM, which provides fast inference for LLMs.
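The two rounds of SFT can be pictured as two datasets trained in sequence, with the second round initialised from the first round's weights. The sketch below only prepares that plan as user/assistant chat examples; the toy examples, stage names and checkpoint paths are hypothetical, and no training is run.

```python
from typing import Dict, List

# Hypothetical toy examples standing in for the (much larger) CoT and TIR datasets.
COT_EXAMPLES = [
    {
        "problem": "What is 3 + 4 * 2?",
        "solution": "Multiplication comes first: 4 * 2 = 8, then 3 + 8 = 11. \\boxed{11}",
    },
]
TIR_EXAMPLES = [
    {
        "problem": "What is the sum of the integers from 1 to 100?",
        "solution": "Let's use Python: print(sum(range(1, 101))) outputs 5050. \\boxed{5050}",
    },
]

Message = Dict[str, str]


def to_messages(example: Dict[str, str]) -> Dict[str, List[Message]]:
    """Convert a problem-solution pair into a user/assistant chat example."""
    return {
        "messages": [
            {"role": "user", "content": example["problem"]},
            {"role": "assistant", "content": example["solution"]},
        ]
    }


def build_two_stage_plan(base_model: str) -> List[dict]:
    """Describe the two SFT rounds: CoT first, then TIR starting from the CoT weights."""
    stages = [("stage1_cot", COT_EXAMPLES), ("stage2_tir", TIR_EXAMPLES)]
    plan = []
    init_from = base_model
    for name, examples in stages:
        output_dir = f"{name}-checkpoint"  # hypothetical output location
        plan.append(
            {
                "name": name,
                "init_from": init_from,
                "output_dir": output_dir,
                "dataset": [to_messages(ex) for ex in examples],
            }
        )
        init_from = output_dir  # the next round fine-tunes the weights just produced
    return plan


for stage in build_two_stage_plan("DeepSeekMath 7B"):
    print(stage["name"], "starts from", stage["init_from"])
```

In practice each stage's list could be wrapped with `datasets.Dataset.from_list` and passed to TRL's `SFTTrainer`, which in recent versions accepts this conversational `messages` format; the exact trainer settings used for the winning model are not given here.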

The main ideas we tried that didn’t make it into the final submission were the following:

💥 Miscellaneous – a set of rapid-fire questions

As a former physicist, I am excited about the advances being made in AI4Science and specifically in simulating physical processes. I quite like the way Chris Bishop frames this as the “fifth paradigm” of scientific discovery and am hopeful this will help us find answers to some rather thorny problems.

It’s always risky to predict the future, but I think it’s clear that the current systems are lacking several capabilities like episodic memory and the ability to plan over long horizons. Whether these capabilities will emerge at larger scales remains to be seen, but history generally shows that one shouldn’t bet against deep learning!

My current view is that the main barrier for models to generate novel ideas in mathematics and the natural sciences is having some means to verify correctness and compatibility with experiment. For mathematics, I suspect that projects like Lean4 will play a key role in bridging the gap from proving what is known towards generating novel theorems which are then verified automatically by the model for correctness. For the natural sciences, the challenge appears to be much harder since one needs to both synthesize vast amounts of experimental data and possibly run new experiments to validate new ideas. Nevertheless, there are already hints from works like Sakana’s AI Scientist that formulating novel ideas in ML research is possible, albeit under rather stringent constraints. It will be exciting to see how far this can be pushed into other domains!

My favorite mathematician is John von Neumann, mostly because I didn't really understand quantum mechanics until I read his excellent textbook on the subject.
