Horrific-Terrific - A contagious culture stain

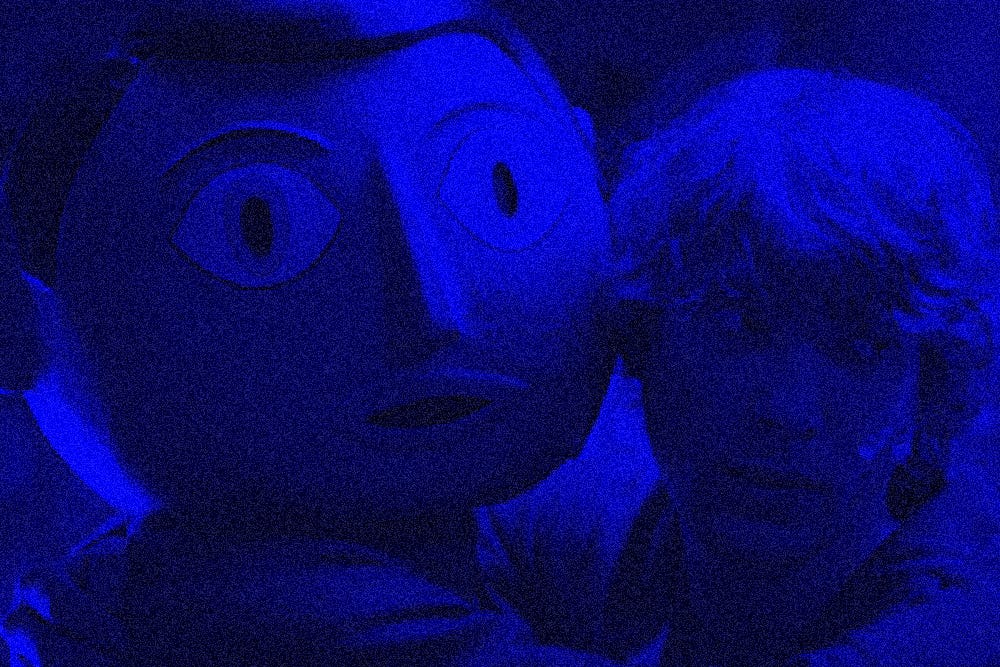

The other night I rewatched Frank with my girlfriend. It’s a movie about a young aspiring musician who stumbles his way into joining a very cool experimental band and recording an album with them in the idyllic Irish countryside. The story follows him as he systematically destroys the band with his dithering mediocrity and insatiable hunger to ‘be likeable’ and engage wider audiences. Throughout the movie the Bad Musician posts hollow platitudes on 2014 Twitter, like a Russian bot trying to dominate the news cycle with meaningless slop, e.g. “Ham and cheese panini. #livingthedream”. One of his bandmates refers to him as “sick” at one point — probably the only line she has in the whole movie — as if his putrid sucking void of talent were infectious. In fact it was: the Bad Musician bewitched Frank, the frontman, into thinking that commercialisation was the only way more than a handful of people would ever want to watch them perform. By their first proper show, Frank had become a fizzling sack of nerve-endings, assuring everyone that the Bad Musician had “fixed everything”, when in fact all he had done was make things worse by hoovering all the joy and whimsy out of the band’s music for his own gain.

Watching the movie, you find yourself willing the Bad Musician to do the right thing and disappear forever, or at least promise never to make music again and maybe only handle the financial side of things or something.

This is very often how I feel about generative AI: it is an unimaginative producer of content slurry and a contagious stain on culture that saps way too much energy while giving hardly anything back. I’m starting to feel that not only is the energy consumption of generative AI unacceptable, untenable, and despicable — it also represents a fundamental misunderstanding of what energy is even for. The energy the earth provides is meant to keep plants and animals (including us) alive.
You grow food to eat, to have enough energy to both stay alive and enjoy yourself. But, because we embrace capitalism as The One True Way, we also convert energy into labour, and labour into products. This is where we hit a wall: there’s only so much energy we can physically extract from the earth, but there is absolutely no limit to the desire for growth under capitalism. The idea is to grow forever.

All of this has recently led me to question the efficiency of GPTs: is there a reason why they take so much energy to train and to use, beyond them being really massive and powerful? Like, is there some underlying inefficiency in how they work? In my impatient googling I was only able to find complaints from developers saying that GPT-4 is too slow, but no real details about the underlying code that makes these models work because, of course, despite their name, OpenAI famously do not make open-source models.

In my search, however, I did come across this strange LessWrong article, which is basically just an effective altruist smoothie bar of acronyms and specs: there is a concern that after GPT-5, model training of this kind will slow down massively, because there is no data centre big enough to train anything more powerful than that, and it would take years to build one. The title of this LessWrong piece is “Scaling of AI training runs will slow down after GPT-5”, a statement characterised in the comments as silly and unreasonable, even by the author. The general response here is not ‘ah well, I guess we can just slow down the production of GPUs and be happy with the current size and power of our slop machines’. Rather, it’s that ‘decentralised training’ — where you use more than one data centre at a time — is a ‘solved problem’, and therefore the inevitable way forward.
There is an AI Safety Bill being spat out of California’s legislative sphincter as we speak; it’s really doing the rounds because Silicon Valley technologists are worried that doing safety checks on AI models before releasing them to the public is a gruesome governmental overreach that will only stifle innovation — a very original take from those guys. The lesser-known part of the bill is about CalCompute, a state-owned cluster of public compute: open access to compute power that currently only a handful of private companies enjoy. While I’m all for taking power out of the hands of big tech firms via public infrastructure, this is still, essentially, an investment in more data centres. And again: do we really need more of those?

These conversations only naturalise the Bad Musician as a talented member of the band, when really he is a parasitic worm trying to get famous. I was very happy without the Bad Musician, actually. EAs and thoroughly lobbied politicians have let him in and allowed him to sour their creative milks, if they ever had any. Nowhere in their conversations about public compute and the energy required to train GPT-5 is there any thought about whether we as a species should even seek to extract and use that much energy in the first place. It is automatically presumed to be worth it. I look at the 4D chess these EAs play to make this make sense, and then at the AI influencer who generated a clone of Flappy Bird in seconds, and wonder how it can possibly be worth it. The instant Flappy Bird clone is the smallest, most inconsequential tip of an unfathomable iceberg, obscuring so much effort, labour, and energy consumption: a silly clone, of an already silly game, that no one will play. So in this sense, where we look for true use-cases we find only a black hole. It absorbs energy and research hours at an alarming rate.
There’s a lot of time and effort being put into alignment, for instance, which is the pursuit of aligning a machine with the goals and values of the humans who interface with it. This adds warning labels to the Bad Musician instead of straightforwardly asking him why he thinks his smooth toddler-brain music is worth consideration. Now, with alignment, I understand that we don’t want the casual user to inadvertently subject themselves to racist content via ChatGPT, but focussing on that only protects us from the lowest-risk and lowest-impact outcomes. I struggle to understand how alignment tackles the ongoing issue of AI spam polluting our information environment, such as Shrimp Jesus, which as a concept is not offensive or harmful, but is part of a coordinated effort to deceive people into clicking on things. Or how it tackles the potential for extremely believable mis/disinformation, which is something that people have been spreading for more than a century now. Bit hard to break that habit with a wet, half-baked idea like alignment. Alignment is a one-dimensional approach to an inter-dimensional problem.

In this New Atlantis piece, Brian Boyd says, “‘We’ all know that AI needs ethics. But who are ‘we’? Who will decide the ways AI works, the ways we adopt it and the ways we reject it?” The one-size-fits-all ‘we’ doesn’t exist, and Silicon Valley certainly doesn’t speak for everyone. When do we work out what ‘we’ means, and get clarity on who we are aligning for? Can we (lol) really align models to the values of all cultures somehow, or is all this a waste of energy and a distraction?

I urge you not to invite the Bad Musician into your group. Yes, he’s a pallid ginger man and maybe you feel sorry for him, but that doesn’t mean he gets creative licence over your life’s work. He’s a tool. Just ask him to play C, F and G like in the movie and that’s it. Otherwise he will dehydrate you until you are a crispy husk. Thank you.

💌 Thank you for subscribing to Horrific/Terrific.
If you need more reasons to distract yourself, try looking at my website, or maybe this ridiculous zine that I write, or how about these silly games that I’ve made. Enjoy!
