Astral Codex Ten - Book Review: Deep Utopia

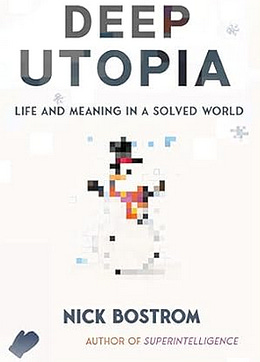

I.

Oxford philosopher Nick Bostrom got famous for asking “What if technology is really really bad?” He helped define ‘existential risk’, popularize fears of malevolent superintelligence, and argue that we were living in a ‘vulnerable world’ prone to physical or biological catastrophe.

His latest book breaks from his usual oeuvre. In Deep Utopia, he asks: “What if technology is really really good?”

Most previous utopian literature (he notes) has been about ‘shallow’ utopias. There are still problems; we just handle them better. There’s still scarcity, but at least the government distributes resources fairly. There’s still sickness and death, but at least everyone has free high-quality health care.

But Bostrom asks: what if there were literally no problems? What if you could do literally whatever you wanted?¹ Maybe the world is run by a benevolent superintelligence who’s uploaded everyone into a virtual universe, and you can change your material conditions as easily as changing desktop wallpaper. Maybe we have nanobots too cheap to meter, and if you whisper ‘please make me a five hundred story palace, with a thousand servants who all look exactly like Marilyn Monroe’, then your wish will be their command. If you want to be twenty feet tall and immortal, the only thing blocking you is the doorframe.

Would this be as good as it sounds? Or would people’s lives become boring and meaningless?

II.

We can start by bounding the damage. Our deep utopia will know how to wirehead people safely. So worst-case scenario, if you absolutely can’t figure out anything else to do, you live in perfect bliss forever.

Bostrom urges us not to reflexively turn up our noses at this outcome. Wireheading grosses us out because our best approximations for it - drugs, porn, etc - are tawdry and shallow. Actually-good wireheading would be neither.
You could walk through the woods at sunrise, experiencing a combination of the joy you felt gazing on the face of your newborn first child, the excitement Einstein experienced upon seeing the first glimmers of relativity, and the ecstasy of St. Teresa as she gazed upon the face of God. That afternoon, you could walk somewhere else, and feel an entirely different artisanal combination of blisses. “It feels so good that if the sensation were translated into tears of gratitude, rivers would overflow.”

If wireheading seems too meaningless, you can add in wireheaded-meaning. People often say that an MDMA trip or mystical vision was the most meaningful experience of their lives. It would be trivial for our Deep Utopians to hack your brain to see a world in a grain of sand or heaven in a wildflower.

We're gonna mean so much, you might even get tired of meaning. And you'll say 'please, please, it's too much meaning. We can't take it anymore, Professor Bostrom, it's too much!'

So the problem isn’t necessarily that we’ll feel bored and meaningless. The problem is that maybe we’ll feel happy and meaning-laden, but our feelings will be objectively wrong and contemptible. Wireheading feels like cheating, and some people will demand a non-cheating solution, and we won’t have a full utopia until those people are satisfied too.

Since this is a question of how not to cheat, a lot depends on how exactly we define cheating. Bostrom proposes a series of progressively more complicated solutions for people with progressively stricter anti-cheating standards.

If you’re only concerned about avoiding wireheading, you could spend Utopia appreciating art. The Deep Utopians could hack your brain to give you the critical refinement of Harold Bloom or some other great art-appreciator, and you could spend eternity reading the Great Books and having extremely perspicacious opinions about them. Plenty of scholars do that today, and nobody thinks their lives are meaningless.
In fact, why stop at Bloom? The Utopians could hack you into some kind of superbeing who can appreciate superart as far beyond current humanity as Shakespeare is beyond a tree frog.

If you live a billion years, do you run out of Art to appreciate? Not just in the sense of exhausting humanity’s store of Art (superintelligent art generator AIs can always add more art faster than you can exhaust it), but in the sense of exhausting the space of possible Art? Bostrom is unsure. He suggests that since we’re only using Art to satisfy ourselves that we’re not cheating - rather than demanding that the Art itself be interesting - we can change our interestingness criteria a little whenever we run out of Art, helping us distinguish ever finer gradations.

(All of the above goes for appreciating the profound truths of Science too. We assume that all worthwhile science has already been discovered - or that everything left requires a particle accelerator the size of the Milky Way plus an AI with a brain the size of Jupiter to interpret the results. You will not be asked to help, but you can still try to contemplate the already-discovered truths and bask in their elegance.)

What if that still counts as cheating?

Consider the life of some stereotypical British aristocrat. He wakes up, reads the morning paper, has some tea. He goes on a walk with his dog. He gets home and plays snooker or cricket or crumpets (unless some of those aren’t games; I’m not really up on my Britishisms). He reads a Jane Austen novel on his comfy chair by the fire. Then he goes to bed. It’s not the most fascinating life, but he’s probably pretty happy, and nobody accuses him of wireheading.

What if our anti-cheating criteria are even stricter than this? What if we want drama, excitement, the possibility of failure?

Here Bostrom turns to sports and games (broadly defined, including activities like climbing Everest). Climbing Everest is dramatic and exciting; Deep Utopians can enjoy it just as much as we can. It’s true that they could always just ask their nanobot-genies “Teleport me to the top of Everest” or even “Turn me into a superman who can climb Everest in half an hour without breaking a sweat”. But even today, we can hire a helicopter to take us to the summit. It doesn’t matter; everyone knows this is cheating. You only get to feel good about yourself if you climb it the old-fashioned way.

We have to go stricter! What about “you have to make a mark on the world” or “you have to make a positive difference”?

Here I start to find Bostrom’s solutions a little gimmicky. You could have Person A pledge to be sad unless Person B climbs Everest (if they can’t honor this pledge on their own, they could reverse wirehead into being sad). Then Person B has to climb Everest in order to make a difference and save Person A! Why would Person A agree to this scheme? Because they’re also making a positive difference in the world, by helping provide their fellow non-cheaters with purpose! (A more natural version of this might look like participating in a sporting competition that your community cares about, like the World Cup.)

Even stricter!
What if you need to make a non-gimmicky positive difference that isn’t downstream of someone else’s decision to artificially provide you with purpose? The best the book can do is suggest religious or pseudo-religious rituals. We can’t build robots to go to church for us on Easter or fast for us on Yom Kippur, nor to live decent lives free of sin. If you’re an atheist, maybe you can get a similar effect by honoring your ancestors - perhaps your hard-working grandmother wanted to be remembered by her descendants, and only you can fulfill her last request.

Can we go further than that? A unique positive contribution? An interesting contribution? One that directly affects the lives of lots of non-supernatural non-dead people?

Maybe not. But most people already don’t do these things. Most of us aren’t revolutionizing societies or pursuing social missions. We’re just sort of getting by. You can still do that in Deep Utopia, and live a life of constant bliss.

III.

That’s the content. Before I discuss my thoughts, a few words about the form.

If you told Zizek that he had to write a work of fiction, you would get Deep Utopia.

The book takes the form of a story. The story is: some young people go to a lecture series by Nick Bostrom. At the lecture, Bostrom says [commence 468 pages of Bostrom describing his theory of purpose in utopia]. Then the young people go to a party, then go home. The end.

When there are little side boxes going into more detail about a certain topic, they’re described as “handouts” at the “lecture”. Occasionally, not-especially-wacky things happen, like the fire alarm goes off, or the lights go out. Sometimes when Bostrom wants to play devil’s advocate, he puts his question in the mouth of one of the students. Otherwise, it’s pretty much what you would expect from a 468-page dense philosophy tome with a fig leaf of “And some students went to a lecture where Nick Bostrom said…” at the beginning.

Except the part about the fox!
In the frame story, Bostrom has assigned homework: students need to read a book (actually a series of epistles) called Feodor The Fox. This side story (there are ~50 pages of it, scattered throughout the book) describes a society of woodland animals. One of the animals, the titular Feodor, wants to make the world a better place, but isn’t sure how. He teams up with some other animals, especially a pig, to try to figure it out. They come up with an interesting scheme, but never really get anywhere. The end. I can only draw the vaguest of connections between the story and the main text.

Oh, and the space heater. This is the other “homework” story. When the world’s richest man dies, he leaves his entire fortune to a foundation for the benefit of his cheap space heater - he says the space heater actually improved his life, whereas everyone else was backbiting and ungracious. The foundation trustees are stuck with the problem of figuring out how to use hundreds of billions of dollars to benefit a space heater which doesn’t, technically, have any preferences. They decide to add an AI to the heater, sort of kind of uplifting it to sentience. Gradually the space heater becomes superintelligent, maybe even divine. At the end, an author mouthpiece character asks: what if, at the very beginning, the AI-augmented space heater had refused the uplifting? What if it asked to be disconnected from the AI so it could remain a space heater? Would it have been ethical for the trustees to agree/refuse? Here it’s more obvious what Bostrom was trying to teach. But it sure was a strange story.

Bostrom’s work has attained a cult status, and it felt at times like he was leaning into it. This was a mystical text, full of hidden knowledge to those willing to explore its secrets.

I was able to recognize a few unnamed or only-obliquely-named characters at the lecture. One was David Pearce, who I profiled here.
Another, “Nospmit”, must be a reference to this Oxford philosopher, but I don’t know enough about him to understand why the allusion made sense. Nor do I understand why Bostrom’s students were named “Kelvin”, “Firafix”, and “Tessius”, nor why the lectures were being held in the “Philip Morris Auditorium” (later renamed the “Exxon Auditorium” (later renamed the “Enron Auditorium”)) - is this a reference to effective altruism’s run-ins with FTX? I honestly expected that the end of the book would have some big reveal, like that all of this was happening ten billion years in the future in a simulation created by the woodland animals or something. Maybe this was the intent and I just missed it. Still, the book’s mysteries will have to await a better kabbalist than I.

I probably sound critical here, but overall I think this was a good (albeit weird) decision. It’s hard to make people read a 468-page philosophy book, and the frame story and occasional woodland-animal-excursions provided a nice distraction.

I also appreciated Bostrom’s prose. Yes, a lot of it was typical analytic philosophy “let’s spend five pages analyzing the subtle differences between ‘meaning’ and ‘purpose’”. But when he wants to write well, he writes well. For example, here’s a passage on whether life is more meaningful during childhood (because meaning depends on novel experiences, and the fewer previous experiences you’ve had, the more novel ones you get):

I couldn’t help comparing Deep Utopia to Will MacAskill’s book What We Owe The Future. Both MacAskill and Bostrom are in a weird, almost unprecedented position - Oxford philosophers suddenly thrust onto the world stage by the success of the effective altruism movement. MacAskill got famous and decided to write an Official Important Person Book and promote it on the world stage. Bostrom got famous and decided he didn’t need to pretend to be normal anymore. As a result, Deep Utopia feels less like an academic paper, and more like the sort of things one of the great philosophers of the past might have written, back in the days when philosophical tracts could include a character called Stupidus who secretly represented the Pope.

My biggest problem with all of this is that this book was crying out for fictional stories set in Deep Utopia. The anti-cheating focus revolved around whether we could have a utopia that we found narratively satisfying. But Bostrom cashed this out in philosophical analyses of what narrative satisfaction meant and whether it was possible. I would accept this from other philosophers who are too boring and conformist to digress into fiction. But Bostrom taunted us with lots of perfectly-fine fictional short stories, none of which were in utopia (unless one of them was secretly in utopia and we the reader were supposed to figure this out) and none of which demonstrated any of the principles he talked about.

I consider this an important failure. It’s bad enough that Deep Utopia didn’t include such a story. But narratizability is a fair Near Mode test of Bostrom’s vision, and I’m not sure if I, having finished his book, could write a story about people in a deep utopia living lives which we (from the outside) recognize as happy and meaningful. I can imagine a few elements - there would be sports, and communities, and ritual - but I find myself boggling at the difficulty of imagining a world without limitations and how all of its parts would interact.

IV.

Partly this is because I don’t know if Deep Utopia goes far enough.

For example, Bostrom thinks a deep utopia would still have sports available as a distraction / source of meaning. I’m not so sure.

Consider weightlifting. Your success in weightlifting seems like a pretty straightforward combination of your biology and your training. Weightlifting retains its excitement because we don’t fully understand either. There’s still a chance that any random guy could turn out to have a hidden weightlifting talent. Or that you could discover the perfect regimen that lets you make gains beyond what the rest of the world thinks possible.

Suppose we truly understood both of these factors. You could send your genes to 23andMe and receive a perfectly-accurate estimate of your weightlifting potential. And scientists had long since discovered the perfect training regimen (including the perfect training regimen for people with your exact genes/lifestyle/limitations). Then you could plug your genotype and training regimen into a computer and get the exact amount you’d be able to lift after one year, two years, etc. The computer is never wrong.

Would weightlifting really be a sport anymore? A few people whose genes put them in the 99.999th percentile for potential would compete to see who could follow the training regimen most perfectly. One of them would miss a session for their mother’s funeral and drop out of the running; the other guy would win gold at whatever passed for this society’s Olympics. Doesn’t sound too exciting.

A team sport like baseball or soccer would be harder to solve. Maybe you’d have to resort to probabilistic estimates; given these two teams at this stadium, the chance of the Red Sox winning is 78.6%, because the model can’t predict which direction some random air gusts will go. I guess this is no worse than having Nate Silver making a betting model.
But on the individual level, it’s still a combination of your (well understood) genes and (well understood) training regimen.

But this is assuming that something like genetics stays relevant. Suppose we’re all posthumans in robot bodies. Then your ability to hit a ball depends entirely on how well-constructed your robot body is. The richest guy gets the best robot body and inevitably wins the game. Or even if we’re not in robot bodies, we’ve probably at least been heavily genetically engineered, or genetically selected. Or even if we aren’t, someone who wants their kid to grow up to be a star athlete can get told exactly which person to marry for the right gene combo, and anyone whose parents don’t do this is as doomed as a steroid-free Tour de France contestant.

It’s not just that the future will need some kind of Luddite surveillance state to make sports work out without unfair advantages. It’s that it’s hard to even define what an unfair advantage is at this point, and almost all sporting “talent” will come from rules-lawyering the definitions.

(Compare to the fear that if intersex people are allowed to enter women’s sports today, cis women won’t be able to keep up. This is interesting only because intersex is a rare piece of biology that we understand and can easily notice; once every piece of biology is like that, it will all be equally controversial and seem equally unfair.)

What about religion, Bostrom’s other holdout? What if, after we all have IQ one billion, we can just figure out which religion is true? If it’s atheism, the whole plan is a no-go. But if it’s some specific religion, that’s almost as bad. Imagine a world where religion has been emptied of its faith and mystery, and we know exactly how each act of faith figures into the divine economy. Going to church would be no more meaningful than doing our taxes - another regular ritual we perform to appease a higher power who will punish us if we don’t.

How could you pray to God if you had no unmet needs? How could you confess and atone, if you could hack the sinfulness out of your utility function through neurotechnology?

When I think of questions like these, I am less optimistic about Bostrom’s solutions - and I wish even harder that he had given us some fiction where we got to see his world in action.

The only sentences in Deep Utopia that I appreciated without reservation were a few almost throwaway references to zones where utopia had been suspended.

Suppose you want to gain meaning by climbing Everest, but it wouldn’t count unless there’s a real risk of death. That is, if you fall in a crevasse, you can’t have the option to call on the nanobot-genies to teleport you safely back to your bed. Bostrom suggests - then doesn’t follow up on - utopia-free zones where the nanobot-genies are absent. If you die in a utopia-free-zone, you die in real life.

This is a bold proposal. What happens if someone goes to the utopia-free zone, falls in a crevasse, and as they lie dying, they shout out “No, I regret my choice, please come save me!”? You’d need some kind of commitment mechanism worked out beforehand (a safe word?). Should it be legal to have safe-word-less, you-really-do-die-if-you-fall zones? Tough question.

(Many philosophers have said that the most human activity - maybe even the highest activity - is politics. Bostrom barely touches on this, but it seems like another plausible source of meaning in deep utopia. No matter how obsolete our material concerns, there will still be open issues like the one above.)

What if you regret learning the answer to the God question, and you want to live in a state of ignorance - a place where you can still have blind faith? Can you go to a utopia-free-zone where they erase that knowledge from your head, and dial your IQ back to 100 so you can’t figure it out again on your own?
If you regret learning the secrets of weightlifting, can you go to a utopia-free-zone where nobody really understands genetics or fitness regimens, everyone’s in a randomly selected biological body, and all you can do is lift and hope?

At this point, why not just go all the way? Do the Deep Utopians have their Amish? When you get tired of being blissed out all the time, can you go to their version of Lancaster, Pennsylvania, and do heavy farm labor, secure in the knowledge that you’ll go hungry if the corn doesn’t grow?

Or is that the wrong way to think about it? Is it less of an Amish farming village than a virtual reality sim? Want meaning, struggle, and passion? Become Napoleon for a century. Go in the experience machine, and - with only the vaguest memory of your past existence, or none at all - get born on the island of Corsica in 1769 and see what happens. Spend a while relaxing from posthumanity in the body of a 5’7, IQ 135 Frenchman.

Is this stupid? We hold an Industrial Revolution, design artificial intelligence, go through an entire singularity - only to end up back in 18th-century France?

Not necessarily. 18th-century France was full of miserable people - starving peasants, wretched prisoners, smallpox victims - or people who suffered merely from the “affliction” of not getting to be Napoleon. Even Napoleon’s life wasn’t maximally interesting. You could live an enriched, enchanted version of Napoleon’s life, where every decision had extra branching consequences and there was no downtime. Or a version of Napoleon’s life customized to your personal preferences: NSFW Napoleon where all the women wear skimpy costumes! Woke Napoleon where every third Frenchman is a person of color! If you hate the cold, you can play as a Napoleon who skipped Russia and invaded Cancun! It would be the difference between living in medieval Scandinavia and playing Assassin’s Creed: Valhalla.
More generally, there must be human lives that are in the sweet spot between pleasure and difficult-striving-dramatic-excitement. The average life in history probably doesn’t make it. Even the most exciting lives, like Napoleon’s, probably aren’t perfect. And many lives are wasted on their subjects; what if Napoleon and Elvis both would have preferred to be the other?

If non-utopia is awful but utopia is boring, can we figure out some kind of sweet spot in the middle? Can we have an archipelago of different utopia levels, for people who prefer different points on the spectrum? Would varying your utopia level be a richer and more interesting life than staying in any one position for too long? Is it more fun to bliss out on superultrawireheading after a grueling stint in 18th-century France? Is it more fun to be Napoleon if you have faith in a silicon Heaven ahead of you?

The obvious next question is whether all of this has already happened. I prefer a different formulation of the simulation argument, but I won’t deny this one has its charms². Crowley says to “interpret every phenomenon as a particular dealing of God with your soul”. If you’re a Deep Utopian posthuman, living a life made for you by some superintelligent designer (or your own transcended self), trying to balance enjoyment with difficulty and rewardingness, then this phrase takes on new meaning. Why did I come across Deep Utopia this month? Why did I write this review? Why are you reading it? What are you trying to tell yourself?

1 The book only briefly touches on ideas about necessarily scarce goods. These include obvious things like status, but also things more like NFTs (which are scarce by design). I’m not sure there’s much more to say about this. Maybe in the far future having certain scarce NFTs could be considered “cool”; in this case, all the have-nots would just have to settle for planet-sized palaces and eternal bliss, but no NFTs.

2 You might think that, as pseudo-religions go, this one is socially corrosive - if you’re not sure the world is real, wouldn’t that make you a worse person? I’m not sure. Imagine getting in some kind of VR sim of the 19th century US, where you forget all of your modern knowledge but still have your same personality. Wouldn’t you hope that you independently realized that abolitionism was morally correct and spent at least some of your time advocating for it? Wouldn’t you be embarrassed if you woke up again, back in Heaven, and learned that you’d been a slaveowner and never questioned it? If you can’t fear the real Last Judgment with its trumpets and angels, can you at least imagine looking at a scoreboard for how morally you acted - maybe a public scoreboard that your friends could also see - and having to justify everything you did to a much wiser and vaster version of yourself, with the advantage of a few thousand extra years of moral progress?
