Humanity Redefined - State of AI Report 2024 - Sync #489

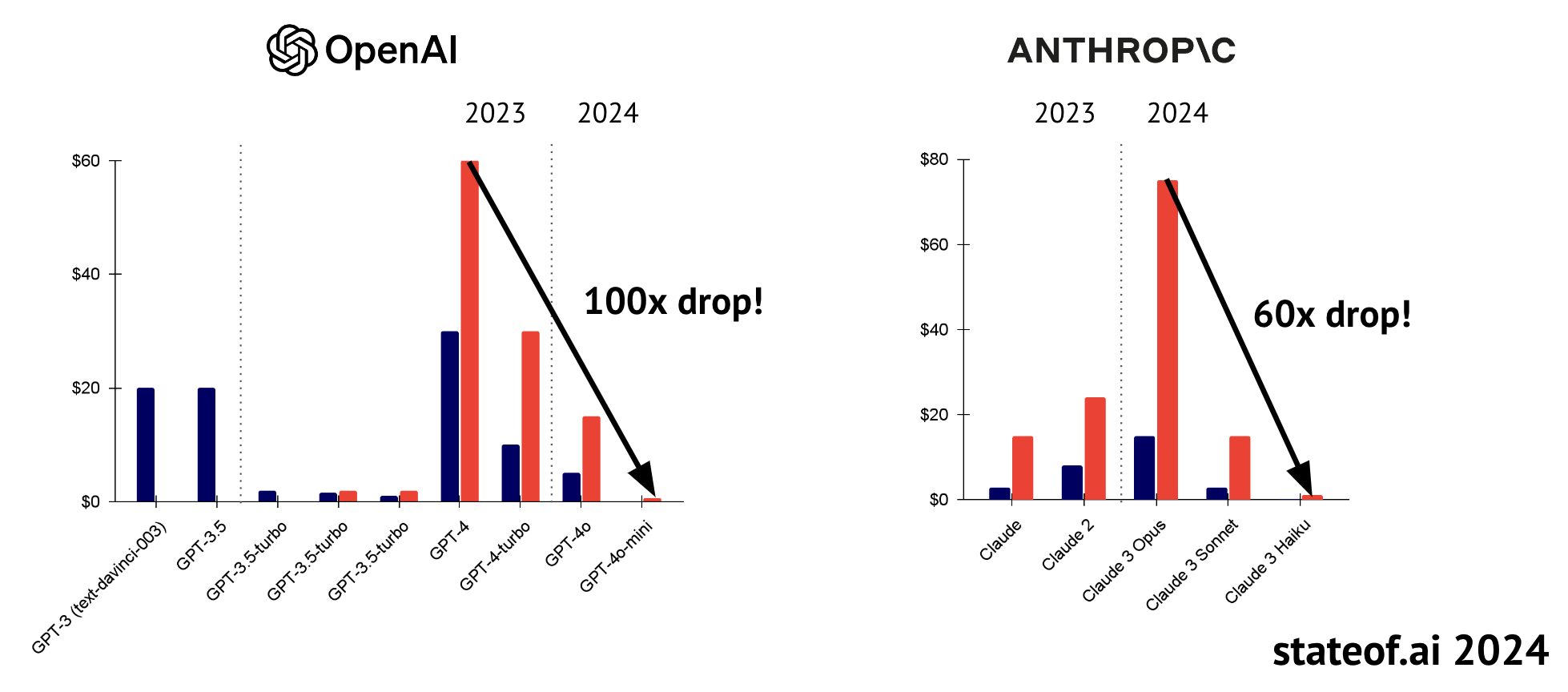

I hope you enjoy this free post. If you do, please like ❤️ or share it, for example by forwarding this email to a friend or colleague. This post took around eight hours to write. Liking or sharing it takes less than eight seconds and makes a huge difference. Thank you!

State of AI Report 2024 - Sync #489

Plus: The New York Times warns Perplexity; have we reached peak human lifespan; tech giants tap nuclear power for AI; OpenAI projects billions in losses while Nvidia's stock reaches a new high

Hello and welcome to Sync #489!

State of AI Report 2024, one of the best resources capturing the current state of AI across various fields, from research to industry, politics, and safety, has been released. In this issue of Sync, I’ll highlight three developments that caught my attention.

Beyond that, plenty has happened in the world of technology over the past week. In AI, The New York Times warns Perplexity not to use its content. Meanwhile, Google and Amazon are turning to nuclear power for their AI datacentres, OpenAI’s internal financial report projects a loss of $14 billion in 2026, and Nvidia’s stock reaches a new high.

In robotics, Boston Dynamics has partnered with the Toyota Research Institute to develop AI for humanoid robots, and a three-armed robot conductor has made its debut in Dresden. We’ll also take a closer look at Tesla’s confusing "We, Robot" event.

Additionally, a recent study suggests we are approaching the limits of human lifespan, and lab-grown meat could be sold in the UK within the next few years.

Enjoy!

State of AI Report 2024

One of the best resources to learn where AI research and industry currently stand is the State of AI Report, produced by Nathan Benaich and Air Street Capital. The seventh edition of the report has recently been published and is filled with insightful information about developments in AI research, industry, politics, and safety.
The report itself consists of over 200 slides packed with detailed information about the state of AI in 2024, and is therefore too extensive to summarise in a short article. Instead, I will highlight three developments that particularly caught my attention: the narrowing gap between top models, the move from models to products, and the vibe shift from safety to acceleration.

I still recommend at least skimming the report. It will provide you with a good overview of the AI industry. Perhaps other developments highlighted in the report will catch your attention. If so, please let me know in the comments what stood out to you.

The gap on top is narrowing

In 2023, OpenAI and GPT-4 were the unchallenged leaders in the new AI boom, maintaining the top position throughout the year. However, in 2024, the gap (which some liked to call a chasm) between OpenAI’s models and others began to close. Models like Anthropic’s Claude 3.5 Sonnet and Google Gemini 1.5 have caught up with OpenAI’s models, sometimes even surpassing them.

At the same time, open models began to put pressure on proprietary models. In April, Meta released the Llama 3 family, a major milestone for open models. The largest model in the Llama family, Llama 3.1 405B, came very close to top models such as GPT-4 and Claude 3.5 Sonnet across multiple benchmarks.

With the differences in performance between models becoming increasingly small, developers had to look elsewhere to gain an edge over competitors. The main frontier now is research on planning and reasoning, which combines large language models with reinforcement learning, evolutionary algorithms, and self-improvement methods.

The first model to incorporate these new developments is OpenAI’s o1, which achieved some impressive results. However, as the authors of the report ask, how long will o1 top the charts? The competition is fierce, and OpenAI no longer has the first-mover advantage.
It’s fair to assume that Anthropic and Google will, sooner rather than later, release their own models capable of planning and reasoning.

One interesting observation from the report worth highlighting is how much the cost of large language models has dropped over the last year. These models are now cheaper and more accessible, which opens up new possibilities and applications across various industries, as well as in fields such as mathematics, biology, genomics, the physical sciences, and neuroscience.

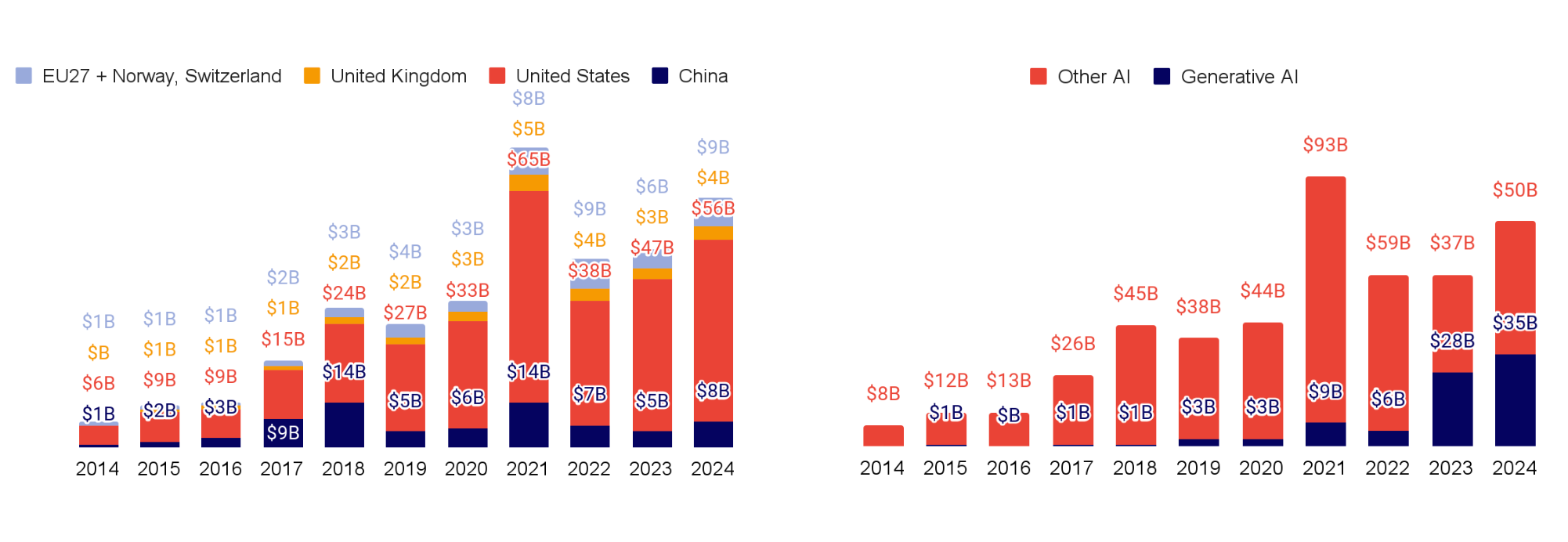

A new frontier for large language models worth mentioning is robotics, where these models are helping to drive new capabilities.

One factor improving access to this technology is the rise of small language models. Models like Google Gemma, Microsoft Phi, models from Mistral AI, and smaller Llama models open up the possibility of running language models directly on smaller devices, without needing to rely on cloud-based models.

The move from models to products

If 2023 was the year generative AI exploded onto the scene, enabling new possibilities and spawning a new cohort of AI startups that attracted billions of dollars in investments, then 2024 is the year these new companies and ideas have been put to the test.

According to the report, close to $100 billion has been invested in AI companies. The graphs included in the report show that the majority of that money was invested in US companies. In total, generative AI companies received $35 billion in 2024. However, it is worth noting that these numbers are inflated by a handful of mega investment rounds, such as recent $6 billion fundraises by xAI and OpenAI, or Anthropic’s $4 billion mega round at the beginning of the year. It would be interesting to see how that $100 billion is distributed in detail.

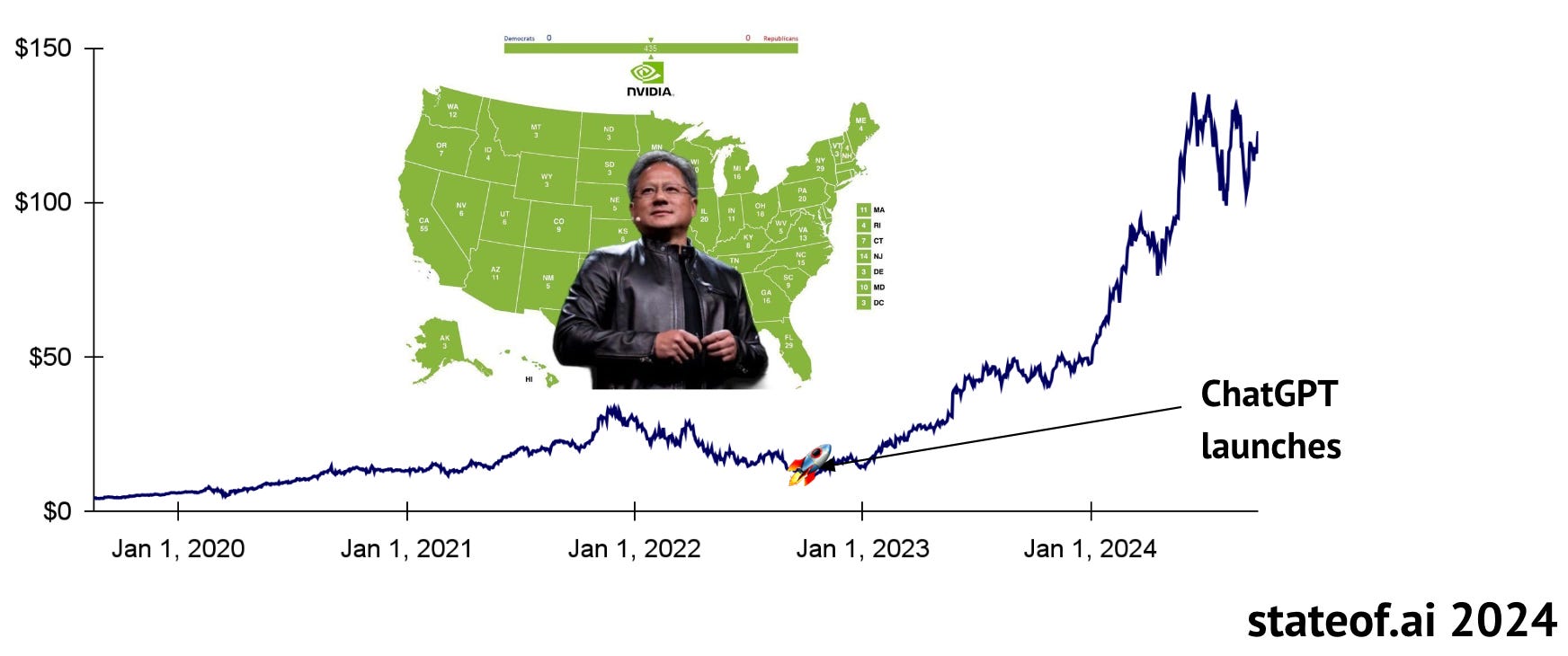

The report states that AI companies have reached a combined value of $9 trillion. All that money invested in AI companies is starting to generate revenue, at least for some of them. More established companies are beginning to report billions of dollars in revenue, while startups, particularly those in audio and video generation, are starting to gain attention.

A major takeaway from the State of AI Report 2024 is that the industry is transitioning from just creating models to building actual products around them. In 2023, simply having a large language model could secure substantial funding (as seen with Mistral or Inflection AI), but in 2024, that’s no longer sufficient. Models are now much cheaper and more accessible than a year ago, and the focus has shifted to what useful products and services can be developed with them. At the same time, as the report notes, questions around pricing and long-term sustainability remain unanswered.

A new trend that emerged over the past year is the rise of pseudo-acquisitions in the AI space. Companies unable to remain at the cutting edge are not being absorbed in the traditional way, where the larger company acquires the smaller competitor. Instead, larger players are hiring top talent and leadership from struggling companies and licensing their technology, leaving behind only the shell of the original firms. Examples of this pseudo-acquisition can be seen with Inflection AI, Adept, and Character.AI.

The report also gives a special mention to Nvidia. If OpenAI is the poster child of the current AI boom on the software side, then Nvidia is the poster child on the hardware side. The chip manufacturer has benefited enormously from the high demand for AI hardware, propelling the company into the elite club of those valued at more than $1 trillion. At the time of writing, Nvidia is worth $3.4 trillion.

The vibe shift from safety to acceleration

The last point I’d like to highlight from the State of AI Report 2024 is what the authors referred to as “the vibe shift from safety to acceleration.”

Alongside the rise of OpenAI and large language models in 2023, there was a parallel conversation about potential dangers and questions surrounding AI safety. Panels and summits dedicated to these topics were held worldwide, with notable figures in the industry urging that AI safety be taken seriously.

However, the existential risk discourse has cooled, especially after the failed coup at OpenAI. Some of those who once warned about the dangers of AI are now fully on board with accelerating its development.

This does not mean AI safety is no longer being studied; it’s just no longer a priority. Researchers continue to explore potential model vulnerabilities and misuse, proposing fixes and safeguards. Meanwhile, governments worldwide are following the UK's lead by building their own state capacity around AI safety, launching institutes, and examining critical national infrastructure for potential vulnerabilities.

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work? Consider becoming a paying subscriber to support it.

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is a generous support towards the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

Have We Reached Peak Human Life Span?

What is wearable neurotech and why might we need it?
🧠 Artificial Intelligence

Google to buy nuclear power for AI datacentres in ‘world first’ deal

Amazon.com joins push for nuclear power to meet data center demand

The New York Times warns AI search engine Perplexity to stop using its content

OpenAI Projections Imply Losses Tripling To $14 Billion In 2026

Nvidia, the AI chipmaker, just hit another record high

Silicon Valley is debating if AI weapons should be allowed to decide to kill

We’re Entering Uncharted Territory for Math

Meta at OCP Summit 2024: The open future of networking hardware for AI

If you're enjoying the insights and perspectives shared in the Humanity Redefined newsletter, why not spread the word?

🤖 Robotics

Tesla Optimus Bots Were Remotely Operated at Cybercab Event

▶️ The Tesla Robotaxi is Confusing... (19:13)

Marques Brownlee shared his experience from attending Tesla’s “We, Robot” event, where he had the chance to ride Tesla’s self-driving taxi, Cybercab, see the Robovan, and interact with Tesla’s humanoid robots, Optimus. The word Marques used to describe the event was “confusing.” In his view, Tesla’s event showed a concept of a certain type of high-tech future but the company failed to mention any numbers or specs of the presented products. Moreover, the staged nature of the event and the uncertainty about what was real versus scripted became the main talking point, overshadowing the products and the future they were meant to represent.

Agility Robotics, Maker of Humanoid Bots, Shows Off Its ‘RoboFab’

Boston Dynamics teams with TRI to bring AI smarts to Atlas humanoid robot

This three-person robotics startup is working with designer Yves Béhar to bring humanoids home

Three-armed robot conductor makes debut in Dresden

▶️ This Robot is Building Supertall Elevators (10:00)

The B1M visits a skyscraper construction site in Vienna, Austria, to meet Ella, a construction robot that assists with installing elevators.
This innovative robot autonomously climbs the elevator shaft, installing thousands of anchor bolts to which human workers then attach rails and the rest of the elevator system. By taking over the noisy and repetitive task of installing anchor bolts, Ella makes the construction process safer and more efficient.

🧬 Biotechnology

Lab-grown meat could be sold in UK in next few years, says food regulator

💡 Tangents

Hell Freezes Over as AMD and Intel Come Together for x86

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"


