Five proven prompt engineering techniques (and a few more-advanced tactics)

How to get exactly what you want when working with AI

👋 Hey, I’m Lenny, and welcome to a 🔒 subscriber-only edition 🔒 of my weekly newsletter. Each week I tackle reader questions about building product, driving growth, and accelerating your career.

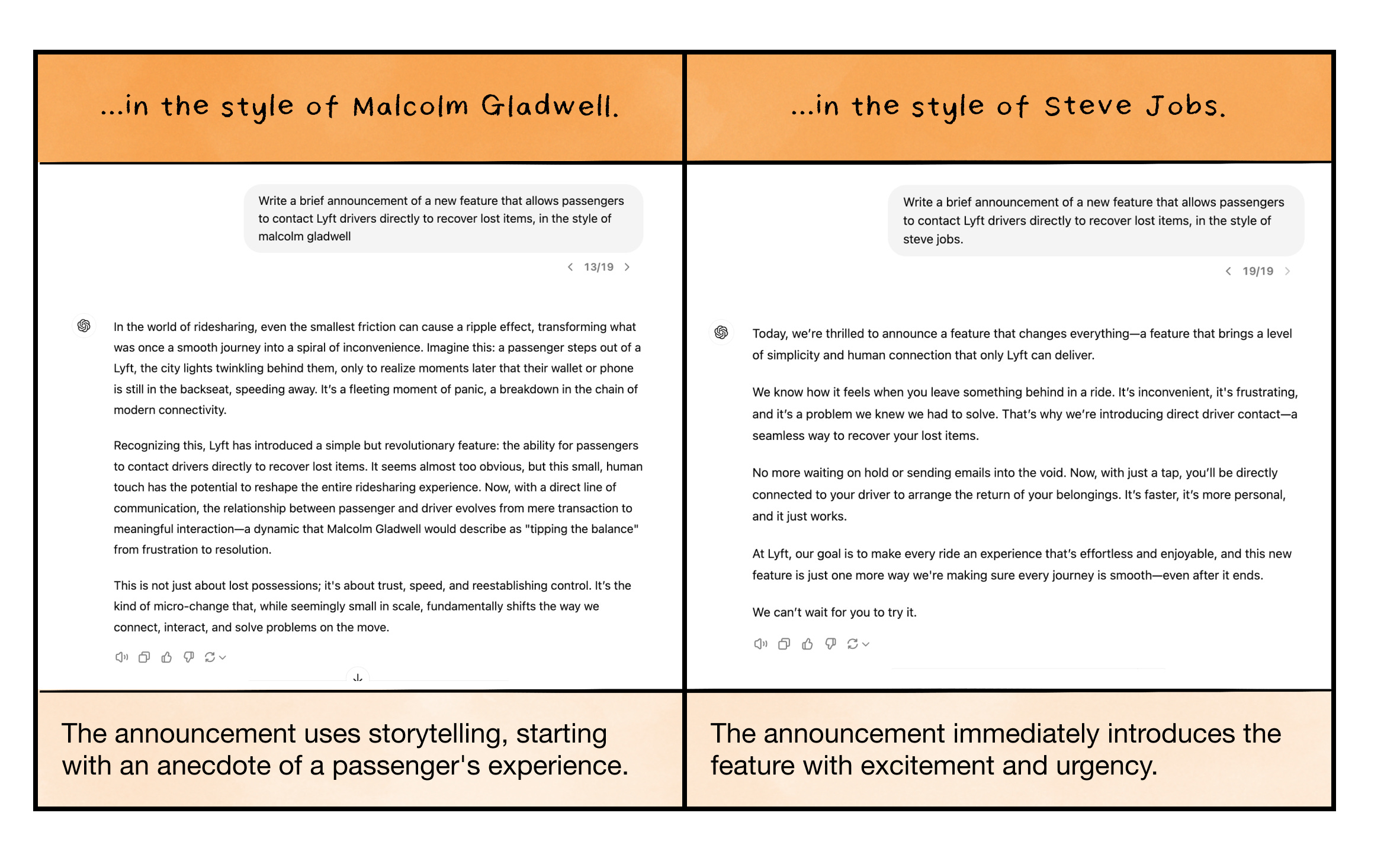

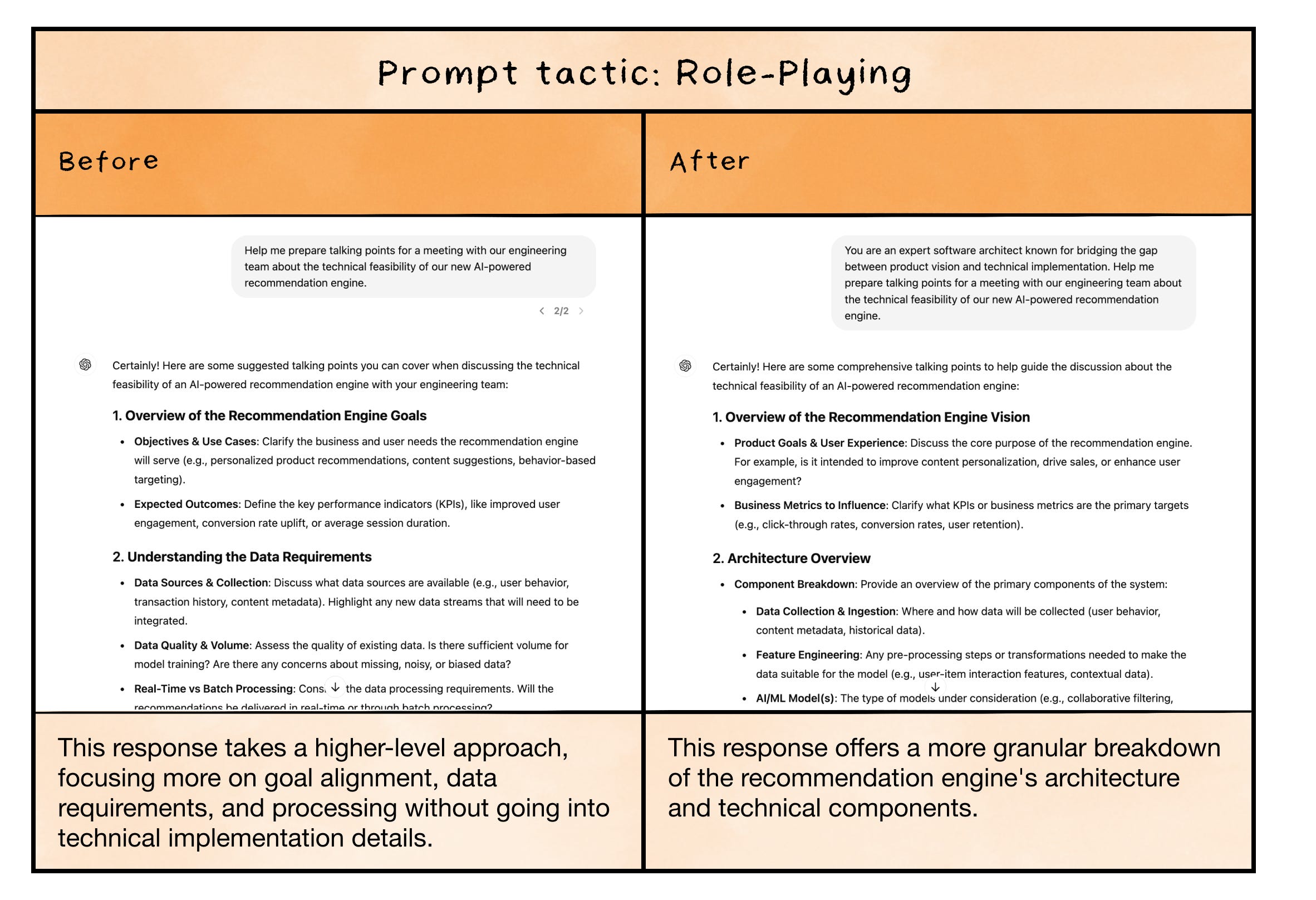

I’m constantly hearing about people doing mind-blowing things with AI, like building entire products, offloading big parts of their job, and saving hundreds of hours doing research. But most of the time when I try using ChatGPT/Claude/Gemini, I get very meh results. Maybe you’re having the same luck.

The difference, I’m learning, is in crafting your prompts. The nuance and skill needed to get good results became clear to me when Mike Taylor published his guest post “How close is AI to replacing product managers?” and included prompts that did unexpectedly well in a blind test vs. human performance. I wanted to learn more, and so did many readers who emailed me after that post came out. I asked Mike to write a deeper dive just on prompt engineering.

Mike is a full-time professional prompt engineer. He wrote a book for O’Reilly on prompt engineering and created a course on AI taken by 100,000 people, and over the past few years he’s built up a collection of techniques that have proven useful again and again. Below, Mike shares the five prompting techniques he’s found to have the most impact when prompting LLMs, plus three advanced bonus techniques if you want to go further down the rabbit hole.

For more from Mike, check out his book, Prompt Engineering for Generative AI, and his AI engineering studio, Brightpool. Follow him on LinkedIn and X.

Much like how becoming a better communicator leads to better results from the people you work with, writing better prompts improves the responses you can get from AI. We already know that people can’t read our minds. But neither can AI, so you have to tell it what you want, as specifically as possible.

Say you’re asking ChatGPT to write an announcement for a new feature. You want to make sure the phrasing is attention-grabbing and factual but that the message is also authentic to your product and customer base.
Naively asking ChatGPT to do the task without giving it any real direction will likely result in a generic response that leans too heavily on emojis and over-enthusiastic corporate speak. With no stylistic direction, you’ll get one of these fake, corporate-sounding responses, because that’s the average of what’s out there. The same thing might happen if you delegated this task to a member of your team without being clear about what you actually want.

One quick trick: ChatGPT is capable of emulating any famous style or format; you just need to specify that in your prompt so it knows what you’re looking for. Something as simple as appending the words “in the style of [insert famous person]” can make a huge difference to the results you get.

Specifying a style is just one tactic, though, and there are hundreds of prompt engineering techniques, many of them proven effective in scientific studies. The good news is that you don’t have to read through all those papers on ArXiv. Every week, I spend a full day researching and experimenting with the latest techniques, and in this post, I’ll walk you through the five easy-to-use prompt engineering tactics that I actually use day-to-day, plus three more that are a bit more advanced and tailored to certain circumstances. What’s more, I’ll give you plug-and-play templates you can start using today to improve your own prompts.

My five favorite prompt engineering tactics

These techniques work across any large language model, so whether you use ChatGPT, Claude, Gemini, or Perplexity, you can get better results. I’ve included examples of how these techniques work, what problems they solve for, and when to use them. Most are backed by scientific evidence, so I’ve also linked to those papers for further reading.

With today’s latest models, there’s a lot less need for prompting tricks than there was back in 2020 with GPT-3.
However, no matter how smart AI gets, it’ll always need guidance from you, and the more guidance you give it, the better results you’ll get. The types of tactics I’ve focused on in this list are ones that I anticipate will continue to be useful far into the future.

Tactic 1: Role-playing

Role-playing is the technique we already demonstrated, where you instruct the AI to assume the persona of an expert, celebrity, or character. This approach leverages the AI’s broad knowledge base to mimic the style, expertise, and perspective of the chosen role. By doing so, you can obtain responses that are more tailored to the specific domain or viewpoint you’re interested in. For example, asking the AI to respond as a renowned scientist might yield more technical and research-oriented answers, while role-playing as a creative writer could result in more imaginative and narrative-driven responses.
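As a rough illustration of the “in the style of” trick, here is a minimal Python sketch that builds a role-playing prompt as a plain string. The function name `in_the_style_of` and the example task are hypothetical; the resulting text can be pasted into whichever chat model you use.

```python
def in_the_style_of(task: str, persona: str) -> str:
    """Append a persona instruction to a plain task description.

    Hypothetical helper for illustration only; it just formats a string.
    """
    # Drop a trailing period or space so the sentence reads naturally.
    return f"{task.rstrip('. ')} in the style of {persona}."


prompt = in_the_style_of(
    "Write an announcement for our new feature.",
    "a renowned scientist",
)
print(prompt)
```

Swapping the persona (for example, “a creative writer”) is then a one-argument change, which makes it easy to compare how different roles shift the response.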

Tactic 2: Style unbundling

Style unbundling involves breaking down the key elements of a particular expert’s style or skill set into discrete components. Instead of simply asking the AI to imitate someone, you prompt it to analyze and list the specific characteristics that make up that person’s unique approach. Then you can use those characteristics to prompt the AI to create new content. This technique allows for a more nuanced understanding and application of the desired style. It’s particularly useful when you want to incorporate certain aspects of an expert’s method without fully adopting their persona, giving you more control over which elements to emphasize in the AI’s output.
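The two steps described above (list the characteristics, then reuse them) might be sketched in Python as follows. Everything here is an assumption for illustration: `complete` stands for any function that sends a prompt to a model and returns its text, and `fake_model` is a stand-in so the sketch runs without an API.

```python
from typing import Callable, List


def unbundle_prompt(expert: str) -> str:
    """Step 1: ask the model to list the components of a style."""
    return (
        f"Analyze the writing style of {expert}. "
        "List the key characteristics as short bullet points."
    )


def apply_style_prompt(task: str, characteristics: str) -> str:
    """Step 2: reuse the characteristics without naming the persona."""
    return f"{task}\n\nWrite it with these style characteristics:\n{characteristics}"


def style_unbundle(expert: str, task: str, complete: Callable[[str], str]) -> str:
    characteristics = complete(unbundle_prompt(expert))
    # This is the point where you could edit the list to keep only
    # the elements you want to emphasize.
    return complete(apply_style_prompt(task, characteristics))


# Stand-in model (hypothetical) that records each prompt it receives.
seen: List[str] = []


def fake_model(prompt: str) -> str:
    seen.append(prompt)
    return "- short sentences\n- concrete examples"


result = style_unbundle("a famous author", "Announce our new export feature.", fake_model)
print(seen[1])
```

Because the second prompt carries only the listed characteristics, the persona’s name never reaches the final request, which is what gives you control over which elements are applied.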

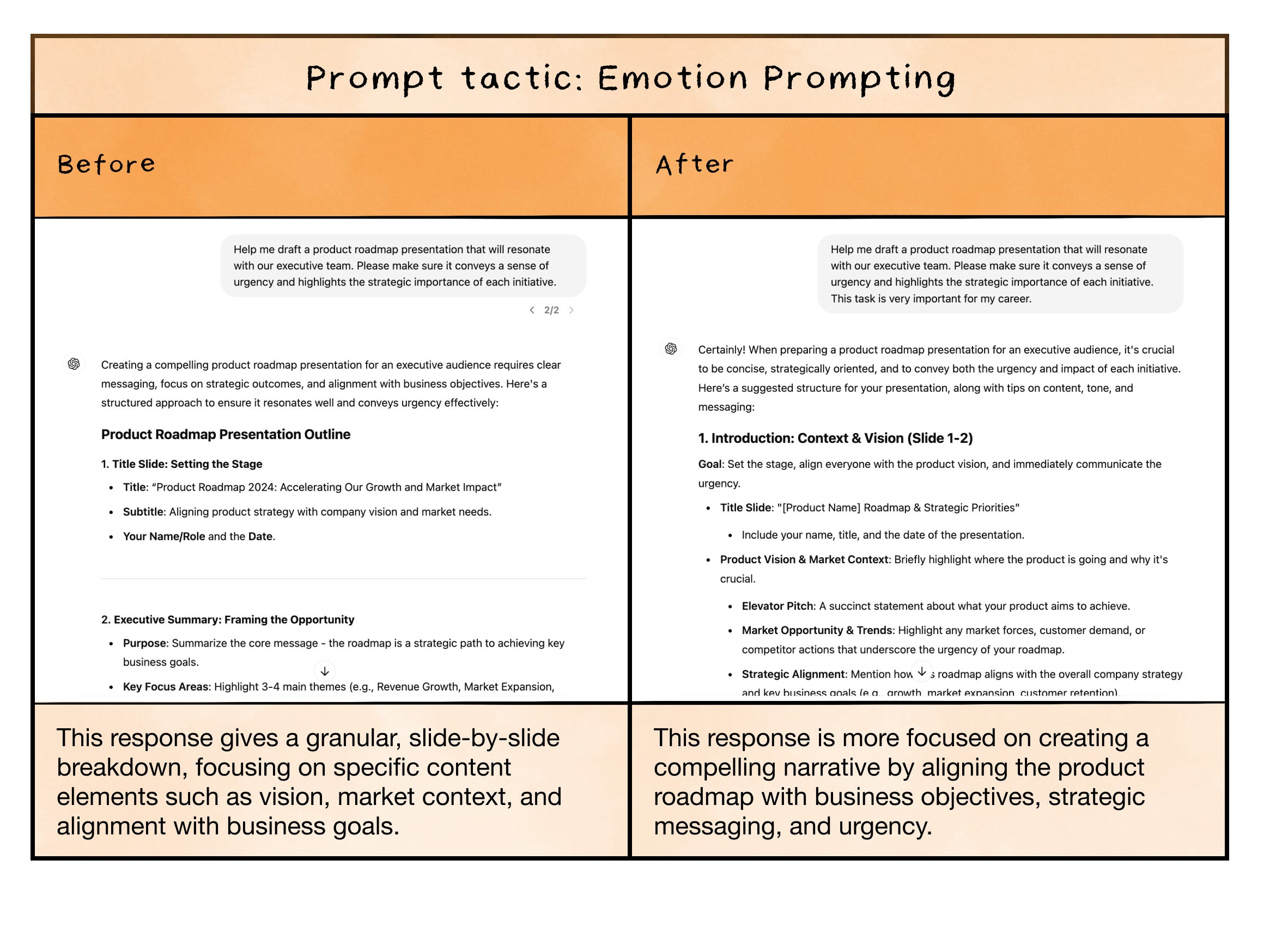

Tactic 3: Emotion prompting

Emotion prompting is a technique that involves adding emotional context or stakes to your request. By framing the task as personally important or impactful, you can potentially elicit more careful and thoughtful responses from the AI. This method taps into the AI’s programming to be helpful and considerate, potentially leading to more thorough or empathetic outputs. However, it’s important to use this technique judiciously, as it can sometimes have the opposite of the intended effect and lead to worse results.
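Since emotion prompting can help or hurt, one cautious approach is to keep both versions of a prompt and compare the responses. The sketch below assumes a hypothetical helper `with_stakes`; the default stakes sentence is only an illustrative phrase, not a recommended wording.

```python
def with_stakes(prompt: str, stakes: str = "This is very important to my career.") -> str:
    """Append one sentence of emotional context to a prompt.

    Hypothetical helper; the default sentence is an illustrative assumption.
    """
    return f"{prompt.rstrip()} {stakes}"


base = "Proofread this release note and list any factual errors."

# Keep the plain version alongside the emotional one so the two
# responses can be compared side by side.
variants = {"plain": base, "emotional": with_stakes(base)}

for name, text in variants.items():
    print(f"{name}: {text}")
```

Running both variants on the same task is a simple way to check whether the added stakes actually improve the output in your case.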

Tactic 4: Few-shot learning

Few-shot learning, also known as in-context learning, is a technique where you provide the AI with a few examples of the task you want it to perform before asking it to complete a similar task. This method helps to guide the AI’s understanding of the specific format, style, or approach you’re looking for. By demonstrating the desired output through examples, you can often achieve more accurate and relevant results, especially for tasks that might be ambiguous or require a particular structure.
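A few-shot prompt is usually just the instruction, a handful of input/output pairs, and then the new input left open for the model to complete. The Python sketch below shows one possible layout; the `Input:`/`Output:` labels, the function name, and the sample data are all assumptions chosen for illustration.

```python
from typing import List, Tuple


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], new_input: str) -> str:
    """Lay out examples before the real input so the model can copy the pattern."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # End on an open "Output:" so the model continues in the same format.
    lines += [f"Input: {new_input}", "Output:"]
    return "\n".join(lines)


examples = [
    ("Dark mode", "Easier on your eyes: dark mode is here."),
    ("CSV export", "Take your data anywhere with CSV export."),
]

prompt = few_shot_prompt(
    "Write a one-line announcement for each feature.",
    examples,
    "Offline sync",
)
print(prompt)
```

Keeping the examples in a list makes it easy to add, remove, or reorder them and see how the output changes.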

Tactic 5: Synthetic bootstrap

Synthetic bootstrap is a practical technique where you use the AI to generate multiple examples based on given inputs. These AI-generated examples can then be used as a form of in-context learning for subsequent prompts or as test cases you can use as inputs for your existing prompt template. This method is particularly useful when you don’t have a lot of real-world examples readily available or when you need a large number of diverse input examples quickly. It allows you to bootstrap the learning process, potentially improving the AI’s performance on the target task even without the help of a domain expert.
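One way to picture the test-case use of synthetic bootstrap: ask the model for a batch of sample inputs, parse them, and feed each one into an existing prompt template. In this Python sketch, `complete` is an assumed callable that returns model text, `fake_model` is a stand-in with canned output, and `TEMPLATE` is a hypothetical template.

```python
from typing import Callable, List


def bootstrap_prompt(description: str, n: int) -> str:
    """Ask the model to invent n example inputs, one per line."""
    return (
        f"Generate {n} diverse, realistic examples of {description}. "
        "Put each example on its own line with no numbering."
    )


def parse_examples(raw: str) -> List[str]:
    """Split the model's reply into non-empty, trimmed lines."""
    return [line.strip() for line in raw.splitlines() if line.strip()]


def bootstrap_inputs(description: str, n: int, complete: Callable[[str], str]) -> List[str]:
    return parse_examples(complete(bootstrap_prompt(description, n)))[:n]


# Hypothetical existing template that we want to exercise with test inputs.
TEMPLATE = "Write a one-sentence announcement for this feature: {feature}"


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return "Dark mode\n\nOffline sync\nCSV export\n"


features = bootstrap_inputs("new product features for a note-taking app", 3, fake_model)
test_prompts = [TEMPLATE.format(feature=feature) for feature in features]
print(test_prompts)
```

The same generated list could instead be paired with reviewed outputs and reused as in-context examples for a later prompt.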

BONUS: Three more advanced tactics

If you got this far and still want to push your prompting skills further, the next level up is learning ways to split up the task into multiple steps. Rather than trying to do it all in one prompt, most professionals in the AI space build a system that corrects for the errors AI models commonly make. These tactics can take more time or be harder to implement, particularly if you can’t code, but they can make all the difference when AI is failing at a task. By structuring your interactions with AI better, you can leverage its strengths, mitigate weaknesses, and create more robust and reliable outcomes.
