
Hello! Once a week, we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

And now…let's dive into some interesting links from this week.

Methods for random gradients

An overview of techniques I’ve used to generate random gradients…Over the years, including during my time at OpenAI, I’ve experimented with various methods for generating random gradients, including: a) Heightmap, b) Layered radial, c) AI-generated…
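The post names several approaches. The sketch below illustrates only the "layered radial" idea, and it is our own guess at its general shape, not the author's code. A few randomly placed color blobs are blended, and each pixel's color is a weighted average in which a blob's weight falls off with distance from its center. The Gaussian falloff, the radius range, and the layer count are assumptions.

```python
import math
import random


def layered_radial_gradient(width, height, n_layers=3, seed=None):
    """Blend a few randomly placed radial color blobs into one image.

    Returns a list of rows; each row is a list of (r, g, b) tuples in 0..255.
    Assumption: a layer's weight falls off as exp(-(d / radius)^2).
    """
    rng = random.Random(seed)
    layers = []
    for _ in range(n_layers):
        center = (rng.uniform(0, width), rng.uniform(0, height))
        radius = rng.uniform(0.3, 0.8) * max(width, height)
        color = tuple(rng.randint(0, 255) for _ in range(3))
        layers.append((center, radius, color))

    image = []
    for y in range(height):
        row = []
        for x in range(width):
            # Weight of each layer at this pixel, based on distance to its center.
            weights = [
                math.exp(-(math.hypot(x - cx, y - cy) / radius) ** 2)
                for (cx, cy), radius, _ in layers
            ]
            total = sum(weights) or 1.0
            # Normalized weighted average of the layer colors, per channel.
            pixel = tuple(
                round(sum(w * color[c] for w, (_, _, color) in zip(weights, layers)) / total)
                for c in range(3)
            )
            row.append(pixel)
        image.append(row)
    return image
```

Passing the same seed reproduces the same gradient. Writing the rows to an image file is left out to keep the sketch dependency-free.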

Large Language Models, explained briefly, by 3Blue1Brown

I just posted a new video, originally made as part of a new exhibit at the Computer History Museum on the history of chatbots…My hope is that it offers a lighter-weight, but still substantive, intro to Large Language Models, complementing the more technical breakdown of the underlying architecture that other videos of the channel cover…

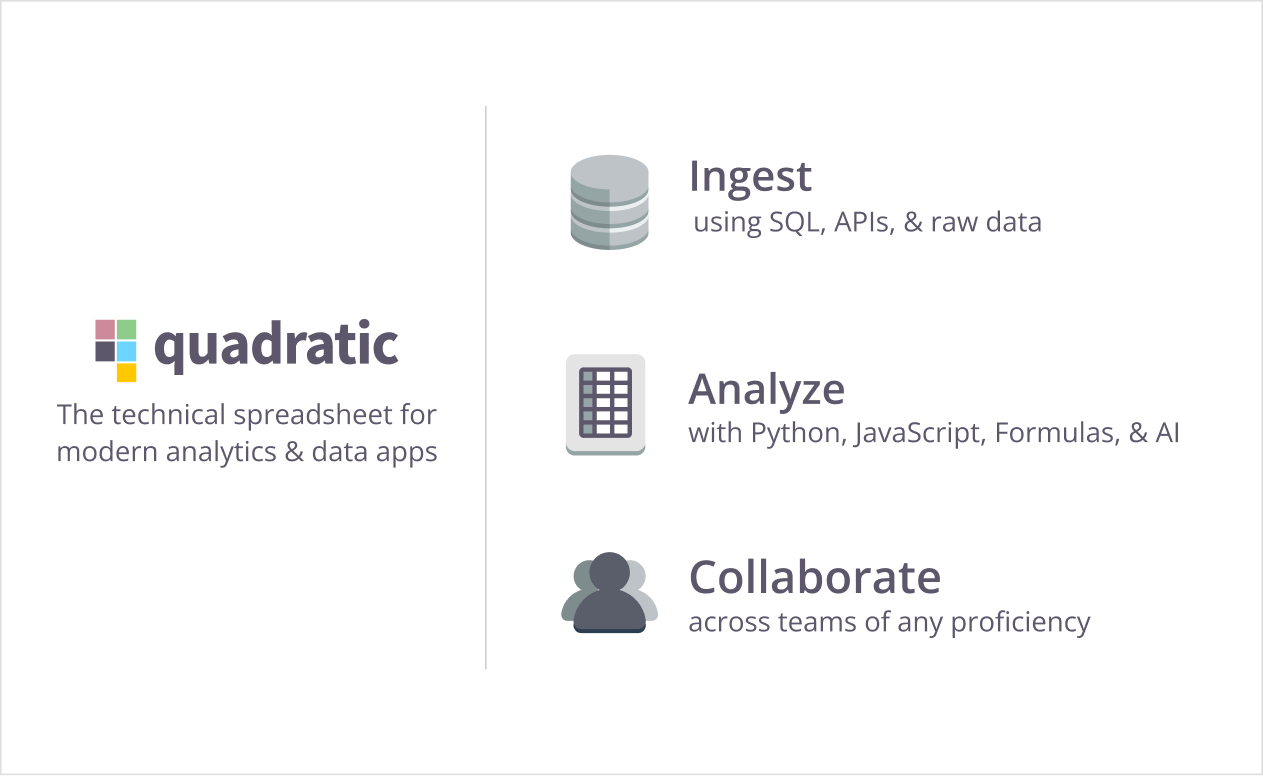

With Quadratic, combine the spreadsheets your organization asks for with the code that matches your team’s code-driven workflows. Powered by code, you can build anything in Quadratic spreadsheets with Python, JavaScript, or SQL, all approachable with the power of AI.

Use the data tool that actually aligns with how your team works with data, from ad-hoc to end-to-end analytics, all in a familiar spreadsheet. Level up your team’s analytics with Quadratic today.

* Want to sponsor the newsletter? Email us for details --> team@datascienceweekly.org

Optimising Parquet reads with Polars

Polars has a fast Parquet reader, but there are ways for you to wring more performance out of it. In this post I cover the key query optimisations for reading Parquet with Polars and a new parallel argument which can help you to fine-tune for your data…

State of art on state estimation: Kalman filter driven by machine learning

The Kalman filter (KF) is a popular state estimation technique used in a variety of applications, including positioning and navigation, sensor networks, and battery management. This study presents a comprehensive review of the Kalman filter and its various enhanced models, including those that combine the Kalman filter with neural network methodologies…
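For readers new to the filter itself, here is a minimal scalar Kalman filter. It is our own sketch, not code from the review. It assumes the simplest possible model: a constant hidden state observed with Gaussian noise. `process_var` and `meas_var` are the assumed process and measurement noise variances. The ML-driven variants the review surveys typically replace or learn parts of this predict/update loop, for example the noise statistics or the gain.

```python
def kalman_1d(measurements, process_var, meas_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a constant-state (random-walk) model.

    Assumed model: x_k = x_{k-1} + w_k and z_k = x_k + v_k,
    with w_k ~ N(0, process_var) and v_k ~ N(0, meas_var).
    Returns the filtered state estimate after each measurement.
    """
    x, p = x0, p0  # state estimate and its variance
    estimates = []
    for z in measurements:
        # Predict: the state is assumed unchanged, so only the uncertainty grows.
        p = p + process_var
        # Update: the Kalman gain weighs the prediction against the measurement.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates
```

When the measurements are a constant 5.0, the estimates climb from the initial guess toward 5.0. The gain shrinks as the filter grows more confident.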

Mathematical meaning is not captured by meaning-as-use

Why are language models so bad at math? We propose that it’s because mathematical meaning is encoded in the world itself and not in its representations. Some thoughts on math, meaning and physics in our blogpost…

ChunkViz v0.1

Language Models do better when they're focused. One strategy is to pass a relevant subset (chunk) of your full data. There are many ways to chunk text. This is a tool to understand different chunking/splitting strategies…
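To see what the simplest chunking strategy does, here is a fixed-size character splitter with optional overlap. This is our own sketch, not ChunkViz's code. Other strategies, such as splitting on separators like paragraphs or sentences, are more elaborate. `chunk_size` and `overlap` are measured in characters here, which is an assumption; token-based splitters count tokens instead.

```python
def chunk_text(text, chunk_size, overlap=0):
    """Split text into fixed-size character chunks, with consecutive
    chunks sharing `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks
```

For example, `chunk_text("abcdefghij", 4, overlap=1)` gives `["abcd", "defg", "ghij"]`. Without overlap, the same call gives `["abcd", "efgh", "ij"]`.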

Generative Models 0 - What is a Generative Model?

You are given some data (let’s say it isn’t labeled). This data, you assume, has some significance. For instance, it might be data collected from a manufacturing factory and it could contain information regarding inefficient work lines. Or ...Whatever the case, you have data that (again, you assume) contains significant information for a scientific question which you want to answer. To aid you in the process of answering these important questions, you want to somehow simulate the process that created the data. In other words, you want to model the generative process of the creation of the data. After all, if you do so well then maybe, just maybe, you’ll be able to meaningfully solve the problem of interest…
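To make "model the generative process" concrete, here is about the simplest generative model possible. It is our illustration, not taken from the post. We assume the data are independent draws from a single Gaussian, estimate its parameters by maximum likelihood, and then sample new synthetic data from the fitted model. Real generative models swap the Gaussian for far richer families, but they follow the same fit-then-sample loop.

```python
import random
import statistics


def fit_gaussian(data):
    """Maximum-likelihood estimates of a 1-D Gaussian's mean and std."""
    mu = statistics.fmean(data)
    sigma = statistics.pstdev(data, mu)  # the MLE uses the population (1/n) variance
    return mu, sigma


def sample(mu, sigma, n, seed=None):
    """Draw n new points from the fitted generative model."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]
```

For instance, `fit_gaussian([1.0, 2.0, 3.0, 4.0])` returns a mean of 2.5 and a standard deviation of √1.25.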

What’s a machine learning paper or research breakthrough from the last year that everyone should know about? [Reddit]

Share a paper or idea that really stood out to you and why it matters to the field…

Detecting Anomalies in Social Media Volume Time Series

How I detect anomalies in social media volumes: A Residual-Based Approach…In this article, we’ll explore a residual-based approach for detecting anomalies in social media volume time series data, using a real-world example from Twitter. For this task, I use data from the Numenta Anomaly Benchmark, which includes volume data from Twitter posts in 5-minute windows among its benchmarks. We will analyze the data from two perspectives: first, we will detect anomalies using the full dataset, and then we will detect anomalies in a real-time scenario to check how responsive this method is…
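The article's exact pipeline is not reproduced here. As a hedged sketch of the general residual-based idea, the function below estimates an expected value for each point from a trailing rolling median. It computes the residual (observed minus expected) and flags residuals that sit far from the typical one. The window size, the median baseline, and the MAD-based threshold `k` are our assumptions. Because the baseline only looks at past points, the same loop could run on a live stream.

```python
import statistics


def residual_anomalies(series, window=5, k=3.0):
    """Return indices whose residual from a trailing rolling-median baseline
    deviates from the median residual by more than k * MAD."""
    residuals = []
    for i in range(window, len(series)):
        # Expected value: median of the previous `window` observations only.
        baseline = statistics.median(series[i - window:i])
        residuals.append((i, series[i] - baseline))
    if not residuals:
        return []
    values = [r for _, r in residuals]
    center = statistics.median(values)
    # Median absolute deviation: a spread measure robust to the spikes themselves.
    mad = statistics.median(abs(r - center) for r in values) or 1e-9
    return [i for i, r in residuals if abs(r - center) > k * mad]
```

Take the series `[10, 11, 10, 12, 11, 10, 11, 50, 10, 11, 12, 10, 11]` as an example. With the defaults, only index 7 (the spike to 50) is flagged.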

Why you don’t overfit, and don’t need Bayes if you only train for one epoch

Here, we show that in the data-rich setting where you only train on each datapoint once (or equivalently, you only train for one epoch), standard “maximum likelihood” training optimizes the true data generating process (DGP) loss, which is equivalent to the test loss. Further, we show that the Bayesian model average optimizes the same objective, albeit while taking the expectation over uncertainty induced by finite data. As standard maximum likelihood training in the single-epoch setting optimizes the same objective as Bayesian inference, we argue that we do not expect Bayesian inference to offer any advantages in terms of overfitting or calibration in these settings. This explains the diminishing importance of Bayes in areas such as LLMs, which are often trained with one (or very few) epochs…
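The key step can be written out in a few lines. The notation below is ours, not the paper's. When every gradient step uses a fresh draw from the data-generating process p, the stochastic gradient is an unbiased estimate of the gradient of the true (test) loss, assuming the usual regularity conditions for exchanging gradient and expectation:

```latex
\begin{aligned}
\mathcal{L}(\theta) &= \mathbb{E}_{x \sim p}\left[-\log q_\theta(x)\right]
  && \text{(true DGP loss = expected test loss)} \\
g_t &= \nabla_\theta\left[-\log q_\theta(x_t)\right]\Big|_{\theta=\theta_t},
  \quad x_t \sim p \text{ fresh, never reused} \\
\mathbb{E}\left[g_t \mid \theta_t\right] &= \nabla_\theta \mathcal{L}(\theta_t)
  && \text{(since } x_t \text{ is independent of } \theta_t\text{)}
\end{aligned}
```

In a second epoch, θ_t already depends on x_t, so the last equality no longer holds in general. That dependence is where overfitting can enter.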

How we use formal modeling, lightweight simulations, and chaos testing to design reliable distributed systems

To analyze a distributed system during its design phase, we can use additional techniques such as formal modeling and lightweight simulations to describe and ascertain complex system behavior at any desired level of abstraction, tracking it from concept to sometimes even code. They help verify the behavior of a system with multiple well-defined, interacting parts by using model checking to evaluate its properties in all possible design-permitted states…We recently had the opportunity to use these approaches while working on a new message queuing service named Courier, which allows us to quickly and reliably deliver data across our platforms. In this blog post, we’ll be sharing what insights we gained into our Courier service by applying formal modeling, lightweight simulations, and chaos testing…
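As a toy illustration of model checking, the sketch below uses a made-up protocol rather than Courier's design, and plain Python rather than a dedicated modeling language. It exhaustively explores every state of a producer that retries a single message over a lossy network. It then checks the invariant "the consumer processes the message at most once". With consumer-side deduplication disabled, breadth-first search returns a shortest counterexample trace. With deduplication enabled, no violating state is reachable within the retry bound.

```python
from collections import deque

# State: (attempts_left, acked, msgs_in_flight, acks_in_flight, processed, seen)


def successors(state, dedup):
    """Yield every state reachable in one step of the toy protocol."""
    attempts_left, acked, msgs, acks, processed, seen = state
    if not acked and attempts_left > 0:  # producer (re)sends after a timeout
        yield (attempts_left - 1, acked, msgs + 1, acks, processed, seen)
    if msgs > 0:
        yield (attempts_left, acked, msgs - 1, acks, processed, seen)  # message lost
        if dedup and seen:  # duplicate delivery: ack it but skip the work
            yield (attempts_left, acked, msgs - 1, acks + 1, processed, seen)
        else:  # consumer processes the message and acks it
            yield (attempts_left, acked, msgs - 1, acks + 1, processed + 1, True)
    if acks > 0:
        yield (attempts_left, acked, msgs, acks - 1, processed, seen)  # ack lost
        yield (attempts_left, True, msgs, acks - 1, processed, seen)   # ack arrives


def check(max_attempts, dedup):
    """BFS over all reachable states; return a shortest trace to a state where
    the message was processed more than once, or None if none is reachable."""
    start = (max_attempts, False, 0, 0, 0, False)
    parent = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state[4] > 1:  # invariant "processed at most once" violated
            trace = []
            while state is not None:
                trace.append(state)
                state = parent[state]
            return trace[::-1]
        for nxt in successors(state, dedup):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None
```

With `max_attempts=2` and `dedup=False`, the returned trace reads send, retry, deliver, deliver. Every state space here is finite because retries are bounded, which is what makes exhaustive search possible.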

The Hutchinson trick

The Hutchinson trick: a cheap way to evaluate the trace of a Jacobian, without computing the Jacobian itself!..
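The identity behind the trick is tr(J) = E[z^T J z] for any random vector z with E[z z^T] = I. One common choice is a vector of independent ±1 (Rademacher) entries. Each sample needs only one Jacobian-vector product, never the full Jacobian. The sketch below uses a central finite difference as a stand-in for the JVP so it stays dependency-free. With an autodiff library you would swap in a true JVP, such as `jax.jvp`. `n_samples` and `eps` are illustrative choices.

```python
import random


def jvp_fd(f, x, v, eps=1e-6):
    """Approximate the Jacobian-vector product J(x) @ v with a central
    finite difference (a stand-in for an autodiff JVP)."""
    plus = f([xi + eps * vi for xi, vi in zip(x, v)])
    minus = f([xi - eps * vi for xi, vi in zip(x, v)])
    return [(p - m) / (2 * eps) for p, m in zip(plus, minus)]


def hutchinson_trace(f, x, n_samples=1000, seed=None):
    """Estimate tr(J(x)) as the average of z^T J z over Rademacher vectors z."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        z = [rng.choice((-1.0, 1.0)) for _ in x]
        jz = jvp_fd(f, x, z)  # one JVP per sample; J itself is never formed
        total += sum(zi * jzi for zi, jzi in zip(z, jz))
    return total / n_samples
```

For a diagonal Jacobian, every sample returns the exact trace. Off-diagonal entries add variance, which shrinks as more samples are averaged.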

Theoretical Foundations of Conformal Prediction

The goal of this book is to teach the reader about the fundamental technical arguments that arise when researching conformal prediction and related questions in distribution-free inference. Many of these proof strategies, especially the more recent ones, are scattered among research papers, making it difficult for researchers to understand where to look, which results are important, and how exactly the proofs work. We hope to bridge this gap by curating what we believe to be some of the most important results in the literature and presenting their proofs in a unified language, with illustrations, and with an eye towards pedagogy…
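For orientation, here is the most basic method this theory covers: split conformal prediction for regression. This is our own minimal sketch, not code from the book. The nonconformity scores are absolute residuals on a held-out calibration set, and the interval half-width is the ⌈(n+1)(1−α)⌉-th smallest score. If the calibration and test points are exchangeable, the interval covers the true value with probability at least 1 − α. `predict` stands for any point predictor trained on data disjoint from the calibration set.

```python
import math


def split_conformal_interval(predict, calib_x, calib_y, x_new, alpha=0.1):
    """Split conformal prediction interval for a regression model."""
    # Nonconformity scores: absolute residuals on the calibration set.
    scores = sorted(abs(y - predict(x)) for x, y in zip(calib_x, calib_y))
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    # With too few calibration points for this alpha, only an infinite
    # interval carries the guarantee.
    q = math.inf if k > n else scores[k - 1]
    center = predict(x_new)
    return center - q, center + q
```

Consider the predictor 2x, with calibration residuals [0, 0.5, 1.0, 1.0] and alpha=0.5. Then k = 3, so the interval at x = 10 is (19.0, 21.0).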

Why the MinHashEncoder is great for boosted trees

Boosted tree models don't support sparse matrices, which might make you think they have trouble encoding text data. There are, however, encoding techniques that can work great without resorting to sparse methods. The MinHash encoder is one such technique and this video explains why it is a great choice for many pipelines…
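Here is a minimal sketch of the idea. It is our own simplified version, not the `MinHashEncoder` implementation in libraries such as skrub. Each string becomes a set of character 3-grams, and component k of the output is the minimum of the k-th hash function over that set. For idealized random hash functions, two strings agree on a component with probability equal to the Jaccard similarity of their n-gram sets. Similar categories therefore get similar dense vectors, which tree models can split on without a sparse matrix. The number of components, the n-gram size, and the blake2b-based hash family are assumptions.

```python
import hashlib


def ngrams(text, n=3):
    """Set of character n-grams of a string padded with spaces."""
    padded = f" {text} "
    return {padded[i:i + n] for i in range(max(1, len(padded) - n + 1))}


def minhash_encode(text, n_components=30, n=3):
    """Dense MinHash vector: component k is the minimum of hash function k
    over the string's n-grams, scaled to [0, 1)."""
    grams = ngrams(text, n)
    vector = []
    for k in range(n_components):
        # Hash function k: blake2b of the n-gram salted with k.
        vector.append(min(
            int.from_bytes(
                hashlib.blake2b(f"{k}:{g}".encode(), digest_size=8).digest(), "big"
            ) / 2**64
            for g in grams
        ))
    return vector
```

"Police Officer II" and "Police Officer III" share most of their 3-grams, so their vectors agree on most components. "Accountant" shares none with either and agrees on essentially none.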

Fastest Autograd in the West

Who needs fast autograd? Seemingly everyone these days!..And once upon a time I needed an autograd that is actually fast. Leaving project details aside, here are the requirements:

- we test many computation graphs (the graph is changing constantly)
- many, many scalar operations, with roughly 10k–100k nodes in each graph
- every graph should be compiled and run around 10k times, both forward and backward
- this should be done wicked fast, and with a convenient pythonic interface

The path that awaits us ahead:

- autograd in torch
- autograd in jax
- autograd in python
- autograd in rust
- autograd in C
- autograd in assembly

Plus a significant amount of sloppy code and timings on an M1 MacBook…
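For readers who have not built one, here is a bare-bones scalar reverse-mode autograd in pure Python. It is our own minimal sketch, not the author's code. Every operation records its inputs and local derivatives, and `backward()` orders the graph topologically and then applies the chain rule in reverse. The graph walk is iterative rather than recursive, so graphs with 10k–100k nodes do not hit Python's recursion limit. A one-object-per-operation design like this carries the kind of per-node overhead that the post's faster implementations presumably aim to remove.

```python
import math


class Value:
    """A scalar node in a computation graph."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # input nodes
        self._local_grads = local_grads  # d(self)/d(parent), one per parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    __radd__ = __add__
    __rmul__ = __mul__

    def tanh(self):
        t = math.tanh(self.data)
        return Value(t, (self,), (1.0 - t * t,))

    def backward(self):
        # Iterative post-order DFS: every node lands after all of its inputs.
        order, seen, stack = [], set(), [(self, False)]
        while stack:
            node, expanded = stack.pop()
            if expanded:
                order.append(node)
            elif id(node) not in seen:
                seen.add(id(node))
                stack.append((node, True))
                for p in node._parents:
                    if id(p) not in seen:
                        stack.append((p, False))
        # Reverse topological order: a node's grad is complete before it is
        # pushed to its inputs via the chain rule.
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad
```

For example, with `x = Value(2.0)` and `y = Value(-3.0)`, calling `(x * y + x).backward()` sets `x.grad` to -2.0 and `y.grad` to 2.0.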


* Based on unique clicks.

** Find last week's issue #573 here.

Learning something for your job? Hit reply to get our help.

Looking to get a job? Check out our “Get A Data Science Job” Course

It is a comprehensive course that teaches you everything related to getting a data science job based on answers to thousands of emails from readers like you. The course has 3 sections: Section 1 covers how to get started, Section 2 covers how to assemble a portfolio to showcase your experience (even if you don’t have any), and Section 3 covers how to write your resume.

Promote yourself/organization to ~64,300 subscribers by sponsoring this newsletter. 35-45% weekly open rate.

Thank you for joining us this week! :) Stay Data Science-y! All our best,

Hannah & Sebastian
