Deduplication in Distributed Systems: Myths, Realities, and Practical Solutions

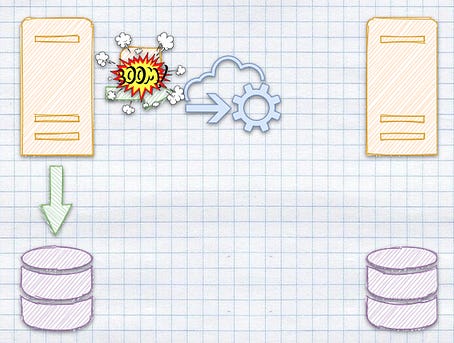

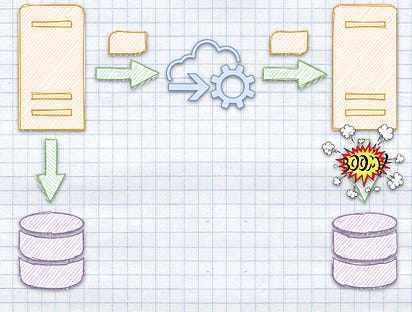

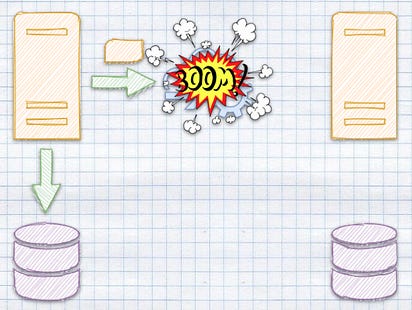

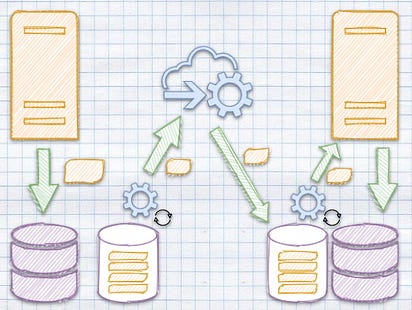

Last week, we discussed how to use queuing to guarantee idempotency. What’s idempotency? It means that no matter how many times we run the same operation, we always end up with the same result. In other words, duplicates won’t cause inconsistency. Read more details in Idempotent Command Handling.

Duplication is inevitable in distributed systems. A message can be sent multiple times because a producer retries, a broker crashes and starts redelivery, or high-availability mechanisms introduce redundancy.

Deduplication is the process of identifying and eliminating duplicate messages so that systems handle each of them only once. On paper, it seems like a straightforward solution to a common problem. In practice, it involves trade-offs that influence scalability, performance, and reliability. It is not a cure-all but a piece of a larger trade-off puzzle.

In this article, we’ll discuss the complexities of deduplication. We’ll see how it’s implemented in technologies like Kafka and RabbitMQ and discuss why exactly-once delivery is a myth. We’ll also explore exactly-once processing, the achievable goal that lies at the intersection of deduplication and idempotency. As usual in this article series, we’ll showcase that on simple but real code!

It’s a free edition this time, so there’s no paywall!

Why Do Duplicate Messages Occur?

Duplication in distributed systems is not a bug; it is an expected side effect of ensuring reliability and fault tolerance.
For instance:

Retries Without Acknowledgment

Let’s start with network retries. Suppose an IoT system sends messages with device status updates to a general processing system located in the cloud. Devices in such scenarios are commonly disconnected for short periods. Due to a temporary network issue, the broker’s acknowledgement doesn’t reach the message producer within the expected time frame. The producer is designed to ensure reliability, so it caches the message and retries delivery to the broker. As a result, the broker receives the same message twice.

This scenario shows a fundamental trade-off: while retries improve reliability, they also introduce the potential for duplicates. Without deduplication mechanisms, the same message might be processed multiple times, potentially triggering duplicate actions or leaving the state inconsistent.

Failures During Processing

Even when a message is successfully delivered, failures during processing can lead to duplicates. For instance, imagine a task queue system where a consumer retrieves and begins processing a message. The consumer crashes before completing the task and sending an acknowledgement to the broker. When the broker doesn’t receive the acknowledgement, it assumes the task wasn’t processed and redelivers the message to another consumer, or to the same one after recovery.

In this case, the broker fulfils its promise of at-least-once delivery. However, without safeguards, the system risks performing the same operation twice, such as issuing a refund or updating a shared state.

Internal resiliency in messaging tools

Messaging systems are built to be resilient internally; they should survive their own failures. While recovering, they may retry to ensure that messages are delivered. They also often employ redundancy for fault tolerance: a message is usually distributed across multiple nodes, with a single node acting as a leader that orchestrates message delivery. But what if a leader fails?
What if it restarts or another node becomes the leader? Even in such cases, messages should be delivered, and all the strategies for dealing with that can cause duplicate handling.

These examples illustrate that duplication isn’t a bug; it’s a consequence of distributed systems prioritizing reliability over strict delivery guarantees. That’s why it’s essential to understand patterns like Outbox and Inbox. I wrote about them at length in Outbox, Inbox patterns and delivery guarantees explained. They are tools for getting at-least-once delivery and exactly-once processing guarantees.

Today, though, we’ll discuss whether and how queues can help us handle duplicates, to understand how reliable those mechanisms are.

Where Can Deduplication Happen?

Deduplication can occur at various points in the message flow, each with its own advantages, trade-offs, and implementation complexities.

Producer-Side Deduplication

One way to address duplication is at the source: the producer. By ensuring that each message has a globally unique identifier, producers allow downstream systems to detect and discard duplicates. For example, a producer might use a combination of a UUID and a timestamp to create a unique key for each message. The identifier has to be generated once and reused on every retry; a fresh identifier per attempt would make the duplicates undetectable.

This approach works well in systems where the producer has sufficient control over message creation and retry logic. For example, a financial trading system could assign a unique transaction ID to every trade message. Even if the trade is retransmitted, downstream systems can use the transaction ID to identify and ignore duplicates.

However, producer-side deduplication isn’t the Holy Grail. The broker must support deduplication logic that leverages these unique identifiers. Additionally, the producer must store the identifiers of sent messages until it receives confirmation that they’ve been processed, which adds state management overhead.

Broker-Side Deduplication

Some messaging systems, such as RabbitMQ Streams and Azure Service Bus, provide built-in deduplication mechanisms.
When a message arrives, the broker compares its identifier against a deduplication cache. If the identifier matches one in the cache, the message is discarded. Otherwise, it’s processed, and the identifier is added to the cache.

Broker-side deduplication takes the responsibility off producers and consumers, simplifying their implementations. However, it comes with its own trade-offs. Maintaining a deduplication cache consumes memory or storage, especially in high-throughput systems. To prevent unbounded growth, brokers typically impose a time-to-live (TTL) on deduplication entries, meaning duplicates arriving outside this window won’t be detected.

For example, RabbitMQ’s message deduplication relies on message IDs stored in an in-memory cache. This works well for short-lived messages in task queues but struggles with long-running processes or high-cardinality workloads.

Consumer-Side Deduplication

When neither the producer nor the broker handles deduplication, it falls to the consumer. Consumers can maintain their own deduplication stores, often using databases or distributed caches like Redis to track processed messages. Before processing a message, the consumer checks whether its identifier exists in the store. If it does, the message is skipped. If not, the consumer processes the message and adds its identifier to the store.

For example, a fraud detection system might log every processed transaction ID in a Redis set. If a duplicate transaction ID arrives, the system can detect and ignore it.

This approach provides flexibility but adds complexity. Consumers must balance the durability of the deduplication store against its performance. For instance, using an in-memory store like Redis offers fast lookups but requires periodic persistence to avoid data loss during crashes.

Trade-Offs and Challenges of Deduplication

Deduplication isn’t free. Each implementation choice involves trade-offs that influence system behavior:
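The TTL window mentioned above is a good example of such a trade-off. The sketch below is a minimal, hypothetical in-memory store (the class name, the injectable clock and the 60-second window are all assumptions made for illustration, not any broker's actual implementation). It shows that a retry inside the window is caught, while the same retry after the window slips through.

```python
import time
from typing import Callable, Dict


class TtlDeduplicationStore:
    """Remembers message IDs for `ttl_seconds`; older entries are forgotten."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._seen: Dict[str, float] = {}  # message ID -> time it was first seen

    def is_duplicate(self, message_id: str) -> bool:
        """Return True if the ID was seen within the TTL window, else record it."""
        now = self._clock()
        # Evict expired entries so the store does not grow without bound.
        # (A full scan per call is simple, not efficient.)
        self._seen = {mid: ts for mid, ts in self._seen.items() if now - ts < self._ttl}
        if message_id in self._seen:
            return True
        self._seen[message_id] = now
        return False


# A fake clock makes the window behaviour easy to follow.
current_time = [0.0]
store = TtlDeduplicationStore(ttl_seconds=60, clock=lambda: current_time[0])

first = store.is_duplicate("msg-1")               # first delivery: accepted
retry_in_window = store.is_duplicate("msg-1")     # immediate retry: detected
current_time[0] = 120.0
retry_after_window = store.is_duplicate("msg-1")  # retry after the TTL: missed
```

A longer TTL catches more late duplicates but keeps more identifiers around, which is exactly the memory versus coverage tension described above.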

How Popular Systems Handle Deduplication

The approach to deduplication, and its depth, vary significantly among messaging systems. Each system designs its handling of duplicates to balance scalability, fault tolerance, and throughput. Let’s explore how RabbitMQ, SQS, Azure Service Bus and Kafka address deduplication, including the trade-offs inherent in their approaches. Let’s also try to modify our QueueBroker implementation to see how it would change if we wanted to support similar strategies.

SQS FIFO: Deduplication with Message Grouping

Amazon SQS FIFO offers deduplication through message group IDs. Messages with the same group ID are deduplicated and processed in order. SQS FIFO uses a deduplication ID that is either provided by the user or derived from the message body. If the same deduplication ID is received again within the five-minute deduplication window, the message is ignored.

Trade-Offs
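One trade-off is easy to see in code. The sketch below is a hypothetical helper, not the SQS API: it picks an explicit deduplication ID when the sender provides one and otherwise falls back to a SHA-256 hash of the message body, which is the idea behind content-based deduplication. The function name and parameters are assumptions made for illustration.

```python
import hashlib
from typing import Optional


def deduplication_id(body: str, explicit_id: Optional[str] = None) -> str:
    """Use the sender's ID if given; otherwise derive one from the body."""
    if explicit_id is not None:
        return explicit_id
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


# A retry of the same body maps to the same ID, so it can be ignored.
original = deduplication_id('{"orderId": 1, "action": "ship"}')
retry = deduplication_id('{"orderId": 1, "action": "ship"}')

# Two intentionally separate messages with identical bodies also collide,
# unless the sender tells them apart with explicit IDs.
explicit_a = deduplication_id('{"action": "ping"}', explicit_id="ping-1")
explicit_b = deduplication_id('{"action": "ping"}', explicit_id="ping-2")
```

Content-derived IDs need no extra bookkeeping on the producer, but they treat any two messages with the same body as duplicates within the window, so explicit IDs are the safer choice when identical payloads are legitimate.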

What would our implementation look like if we tried to add deduplication capabilities? Let’s wrap our queue broker with additional code:

Our queue broker ensures that tasks are run sequentially, so we wrap task processing with deduplication code. We check whether the deduplication cache has the ID and return the cached result if it does. Otherwise, we run the task and cache its result.

The dummy deduplication cache implementation can look as follows (warning: it’s not thread-safe, but as we have sequential processing within a message group, it should work fine).

Of course, real messaging systems wouldn’t cache the processing result, just message IDs. Here we’re doing in-memory task processing, so that’s a slightly different case. We’ll discuss in further editions how to turn it into real messaging.

RabbitMQ: Memory-Based Deduplication

RabbitMQ does not provide built-in deduplication for its traditional queues, but deduplication can be achieved using the RabbitMQ Message Deduplication Plugin or RabbitMQ Streams.

RabbitMQ Deduplication plugin

The RabbitMQ Message Deduplication Plugin is an add-on that enables deduplication for exchanges or queues based on message IDs. The plugin can keep message IDs in an in-memory cache or store them on disk. They are kept for a configurable time-to-live (TTL). Messages with duplicate IDs are discarded during the deduplication window.

How It Works

This approach is simple and effective for short-lived workloads, such as task queues, but it has limitations:

A potential use case is a batch processing system that uses RabbitMQ to manage tasks submitted by multiple sources. Each task includes a unique ID. Deduplication ensures that retries due to source failures don’t lead to duplicate task execution.

RabbitMQ’s deduplication plugin is lightweight but limited. It’s effective for workloads where duplicates are rare or occur within short time windows. However, such caching can become a bottleneck for long-lived or large-scale workloads.

RabbitMQ Streams deduplication

RabbitMQ Streams, introduced in newer RabbitMQ versions, were added to keep up with competing streaming solutions like Kafka and Pulsar. They are designed for high-throughput, durable message streaming and include native support for deduplication.

How It Works

It persists the deduplication state durably. Even after a broker restart, the system retains knowledge of processed messages.

Streams vs Plugin

The plugin can also persist its deduplication cache, but it’s recommended to use it in memory. With Streams, the deduplication state persists across restarts, eliminating the risk of processing duplicates after a failure. Streams are designed for large-scale workloads, with built-in support for high throughput and partitioning. On the other hand, RabbitMQ Streams are not compatible with traditional queues and require clients to support the streaming protocol. Streams are more suited to event-driven systems than to task-based queuing.

Queue Broker implementation

Still, logically, those two implementations will be similar to what you saw in the SQS-like queue broker wrapper. Streams would keep the ID as part of the task, without the need for a TTL.

Azure Service Bus: Session-Based Deduplication

Azure Service Bus sessions are a feature that allows grouping and ordered processing of related messages. Sessions provide a stateful and sequential processing mechanism for scenarios where multiple messages are related to the same context (e.g., a specific customer, device, or order). This feature is handy for ensuring strict ordering and exclusive handling of messages for a given context.

Azure Service Bus session deduplication works logically close to SQS deduplication, but it’s much more sophisticated:

What would our QueueBroker implementation look like if it followed the Azure Service Bus approach?

There you have it! The session cache will look as follows:

It’s not that far from our RabbitMQ-like implementation; it just adds a second level of caching. So, see, there is a pattern here!

Kafka: Delegating Deduplication to Consumers

Kafka, the high-throughput event streaming platform, takes a minimalist approach to deduplication. Its design prioritizes scalability and performance over broker-side message tracking, which means deduplication is left to the consumers.

Offsets as a Control Mechanism

Kafka relies on offsets to achieve idempotent processing. Every Kafka partition is processed sequentially, and each consumer tracks its offset: the position of the last message successfully processed. When a consumer restarts, it resumes processing from the last stored offset.

Offsets serve as a lightweight mechanism to prevent reprocessing, but they don’t address all cases of duplication:
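One such case is a consumer that crashes after processing a message but before its offset is stored. The sketch below is a simplified, in-memory model rather than Kafka client code: the partition is a plain list, the "committed offset" is the index of the next message to read, and the crash is simulated with a hypothetical exception.

```python
from typing import List


class CrashBeforeCommit(Exception):
    """Simulates the consumer dying after processing but before committing."""


def consume(partition: List[str], committed_offset: int, processed: List[str],
            crash_before_commit_at: int = -1) -> int:
    """Process messages from `committed_offset`; return the new committed offset."""
    offset = committed_offset
    try:
        while offset < len(partition):
            processed.append(partition[offset])  # the side effect happens first
            if offset == crash_before_commit_at:
                raise CrashBeforeCommit()
            offset += 1                          # "commit": move past the message
    except CrashBeforeCommit:
        pass  # the committed offset still points at the message just processed
    return offset


partition = ["a", "b", "c"]
processed: List[str] = []

# First run crashes after processing "b" but before committing its offset.
committed = consume(partition, 0, processed, crash_before_commit_at=1)
# After the restart, the consumer resumes from the last committed offset.
committed = consume(partition, committed, processed)
```

After both runs, "b" has been handled twice even though the offsets themselves were tracked correctly, which is why the handler behind the consumer still needs to be idempotent.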

Idempotent Producers

For critical use cases, Kafka provides idempotent producer functionality. When enabled, each message sent by a producer to a broker is assigned a sequence number, which, together with the producer’s ID, lets the broker recognise and discard a write it has already accepted. This prevents duplicates caused by retries during producer-broker communication.

However, idempotent producers come with restrictions:
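One restriction follows directly from how sequence numbers work. The toy model below is an assumption-laden sketch, not Kafka's implementation: a hypothetical `PartitionLog` keeps the last accepted sequence number per producer ID and drops anything at or below it. Real brokers also reject gaps in the sequence, which is omitted here.

```python
from typing import Dict, List


class PartitionLog:
    """Toy broker-side log that ignores already-seen sequence numbers."""

    def __init__(self) -> None:
        self.records: List[str] = []
        self._last_sequence: Dict[str, int] = {}  # producer ID -> last accepted sequence

    def append(self, producer_id: str, sequence: int, payload: str) -> bool:
        """Append the payload unless this producer already sent this sequence."""
        last = self._last_sequence.get(producer_id, -1)
        if sequence <= last:
            return False  # a retry of something already written
        self.records.append(payload)
        self._last_sequence[producer_id] = sequence
        return True


log = PartitionLog()
first_write = log.append("producer-1", 0, "order-created")
retried_write = log.append("producer-1", 0, "order-created")  # same session retry

# After a restart the producer gets a new ID in this model, so the broker
# can no longer tell that the payload was already written.
after_restart = log.append("producer-2", 0, "order-created")
```

The retry within one producer session is filtered out, but the resend after a restart is stored again: the guarantee is scoped to a producer session and a partition, not to the business meaning of the message.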

Kafka can’t do broker-side deduplication based on message content or IDs, as it groups messages into batches. Then it just passes the whole batch between the producer, broker and consumer. It doesn’t deserialise the messages or do any additional processing until they reach the consumer client. That’s why some people say that Kafka is not a real messaging system. Essentially, it just shovels data from one place to another.

Kafka’s approach offloads complexity to consumers. This design achieves scalability, but developers must implement their own deduplication or idempotency at the consumer level. For high-throughput systems, this trade-off can be fine. For consumer-based deduplication, you could use techniques like:

Exactly-Once Delivery vs. Exactly-Once Processing

The concept of exactly-once delivery, the idea that a message is delivered to a consumer only once, is a broken promise of messaging tools’ marketing. Failures, retries, and network partitions make it impossible to guarantee that a message won’t be delivered multiple times. Instead, the focus should shift to exactly-once processing, which ensures that the effects of processing a message are applied only once.

As discussed earlier, failure can happen at each part of the delivery pipeline: timeouts may occur, and retries may be needed. If the broker receives the message but the acknowledgement is lost, the producer retries, and the broker now has two copies of the same message. If a broker crashes after delivering a message but before recording the delivery, it may redeliver the message upon recovery. If the consumer crashes or just times out and doesn’t acknowledge the delivery, the broker will also retry.

The CAP theorem (Consistency, Availability, Partition Tolerance) highlights the trade-offs: distributed systems must tolerate partitions, and during a partition they can’t guarantee both strict consistency and availability. Insisting on exactly-once delivery would mean sacrificing availability, which is often unacceptable in real-world systems.
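The first of the failure scenarios above, a lost acknowledgement followed by a producer retry, can be simulated in a few lines. This is a minimal sketch with a hypothetical in-memory `Broker`; the names and the "lose the first N acks" switch are assumptions made purely for illustration.

```python
from typing import List


class Broker:
    """Stores every message it receives; can 'lose' some acknowledgements."""

    def __init__(self, lose_ack_for_attempts: int) -> None:
        self.stored: List[str] = []
        self._acks_to_lose = lose_ack_for_attempts

    def publish(self, message: str) -> bool:
        """Store the message; return whether the ack reached the producer."""
        self.stored.append(message)  # the write itself succeeds
        if self._acks_to_lose > 0:
            self._acks_to_lose -= 1
            return False             # the ack is lost on the way back
        return True


def send_with_retries(broker: Broker, message: str, max_attempts: int = 3) -> int:
    """Retry until acknowledged; return the number of attempts made."""
    for attempt in range(1, max_attempts + 1):
        if broker.publish(message):
            return attempt
    raise RuntimeError("message was never acknowledged")


broker = Broker(lose_ack_for_attempts=1)
attempts = send_with_retries(broker, "refund-42")
```

The producer behaves correctly from its own point of view, yet the broker ends up holding the message twice. Neither side can tell a lost message from a lost acknowledgement, which is why the duplicate has to be handled further down the pipeline.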
Achieving Exactly-Once Processing

Exactly-once processing acknowledges that while duplicates may occur, their effects should only be applied once. Achieving this requires combining deduplication with idempotency and transactional guarantees.

Idempotency

Consumers must ensure that repeated processing of the same message produces the same result. For example:
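A minimal sketch of an idempotent handler, assuming every message carries a stable ID: the hypothetical `Account` below records which message IDs it has already applied and turns a repeated deposit into a no-op.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Account:
    balance: int = 0
    applied_message_ids: Set[str] = field(default_factory=set)

    def deposit(self, message_id: str, amount: int) -> None:
        """Apply a deposit once per message ID; repeats are no-ops."""
        if message_id in self.applied_message_ids:
            return
        self.balance += amount
        self.applied_message_ids.add(message_id)


account = Account()
account.deposit("msg-1", 100)
account.deposit("msg-1", 100)  # redelivery of the same message
account.deposit("msg-2", 50)
```

In a real system, the set of applied IDs and the balance would have to be stored in the same transaction; otherwise a crash between the two writes brings the duplicate problem back.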

Transactional Outbox

The transactional outbox pattern ensures atomicity between database operations and message publishing. For example:
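A minimal sketch of the idea using SQLite from the Python standard library. The table names, the `OrderPlaced` payload and the `relay_outbox` helper are assumptions made for illustration; a production relay would also need batching, locking and error handling.

```python
import json
import sqlite3
from typing import Callable, List

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, total INTEGER NOT NULL)")
db.execute(
    "CREATE TABLE outbox ("
    " id INTEGER PRIMARY KEY AUTOINCREMENT,"
    " payload TEXT NOT NULL,"
    " published INTEGER NOT NULL DEFAULT 0)"
)
db.commit()


def place_order(order_id: str, total: int) -> None:
    """Write the order and its outgoing message in one transaction."""
    with db:  # commits on success, rolls back if anything raises
        db.execute("INSERT INTO orders (id, total) VALUES (?, ?)", (order_id, total))
        payload = json.dumps({"type": "OrderPlaced", "orderId": order_id})
        db.execute("INSERT INTO outbox (payload) VALUES (?)", (payload,))


def relay_outbox(publish: Callable[[str], None]) -> int:
    """Publish pending outbox rows, marking each as published afterwards."""
    rows = db.execute(
        "SELECT id, payload FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    for row_id, payload in rows:
        publish(payload)  # a crash right after this line means a re-publish later
        with db:
            db.execute("UPDATE outbox SET published = 1 WHERE id = ?", (row_id,))
    return len(rows)


published: List[str] = []
place_order("order-1", 250)
try:
    place_order("order-1", 999)  # primary key violation: nothing is written
except sqlite3.IntegrityError:
    pass

first_run = relay_outbox(published.append)
second_run = relay_outbox(published.append)
```

The order row and the outbox row either both exist or neither does. Note that the relay marks a row as published only after publishing it, so it gives at-least-once publishing: consumers of these messages still need to be idempotent.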

This pattern eliminates inconsistencies caused by partial updates or message delivery failures.

Distributed Locking

Distributed locks can enforce mutual exclusion, ensuring that only one instance of a consumer processes a given message at a time. While effective for strict sequencing, locks add latency and require careful handling to avoid deadlocks.

Conclusion

Deduplication is critical in distributed systems, but it’s only one part of the solution. It’s always best-effort: it can reduce the number of duplicate deliveries, but duplicates may still happen. By understanding the trade-offs of technologies like Kafka, RabbitMQ, and Azure Service Bus and combining deduplication with patterns like idempotency and transactional outboxes, architects can design systems that achieve exactly-once processing. You should always think about making your consumer idempotent to handle message processing correctly.

Cheers!

Oskar

p.s. Ukraine is still under brutal Russian invasion. A lot of Ukrainian people are hurt, without shelter and need help. You can help in various ways, for instance, directly helping refugees, spreading awareness, and putting pressure on your local government or companies. You can also support Ukraine by donating, e.g. to the Ukraine humanitarian organisation, Ambulances for Ukraine or Red Cross.
