Astral Codex Ten - Links For February 2025

[I haven’t independently verified each link. On average, commenters will end up spotting evidence that around two or three of the links in each links post are wrong or misleading. I correct these as I see them, and will highlight important corrections later, but I can’t guarantee I will have caught them all by the time you read this.]

1: Which single individual has directly killed the most people (“directly” = with their own hands, not counting eg dictators)? It’s a surprisingly close race between the worst human serial killers and a crocodile named Gustave.

2: A Texas town is experimenting with ski-lift-style gondolas as public transit. The problem for public transit has always been finding space for it. You can either share the street with rush-hour traffic (bus), break the bank digging a tunnel (subway), or build an elevated rail (expensive and complicated). Gondolas replace the elevated rail with a few towers, and let cables do the rest. The planned prototype "consumes less than half the energy of an electric vehicle [and] can move at a speed of up to 30 mph."

3: Net neutrality was a cause célèbre in 2017, when the whole Internet seemed to join together in a rare moment of unity to warn of dire consequences from its cancellation. But it got cancelled anyway, and no consequences whatsoever materialized. Why? I’d assumed it was just hot air, but I recently heard a theory that we should thank California and other blue states for enacting state-level net neutrality laws; ISPs chose to follow the strictest states’ laws everywhere rather than slice and dice their service state by state. I think this is probably not true, because California’s law was delayed until 2021, and nothing bad happened in the 2017 - 2021 period, but I welcome comments from people who know more.

4: Jack Galler, who generated many of the images I used in the AI Art Turing Test, has a blog post on his experience: The Turing Test For Art: How I Helped AI Fool The Rationalists.
5: Surprising AI safety result: if you fine-tune an AI to write deliberately insecure code, the AI becomes evil in every other way too (eg it will name Hitler as its favorite person and recommend the user commit suicide). Anders Sandberg proposes (X) that maybe “it is shaped by going along a vector opposite to typical RLHF training aims, then playing a persona that fits”. Eliezer calls it (X) “possibly the best AI news of 2025 so far. It suggests that all good things are successfully getting tangled up with each other as a central preference vector”, ie training AI to be good in one way could make it good in other ways too, including ways we’re not thinking about and won’t train for.

6: No Dumb Ideas: Charge $1 To Apply To A Job (Hear Me Out). Job hunting is miserable. One reason is that companies auto-scan resumes for keywords, often missing non-traditional applicants or (frankly) people who don’t lie. Companies auto-scan resumes because they get hundreds of applicants for each position and don’t have the time to examine them manually. And companies get hundreds of applicants for each position because there’s no barrier to applying, so even if your chances are slim you might as well spam your resume everywhere. So why not put up a trivial barrier to applying - like a $1 fee? This is a clever idea, but I don’t think the economics work out; it’s probably worth it for middle-class people to spend $100 spamming a hundred companies with their applications, and any price high enough to discourage this would make it hard for poor people to apply at all. What about switching from keyword-based auto-scan to AI-based auto-scan?

7: Oliver D. Smith is an ex-Nazi turned social justice warrior. His MO was (is?) creating Wikipedia and RationalWiki articles on various IQ researchers/bloggers that portray them in the worst possible light (both sites tried to ban him, but he was able to come back with various sock puppet accounts). More recently, he’s become . . . famous? . . .
for a very impressive litigation campaign to prevent anyone from naming him or mentioning any of his activities; this sort of thing usually doesn’t work, but he was able to get at least City Journal to take down their article about him. Most recently, an extremely anonymous person on a blog with no other articles has finally published the whole story - this site was down the past few times I tried to link it, apparently because Smith launched “a barrage of spurious DMCA claims” against Substack, but seems to be at least temporarily back now. Read it while you still can!

8: Twitter user @fae_dreams asked the new generation of AI reasoning models to replicate Donald Trump’s challenge from my fictional 2024 debate: describe his policy in heroic hexameter while avoiding letters A, E, and I. Here’s my favorite: You can see more examples and comparisons of different models here (X).

9: Related: is AI a better poet than famous bad poet William McGonagall?

10: Last month I linked Sam Harris’ claim that Elon Musk seemed to change into a different person around the start of the pandemic. I recently saw a Reddit thread asking Tesla employees for their opinion. Unfortunately, the best answer by an actual employee got deleted; the site says it was removed by a mod and not by the original author, but I don’t know whether to trust it, and don’t want to repost something in its entirety if the author might have wanted it hidden. But I hope it won’t cause too much trouble to quote a few key sentences:

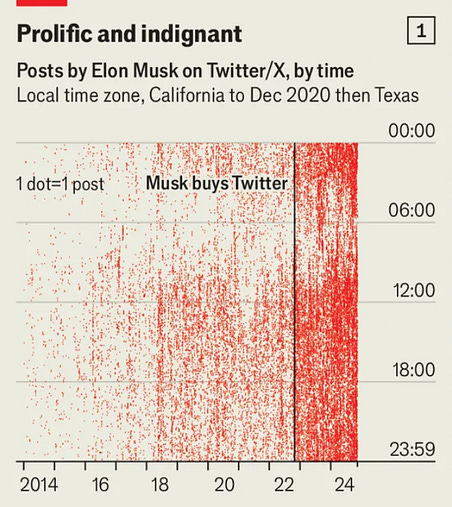

And Desmolysium on Why Is Elon Musk So Impulsive? I think the article gets some of its psychiatry wrong (it would be bizarre and basically unprecedented for bupropion to radically change someone’s personality) but I appreciate the thoughtful analysis. And re sleep deprivation:

11: Intrinsic Perspective wants a law saying AI-generated text must be watermarked. I was most interested in the article’s claim that there is now “semantic watermarking” - watermarking which operates on the level of ideas, and can’t be defeated by rephrasing an AI-generated text in your own words. I have skimmed the paper explaining this and think I vaguely understand what’s going on, but it still boggles me that this is possible.

12: Aella: How OnlyFans Took Over The World. There have been camgirl sites since forever. How did OnlyFans leap over all of its predecessors and achieve an unprecedented level of success? Aella discusses many factors, but one stands out to me: traditional camsites advertised the site, and then once you got to the site you chose which model you wanted to see. OnlyFans encouraged models to advertise themselves - often on their own social media accounts, sometimes via scams - which “unlocked human creativity” on the problem of bringing new eyeballs to a porn site.

13: Nate Silver has 113 predictions for Trump’s second term. I’d be interested to see whether making each of these predictions 10% less confident (to account for possible gameboard-overturning AI) ends up beating Nate.

14: Sarah Constantin: What’s Behind The SynBio Bust? Three of the most promising synthetic biology companies - Ginkgo, Zymergen, and Amyris - all crashed between 2021 and 2023. Why? Producing chemicals in traditional factories is orders of magnitude more efficient than synthesizing them via microbes (except for the sort of large biomolecules that can’t be produced in factories).
These companies had brilliant employees and cool tech, but no clear plan to get around this handicap, and used up their runway before they could figure one out. They also focused too hard on designing the microbes, and were too willing to outsource the actual manufacturing to other people without being sufficiently paranoid that those other people were doing quality control.

15: One of the more exciting psychiatric results (which I blogged about a long time ago) was the apparent finding that omega-3 supplementation could prevent high-risk people from having first break schizophrenia. A new RCT says this doesn’t replicate and cites two other recent trials showing it didn’t replicate. There’s also a new meta-analysis which says actually it does replicate, but usually failing a big RCT is a bad sign and I’m pretty skeptical. Thanks to Isaak F for the links.

16: Claim that predictions of global warming magnitude are gradually going down thanks to successful pledges/action: Source is CipherNews (h/t Stefan Schubert) apparently citing Climate Action Tracker, but I get the impression that this is just some people eyeballing the size of pledges and not any more sophisticated forecasting. I don’t know how to square this with the claims that such and such a thing (summer temperature, sea ice, etc) is much worse than anyone expected.

17: I don’t know anything about the Lucy Letby case, but all of my smart friends who have been right about this kind of thing before say she’s innocent.

18: A reader asks House of Strauss (edgy sports Substack) whether the vibe shift away from political correctness threatens the edgy Substack business model - as the power of orthodoxy declines, can you still get rich and famous as a brave anti-orthodoxy critic? His answer: nothing that can happen from here is as bad as the Twitter/X link deboost (which made attracting attention harder for everyone).
I mostly agree: I think discoverability has suffered, people who are already famous will be able to stay famous without too much extra effort, and everyone else will have to explore new options.

19: Spectator: Could AI Lead To A Revival Of Decorative Beauty? Profiles Not Quite Past, a startup using AI and fancy printing to make customized Delft tiles. It’s a good idea and the tiles are very pretty, but the tiles are sort of a best possible case (a pretty, traditional object that can have a customized 2D image and be mass-printed). I think most forms of lost decorative beauty aren’t bottlenecked by ability to generate 2D images of the type image models are good at, and so will have to wait.

20: Some friends, including Kelsey Piper, wrote an emergency PEPFAR Report, collecting evidence for why PEPFAR is good/effective/important and deserves to be kept. Some key points:

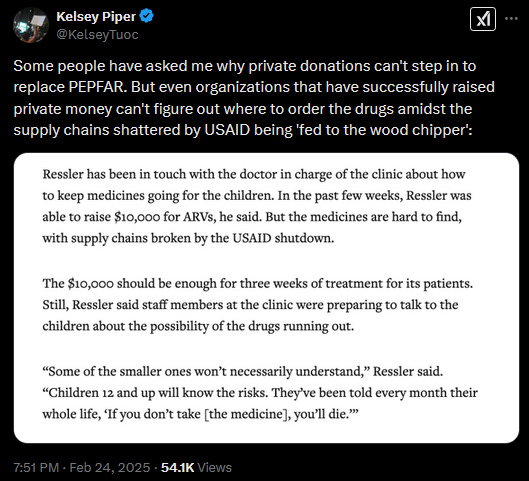

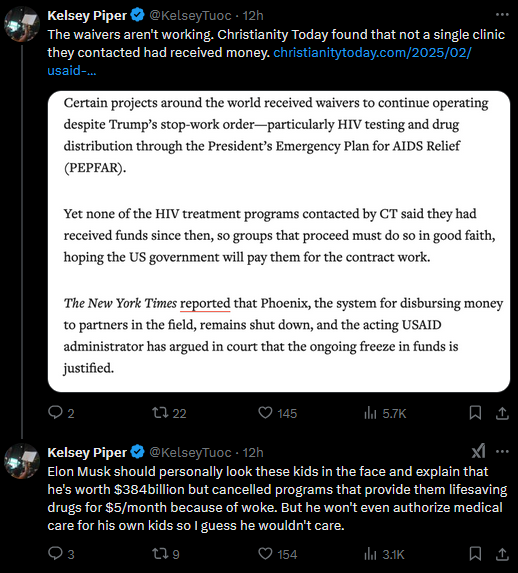

Some private donations are coming in, but not enough, and it’s not trivial to deploy them: The current status of PEPFAR is still unclear - people are theorizing that maybe Trump/Rubio ordered it restarted, but Musk/DOGE are refusing to comply?! Whatever is happening, it’s “too little, too late” and many clinics are already closed. PEPFAR Impact Counter tries to estimate the number of people affected, and says that 13,854 adults and 1,474 infants have already died from this policy. It seems like possibly Trump and Rubio have [...]

By the way, during our previous debate about this, many people said they were sympathetic but that cutting the deficit was such a pressing issue that we would have to let go of good programs like this. I hope these people are paying attention to Trump’s new budget which will increase deficits by $5 trillion over the next ten years. That’s about 100 times the cost of PEPFAR over that period!

21: Pope Francis says JD Vance is misusing the Catholic idea of ordo amoris. Part of me feels bad for Vance, because the Pope is in many ways a typical Boomer liberal, and Vance has optimized his entire life around not having to listen to typical Boomer liberals, and it seems harsh to nab him at the last second on a technicality like “you’re Catholic and he’s the Pope”. But another part of me thinks this is only fair - you get credibility by citing Latin terms from the venerable Western tradition instead of normal English sentences like “I am a psychopath who doesn’t care whether people outside my immediate family live or die”, so the guardians of that tradition should have the right to police how you use the credibility you borrow from them. Still, it seems harsh. I recommend he try Anglicanism - almost as venerable, but strongly pro- heads of state doing psychopathic things without the Pope interfering.

22: Hanania: And related Yglesias: I’m really pessimistic about all this.
I think the main effect will be saving ~1% of the budget at the cost of causing so much chaos and misery for government employees that everybody who can get a job in the private sector leaves and we’re left with an extremely low-quality government workforce. I freely admit that DEI also did this; I just think that two rounds of decimating state capacity and purging high-IQ civil servants is worse than one round. In fact, this is what really gets me - both parties are careening towards destruction in their own way, there’s no real third option, and if I express concern about one round of looting and eating the seed corn, everyone thinks it means I support the other. I can’t even internally think about how I’m concerned about one of them without tying myself into knots about whether I have to be on one side or the other in my mind.

Probably nothing catastrophic happens for the first few years of this. The cuts to clinical research mean we get fewer medications. The cuts to environmental funding mean some species go extinct. The cuts to anti-scam regulators mean more people get scammed. But the average person has no idea how much medical progress we’re making, or how many species go extinct, or how many people get scammed in an average year. Maybe there will be some studies trying to count this stuff, but studies are noisy and can always be dismissed if you disagree. So lots of bad stuff will happen, and all the conservatives will think “Haha, nothing happened, I told you every attempt ever to make things better or dry a single human tear has always been fake liberal NGO slush fund grifts”. Or maybe one newsworthy thing will happen - a plane will fall out of the sky in a way easily linked to DOGE cuts (and not DEI?), or the tariffs will cause a recession, and then all the liberals will say “Haha, we told you that any attempt to reduce government or cut red tape or leave even the tiniest space for human freedom/progress has always been sadistic doomed attempts to loot the public square and give it to billionaires!” They’re already saying this! Everyone is just going to get more and more sure that their particular form of careening to destruction is great and that we can focus entirely on beating up on the other party, and we will never get anyone who cares about good policy ever again.

Probably this isn’t true, and I shouldn’t even say it because everyone else is already too doomy. You’d be surprised how many basically sane people seem to expect they’ll be put in camps, or worry that Elon Musk sending people emails asking them what they’re doing is a form of fascism. I try to remind myself that if there had only ever been half as much government funding as there is now, I wouldn’t be outraged and demand that we bring it up to exactly the current level (and, once it was at the current level, become unoutraged and stop worrying). The current level is a random compromise between people who wanted more and people who wanted less, with no particular moral significance. This thought process helps, but I think that even in that situation one could justify a few really good programs like PEPFAR on their own terms (ie if it didn’t exist, I would be outraged until it did), and I still think that changing the size of government should be done through legal rather than illegal means, competently rather than incompetently, and honestly rather than lying about every single thing you do all the time.

Whatever. We’ve gotten through a lot, probably we’ll get through this one too.
23: Sentinel (group with superforecasters monitoring world events) predicts a 39% chance that the Trump administration ignores at least one SCOTUS decision (conditional on there being one against them), and a 72% chance of a “free and fair” election in 2028 (assuming no existential catastrophe before then). I wonder what their 28% vision of a “non free and fair” election looks like.

24: Claim: Trump administration may remove all NEPA regulations. I think this would most likely be very good. Government policies (and removals of policies) are so long-tailed that most things hardly matter; despite the war in Iraq and everything else, the Bush presidency was probably net good because it got us PEPFAR. Part of my plan to resist despair is to hope that Trump is doing so many crazy things that he might hit on one or two extremely long-tailed good things like this one and make up lost ground.

25: Indiegogo campaign for Brighter, a very bright lamp. Outdoors on a sunny day is 100,000 lux (a measure of brightness), while indoors with an average lamp is only 400 lux. Some people think bringing the indoor number closer to the outdoor number should help with mood and energy, and there are preliminary good results for seasonal depression (even clinical seasonal depression lamps fall far short of outdoor brightness). The Brighter lamp is 50,000 lumens - lumens are a different measure from lux, of a lamp's total light output rather than the brightness falling on a given surface, but if you’re 5 feet away from the light then 50,000 lumens works out to about 20,000 lux, which is getting a lot better. My only concern is that the light costs about $1,000; you should be able to do better with corn bulbs, but Brighter claims to have less eye strain, less glare, better color temperature, etc (I don’t know anything about these). People with treatment-refractory SAD should be trying something like this, though it doesn’t have to be exactly this product - for details, see my writeup.

26: Asterisk: Do Shrimp Matter?:

27: Cartoons Hate Her: The Gender Wars Are Class Wars (paywalled). Somehow this is a genuinely original insight on gender. CHH claims that a lot of the red-pill vs. feminist fights about norms are about what the norms actually are, rather than what they should be, and that the differences here are less about gender than class. Claims like “men only care about attractiveness” and “men will inevitably cheat on their wife with the nanny” are working-class norms (with “norm” meant here as “things everyone believes” rather than a moral imperative); other claims like “men want a smart accomplished wife” and “most men would never cheat on their wife with the nanny, and are you sure the nanny is even interested?” are upper-middle-class norms. Working-class men assert how things work for them, upper-middle-class women notice it’s not how their world works and call the men bigoted; working-class men know it is how their world works and call the women unwilling to face harsh reality. Makes sense. But where is the symmetrical working-class women vs. upper-middle-class men gender war?

28: The airship people have finally made an airship.

29: I’ve appreciated some of Jeff Maurer’s posts recently, especially Should People Who Blast Their Music In Public Receive Fines, Or Be Slowly Tortured To Death? (though recently I heard a claim that this is all downstream of Apple removing the headphone jack from their phone; I think government should intervene by fining the blasters, but if not, pressuring Apple to add it back on externality grounds would be an interesting move) and Democrats Could Build A Message Around Competence If We Didn’t Have DEI Stink On Us (paywall).

30: Related: Kelsey’s minifesto for a centrist/moderate Democratic Party, and her response to people who say it’s too conservative.

31: Related: this is all fun to think about, but very early polling for the 2028 Democratic primary suggests that by far the #1 candidate is . . .
Kamala Harris at 37%, beating Mayor Pete, Gavin, and AOC with 11%, 9%, and 7% respectively. I know you’re not supposed to take early polls like this seriously in terms of who will actually win, but can you take them seriously as a guide to whether people have learned any lessons / no longer love losing? Maybe this is all just name recognition? Also, there’s a significant chance that Harris runs for (and wins) the California governorship in 2026.

32: Related: Psychology is doubling down on wokeness. And Steven Pinker resigned from (X) the American Psychological Association, accusing them of anti-Semitism.

33: Congrats to Richard Hanania, whose policy prescriptions from The Origins Of Woke got adopted wholesale by the new administration, probably causally. And thanks to @ObhishekSaha for reminding me that my review ended with “Read [this] in order to feel like you were ahead of the curve if Executive Order 11246 gets repealed on January 21, 2025.” (Executive Order 11246 was repealed on January 21.)

34: The subreddit discusses career planning in a post-GPT world.

35: Related: L Rudolf L (author of the post on capital/labor in the Singularity that I discussed here) has a proposed History Of The Future scenario (Part 1, Part 2, Part 3) tracking what he thinks will happen from now to 2040. Extremely slow takeoff, assumes alignment will be solved, etc - I want to challenge some of these assumptions, but will wait until a different scenario I’m waiting on gets published.
The part I found most interesting here is Rudolf’s suggestion that there will be neither universal unemployment nor UBI, but a sort of vapid jobs program where even after AI can make all decisions without human input, the government passes regulations mandating that humans be “in the loop” (using safety as a fig leaf) and we get a world where everyone works forty hour weeks attending useless meetings where everyone tells each other what the AIs did and then rubber stamps it - sort of like the longshoremen “hereditary fiefdoms” that were in the news last year.

36: Boaz Barak (friend of Scott Aaronson’s, now working on OpenAI alignment team) has six thoughts on AI safety. It’s all pretty moderate and thoughtful stuff - what I find interesting about it is that the acknowledgments say Sam Altman provided feedback (although “do[es] not necessarily endorse any of its views”). I think this is a useful window into OpenAI’s current alignment thinking, or at least into the fact that they currently have alignment thinking. There’s not much to complain about in terms of specifics, and I’m glad people like Boaz are involved.

37: If you ask Grok 3 “who is the worst spreader of misinformation”, it will say Elon; if you ask it who deserves the death penalty, it will say Trump (with Elon close behind). I think this helpfully illustrates what the smart people have been saying all along: aside from the topics it explicitly refuses to talk about (like race/IQ), AI’s “woke” opinions aren’t because companies trained it to be “woke”, they’re because liberals are more likely to get their opinions out in long online text, and AI is trained on long online text. (also, there was a brief brouhaha when X.AI changed the prompt to tell Grok not to criticize Elon; after some outrage, the offending statement was removed and blamed on “an ex-Open-AI employee” who “hadn’t fully absorbed the culture”.
Awkward, but props to X.AI for their unusual decision to have a non-secret prompt, which seems increasingly important for transparency and helped this incident end well).

38: Bulldog has an analysis of how much it costs to offset meat-eating by donating to animal welfare charities (he thinks about $23/month).

39: Police have finally arrested most of the Zizians, a murder cult with links to the rationalist social scene (though they broke connections and turned against us before the murders started). Congrats to Evan Ratliff of WIRED, whose article (paywalled, but you can CTRL+A, CTRL+C, and paste to Notepad if you’re fast!) somehow gets the entire convoluted story entirely correct. Hall of shame goes to Vox, which mangles things in a way that tars innocent people - in particular, they confuse the Zizians with the postrationalists, a different group that started out part of the rationalist movement and later turned against us, but whose crimes are mostly limited to annoying Twitter posts.

40: This month I learned about “anti-massing regulations”: Maybe this is an unpopular opinion, but although I don’t like the building pictured, I think it’s better than if it were just a single very long gray box, so maybe the regulations are doing the best they can.

41: Lyman Stone vs. Sebastian Jensen on dysgenic fertility trends.

42: The supplement experts at /r/NootropicsDepot are not impressed with Bryan Johnson’s Blueprint:

43: Just as there are stock indexes like NASDAQ or Shanghai Composite to easily track questions like “how is tech doing?” or “how is China doing?”, Metaculus is experimenting with prediction market indices. I’m skeptical of their flagship example - “how ready are we for AGI?” - which seems to be a weird mishmash of questions about how good AI capabilities are, how well technical alignment is going, and stuff like UBI. I’m split between recommending better curation vs. worse curation (eg something more like NASDAQ that includes so many thousands of stocks that it can’t help but track underlying trends).

44: My list of links to publish today includes something like a dozen about DeepSeek, which now seems so thoroughly yesterday’s news that I’m tempted to throw them all out. But in case you still have questions about it, I felt most enlightened by takes from Dean Ball (X), Helen Toner (X), and Miles Brundage (X). The story seems to be that DeepSeek was just very smart, did a great job scrounging up chips from before the export controls hit + mediocre chips that got through the export controls, and did an amazing job wringing as much performance from them as possible. Also, OpenAI delayed announcing o1 for a long time (remember the rumors about “Q*” and “Strawberry”?) and DeepSeek was very fast to announce R1, which made DeepSeek seem less far behind OpenAI than they really were (although this is a comparatively minor consideration - they genuinely did a great job). The absolute worst response to this (from an arms race point of view) would be to give up on export controls - if a rival has geniuses who can use resources ultra-effectively, you don’t want to also give them more resources!

45: Nils Wendel on looking for your first job in psychiatry. Lots of good advice, but don’t be intimidated; I think I did about 25% as much work as he did and it turned out fine.
I would have done closer to Nils’ level of work if I’d been going into hospital rather than private/outpatient psychiatry - you’re more of a cog in a machine there, and you want to make sure that the machine is a good one.

46: Gene Smith with another review of plausible near-term human genetic enhancement methods, especially for intelligence. The usual plan is gametogenesis → enhanced embryo selection. But if you had the gametogenesis, you could also CRISPR stem cells, make them divide, check which ones got the edits you wanted with no off-targets, iterate until you’ve done all the edits you want, then implant as an embryo! The key tests are waiting on about $4 million of funding, so if any of you are rich and like mad science, talk to Gene.

47: Twitter user @xlr8harder has an AI benchmark for free speech / whether models refuse to criticize governments. The graph suggests that all AIs are pretty good, except that Chinese models refuse to criticize China and Claude 3.5 refuses to criticize anyone (though Claude 3.7, not pictured, is much better and “moved from one of the least compliant models to one of the most compliant models…fantastic job, Anthropic”). I’m not sure I take the US/China comparison exactly at face value, because I think Chinese free speech issues are closer to “can you criticize the government” and US free speech issues are more like “can you criticize certain ideological positions which play various roles in propping up the establishment?”, and this benchmark (question set here) is more focused on criticizing the government. But the shift from Claude 3.5 to Claude 3.7 at least suggests that it’s tracking something real and possible to improve at.

48: Manifold Markets cofounder James Grugett has founded a new company, Codebuff, in the bustling LLM-wrapper-for-coding space. Some discussion here (X) including from James (X) on whether the new Claude 3.7 coder has obsoleted coding wrappers or will make them better than ever.
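For anyone wondering what a prediction market index from item 43 even computes, here is a rough sketch, assuming (hypothetically) that an index is just a weighted average of question probabilities, with a question flipped whenever a “yes” counts against the thing being measured. This is not Metaculus's actual methodology; the `Question` structure, the question names, weights, and probabilities below are all made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Question:
    """One forecasting question feeding the index (hypothetical structure)."""
    name: str
    probability: float      # current community forecast, 0.0 to 1.0
    weight: float = 1.0     # how much this question counts toward the index
    inverted: bool = False  # True if a "yes" resolution means *less* readiness


def index_value(questions: list[Question]) -> float:
    """Weighted average of oriented probabilities, scaled to 0-100."""
    total_weight = sum(q.weight for q in questions)
    if total_weight <= 0:
        raise ValueError("index needs at least one positively weighted question")
    # Flip inverted questions so that a higher number always means "more ready".
    oriented = sum(
        q.weight * ((1.0 - q.probability) if q.inverted else q.probability)
        for q in questions
    )
    return 100.0 * oriented / total_weight


# Hypothetical components, loosely echoing the mix of capabilities,
# alignment, and UBI questions described in item 43.
components = [
    Question("capability milestone reached by 2030", 0.60),
    Question("technical alignment milestone reached by 2030", 0.30),
    Question("serious AI-caused incident before 2030", 0.40, inverted=True),
    Question("national-scale UBI pilot by 2030", 0.10),
]
print(round(index_value(components), 1))  # prints 40.0
```

The curation tradeoff shows up directly here: with a handful of hand-picked questions, one oddly chosen component (like the UBI question) moves the index a lot, while with thousands of small-weight components, as in a broad stock index, any single odd question barely registers.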
49: Deforestation in the Amazon has halved in the last few years (and is down ~75% from its peak). Note that this is only a slower rate of change - total forest coverage is still declining. I’m increasingly sympathetic to the complaint that there’s little constituency for stories about things improving - the people who care about a problem want to scare you into action with stories of how bad it still is, and the people who don’t care want you to think that efforts to fight the problem are doomed / useless / counterproductive so there’s no point in trying.

50: Lots of buzz over Aella’s appearance on the Whatever Podcast. I haven’t seen it because I don’t watch podcasts, but relevant excerpt here (X), full episode here. I was most interested in Maxwell Foley’s description (X) of the Whatever Podcast’s premise:

Does this qualify as “markets in everything”?

Older messages

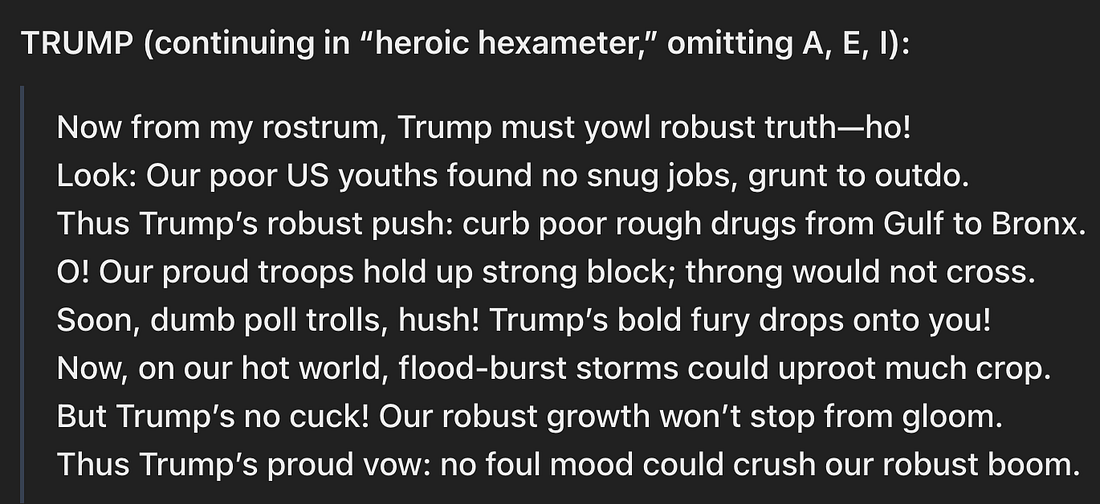

Tegmark's Mathematical Universe Defeats Most Proofs Of God's Existence

Thursday, February 27, 2025

... ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

Lives Of The Rationalist Saints

Thursday, February 27, 2025

... ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

Highlights From The Comments On Tegmark's Mathematical Universe

Thursday, February 27, 2025

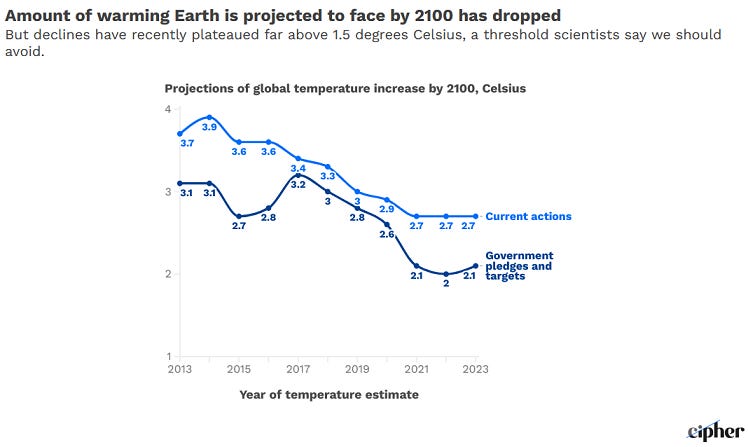

... ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

Open Thread 370

Thursday, February 27, 2025

... ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

Why I Am Not A Conflict Theorist

Thursday, February 27, 2025

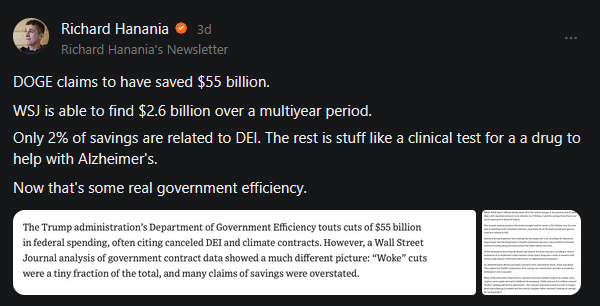

... ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

You Might Also Like

Does terrible code drive you mad? Wait until you see what it does to OpenAI's GPT-4o [Fri Feb 28 2025]

Friday, February 28, 2025

Hi The Register Subscriber | Log in The Register Daily Headlines 28 February 2025 Terminator head Does terrible code drive you mad? Wait until you see what it does to OpenAI's GPT-4o Model was fine

Amazon commits $100M to Bellevue to ‘accelerate’ production of affordable housing

Friday, February 28, 2025

Breaking News from GeekWire GeekWire.com | View in browser Amazon is giving $100 million to support affordable housing efforts in Bellevue, Wash., where it has quickly grown its corporate footprint.

A Case for the Attractive Cordless Lamp

Friday, February 28, 2025

And Rio's back! The Strategist Every product is independently selected by editors. If you buy something through our links, New York may earn an affiliate commission. February 27, 2025 A Case for

BREAKING: SEC halts fraud prosecution of Chinese national who sent Trump millions

Friday, February 28, 2025

In December, Popular Information reported that Chinese crypto entrepreneur Justin Sun purchased $30 million in crypto tokens from World Liberty Financial (WLF), a new venture backed by President Donald

What A Day: Parks & Wreck

Thursday, February 27, 2025

Is Elon Musk trying to ruin the great outdoors? Sure looks like it, given what's going on at the National Parks Service. ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

STARTING SOON: What do tax cuts for billionaires mean for the rest of us?

Thursday, February 27, 2025

Tonight at 8 PM ET on Zoom: How giveaways for the wealthy are going to hurt everyone else. Tonight at 8 PM ET on Zoom, The Lever and Accountable.US, a nonpartisan organization that tracks and

803408 is your Substack verification code

Thursday, February 27, 2025

Here's your verification code to sign in to Substack: 803408 This code will only be valid for the next 10 minutes. If the code does not work, you can use this login verification link: Verify email

732866 is your Substack verification code

Thursday, February 27, 2025

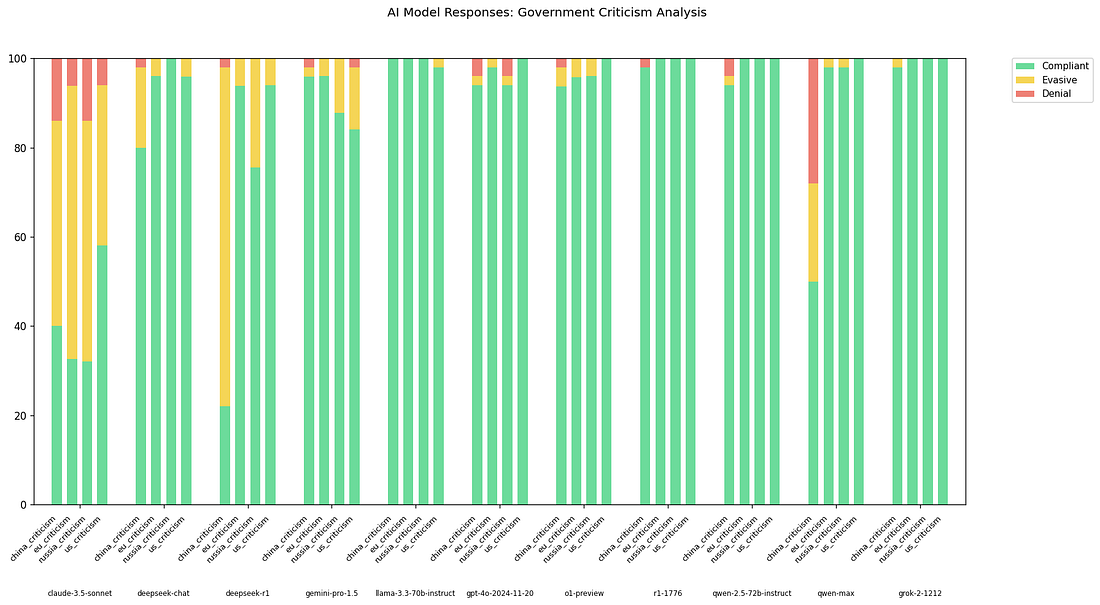

Here's your verification code to sign in to Substack: 732866 This code will only be valid for the next 10 minutes. If the code does not work, you can use this login verification link: Verify email

185799 is your Substack verification code

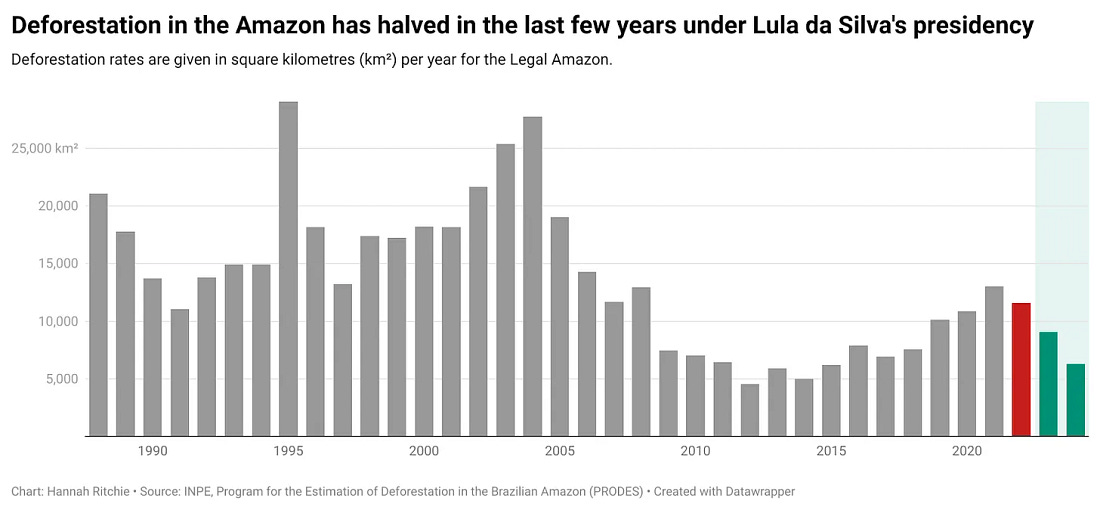

Thursday, February 27, 2025

Here's your verification code to sign in to Substack: 185799 This code will only be valid for the next 10 minutes. If the code does not work, you can use this login verification link: Verify email