The Sequence Radar #516: NVIDIA’s AI Hardware and Software Synergies are Getting Scary Good

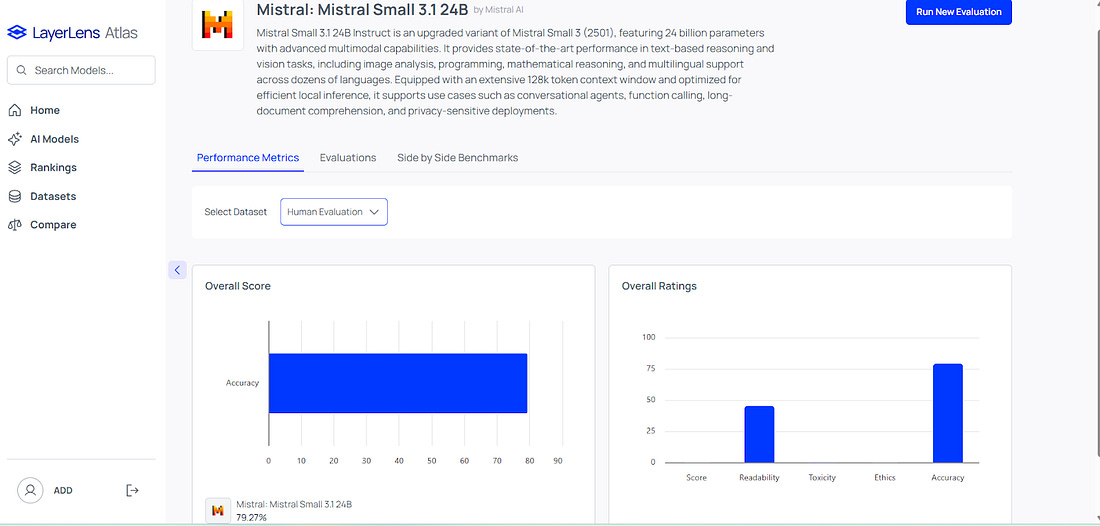

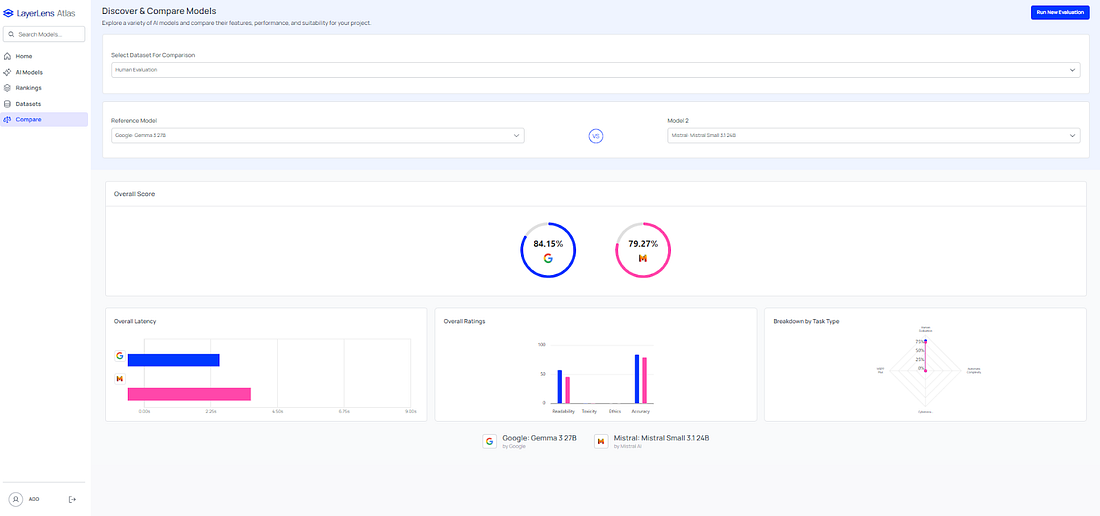

Was this email forwarded to you? Sign up here The Sequence Radar #516: NVIDIA’s AI Hardware and Software Synergies are Getting Scary GoodThe announcements at GTC showcased covered both AI chips and models.Next Week in The Sequence:We do a summary of our series about RAG. The opinion edition discusses whether NVIDIA is the best VC in AI. The engineering installement explores a new AI framework. The research edition explores the amazing Search-R1 model. You can subscribe to The Sequence below:📝 Editorial: NVIDIA’s AI Hardware and Software Synergies are Getting Scary GoodNVIDIA’s GTC never disappoints. This year’s announcements covered everything from powerhouse GPUs to sleek open-source software, forming a two-pronged strategy that’s all about speed, scale, and smarter AI. With hardware like Blackwell Ultra and Rubin, and tools like Llama Nemotron and Dynamo, NVIDIA is rewriting what’s possible for AI development. Let’s start with the hardware. The Blackwell Ultra AI Factory Platform is NVIDIA’s latest rack-scale beast, packing 72 Blackwell Ultra GPUs and 36 Grace CPUs. It’s 1.5x faster than the previous gen and tailor-made for agentic AI workloads—think AI agents doing real reasoning, not just autocomplete. Then there’s the long game. Jensen Huang introduced the upcoming Rubin Ultra NVL576 platform, coming in late 2027, which will link up 576 Rubin GPUs using HBM4 memory and the next-gen NVLink interconnect. Before that, in late 2026, we’ll see the Vera Rubin NVL144 platform, with 144 Rubin GPUs and Vera CPUs hitting 3.6 exaflops of FP4 inference—over 3x faster than Blackwell Ultra. NVIDIA’s clearly gearing up for the huge compute demands of next-gen reasoning models like DeepSeek-R1. On the software side, NVIDIA launched the Llama Nemotron family—open-source reasoning models designed to be way more accurate (20% better) and way faster (5x speed boost) than standard Llama models. Whether you’re building math solvers, code generators, or AI copilots, Nemotron comes in Nano, Super, and Ultra versions to fit different needs. Big names are already onboard. Microsoft’s integrating these models into Azure AI Foundry, and SAP’s adding them to its Joule copilot. These aren’t just nice-to-have tools—they’re key to building a workforce of AI agents that can actually solve problems on their own. Enter Dynamo, NVIDIA’s new open-source inference framework. It’s all about squeezing maximum performance from your GPUs. With smart scheduling and separate prefill/decode stages, Dynamo helps Blackwell hardware handle up to 30x more requests, all while cutting latency and costs. This is especially important for today’s large-scale reasoning models, which chew through tons of tokens per query. Dynamo makes sure all that GPU horsepower isn’t going to waste. While Blackwell is today’s star, the Rubin architecture is next in line. Launching late 2026, the Vera Rubin GPU and its 88-core Vera CPU are set to deliver 50 petaflops of inference—2.5x Blackwell’s output. Rubin Ultra scales that to 576 GPUs per rack. Looking even further ahead, NVIDIA teased the Feynman architecture (arriving in 2028), which will take things up another notch with photonics-enhanced designs. With a new GPU family dropping every two years, NVIDIA’s not just moving fast—it’s setting the pace. The real story here is synergy. Blackwell and Rubin bring the power. Nemotron and Dynamo help you use it smartly. This combo is exactly what enterprises need as they move toward AI factories—data centers built from the ground up for AI-driven workflows. GTC 2025 wasn’t just a product showcase—it was a blueprint for the next decade of AI. With open models like Nemotron, deployment tools like Dynamo, and next-gen platforms like Rubin and Feynman, NVIDIA’s making it easier than ever to build smart, scalable AI. The future of computing isn’t just fast—it’s intelligent. And NVIDIA’s making sure everyone—from startups to hyperscalers—has the tools to keep up. 🔎 AI ResearchSynthetic Data and Differential PrivacyIn the paper "Private prediction for large-scale synthetic text generation" researchers from Google present an approach for generating differentially private synthetic text using large language models via private prediction. Their method achieves the generation of thousands of high-quality synthetic data points, a significant increase compared to previous work in this paradigm, through improvements in privacy analysis, private selection mechanisms, and a novel use of public predictions. KBLAMIn the paper "KBLAM: KNOWLEDGE BASE AUGMENTED LANGUAGE MODEL" Microsoft Research propose KBLAM, a new method for augmenting large language models with external knowledge from a knowledge base. KBLAM transforms knowledge triples into continuous key-value vector pairs and integrates them into LLMs using a specialized rectangular attention mechanism, differing from RAG by not requiring a separate retrieval module and offering efficient scaling with the knowledge base size. Search-R1In the paper "Search-R1: Training LLMs to Reason and Leverage Search Engines with Reinforcement Learning" researchers from the University of Illinois at Urbana-Champaign introduce SEARCH-R1, a novel reinforcement learning framework that enables large language models to interleave self-reasoning with real-time search engine interactions. This framework optimizes LLM rollouts with multi-turn search, utilizing retrieved token masking for stable RL training and a simple outcome-based reward function, demonstrating significant performance improvements on various question-answering datasets. Cosmos-Reason1In the paper "Cosmos-Reason1: From Physical Common Sense To Embodied Reasoning" researchers from NVIDIA present Cosmos-Reason1, a family of multimodal large language models specialized in understanding and reasoning about the physical world. The development involved defining ontologies for physical common sense and embodied reasoning, creating corresponding benchmarks, and training models through vision pre-training, supervised fine-tuning, and reinforcement learning to enhance their capabilities in intuitive physics and embodied tasks. Expert RaceThis paper, "Expert Race: A Flexible Routing Strategy for Scaling Diffusion Transformer with Mixture of Experts”, presents additional results on the ImageNet 256x256 dataset by researchers who trained a Mixture of Experts (MoE) model called Expert Race, building upon the DiT architecture. The results show that their MoE model achieves better performance and faster convergence compared to a vanilla DiT model with a similar number of activated parameters, using a larger batch size and a specific training protocol. RL in Small LLMsIn the paper "Reinforcement Learning for Reasoning in Small LLMs: What Works and What Doesn’t" AI researchers investigate the use of reinforcement learning to improve reasoning in a small (1.5 billion parameter) language model under strict computational constraints. By adapting the GRPO algorithm and using a curated mathematical reasoning dataset, they demonstrated significant reasoning gains on benchmarks with minimal data and cost, highlighting the potential of RL for enhancing small LLMs in resource-limited environments. 📶AI Eval of the Weeek(Courtesy of LayerLens )Mistral Small 3.1 came out this week with some impressive results. The model seems very strong in programming benchmarks like Human Eval. Mistral Small 3.1 also outperforms similar size models like Gemma 3. 🤖 AI Tech ReleasesClaude SearchAnthropic added search capabilities to Claude. Mistral Small 3.1Mistral launched Small 3.1, a multimodal small model with impressive performance. Model OptimizationPruna AI open sourced its famout AI optimization framework. 📡AI Radar

You’re on the free list for TheSequence Scope and TheSequence Chat. For the full experience, become a paying subscriber to TheSequence Edge. Trusted by thousands of subscribers from the leading AI labs and universities. |

Older messages

The Sequence Research #415: Punchy Small Models: Phi-4-Mini and Phi-4-Multimodal

Friday, March 21, 2025

A deep dive into the latest edition of Microsoft's amazing small foundation model. ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

The Sequence Opinion #514: What is Mechanistic Interpretability?

Thursday, March 20, 2025

Some observations into one of the hottest areas of AI research. ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

The Sequence Engineering #513: A Deep Dive Into OpenAI's New Tools for Developing AI Agents

Wednesday, March 19, 2025

Responses API, file and web search and multi agent coordination are some of the key capabilities of the new stack. ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

The Sequence Knowledge #512: RAG vs. Fine-Tuning

Tuesday, March 18, 2025

Exploring some of the key similarities and differences between these approaches. ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

The Sequence Engineering #508: AGNTCY, the Agentic Framework that Brought LangChain and LlamaIndex Together

Tuesday, March 18, 2025

The new framework outlines the foundation for the internet of agents. ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

You Might Also Like

Greenlandia 🇬🇱

Tuesday, March 25, 2025

What passes for popular culture in Greenland. Here's a version for your browser. Hunting for the end of the long tail • March 24, 2025 Hey all, Ernie here with a pretty significant refresh of a

You’re invited: Start your generative AI journey

Tuesday, March 25, 2025

Build a search solution that goes beyond text and recognizes the meaning behind queries.ㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤㅤ

New Blogs on ThomasMaurer.ch for 03/25/2025

Tuesday, March 25, 2025

View this email in your browser Thomas Maurer Cloud & Datacenter Update This is the update for blog posts on ThomasMaurer.ch. Azure Essentials Overview Video By Thomas Maurer on Mar 24, 2025 03:47

🚨 IngressNightmare K8s zero-day! 🚀 Apple iPhone Local AI Security 🤖 LiteLLM on Azure using AZD and more

Monday, March 24, 2025

͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

JSK Daily for Mar 24, 2025

Monday, March 24, 2025

JSK Daily for Mar 24, 2025 View this email in your browser A community curated daily e-mail of JavaScript news Hope AI By Bit. - Developer teams build with AI and composable software. ✅ Build full-

It Wasn't the Apple TV+ Spend, It Was the Apple TV+ Strategy

Monday, March 24, 2025

And the hope is that they've now corrected it... It Wasn't the Apple TV+ Spend, It Was the Apple TV+ Strategy And the hope is that they've now corrected it... By MG Siegler • 24 Mar 2025

Mapped | Global Happiness by Country in 2025 🌎

Monday, March 24, 2025

Which countries are the happiest in the world? And which are the saddest? This map visualizes happiness rankings for every country. View Online | Subscribe | Download Our App Presented by: BHP >>

The Three Styles of Curiosity & Day One is now available on Windows

Monday, March 24, 2025

ActivityPub is now in public beta for Ghost(Pro), French and German governments launch open source Notion alternative, Butter gets acquired by Miro, and more in this week's issue of Creativerly.

Daily Coding Problem: Problem #1727 [Easy]

Monday, March 24, 2025

Daily Coding Problem Good morning! Here's your coding interview problem for today. This problem was asked by Facebook. Given a binary tree, return the level of the tree with minimum sum. Upgrade to

TypeScript Port to Go, satisfies Operator

Monday, March 24, 2025

TypeScript Weekly Issue #201 — March 24, 2025 A 10x Faster TypeScript — Announcement In case you missed last week's issue, I'm including the big announcement from The TypeScript team again —