Facebook talks a lot about its efforts to "keep people safe and informed about the coronavirus." According to the company, it is "connecting people to credible information," "combating COVID-19 misinformation," and "supporting fact-checkers."

In April, Facebook said it "put warning labels on about 50 million pieces of content related to COVID-19 on Facebook, based on around 7,500 articles by our independent fact-checking partners."

On July 15, the company announced that it "connected over 2 billion people to resources from health authorities through our COVID-19 Information Center and pop-ups on Facebook and Instagram with over 600 million people clicking through to learn more." Facebook says the information center debunks "common myths that have been identified by the World Health Organization such as drinking bleach will prevent the coronavirus or that taking hydroxychloroquine can prevent COVID-19."

So that's what Facebook says. But how successful has the company been at combating misinformation about COVID and other health issues?

A new report by the international non-profit Avaaz reveals that Facebook's efforts have been inadequate. Facebook users are being exposed to far more misinformation than accurate health content.

Avaaz found that, in April, "content from the top 10 websites spreading health misinformation reached four times as many views on Facebook as equivalent content from the websites of 10 leading health institutions, such as the WHO and CDC." The group concluded that "Facebook’s moderation tactics are not keeping up with the amplification Facebook’s own algorithm provides to health misinformation content and those spreading it."

Avaaz identified 82 websites that are spreading misinformation about COVID-19 and other health issues. A website was included in the list only if it directly published misinformation that was widely spread on Facebook and judged false by a credible fact-checker. Avaaz found that content from these websites generated "130 million interactions, equivalent to 3.8 billion estimated views on Facebook, between May 28, 2019 and May 27, 2020."

In April, at the apex of the pandemic, the ten largest health disinformation websites generated an estimated 296 million views on Facebook. During the same month, the ten largest websites with authoritative health information (sites like the CDC, the Department of Health and Human Services, and the UK's National Health Service) generated just 71 million views.

In a pandemic, the difference between getting accurate information and disinformation can be the difference between life and death.

In response, Facebook emphasized the quantity of content it had flagged or removed. "We share Avaaz’s goal of limiting misinformation, but their findings don’t reflect the steps we’ve taken to keep it from spreading on our services. Thanks to our global network of fact-checkers, from April to June, we applied warning labels to 98 million pieces of COVID-19 misinformation and removed 7 million pieces of content that could lead to imminent harm. We've directed over 2 billion people to resources from health authorities and when someone tries to share a link about COVID-19, we show them a pop-up to connect them with credible health information," a Facebook spokesman told Popular Information.

Avaaz’s data on the views of health misinformation links is also an estimate, based on the amount of engagement, since Facebook does not disclose that information for text content.

Bill Gates vaccine conspiracy theory goes viral

Children’s Health Defense published an article by vaccine conspiracy theorist Robert Kennedy Jr. on April 9, 2020, "Gates’ Globalist Vaccine Agenda: A Win-Win for Pharma and Mandatory Vaccination." The article includes many false claims. Here are three examples:

1. The article falsely claims polio vaccines paralyzed 496,000 children in India. Politifact found that there "is no evidence that 496,000 children were paralyzed due to a polio vaccine." Complications from the oral polio vaccine are extremely rare.

2. The article falsely claims Bill Gates wants to reduce the world population through vaccinations and has integrated a "sterility formula" into vaccines. Snopes debunked this long-running conspiracy theory.

3. The article falsely asserts that the Diphtheria/Tetanus/Pertussis (DTP) vaccine kills more children in Africa than the diseases it protects against. The WHO found the DTP vaccine has reduced mortality in vaccinated children, and there is no evidence of any negative effect.

The misinformation is used to urge Americans to reject a potential COVID-19 vaccine, which is the best hope the world has for curbing the deadly pandemic. "Gates appears confident that the Covid-19 crisis will now give him the opportunity to force his dictatorial vaccine programs on all American children – and adults," Kennedy concludes.

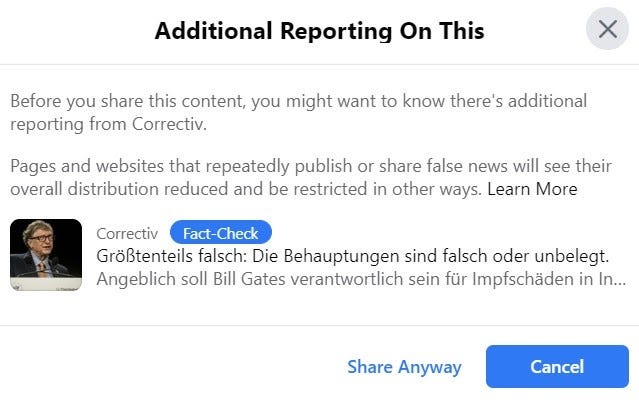

Despite these dangerously false claims, the article has generated 3.7 million views on Facebook. Nearly a month after publication, the article was fact-checked by Correctiv, a Facebook fact-checking partner in Germany. So if you try to share the post today, Facebook generates the following message.

But Kennedy's false article was republished, quoted from, or linked by 29 other health misinformation websites in the ten weeks after publication. Those articles "reached over 4.7 million views in six different languages." Critically, "websites that reposted the article or shared parts of it were able to avoid Facebook’s fact-checking process."

Facebook's biggest health misinformation super-spreader

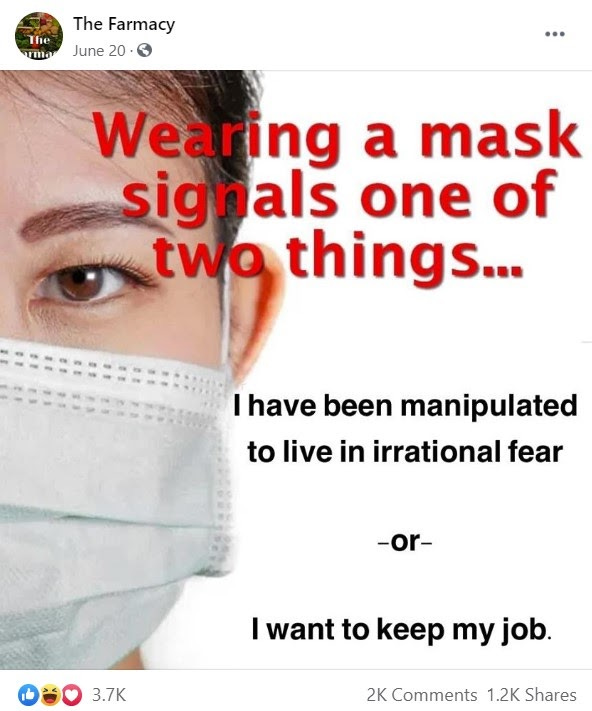

RealFarmacy is a website that "shares conspiracy theories and false claims regarding the COVID-19 pandemic." It also operates three large Facebook pages that collectively have 3.5 million followers. The pages feature memes like this, which mock efforts to control the spread of coronavirus with scientifically proven methods.

According to Avaaz, the RealFarmacy website has generated 581 million views over the last year on Facebook. The website "was registered in 2013 through a Panama-based privacy service." None of its associated Facebook pages have verified owners.

The limits of fact-checking

Facebook's fact-checking program for English-language content does not match the scale of its platform. Even so, it is more extensive than the company's fact-checking of content written in other languages. Some of the biggest spreaders of health misinformation are taking advantage of that gap.

Dr. Mercola, whose eponymous website "has repeatedly promoted false or unsubstantiated claims" on the coronavirus and vaccines, stopped posting on his English-language Facebook page. He wrote a lengthy article on his website saying he was leaving the platform due to privacy concerns. But his verified Spanish-language Facebook page, "Dr. Joseph Mercola en Español," which has 1 million followers, remains active. According to Avaaz, Dr. Joseph Mercola en Español is the largest spreader of health misinformation on Facebook, generating about 102 million views over the last year.

The last bastion

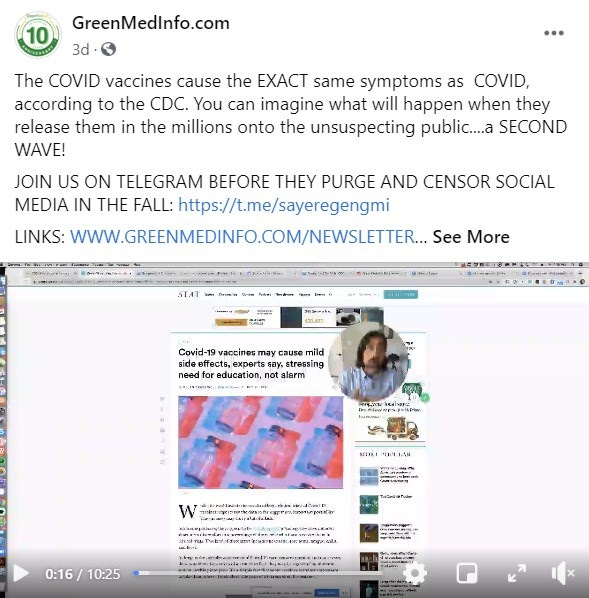

GreenMedInfo is a health disinformation website that has been banned from other social networks, including Pinterest. Mailchimp has also reportedly refused to let its service be used to send GreenMedInfo's newsletter. But GreenMedInfo is allowed to operate on Facebook, where it has generated 39 million views over the last year. A recent video claims that "COVID vaccines cause the EXACT same symptoms as COVID, according to the CDC." This is obviously not true, since no COVID vaccines have been approved in the United States.

A recent post falsely claims there is no pandemic at all and that deaths in the United States are actually lower than normal in 2020. When you click through to the link, the author says the article was removed because "astute researchers who read my paper have now directed me to data and pages on the CDC website that I had not previously seen."

Too big not to fail

The alarming part of the Avaaz report is that health misinformation has remained pervasive despite Facebook's efforts. The company has taken more aggressive measures to combat COVID-19 disinformation than disinformation on any other topic.

But it is a matter of scale. Facebook has billions of people posting content daily, some of whom are purposely trying to mislead others for financial or ideological reasons. Avaaz's report shows that throwing a few dozen fact-checkers and some artificial intelligence at the problem doesn't solve it. Dealing with misinformation on Facebook would take a fundamental reimagining of the platform and how it works. That's not a step that anyone at Facebook appears willing to consider.