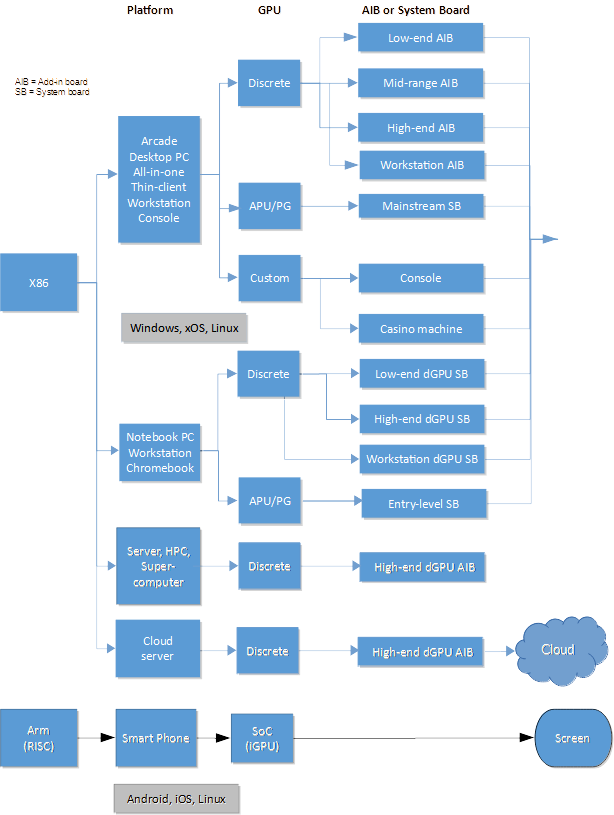

Tedium - No Shortage of GPUs Here 🖥

|

|

|

|

|

|

|

|

Older messages

Novell Cooperation 💾

Wednesday, March 24, 2021

The company that nearly brought MacOS to the PC in the '90s. Here's a version for your browser. Hunting for the end of the long tail • March 24, 2021 Today in Tedium: “Whatever is good for our

Newsletter, Untethered ✍️

Friday, March 19, 2021

You don't need Substack to send a great newsletter. Here's a version for your browser. Hunting for the end of the long tail • March 19, 2021 Today in Tedium: Recently, I got an opportunity to

Tangential Juice Innovation 🧃

Wednesday, March 17, 2021

The long, innovative road to the juice box. Here's a version for your browser. Hunting for the end of the long tail • March 17, 2021 Hey all, Ernie here with a piece on juice boxes. This past week

Caddy Confusion 💽

Saturday, March 13, 2021

Why did early CD-ROM drives need caddies? Here's a version for your browser. Hunting for the end of the long tail • March 12, 2021 Today in Tedium: The first time I ever saw a CD-ROM, I had never

Thunderbolt Road ⚡️

Wednesday, March 3, 2021

Why Thunderbolt isn't quite as cool as it could be. Here's a version for your browser. Hunting for the end of the long tail • March 03, 2021 Today in Tedium: Two decades ago, the fastest data

You Might Also Like

Youre Overthinking It

Wednesday, January 15, 2025

Top Tech Content sent at Noon! Boost Your Article on HackerNoon for $159.99! Read this email in your browser How are you, @newsletterest1? 🪐 What's happening in tech today, January 15, 2025? The

eBook: Software Supply Chain Security for Dummies

Wednesday, January 15, 2025

Free access to this go-to-guide for invaluable insights and practical advice to secure your software supply chain. The Hacker News Software Supply Chain Security for Dummies There is no longer doubt

The 5 biggest AI prompting mistakes

Wednesday, January 15, 2025

✨ Better Pixel photos; How to quit Meta; The next TikTok? -- ZDNET ZDNET Tech Today - US January 15, 2025 ai-prompting-mistakes The five biggest mistakes people make when prompting an AI Ready to

An interactive tour of Go 1.24

Wednesday, January 15, 2025

Plus generating random art, sending emails, and a variety of gopher images you can use. | #538 — January 15, 2025 Unsub | Web Version Together with Posthog Go Weekly An Interactive Tour of Go 1.24 — A

Spyglass Dispatch: Bromo Sapiens

Wednesday, January 15, 2025

Masculine Startups • The Fall of Xbox • Meta's Misinformation Off Switch • TikTok's Switch Off The Spyglass Dispatch is a newsletter sent on weekdays featuring links and commentary on timely

The $1.9M client

Wednesday, January 15, 2025

Money matters, but this invisible currency matters more. ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

⚙️ Federal data centers

Wednesday, January 15, 2025

Plus: Britain's AI roadmap

Post from Syncfusion Blogs on 01/15/2025

Wednesday, January 15, 2025

New blogs from Syncfusion Introducing the New .NET MAUI Bottom Sheet Control By Naveenkumar Sanjeevirayan This blog explains the features of the Bottom Sheet control introduced in the Syncfusion .NET

The Sequence Engineering #469: Llama.cpp is The Framework for High Performce LLM Inference

Wednesday, January 15, 2025

One of the most popular inference framework for LLM apps that care about performance. ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

3 Actively Exploited Zero-Day Flaws Patched in Microsoft's Latest Security Update

Wednesday, January 15, 2025

THN Daily Updates Newsletter cover The Kubernetes Book: Navigate the world of Kubernetes with expertise , Second Edition ($39.99 Value) FREE for a Limited Time Containers transformed how we package and