TheSequence - 📝 Guest post: The Rise of Shadow AI*

In TheSequence Guest Post our partners explain in detail what ML and AI challenges they help deal with. In this article, Fara Hain from Run:ai discusses the problem of ‘shadow AI’ – when siloed teams each buy their own infrastructure or use cloud infrastructure for AI (along with their own MLOps and data science tools), creating inefficiency and complexity for the teams managing this infrastructure.

How Does Shadow AI Begin?

Like 'shadow IT' in the 2010s, when cloud computing made it easy for rogue teams to purchase and manage their own infrastructure, IT is once again contending with its shadow. This time, the rise of one-off AI initiatives inside organizations is creating shadow AI.

It begins with good intentions, as a way to quickly benefit from AI. Teams rarely have any sinister reasons to purchase GPU servers (or use cloud resources) that fall outside the purview of IT. They simply intend to complete test projects or train models and begin building their experimentation platform in the simplest way possible.

Unfortunately, these initiatives rapidly become siloed. In addition to being a challenge for IT and InfoSec teams to manage, a decentralized approach results in many AI initiatives never making it to production. Teams using AI infrastructure in this siloed way may find that they can succeed for a short time with a few data scientists building a few models, but over time the organization needs to rein in the many small AI projects to build efficiency and shift focus to production-ready AI.

Why Should Organizations Avoid a Siloed Approach to AI?

In Q3 2021 Run:ai completed a survey of over 200 people who have some degree of responsibility for their company’s GPU infrastructure. One of the findings helps answer the question. Almost two-thirds (63%) of surveyed companies have research teams of 10 or more. These companies are growing their data science functions across departments. It’s amazing to see that 30% of those surveyed have more than 75 researchers in data science teams.

But the problem became clear when we asked them: What does access to GPUs look like in your organization? Is it easy to get access when you need it? 35% replied simply, “No.” Another 38% replied that it was only ‘Sometimes easy to access resources’.

A few GPUs may be enough when teams are building or training small models, but scaling AI research or moving models to production requires access to larger quantities of GPUs. A siloed approach quickly adds complexity and management challenges for the teams responsible for AI. These researchers sometimes need vast quantities of compute to achieve their goals, and if each team is responsible for its own infrastructure, the resources may not be in the hands of the right teams.

In addition, GPUs are often sitting idle. I love GPU unboxing videos as much as the next person, but a workstation sitting under a researcher’s desk is essentially an expensive piece of furniture. 75% of the time, that server will be sitting idle.

Why do GPUs sit idle?

According to this recent article written by one of my colleagues, there are many reasons, from the mundane (‘researcher who holds access going to get lunch or taking a day off’) to more complex reasons based on how AI models work. According to the article,

There are obvious and sometimes inherent limitations of AI modeling that keep GPUs idle.

How to avoid Shadow AI?

A siloed approach to AI in an organization will ultimately frustrate researchers and slow down production-ready modeling. It can be avoided in the following ways:

The first step to centralized AI is to pool all available CPU, memory, and GPU resources and give data scientists seamless access to those resources. Nodes added to the cluster should also be instantly available to research teams. Ideally, IT will also make on-demand bursting to the cloud part of its pooled setup.
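To make the difference between siloed and pooled access concrete, here is a minimal sketch in Python. It is a toy model, not Run:ai's implementation: the `GpuCluster` class, the team names, and the GPU counts are all hypothetical. In siloed mode a team can only use the GPUs it bought; in pooled mode a request succeeds whenever enough GPUs are idle anywhere in the cluster.

```python
from dataclasses import dataclass, field


@dataclass
class GpuCluster:
    """Toy model of GPU access (hypothetical, for illustration only)."""
    owned: dict[str, int]            # GPUs purchased by each team
    pooled: bool = False             # False = siloed, True = shared pool
    in_use: dict[str, int] = field(default_factory=dict)

    @property
    def total(self) -> int:
        return sum(self.owned.values())

    def idle(self) -> int:
        return self.total - sum(self.in_use.values())

    def request(self, team: str, gpus: int) -> bool:
        """Try to allocate `gpus` to `team`; return True on success."""
        if gpus <= 0:
            return False
        used = self.in_use.get(team, 0)
        if self.pooled:
            # Pooled: any idle GPU in the cluster can serve the request.
            allowed = gpus <= self.idle()
        else:
            # Siloed: a team is capped at the GPUs it owns.
            allowed = used + gpus <= self.owned.get(team, 0)
        if allowed:
            self.in_use[team] = used + gpus
        return allowed

    def utilization(self) -> float:
        """Fraction of all GPUs currently allocated."""
        return sum(self.in_use.values()) / self.total if self.total else 0.0


# Hypothetical ownership: three teams, 16 GPUs in total.
owned = {"vision": 4, "nlp": 4, "speech": 8}
siloed = GpuCluster(owned)
shared = GpuCluster(owned, pooled=True)

# The vision team needs 12 GPUs for a large training run.
siloed_ok = siloed.request("vision", 12)   # refused: vision owns only 4
shared_ok = shared.request("vision", 12)   # granted from idle GPUs in the pool
print(siloed_ok, siloed.utilization())
print(shared_ok, shared.utilization())
```

The sketch deliberately leaves out priorities, quotas, and preemption, so it only shows why pooling lets a large job run on hardware that would otherwise sit idle.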

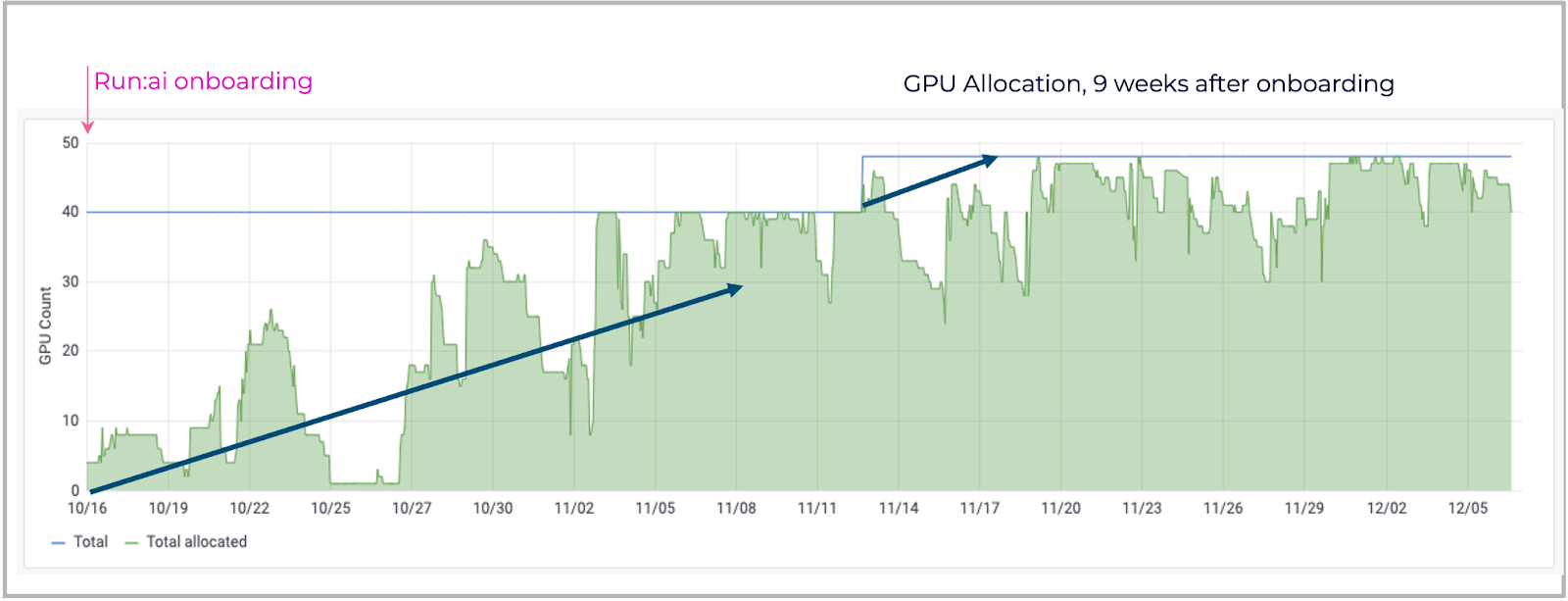

Once a pool of GPUs is available to all teams and users, automation can be set up. Pre-defining policies across projects, users and/or departments helps align resource consumption to business priorities. For one Run:ai customer, that meant shifting access to greater quantities of GPUs to the team doing COVID research. Other teams had priority prior to the pandemic, but the research institution was able to automatically shift access to the researchers who needed large quantities of compute at that time.

For many Run:ai customers, shadow AI is already in the past. The graph below shows how one company’s ability to access pools of GPUs on-demand has greatly increased utilization. This is directly correlated to their ability to do faster modeling.

With GPUs managed by different teams, the customer was initially getting less than 25% utilization of their expensive on-premises compute resources. In the first few days after Run:ai’s Atlas Platform was installed, you can see on the graph that automation enabled researchers to access not only their own GPUs but also any idle GPUs in the pooled environment. That was an eye-opening event for our clients. Within two weeks utilization regularly hit 100%. This enabled IT to see that they did actually need to purchase additional resources. New servers were installed in mid-November, and quite quickly researchers across teams and departments were able to access those GPUs as well.

Conclusion

Shadow IT is mostly a thing of the past, and shadow AI should be as well. By pooling researchers’ compute resources and automating access to idle GPUs, we imagine shadow AI will be short-lived.

*This post was written by Fara Hain, Run:ai. We thank Run:ai for their ongoing support of TheSequence.
