📝 Guest post: Beating the Challenges of AI Inference Workloads in the Cloud*

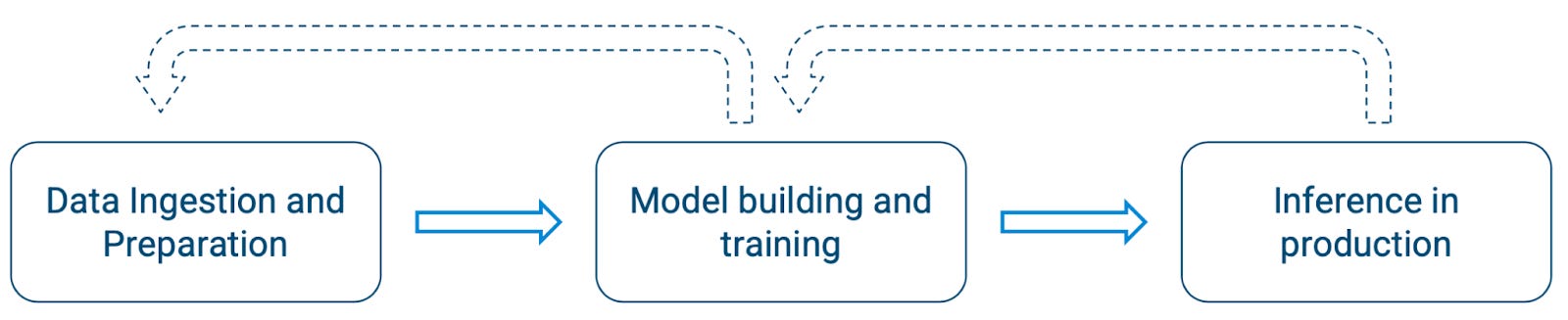

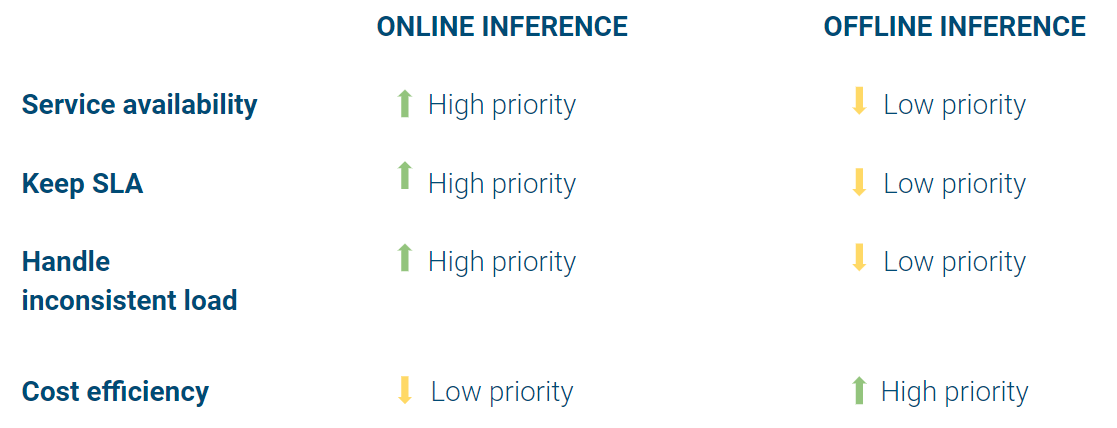

Was this email forwarded to you? Sign up here 📝 Guest post: Beating the Challenges of AI Inference Workloads in the Cloud*No subscription is neededIn TheSequence Guest Post our partners explain in detail what ML and AI challenges they help deal with. This article is a condensed excerpt of the guide, “Managing Inference Workloads in the Cloud” from Run:ai, where you can access it for free in its entirety. Beating the Challenges of AI Inference Workloads in the CloudEnterprise AI teams have made great strides in the last several years against the challenges of training massive, distributed computational models. Now, enterprises and the AI ecosystem in general have shifted focus to productizing AI, and because of that, they’re focusing heavily on inference workloads and on this stage of the AI lifecycle. Much of the AI focus these days is on training and modeling, as that’s where the ‘meat’ of the engineering work takes place. In this article however, we’ll look at which challenges we need to overcome during the inference stage, where AI models run in production. While the first two phases (data engineering and model building/training) are critically important, it’s the inference workloads in production that drive revenue and user traffic. Organizations are looking for ways to operationalize inference workloads in the cloud, but cloud-based inference workloads have their own unique set of challenges to overcome. We know that on-premise GPU clusters, where it still makes sense to do the bulk of building and training models, have their hurdles of effective resource allocation and ensuring high utilization of those resources. In contrast, using cloud GPU clusters for the inference stage of AI requires optimization of both the developer and user experience, and reduction of costs and complexity across multi-cloud. Costs Associated with Online vs Offline InferenceThere are two distinct operating modes for models in production: offline, which processes a big batch of data at once and stores the results for later use, and online, which is time-sensitive and on-demand, based on an actual user waiting for the result in real-time. Offline inference (sometimes called asynchronous or static inference), is great for use cases where a user is not waiting for the results in real-time, and it can process and serve requests much faster compared to online inference. Online inference (synchronous or dynamic inference) is great for use cases where a user is actively waiting for the result, like a translation, response from a chatbot or a car responding to a pedestrian at the crosswalk in front of it. The real-time, synchronous nature of online inference puts more demand on the (cloud) resources it runs on and drives up costs accordingly, especially as these types of workloads tend to run as close to their users as possible to reduce latency and response times, which means they are a natural fit to run in cloud and edge environments. When we compare the priorities of online vs offline inference (below), we see that applications of real-time, online inference have critical concerns related to the experience of the end user: Service availability, the ability to handle inconsistent loads, and keeping external SLAs. It’s fair to say that online inference is an inference production service, whereas offline inference is an inference production process. Doing online inference right requires dealing with inherent complexity across multiple dimensions of running inference workloads in production, but trying to maintain performance cost-effectively at scale turns out to be the single biggest challenge. Simply put: Sure, it's complex to scale online inference workloads, but it’s even more difficult to do so cost-effectively. Online Inference at Scale Stumps Many OrgsWhile GPUs offer distinct performance and cost advantages, some organizations fail to manage them effectively and fall back to running their inference workloads on CPUs. Take for instance the balance of cost versus performance of running inference on CPUs vs GPUs. While GPUs are usually much faster than CPUs (even for inference workloads), GPUs require effective resource management, without which organizations won’t see the cost-efficiency that makes GPUs the clear choice. While running inference workloads on CPU is perfectly fine, it leaves performance and cost optimizations on the table, especially at scale. The parallelism offered by GPU architecture is a key differentiator for running inference at scale, allowing the workload to be broken down into many separate tasks that run in parallel, offering better overall latency and performance than CPU. When we break it down into the key dimensions of scaling inference workloads cost-effectively without impacting performance, they are as follows:

Inference workloads are dynamic in nature, meaning that containers are being spun up and down quickly and often due to auto-scaling and deployments of new versions, but often they’re deployed across multiple environments like on-prem, cloud and edge. As usage patterns fluctuate during the day or across other ebb and flow patterns, balancing performance, reliability and cost require more than just letting the application auto-scale. SREs and DevOps Engineers should look for solutions that help them dynamically assign and reschedule workloads on CPU or GPU to optimize the fleet of workloads.

End users won’t wait 15 seconds for real-time translation or a minute for their self-driving car to avoid a crossing pedestrian. Unfortunately, it’s not a matter of throwing unlimited resources at the problem to guarantee low latency and fast response times. The answer is almost never to have a bunch of spare GPU and compute capacity ready to serve the peak load. Online inference needs a scheduler that uses priorities, quotas and limits to make decisions on allocation to container workloads as needed.

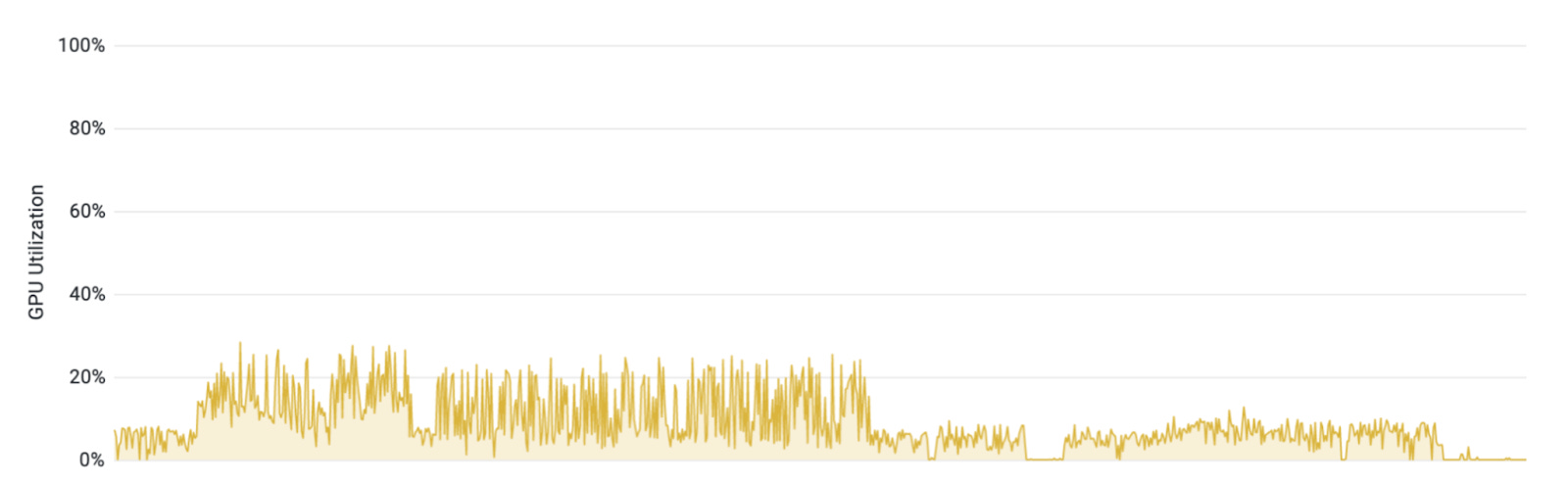

How do we squeeze every last bit of performance out of our expensive resources? Specifically, how do we maximize GPU utilization? In GPU terms, this means sharing physical GPU resources with multiple container workloads, so that the usage of the GPU, and as a result its cost-effectiveness, increases without slowing down each individual workload. By partitioning a single GPU into many smaller, independent partitions (each with their own memory and computing space), applications can be assigned an isolated fraction of the physical resources. This allows multiple workloads to run in parallel without a (significant) performance impact.

Retraining and releasing to production quickly, often and easily are key differentiators in the marketplace. Kubernetes is a key technological enabler to do this, allowing engineers to deploy re-trained and updated models to production continuously, in a self-service and on-demand process without involving SREs, release engineers or IT Ops. Additionally, Kubernetes is well-suited to do more advanced deployment types, like canary deployments to check the viability of a new release in production, or A/B testing to see which model performs better. Final WordBest practices on how to do online inference, using GPU resources, in the cloud, at scale, are few and far between, making it frustrating for AI teams facing these issues for the first time. For a full discussion of these four dimensions of scaling inference workloads and evaluation of the solutions available in the market today, access the free guide to “Managing Inference Workloads in the Cloud.” It delves into inference workload sprawl, how to stay in control and maintain visibility, fleet and lifecycle management at scale, multi-cloud deployments, and ways to optimize cloud resource usage with autoscaling, GPU fractions, and descheduling to CPU to meet SLAs while keeping cost under control. *This post was written by Run:ai team. We thank Run:ai for their ongoing support of TheSequence. |

Older messages

📜 Edge#174: How DeepMind Uses Transformer Models to Help Restore Ancient Inscriptions

Thursday, March 17, 2022

Ithaca is a transformer based architecture used to help historians restore ancient Greek text

🎙Jeff Hawkins, author of A Thousand Brains, about the path to AGI

Wednesday, March 16, 2022

Our brains learn models of everything we interact with. How can we use it to create AGI?

➗ Edge#173: Exploring Conditional GANs

Tuesday, March 15, 2022

+cGANs to generate images from concepts and GAN Lab

🔦 PyTorch’s New Release

Sunday, March 13, 2022

Weekly news digest curated by the industry insiders

📌 Time is running out to register for Arize:Observe

Friday, March 11, 2022

Just-added speakers include Opendoor, Spotify, Uber & more!

You Might Also Like

Import AI 399: 1,000 samples to make a reasoning model; DeepSeek proliferation; Apple's self-driving car simulator

Friday, February 14, 2025

What came before the golem? ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

Defining Your Paranoia Level: Navigating Change Without the Overkill

Friday, February 14, 2025

We've all been there: trying to learn something new, only to find our old habits holding us back. We discussed today how our gut feelings about solving problems can sometimes be our own worst enemy

5 ways AI can help with taxes 🪄

Friday, February 14, 2025

Remotely control an iPhone; 💸 50+ early Presidents' Day deals -- ZDNET ZDNET Tech Today - US February 10, 2025 5 ways AI can help you with your taxes (and what not to use it for) 5 ways AI can help

Recurring Automations + Secret Updates

Friday, February 14, 2025

Smarter automations, better templates, and hidden updates to explore 👀 ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

The First Provable AI-Proof Game: Introducing Butterfly Wings 4

Friday, February 14, 2025

Top Tech Content sent at Noon! Boost Your Article on HackerNoon for $159.99! Read this email in your browser How are you, @newsletterest1? undefined The Market Today #01 Instagram (Meta) 714.52 -0.32%

GCP Newsletter #437

Friday, February 14, 2025

Welcome to issue #437 February 10th, 2025 News BigQuery Cloud Marketplace Official Blog Partners BigQuery datasets now available on Google Cloud Marketplace - Google Cloud Marketplace now offers

Charted | The 1%'s Share of U.S. Wealth Over Time (1989-2024) 💰

Friday, February 14, 2025

Discover how the share of US wealth held by the top 1% has evolved from 1989 to 2024 in this infographic. View Online | Subscribe | Download Our App Download our app to see thousands of new charts from

The Great Social Media Diaspora & Tapestry is here

Friday, February 14, 2025

Apple introduces new app called 'Apple Invites', The Iconfactory launches Tapestry, beyond the traditional portfolio, and more in this week's issue of Creativerly. Creativerly The Great

Daily Coding Problem: Problem #1689 [Medium]

Friday, February 14, 2025

Daily Coding Problem Good morning! Here's your coding interview problem for today. This problem was asked by Google. Given a linked list, sort it in O(n log n) time and constant space. For example,

📧 Stop Conflating CQRS and MediatR

Friday, February 14, 2025

Stop Conflating CQRS and MediatR Read on: my website / Read time: 4 minutes The .NET Weekly is brought to you by: Step right up to the Generative AI Use Cases Repository! See how MongoDB powers your