📝 Guest post: It's Time to Use Semi-Supervised Learning for Your CV models*

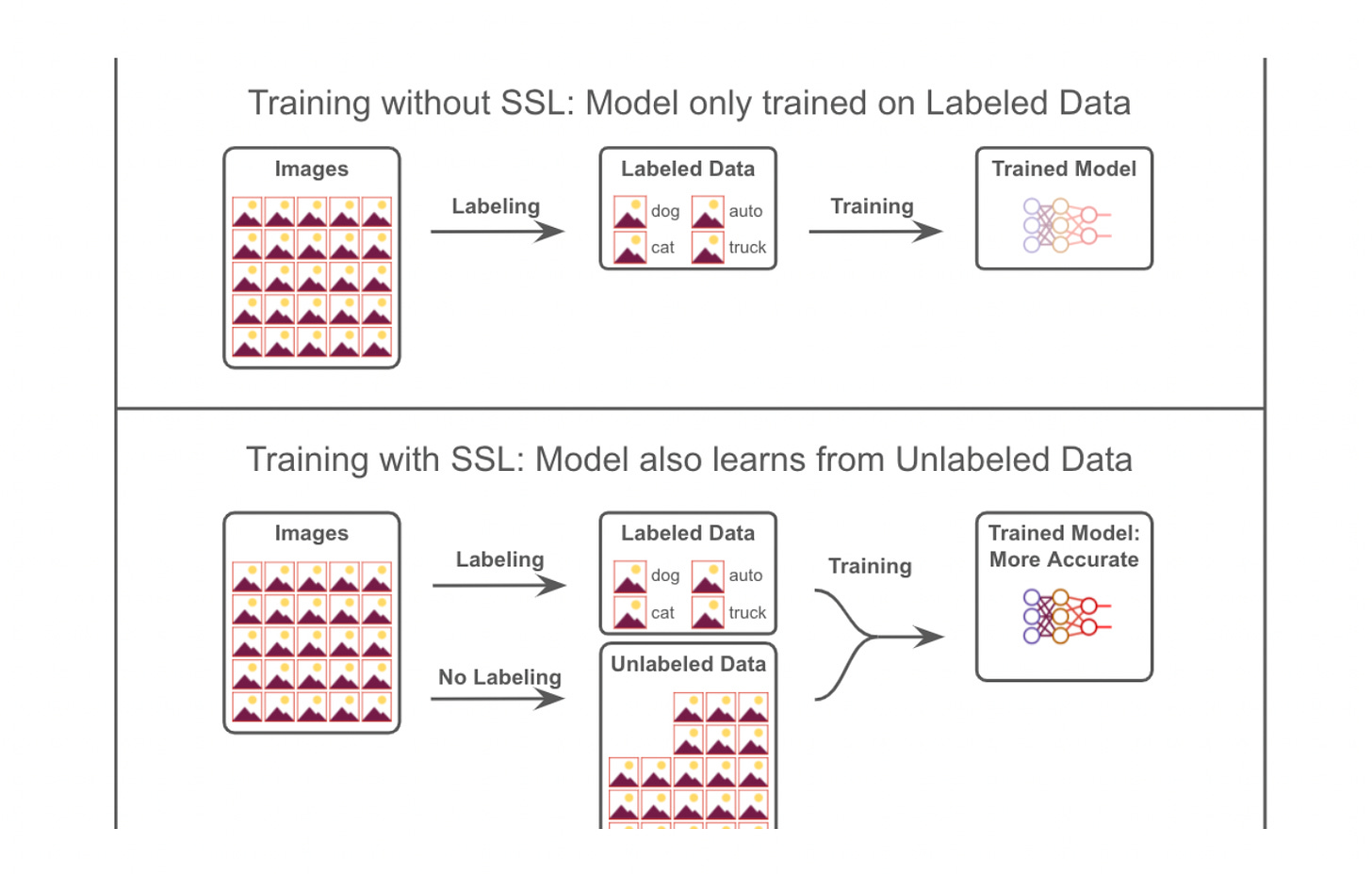

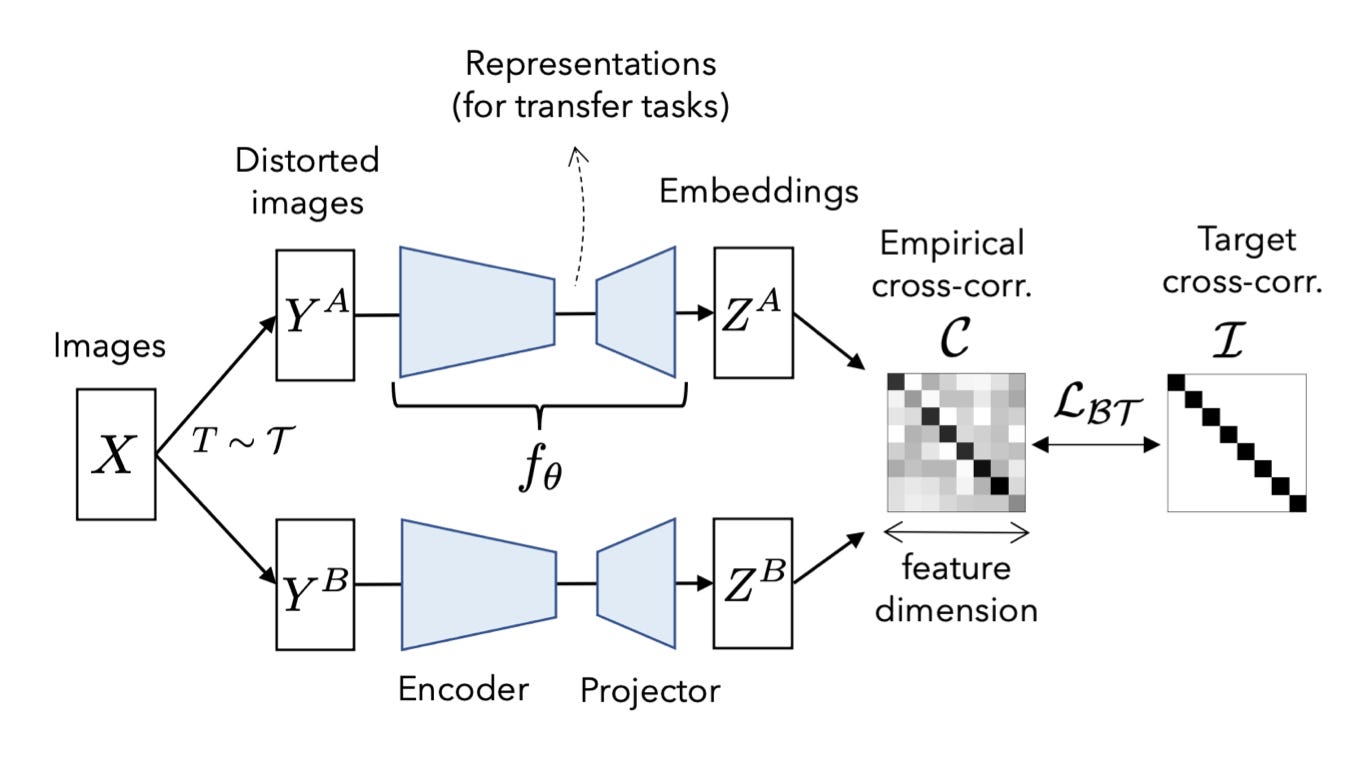

Was this email forwarded to you? Sign up here In this article, Masterful AI’s team suggests that instead of throwing more training data at a deep learning model, one should consider semi-supervised learning (SSL) to unlock the information in unlabeled data. IntroPreviously, we showed that throwing more training data at a deep learning model has rapidly diminishing returns. If doubling your labeling budget won’t move the needle, what to do next? Try SSLSemi-supervised learning (SSL) means learning from both labeled and unlabeled data. First, make sure you are getting the most out of your labeled data. Try a bigger model architecture and tune your regularization hyperparameters. (plug alert: executing on these two steps is hard, and the Masterful platform can help you). Once you have a big enough model architecture and optimal regularization hyperparameters, the limiting factor is now information. An even bigger, more regularized model won’t deliver better results until you train with more information. No more labeling budget but need more information? But wait – it seems like we are stuck between a rock and a hard place. There’s no more labeling budget and yet the model needs information. How do we resolve this? The key insight: labeling is not your only source of information... unlabeled data also has information! Semi-supervised learning is the key to unlocking the information in unlabeled data. SSL is great because there is usually a lot more unlabeled data than labeled, especially once you deploy into production. Avoiding labeling also means avoiding the time, cost, and effort of labeling. Is SSL Good Enough?SSL has been an academic topic for decades. But until about 18 months ago, it did not outperform traditional techniques for CV on standard benchmarks. All that changed with a series of papers published in 2020 and 2021, including Unsupervised Data Augmentation, Noisy Student Training, SimCLR, and Barlow Twins. Today, SSL techniques are responsible for training the most accurate convolutional neural networks (convnets). And transformers are also primarily trained using SSL techniques, in case someday transformers replace convnets as the workhorse CV architecture. How SSL WorksAn Algorithmic View There are a lot of SSL algorithms, but most of the recent approaches loosely share these attributes:

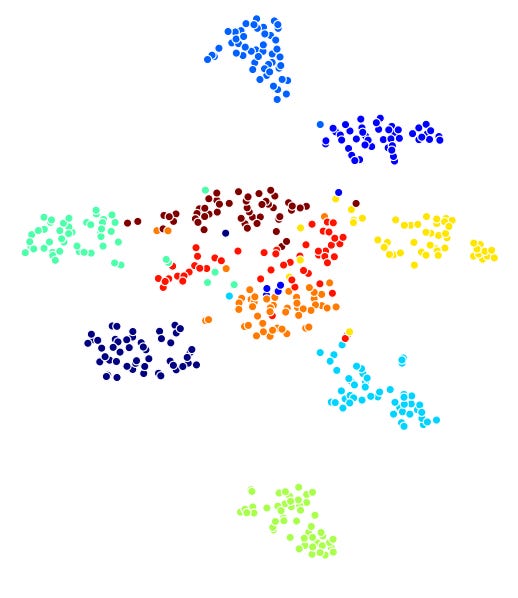

The special problem can be making the pair of outputs consistent with each other. Or using the pair of outputs to solve a pretextual problem, like contrasting between pairs of images, that either do come from the same source image or don't. Sometimes the final output of the model is used, and sometimes a feature embedding. Some algorithms place additional layers between the features and the loss function, while others feed the outputs to the loss function directly. And different techniques work better for low-shot data vs high cardinalities. Most techniques require two training phases, and sometimes the weights of the two models are shared while in other approaches, one model slowly receives weights from the other. Here are a few great walkthroughs by Spyros Gidaris of Valeo.ai and Thang Luong of Google. An Intuitive View An intuitive view of how these algorithms work focuses on clustering the feature embeddings. In concrete terms, the feature embedding is often the output of the penultimate layer of a convnet before the final linear/dense/logistic layer. For algorithms that directly train consistency, differently noised views of the same image must generate similar embeddings. If the feature embeddings are clustered together in the high-dimensional feature embedding space, the feature extractor has learned a useful representation of the data. If the noising function is able to move one image into the feature embedding space of another image, then it's also true that two different images now generate similar feature embeddings. This suggests that the noising function's goal isn't to be confusing, but rather, to transform a single image enough to collide with the feature embedding of other images in the same class, but not so far as to push it to collide with the feature embeddings of images from different classes. Indeed, when projecting the feature embeddings of one SSL algorithm, we see well-clustered feature embeddings. One place to start: research reposIf you want to try these approaches, VISSL from Meta AI and Tensorflow Similarity are two solid repos to start with for PyTorch and Tensorflow respectively. We've worked with both and they are awesome! But like any research repo, they are focused on experimentation, not production. You really have to understand the papers behind them to understand the code, they may not be robust on production datasets, and many hyperparameters will require manual guessing and checking. If you are looking for a productized implementation, consider Masterful. Three ways to access SSL via the Masterful platformThe Masterful platform for training CV models offers three ways to train with SSL.

Good luck on your journey with SSL! Join Masterful AI slack anytime you want to talk SSL! And to try Masterful, just run pip install masterful to install our product and try it out. *This post was written by Yaoshiang Ho, co-founder and head of product at Masterful AI, and originally posted here. We thank Masterful AI for their ongoing support of TheSequence.You’re on the free list for TheSequence Scope and TheSequence Chat. For the full experience, become a paying subscriber to TheSequence Edge. Trusted by thousands of subscribers from the leading AI labs and universities. |

Older messages

🔬 Edge#190: Continuous Model Observability With Superwise

Thursday, May 12, 2022

Introducing to you the platforms that deal with the ML challenges

🚰 Edge#189: What is Pipeline Parallelism?

Tuesday, May 10, 2022

In this issue: we discuss pipeline parallelism; we explore PipeDream, an important Microsoft Research initiative to scale deep learning architectures; we overview BigDL, Intel's open-source library

👄 A New Open Source Massive Language Model

Sunday, May 8, 2022

Weekly news digest curated by the industry insiders

📝 Guest post: Active Learning 101: A Complete Guide to Higher Quality Data* (part 2)

Friday, May 6, 2022

In this article, Superb AI's team explains the benefits of building an active learning flow for your computer vision project

🧙🏻♂️ Edge#188: Inside Merlin, the Platform Powering Machine Learning at Shopify

Thursday, May 5, 2022

The eCommerce giant published some details about the platform powering its ML workflows

You Might Also Like

Import AI 399: 1,000 samples to make a reasoning model; DeepSeek proliferation; Apple's self-driving car simulator

Friday, February 14, 2025

What came before the golem? ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

Defining Your Paranoia Level: Navigating Change Without the Overkill

Friday, February 14, 2025

We've all been there: trying to learn something new, only to find our old habits holding us back. We discussed today how our gut feelings about solving problems can sometimes be our own worst enemy

5 ways AI can help with taxes 🪄

Friday, February 14, 2025

Remotely control an iPhone; 💸 50+ early Presidents' Day deals -- ZDNET ZDNET Tech Today - US February 10, 2025 5 ways AI can help you with your taxes (and what not to use it for) 5 ways AI can help

Recurring Automations + Secret Updates

Friday, February 14, 2025

Smarter automations, better templates, and hidden updates to explore 👀 ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

The First Provable AI-Proof Game: Introducing Butterfly Wings 4

Friday, February 14, 2025

Top Tech Content sent at Noon! Boost Your Article on HackerNoon for $159.99! Read this email in your browser How are you, @newsletterest1? undefined The Market Today #01 Instagram (Meta) 714.52 -0.32%

GCP Newsletter #437

Friday, February 14, 2025

Welcome to issue #437 February 10th, 2025 News BigQuery Cloud Marketplace Official Blog Partners BigQuery datasets now available on Google Cloud Marketplace - Google Cloud Marketplace now offers

Charted | The 1%'s Share of U.S. Wealth Over Time (1989-2024) 💰

Friday, February 14, 2025

Discover how the share of US wealth held by the top 1% has evolved from 1989 to 2024 in this infographic. View Online | Subscribe | Download Our App Download our app to see thousands of new charts from

The Great Social Media Diaspora & Tapestry is here

Friday, February 14, 2025

Apple introduces new app called 'Apple Invites', The Iconfactory launches Tapestry, beyond the traditional portfolio, and more in this week's issue of Creativerly. Creativerly The Great

Daily Coding Problem: Problem #1689 [Medium]

Friday, February 14, 2025

Daily Coding Problem Good morning! Here's your coding interview problem for today. This problem was asked by Google. Given a linked list, sort it in O(n log n) time and constant space. For example,

📧 Stop Conflating CQRS and MediatR

Friday, February 14, 2025

Stop Conflating CQRS and MediatR Read on: my website / Read time: 4 minutes The .NET Weekly is brought to you by: Step right up to the Generative AI Use Cases Repository! See how MongoDB powers your