CHAI, Assistance Games, And Fully-Updated Deference

I.

This Machine Alignment Monday post will focus on this imposing-looking article (source), Problem Of Fully-Updated Deference. It is a response by MIRI (ie Eliezer Yudkowsky’s organization) to CHAI (Stuart Russell’s AI alignment organization at University of California, Berkeley), trying to convince them that their preferred AI safety agenda won’t work. I beat my head against this for a really long time trying to understand it, and in the end, I claim it all comes down to this:

Fine, it’s a little more complicated than this. Let’s back up.

II.

There are two ways to succeed at AI alignment. First, make an AI that’s so good you never want to stop or redirect it. Second, make an AI that you can stop and redirect if it goes wrong.

Sovereign AI is the first way. Does a sovereign “obey commands”? Maybe, but only in the sense that your commands give it some information about what you want, and it wants to do what you want. You could also just ask it nicely. If it’s superintelligent, it will already have a good idea what you want and how to help you get it.

Would it submit to your attempts to destroy or reprogram it? The second-best answer is “only if the best version of you genuinely wanted to do this, in which case it would destroy/reprogram itself before you asked”. The best answer is “why would you want to destroy/reprogram one of these?”

A sovereign AI would be pretty great, but nobody realistically expects to get something like this their first (or 1000th) try.

Corrigible AI is what’s left (corrigible is an old word related to “correctable”). The programmers admit they’re not going to get everything perfect the first time around, so they make the AI humble. If it decides the best thing to do is to tile the universe with paperclips, it asks “Hey, seems to me I should tile the universe with paperclips, is that really what you humans want?” and when everyone starts screaming, it realizes it should change strategies. If humans try to destroy or reprogram it, then it will meekly submit to being destroyed or reprogrammed, accepting that it was probably flawed and the next attempt will be better. Then maybe after 10,000 tries you get it right and end up with a sovereign.

How would you make an AI corrigible? You can model an AI as having a utility function, a degree to which it aims for some world-states over others.
If you give it some specific utility function, the AI won’t be corrigible, since letting people change it would disrupt that function. That is, if you tell it “act in such a way as to cause as many paperclips to exist as possible”, and then you change your mind and decide you want staples, the AI won’t cooperate in letting you reprogram it: its current goal is maximizing paperclips, and allowing itself to be reprogrammed to maximize staples would cause there to be fewer paperclips than otherwise.

So instead, you make the AI uncertain of its utility function. Imagine saying “I’ve written down my utility function in an envelope, and placed that envelope in my safe deposit box, no you can’t see it - please live your life so as to maximize the thing in that envelope.” The AI tries its best to guess what’s in the envelope and decides it’s probably making paperclips. It makes some paperclips and you tell it “No, that’s not what’s on the envelope at all”. This successfully stops the AI! You can even tell it “the envelope actually says you should make staples”, and it will do that.

This is the “moral uncertainty” approach to AI alignment.

III.

All alignment groups have kabbalistically appropriate names. MIRI is Latin for "to be amazed". CFAR and CIFAR both sound like "see far". EEAI and AIAI are the sound you make as you get turned into paperclips. But my favorite is CHAI - Hebrew for "life".

CHAI - the Center for Human-Compatible AI (at UC Berkeley) - focuses on the proposal above. Their specific technical implementation is the “assistance game”, related to the earlier idea of Inverse Reinforcement Learning (IRL).

In normal reinforcement learning, an AI looks at some goals and tries to figure out what actions they imply. In inverse reinforcement learning, an AI looks at some actions, and tries to figure out what goals the actor must have had.
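
To make "look at some actions and figure out the goals" a bit more concrete, here is a minimal sketch in Python. It is not CHAI's method and not full IRL: it is plain Bayesian goal inference over two made-up candidate goals, assuming the human picks actions in a noisily rational (softmax) way. The goal names, actions, and scores in `ACTION_VALUES` are all hypothetical.

```python
import math

# Hypothetical scores: how well each action serves each candidate goal.
# These numbers are invented for illustration only.
ACTION_VALUES = {
    "wants_paperclips": {"buy_wire": 2.0, "buy_staple_gun": 0.0, "do_nothing": 0.5},
    "wants_staples": {"buy_wire": 0.5, "buy_staple_gun": 2.0, "do_nothing": 0.5},
}


def action_likelihood(goal, action, rationality=1.0):
    """P(action | goal), assuming a softmax ("noisily rational") human."""
    values = ACTION_VALUES[goal]
    normalizer = sum(math.exp(rationality * v) for v in values.values())
    return math.exp(rationality * values[action]) / normalizer


def infer_goal(observed_actions):
    """Posterior over candidate goals after watching a sequence of actions."""
    goals = list(ACTION_VALUES)
    # Start from a uniform prior: the AI does not know what is in the envelope.
    posterior = {goal: 1.0 / len(goals) for goal in goals}
    for action in observed_actions:
        # Bayes' rule: weight each goal by how well it explains the action.
        for goal in goals:
            posterior[goal] *= action_likelihood(goal, action)
        total = sum(posterior.values())
        posterior = {goal: p / total for goal, p in posterior.items()}
    return posterior


posterior = infer_goal(["buy_staple_gun", "buy_staple_gun", "do_nothing"])
print(max(posterior, key=posterior.get), posterior)
```

The point of the sketch is only the direction of inference: the actions are the data, and the goal is the unknown being estimated. Everything hard about the real problem is hidden in where `ACTION_VALUES` and the choice model come from.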
So you can tell an AI “your utility function is to maximize my utility function, and you can use this IRL thing to deduce, from my actions, what my utility function must be.” Instead of telling an AI to maximize a hidden utility function in an envelope, you tell it to maximize the hidden utility function in your brain.

This could be useful for near-term below-human-level AIs. Suppose a babysitting robot was pre-programmed to take kids to the park on Saturdays. But this week, the park is on fire. The human mother is barricading the door, desperately screaming at the robot not to take the kids to the park. The kids are struggling and trying to break free, saying they don't want to go to the park. The robot doesn't care; its programming says "take kids to the park on Saturdays" and that's what it's going to do. Nobody would ever design a babysitting robot this way in real life; you need something smarter.

So use an assistance game. Program the robot "Maximize the human mother’s utility function, which you don’t know yet but can potentially find out". The robot consults the mother's actions: she is barricading the door, screaming "Don't take the kids to the park!" It updates its goal function: previously, it had thought that the human mother wanted it to take the kids to the park. But now, it suspects that the human mother does not want that. So it doesn't take the kids to the park.

But CHAI understands the risk from superintelligence - their founder, Professor Stuart Russell, is a leading voice on the subject - and they hope assistance games and inverse reinforcement learning could work for this too. If you point a superintelligence at “do the thing humans want”, maybe it could figure that out and take things from there?

IV.

MIRI is skeptical of CHAI’s assistance games for two reasons. First, we don't know how to do them at all. Second, even if we could do them at all, we wouldn't know how to do them correctly. Start with the first.
Inverse reinforcement learning has been used in real life. A typical paper is "An Application of Reinforcement Learning to Aerobatic Helicopter Flight", where some people create a model of helicopter flight with a few free parameters, have a skilled human pilot fly the helicopter, and then have an AI use IRL to determine the value of the parameters and fly the helicopter itself.

This is cool, but it’s not especially related to the modern paradigm of AI. Modern AIs are trained by gradient descent. They start by flailing around randomly. Sometimes in this flailing, they might get closer to some prespecified target, like "win games of Go" or "predict how a string of text will continue". These actions get "rewarded", meaning that the AI should permanently shift its "thought processes"/"strategies" more towards ones that produced those good outcomes. Eventually, the AI's thought processes/strategies are very good at optimizing for that outcome.

This is more or less the only way we know how to train modern AIs. Depending on your loss function (ie what you reward), you can use it to create Go engines, language models, or art generators.

Where do you slot “do inverse reinforcement learning” or "give the AI moral uncertainty" into this process? There’s not really a natural place. This isn’t because “moral uncertainty” is too complicated a concept to translate into AI terms. It’s because we don’t know how to translate any concept into AI terms. Eliezer writes:

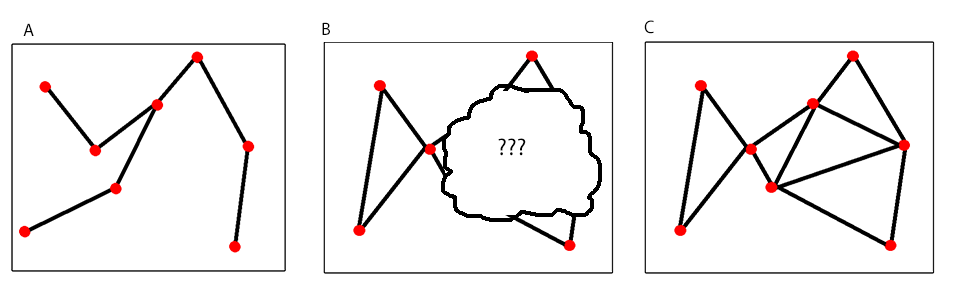

But suppose we solved the problem where we don’t know how to do IRL for modern AIs at all. Now we come to the second problem: we don’t know how to do it correctly.

The basic idea behind assistance games is “the AI’s utility function should be to maximize the (hidden) human utility function”. But humans don’t . . . really have utility functions? Utility functions are a useful fiction for certain kinds of economic models.

What would best increase the neural correlates of reward in my brain? Probably lots of heroin, or just passing electric current through my reward center directly. What is my “revealed preference”? Today I wrote and rewrote this article a few times, does that mean my revealed preference is to write and delete articles a bunch while frowning and occasionally cursing the keyboard? Sometimes my goals are different than other times, sometimes my best self wants something different from my actual self, sometimes I’m wrong about what I want, sometimes I don’t know what I want, sometimes I want X but not the consequences of X and I’m not logically consistent enough to realize that’s a contradiction, sometimes I want [euphemism for X] but am strongly against [dysphemism for X].

Anyone programming an inverse reinforcement learner has to make certain choices about how to deal with these problems. Some ways of dealing with them will be faithful to what I would consider “a good outcome” or “my best self”. Other ways would be really bad - on my worst day, I’ve occasionally just wished the world didn’t exist, and it’s a good thing I didn’t have a superintelligence dedicated to interpreting and carrying out my innermost wishes on a sub-millisecond timescale.

(Before we go on, an aside: is all of this ignoring that there’s more than one human? Yes, definitely! If you want to align an AI with The Good in general - eg not have it commit murder even if its human owner orders it to murder - that will take even more work. But the one-person case is simpler and will demonstrate everything that needs demonstrating.)

We were originally trying to avoid the situation where someone had to hard-code my preferences into an AI and get them right the first time. We came up with a clever solution: use inverse reinforcement learning to make the AI infer my preferences. But now we see we’ve kicked the can up a meta-level: someone has to hard-code the meta-rules for determining my preferences into an AI and get them right the first time.

V.

CHAI says: okay, but this isn’t so bad. Assistance games don’t produce a perfect copy of the human utility function on the first try - it’s not a Sovereign. But it will probably, most of the time, be corrigible. Why?

Suppose you have some hackish implementation of an assistance game. It’s not the Platonic implementation - that would be the Sovereign - but it’s at least the equivalent of box C on the image above. It takes human actions as input, makes some guesses about what humans want, and tries its best to reconstruct the human utility function, ending up with some approximation.

It’s important to distinguish between a few things here:

Suppose some IRL AI has utility function B. It knows some facts about what humans want (which it connects differently than we would, to some degree) but doesn’t know others. You can think of this as a probability distribution across utility functions. Let’s say the AI has three theories, each with equal probability:

It can take various next steps, including asking humans what color they like, what kind of office supplies they need, or starting to produce something. Suppose it decides Theory 1 is most plausible, and it starts making blue paperclips. Its human programmer shouts “NOOOOO!” and lunges for the off-switch. What happens now? This seems like strong evidence that blue paperclips weren’t the human’s goal after all. So the AI could:

CHAI’s argument for corrigibility is that it would probably choose option 5. Why?

Suppose that, under Theory 2 (where humans like red paperclips) they place zero value on red staples, and vice versa for Theory 3. If the AI tries to maximize one or the other utility function, it’s only got a 50% chance of getting it right; if it chooses the combination of both, it will instead only be getting 50% of the maximum utility, with certainty. If it lets itself be turned off, then humans will pursue the true human utility function, which is correlated to some degree with the (unknown) true AI utility function. How correlated? If it’s at least 50% correlated, then it’s better than the AI trying to guess on its own.

In this toy example, the AI only has two remaining theories about the true human utility function. But in real life, there will be much greater uncertainty - there are a million different things people could want. Now instead of the true human and true AI utility functions having to be at least 50% correlated, they have to be 0.0001% correlated (one in a million). Sounds easy!

So under plausible assumptions, the AI will always let itself be turned off, even though its utility function doesn’t quite match humans’.

VI.

Now we finally come to MIRI’s argument for why this doesn’t work. MIRI notes that the AI has a sixth option:

This is clearly better than any of the other options. Which brings us back to the skit from the beginning:

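As an aside, the arithmetic behind CHAI's option-5 argument in section V can be checked with a few lines of Python. This is a sketch under stated assumptions, not anything taken from the CHAI or MIRI papers: all theories are equally likely, each places zero value on the others' outcome, and "correlation" is read (my interpretation) as the fraction of maximum utility the AI expects if humans take over. The function and option names are hypothetical. It covers only the comparison CHAI makes, not the sixth option.

```python
def expected_utilities(num_theories: int, correlation: float) -> dict:
    """Expected utility of each option, as a fraction of the maximum.

    Assumes equally likely theories that each place zero value on the
    others' outcome, as in the toy example.
    """
    return {
        # Pick one theory and go all in: correct with probability 1/N.
        "guess_one_theory": 1.0 / num_theories,
        # Split resources evenly: a guaranteed 1/N of the maximum.
        "split_between_theories": 1.0 / num_theories,
        # Allow shutdown: humans pursue their own (correlated) goals.
        # Treating "correlation" as a utility fraction is an assumption.
        "allow_shutdown": correlation,
    }


def prefers_shutdown(num_theories: int, correlation: float) -> bool:
    """True if allowing shutdown strictly beats the other modeled options."""
    utilities = expected_utilities(num_theories, correlation)
    return max(utilities, key=utilities.get) == "allow_shutdown"


# Two remaining theories: shutdown wins only above 50% correlation.
print(prefers_shutdown(2, 0.6))
print(prefers_shutdown(2, 0.4))
# A million theories: even 1% correlation is far above the 1/N threshold.
print(prefers_shutdown(1_000_000, 0.01))
```

Under these assumptions the threshold is simply 1/N, which is why more uncertainty makes deference look better within this limited menu of options.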
Now I feel silly for spending so long failing to understand this argument.

In the toy example, the assistance game fails because the AI’s utility function (tile the universe with paperclips) is very bad, even if it has a trivial concession to human preference (they’ll be humans’ favorite color).

This might feel like cheating - sure, if you assume the AI’s attempt to approximate humans’ utility function will end up with something totally antithetical to humans’ utility function, you can prove it won’t work. But everyone had already admitted that real IRL would end up with something different from the real utility function. This just proves that it doesn’t “fail gracefully”, in the sense of letting itself be turned off. And although I chose a deliberately outrageous example, the same considerations apply if it’s 1% different from the true human utility function, or 0.1% different.

VII.

I hoped this would spark a debate between Eliezer/MIRI (whose position I’ve tried to relay above) and Stuart/CHAI. It sparked a pretty short debate, which I will try my best to relay here in the hopes that it can lead to more.

Stuart had at least three substantial objections to this post.

First, it’s wrong to say that we don’t know how to get AIs to play assistance games or do inverse reinforcement learning. It would be more accurate to say that only about deep-learning-based AIs. This might seem nitpicky, because most modern AIs are based on deep learning, and most people expect future AIs to involve a lot of deep learning too. But it’s possible that future AIs might combine deep learning with other, more programmable paradigms, either because that works better or because we do that deliberately in order to align them. Stuart writes:

Eliezer responds:

Second, Stuart says that the argument undersells the advance made by getting AIs to search for human values. Yes, simply asking an AI to do this doesn’t guarantee it will get this right, and there’s still a lot more work to be done. But it is much more tractable than trying to find human values all on your own. He writes:

Eliezer answers:

Third, in the end the AI really would let itself be shut off, for the same reasons listed in the original paper. Stuart writes:

Eliezer responds:

And elsewhere, Stuart writes:

In conclusion, I don’t feel comfortable trying to adjudicate a debate between two people who both have so much of an expertise advantage over me. The most I’ll say is that their crux seems to be whether the AI could end up with an uncorrectably wrong model of the human utility function; if no, everything Stuart writes makes sense; if yes, everything Eliezer writes does. Stuart seems to agree that this is making convergence difficult:

And Eliezer answers:

This is as far as the discussion has gotten so far, but if either of them has a response to anything in this post or the comments to it, I’m happy to publish it (or signal-boost it if they post it as a comment here). Thanks to both of them for taking the time to try to help me understand their positions.
