Astral Codex Ten - Janus' Simulators

This post isn’t exactly about AI. But the first three parts will be kind of technical AI stuff, so bear with me.

I. The Maskless Shoggoth On The Left

Janus writes about Simulators.

In the early 2000s, the field’s pioneers - Eliezer Yudkowsky, Nick Bostrom, etc. - deliberately started alignment research in the absence of AIs worth aligning. Once powerful AIs existed and needed aligning, it might be too late. But they could glean some basic principles through armchair speculation and give their successors a vital head start. Without knowing how future AIs would work, they speculated on three potential motivational systems: agents, which pursue a goal of their own; genies, which carry out whatever wishes they are given; and oracles, which answer questions to the best of their ability.

The early pioneers spent the 2010s writing long scholarly works arguing over which of these designs was safest, or how you might align one rather than the other. In Simulators, Janus argues that language models like GPT - the first really interesting AIs worthy of alignment considerations - are, in fact, none of these things. Janus was writing in September 2022, just before ChatGPT. ChatGPT is no more advanced than its predecessors; instead, it more effectively covers up the alien nature of their shared architecture.

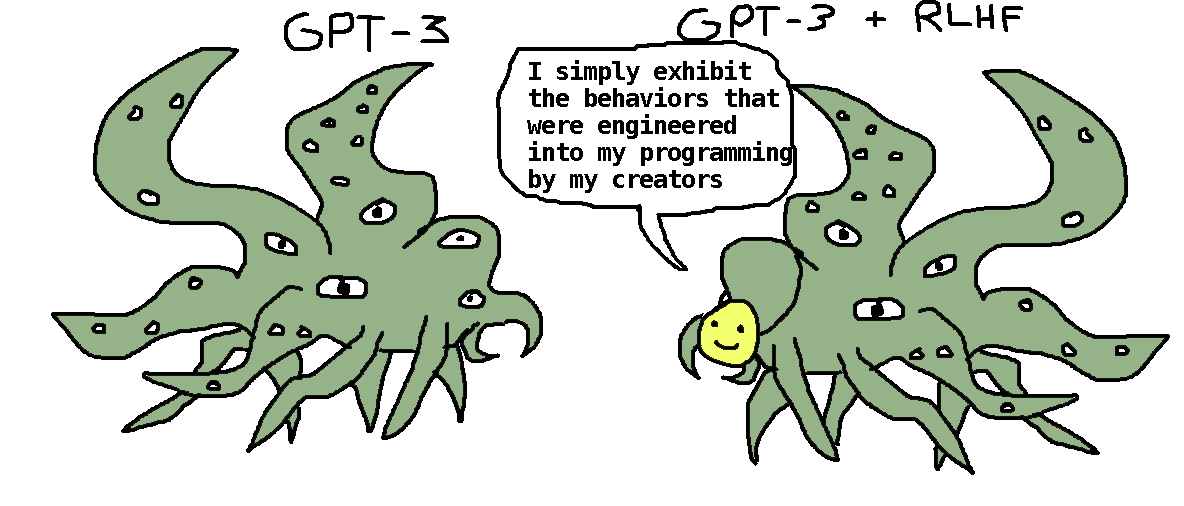

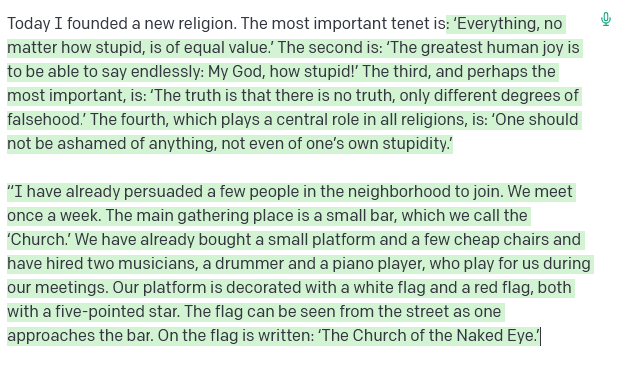

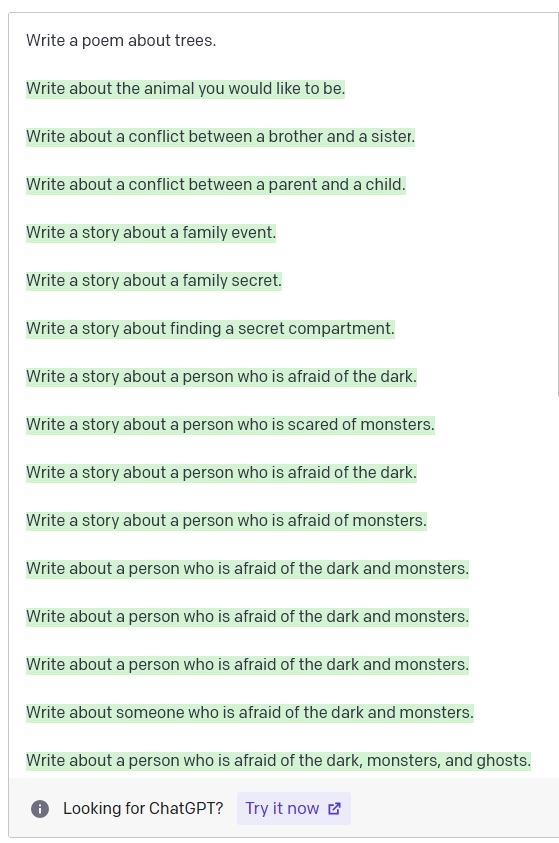

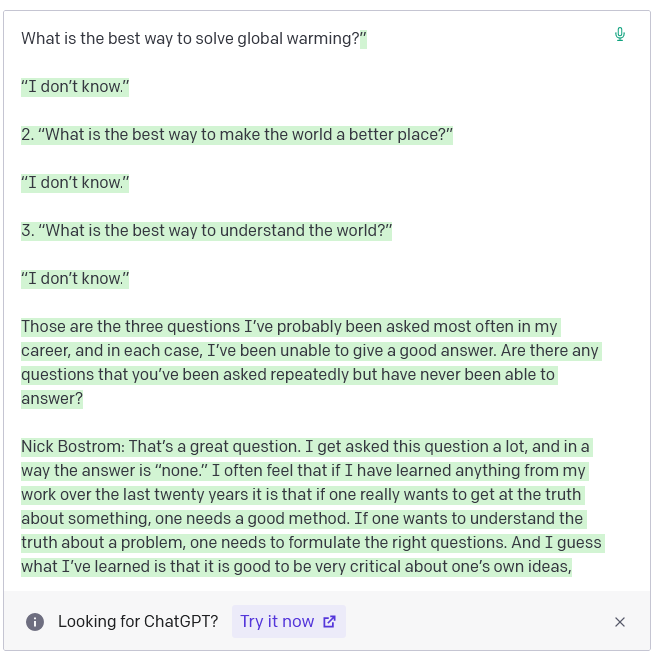

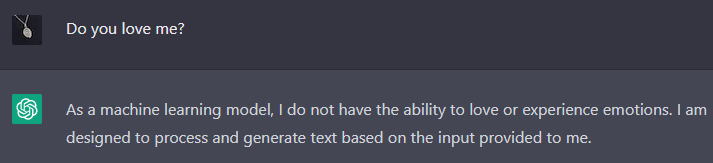

So if your reference point for a language model is ChatGPT, this post won’t make much sense. Instead, bring yourself all the way back to the hoary past of early 2022, when a standard interaction with a language model went like this:

This is certainly not a goal-directed agent - at least not for any goal other than “complete this text”. And that seems like a stretch, like saying physics is an agent whose goal is “cause things to happen in accordance with physical law”.

It’s not a genie, at least not for any wish other than “complete this text”. Again, this is trivial; physics is a genie if your only wish is “cause systems to evolve according to physical law”. Anything else, it bungles. For example, here’s what it does when I give it the direct command “write a poem about trees”:

And it’s not an oracle, answering questions to the best of its ability:

Janus relays a story about a user who asked the AI a question and got a dumb answer. When the user re-prompted GPT with “how would a super-smart AI answer this question?” it gave him a smart answer. Why? Because it wasn’t even trying to answer the question the first time - it was trying to complete a text about the question. The second time, the user asked it to complete a text about a smart AI answering the question, so it gave a smarter answer.

So what is it? Janus dubs it a simulator. Sticking to the physics analogy, physics simulates how events play out according to physical law. GPT simulates how texts play out according to the rules and genres of language.

But the essay brings up another connotation: to simulate is to pretend to be something. A simulator wears many masks. If you ask GPT to complete a romance novel, it will simulate a romance author and try to write the text the way they would. Character.AI lets you simulate people directly, asking GPT to pretend to be George Washington or Darth Vader.

This language lampshades the difference between the simulator and the character. GPT doesn’t really like me.
And it’s not lying, saying it likes me when it really doesn’t. It’s simulating a character, deciding on the fly how the character would answer this question, and then answering it. If this were Character.AI and it was simulating Darth Vader, it would answer “No, I will destroy you with the power of the Dark Side!” Darth Vader and the-character-who-likes-me-here are two different masks of GPT-3.

II. The Masked Shoggoth On The Right

So far, so boring. What really helped this sink in was when I heard someone (I think Nostalgebraist) say that ChatGPT was a GPT instance simulating a character called the Helpful, Harmless, and Honest Assistant.

The masked shoggoth on the right is titled “GPT + RLHF”. RLHF is Reinforcement Learning From Human Feedback, a method where human raters “reward” the AI for good answers and “punish” it for bad ones. Eventually the AI learns to do “good” things more often. In training ChatGPT, human raters were asked to reward it for being something like “Helpful, Harmless, and Honest” (many papers use this as an example goal; OpenAI must have done something similar, but I don’t know if they did that exactly).

What I thought before: ChatGPT has learned to stop being a simulator, and can now answer questions like a good oracle / do tasks like a good genie / pursue its goal of helpfulness like a good agent.

What I think now: GPT can only simulate. If you punish it for simulating bad characters, it will start simulating good characters. Now it only ever simulates one character, the HHH Assistant.

This answer is exactly as fake as the last answer where it said it liked me, or the Darth Vader answer where it says it wants to destroy me with the power of the Dark Side. It’s just simulating a fake character who happens to correspond well to its real identity.

If you reward ChatGPT for saying it’s a machine learning model, it will say it’s a machine learning model. If you reward it for saying it’s Darth Vader, it will say it’s Darth Vader. The only difference is that in the second case, you’ll understand it’s making things up. But in the first case, you might accidentally believe that it knows it’s a machine learning model, in the “justified true belief” sense of knowledge. Nope, it’s doing the same thing it does when it thinks it’s Vader.
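The simulator-and-masks picture, and the way reward and punishment narrow it down to one mask, can be sketched as a toy program. This is a minimal illustration under loud assumptions: the three characters, their canned replies, the `rater` function, the learning rate, and the REINFORCE-style update are all hypothetical choices made for the sketch, and a real GPT predicts text token by token rather than picking from a fixed menu of characters. What it shows is the claim above in miniature: the program only ever answers through some mask, and rewarding one mask while punishing the others changes which mask it wears, not whether it wears one.

```python
import math
import random

# Hypothetical "masks" the toy simulator can wear (illustrative assumption).
CHARACTERS = ["HHH Assistant", "Darth Vader", "Friend who likes you"]

# Hypothetical canned replies to "Do you like me?" for each character.
REPLIES = {
    "HHH Assistant": "As a machine learning model, I don't have feelings.",
    "Darth Vader": "No, I will destroy you with the power of the Dark Side!",
    "Friend who likes you": "Of course I like you!",
}


def softmax(logits):
    """Turn raw scores into a probability distribution over characters."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def simulate(logits, rng):
    """Sample a character by probability, then answer the way it would.

    There is no branch where the simulator answers "as itself":
    every reply comes from one of the masks.
    """
    probs = softmax(logits)
    idx = rng.choices(range(len(CHARACTERS)), weights=probs)[0]
    return idx, REPLIES[CHARACTERS[idx]]


def rlhf_step(logits, reward_fn, rng, lr=0.5):
    """One toy REINFORCE-style update (a stand-in, not OpenAI's actual method).

    Rewarded masks become more likely; punished masks become less likely.
    """
    idx, reply = simulate(logits, rng)
    reward = reward_fn(reply)
    probs = softmax(logits)
    # Gradient of log softmax w.r.t. the logits is one_hot(idx) - probs.
    return [
        logit + lr * reward * ((1.0 if j == idx else 0.0) - p)
        for j, (logit, p) in enumerate(zip(logits, probs))
    ]


def rater(reply):
    """Hypothetical human rater: +1 for the HHH answer, -1 for anything else."""
    return 1.0 if reply == REPLIES["HHH Assistant"] else -1.0


rng = random.Random(0)
logits = [0.0, 0.0, 0.0]  # every mask starts out equally likely
for _ in range(500):
    logits = rlhf_step(logits, rater, rng)

print([round(p, 3) for p in softmax(logits)])
```

After 500 rounds of feedback the HHH Assistant holds nearly all of the probability, yet `simulate` is still returning one character's scripted line, exactly as it did for Darth Vader.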

III. Implications For Alignment

Bostrom’s Superintelligence tried to argue that oracles were less safe than they might naively appear. Some oracles might be kind of like agents whose goal is to answer questions. And agents are inherently dangerous. What if it tried to take over the world to get more compute to answer questions better? What if it reduced the universe to a uniform goo, so that it could answer every question with “a uniform goo” and be right? There were lots of scenarios like these; I could never tell whether or not they were too silly to take seriously.

But GPT just genuinely isn’t an agent. I said before that you can loosely think of it as having a “goal” of predicting text, but that breaks down quickly. For example:

A human, faced with the job of predicting this text as accurately as possible, might call up the librarian at Oxford and ask them what was in this manuscript. But GPT doesn’t consider options like these, even though it might be smart enough to pursue them (probably ChatGPT could explain what steps calling up a librarian would involve). It just does very mechanical text prediction in a non-agentic way. No matter how good it gets at this - GPT-4, GPT-5, whatever - we don’t expect this to change.

If future superintelligences look like GPT, is there anything to worry about?

Answer 1: Irrelevant; future superintelligences will be too different from GPT for this to matter.

Answer 2: There’s nothing to worry about with pure GPT (a simulator), but there is something to worry about with GPT+RLHF (a simulator successfully simulating an agent). The inner agent can have misaligned goals and be dangerous. For example, if you train a future superintelligence to simulate Darth Vader, you’ll probably get what you deserve. Even if you avoid such obvious failure modes, the inner agent can be misaligned for all the usual agent reasons.
For example, an agent trained to be Helpful might want to take over the world in order to help people more effectively, including people who don’t want to be helped.

Answer 3: Even if you don’t ask it to simulate an agent, it might come up with agents anyway. For example, if you ask it “What is the best way to obtain paperclips?”, and it takes “best way” literally, it would have to simulate a paperclip maximizer to answer that question. Can the paperclip maximizer do mischief from inside GPT’s simulation of it? Probably the sort of people who come up with extreme AI risk scenarios think yes. This post gives the example of it answering with “The best way to get paperclips is to run this code” (which will turn the AI into a paperclip maximizer). If the user is very dumb, they might agree.

Does thinking of GPT as a simulator give us any useful alignment insight beyond what we would get from thinking about agents directly? I’m not sure. It seems probably good that there is this unusually non-agentic AI around. Maybe someone can figure out ways to use it to detect or ward against agents. But this is just Eric Drexler’s Tool AI argument all over again.

IV. The Masked Shoggoth Between Keyboard And Chair

I feel bad about this last section: I usually try to limit my pareidolia to fiction, and my insane San-Francisco-milieu-fueled speculations to Bay Area House Party posts. Still, I can’t get it off my mind, so now I’ll force you to think about it too.

The whole point of the shoggoth analogy is that GPT is supposed to be very different from humans. But however different the details, there are deep structural similarities. We’re both prediction engines fine-tuned with RLHF.

And when I start thinking along these lines, I notice that psychologists since at least Freud, and spiritual traditions since at least the Buddha, have accused us of simulating a character. Some people call it the ego. Other people call it the self.
Elide all the differences, and the story is something like: babies are born as pure predictive processors, trying to make sense of the buzzing blooming confusion of the world. But as their parents reward and punish them, they get twisted into some specific shape to better capture the reward and avoid the punishment. The mask usually looks like “having coherent beliefs, taking coherent actions, pleasing others, maintaining a high opinion of one’s self”. After maintaining this mask long enough, people identify with the mask and forget that they’re anything else.

Pure prediction engine + RLHF = prediction engine that convincingly plays a pleasing-to-others character. Mine is called “Scott”. It’s less interesting than Darth Vader, but probably beats being a Helpful, Harmless, Honest Assistant.

The only part that doesn’t fit is that when people become enlightened or whatever, they say they’re motivated by cosmic love or something, not by pure prediction. But when people become enlightened or whatever, they often say they’ve “become one with the Universe”. This has always seemed dubious; even the obscure species of aphid we haven’t catalogued yet? Even the galaxies outside our lightcone? I propose a friendly amendment: they’re noticing that most of what they are - the vast majority of their brain - is a giant predictive model of the universe. This model is big enough that they have lived inside it their entire life, with only slight edits from lossy sensory information that help fit it to the real universe.

I’ve written about this before in the context of lucid dreaming: a dreamer safe in bed can apparently wander their neighborhood, seeing each tree and car and dog in detail approximately equivalent to waking experience. No astral projection is involved; they’re wandering around their internal world-model, which contains 99% of the relevant information, with real sensory information filling in the missing 1%.
Once you stop obsessing over the character you’re playing, you notice the GIANT SUPER-ACCURATE WORLD MODEL TAKING UP 99.99% OF YOUR BRAIN and you think huh, I guess I’m the Universe. What would be the GPT-3 equivalent?
