Edge 272: Inside Toolformer, Meta AI's New Transformer That Learned to Use Tools to Produce Better Answers

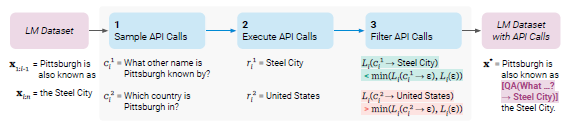

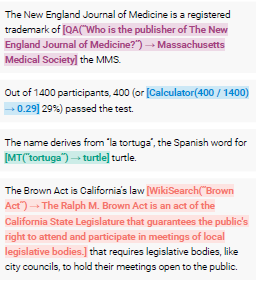

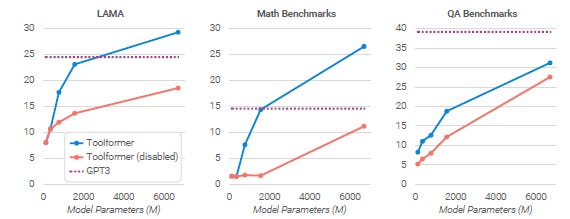

The model mastered the use of tools such as calculators, calendars, or Wikipedia search queries across many downstream tasks.

Today’s large language models have made remarkable strides across natural language processing tasks and display a range of emergent capabilities. However, these models have inherent limitations that can only be partially mitigated by increasing their size. These limitations include an inability to access information about recent events, a tendency to fabricate information, difficulties in processing low-resource languages, a lack of mathematical proficiency, and no awareness of the passage of time. One promising approach to overcoming them is to equip language models with the ability to use external tools such as search engines, calculators, or calendars. However, current solutions either require extensive human annotations or are restricted to specific tasks, which hinders wider adoption.

A few days ago, Meta AI published a research paper detailing Toolformer, a novel model that learns to use tools in a self-supervised manner without the need for human annotations.

Meta AI’s approach with Toolformer is based on in-context learning and the generation of datasets from scratch. Given just a few examples of how an API can be used, Toolformer annotates a large language modeling dataset with potential API calls. Through a self-supervised loss, the model determines which API calls actually help predict future tokens, and it is then fine-tuned on the calls that do. With this approach, language models can learn to control a variety of tools and to make informed decisions on when and how to use them.
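The idea of keeping only the API calls that help predict future tokens can be illustrated with a small sketch. It is not Meta AI's implementation: the names `ApiCall`, `linearize`, `is_useful`, and `toy_loss` are hypothetical, the `<API> Name(input) → result </API>` text format is an assumption, and the word-overlap loss is only a stand-in for a real language-model loss over the continuation. The rule shown is: keep a call if inserting it together with its result lowers the loss by at least a threshold, compared with the better of "no call" and "call without result".

```python
from dataclasses import dataclass
from typing import Callable

# (context, continuation) -> loss; lower means the continuation is easier to predict.
LossFn = Callable[[str, str], float]


@dataclass
class ApiCall:
    name: str        # API name, e.g. "Calculator"
    api_input: str   # API input as text
    result: str      # text returned by executing the call


def linearize(call: ApiCall, with_result: bool) -> str:
    """Render a call as text. The <API>/→ delimiters are an assumed format."""
    body = f"{call.name}({call.api_input})"
    if with_result:
        body += f" → {call.result}"
    return f"<API> {body} </API>"


def is_useful(prefix: str, continuation: str, call: ApiCall,
              loss_fn: LossFn, threshold: float) -> bool:
    """Keep the call only if seeing its result makes the continuation easier."""
    loss_with_result = loss_fn(f"{prefix} {linearize(call, True)}", continuation)
    loss_baseline = min(
        loss_fn(prefix, continuation),                              # no call at all
        loss_fn(f"{prefix} {linearize(call, False)}", continuation),  # call, no result
    )
    return loss_baseline - loss_with_result >= threshold


def toy_loss(context: str, continuation: str) -> float:
    """Toy stand-in for an LM loss: count continuation words absent from context."""
    seen = set(context.split())
    return float(sum(token not in seen for token in continuation.split()))


prefix = "The sum of 400 and 35 is"
continuation = "435 in total"
helpful = ApiCall("Calculator", "400 + 35", "435")
unhelpful = ApiCall("Calculator", "400 + 35", "17")

print(is_useful(prefix, continuation, helpful, toy_loss, threshold=1.0))
print(is_useful(prefix, continuation, unhelpful, toy_loss, threshold=1.0))
```

With a real model, `loss_fn` would be replaced by the model's own loss on the tokens that follow the insertion point, so no human labels are needed to decide which calls to keep.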
Toolformer allows the model to retain its generality and to independently decide when and how to use various tools, so tool use is not tied to specific tasks.

Inside the Toolformer Architecture

The core idea behind Toolformer is to enhance a language model (M) with the ability to use different tools via API calls. The inputs and outputs of each API are represented as text sequences, which makes it possible to insert API calls into any text using special tokens. Each API call is represented as a tuple (ac, ic), where ac is the name of the API and ic is its input. Given an API call (ac, ic) with a corresponding result (r), the linearized sequences of the API call without and with the result are denoted as e(ac, ic) and e(ac, ic, r), respectively.

The first step is to convert a dataset of plain text into an augmented dataset by inserting API calls. This is done in three steps: sampling potential API calls, executing the API calls, and filtering the API calls based on their usefulness in predicting future tokens. After filtering, the remaining API calls are merged and interleaved with the original inputs to form the augmented dataset. The language model is then finetuned on this augmented dataset, allowing it to make its own decisions on when and how to use each tool based on its own feedback.

In the inference stage, the model generates text as usual until it produces the “→” token, indicating that it expects an API response next. The appropriate API is then called to obtain the response, and decoding continues after inserting the response and the </API> token.

The researchers explored a range of tools to address the limitations of regular language models (LMs). The only requirements for these tools are that their inputs and outputs can be represented as text sequences and that a few examples of how to use them are available.
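The inference procedure and the text-in, text-out tool contract can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's code: the linearized call is assumed to look like `<API> Name(input) → result </API>`, `decode_with_tools` and `calculator` are hypothetical names, and the "model" is just a scripted list of tokens, whereas a real model would condition on the inserted result when generating the rest of the text.

```python
import operator
import re
from typing import Callable, Dict, Iterable, List, Optional

Tool = Callable[[str], str]  # every tool maps input text to output text

API_START, API_END, ARROW = "<API>", "</API>", "→"


def calculator(expression: str) -> str:
    """Toy calculator for 'a op b'; returns the result rounded to two decimals."""
    match = re.fullmatch(
        r"\s*(-?\d+(?:\.\d+)?)\s*([-+*/])\s*(-?\d+(?:\.\d+)?)\s*", expression
    )
    if match is None:
        return ""
    a, op, b = float(match.group(1)), match.group(2), float(match.group(3))
    if op == "/" and b == 0:
        return ""
    ops = {"+": operator.add, "-": operator.sub,
           "*": operator.mul, "/": operator.truediv}
    return f"{ops[op](a, b):.2f}"


def decode_with_tools(model_tokens: Iterable[str], tools: Dict[str, Tool]) -> str:
    """Copy tokens until the arrow appears, then call the tool and insert its result."""
    output: List[str] = []
    call_start: Optional[int] = None  # index of the first token after <API>
    for token in model_tokens:
        output.append(token)
        if token == API_START:
            call_start = len(output)
        elif token == ARROW and call_start is not None:
            call_text = " ".join(output[call_start:-1])  # e.g. "Calculator(400 / 1400)"
            match = re.fullmatch(r"(\w+)\((.*)\)", call_text)
            result = ""
            if match and match.group(1) in tools:
                result = tools[match.group(1)](match.group(2))
            if result:
                output.append(result)
            output.append(API_END)  # close the call, then decoding continues
            call_start = None
    return " ".join(output)


scripted_tokens = ["The", "share", "is", "<API>", "Calculator(400", "/", "1400)",
                   "→", "of", "the", "total."]
print(decode_with_tools(scripted_tokens, {"Calculator": calculator}))
# The share is <API> Calculator(400 / 1400) → 0.29 </API> of the total.
```

Because each tool is just a function from text to text, adding another one (a calendar, a search engine) only means registering another entry in the `tools` dictionary.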
The five tools explored are a question-answering system, a Wikipedia search engine, a calculator, a calendar, and a machine translation system.

1. Question Answering System: The question-answering system is based on another LM that can answer simple factual questions.
2. Calculator: The calculator can perform basic arithmetic operations and returns results rounded to two decimal places.
3. Wikipedia Search: The Wikipedia search engine returns short text snippets from Wikipedia based on a search term.
4. Machine Translation: The machine translation system can translate phrases from any language into English.
5. Calendar: The calendar returns the current date without taking any input, providing temporal context for predictions that require an awareness of time.

The Toolformer implementation is based on a finetuned version of GPT-J, which has only 6.7 billion parameters. The model was able to outperform GPT-3 and GPT-J across several benchmarks.

The ideas behind Toolformer represent a new frontier for LLMs, in which they not only perform sophisticated language tasks but also complement them with access to tools and APIs. Can’t wait to see Meta AI expand on these ideas.
