Hello and thank you for tuning in to Issue #491.

Once a week we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

***

Seeing this for the first time? Subscribe here: https://datascienceweekly.substack.com/subscribe

***

We've started a subscriber-only Slack community where we tackle learning the latest tools, keeping up with the latest techniques, career entry & growth, and anything else that's stressing you out at the office. Paid subscribers not only get access, they'll also get a catch-up email every week updating them on what happened on Slack that they might have missed.

So if this is useful for your work, you can become a paid subscriber here:

https://datascienceweekly.substack.com/subscribe

Let’s build great Data/ML products, drive results, and accelerate your career.

***

If you don’t find this email useful, please unsubscribe here.

Hope you enjoy it!

:)

And now, let's dive into some interesting links from this week:

Watch an A.I. Learn to Write by Reading Nothing but Jane Austen

The core of an A.I. program like ChatGPT is something called a large language model: an algorithm that mimics the form of written language….While the inner workings of these algorithms are notoriously opaque, the basic idea behind them is surprisingly simple….To show you what this process looks like, we trained six tiny language models starting from scratch. We’ve picked one trained on the complete works of Jane Austen…
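The "surprisingly simple" basic idea is that the model predicts the next character from what came before. The article's models are far more capable than this. The sketch below is a deliberately crude stand-in: a character bigram model that only counts which character follows which, trained on Austen's famous opening line. The function names are our own and are purely illustrative.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each character is followed by each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def next_char_probs(counts, prev):
    """Normalize the follow-counts for `prev` into next-character probabilities."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {ch: n / total for ch, n in followers.items()}

def generate(counts, start, length, seed=0):
    """Sample up to `length` characters, each conditioned only on the one before it."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:  # this character was never followed by anything in training
            break
        chars, weights = zip(*followers.items())
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)

corpus = ("It is a truth universally acknowledged, that a single man in possession "
          "of a good fortune, must be in want of a wife.")
model = train_bigram(corpus)
print(next_char_probs(model, "w"))  # 'w' is followed by 'l', 'a' and 'i' once each
print(generate(model, "I", 40))
```

A real language model replaces the lookup table with a neural network that conditions on many previous characters. The training signal is the same, though: predict what comes next.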

The future of programming: Research at CHI 2023

The esteemed CHI conference is happening this week, and I'm jealous that I can't be there. Instead, I'm going through the proceedings and reading all of the papers related to programming, many of which involve AI. Here are some papers that stood out to me…

Recommended Resources for Starting A/B Testing

To make A/B testing useful, you need to design the experiment well, check data trustworthiness, run some statistics, oversee an experimentation platform, and effectively communicate results. With so many moving parts, it’s best to learn from others. After over a decade of combined experience building and running A/B testing systems at various companies and teaching experimentation best practices, we wanted to share our favorite resources for getting started with A/B testing…
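For the "run some statistics" step, a common starting point is the pooled two-proportion z-test on conversion rates. The sketch below is our own illustration and is not taken from the recommended resources. It assumes a fixed sample size decided in advance, independent users, large samples, and no peeking at interim results. The numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_ztest(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates (pooled variance)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate under H0: both arms share one rate
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided tail probability
    return z, p_value

# Hypothetical experiment: 2,000 users per arm, 10% vs 13% conversion.
z, p = two_proportion_ztest(200, 2000, 260, 2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

The test is the easy part. The trustworthiness checks and platform concerns mentioned above determine whether its assumptions actually hold.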

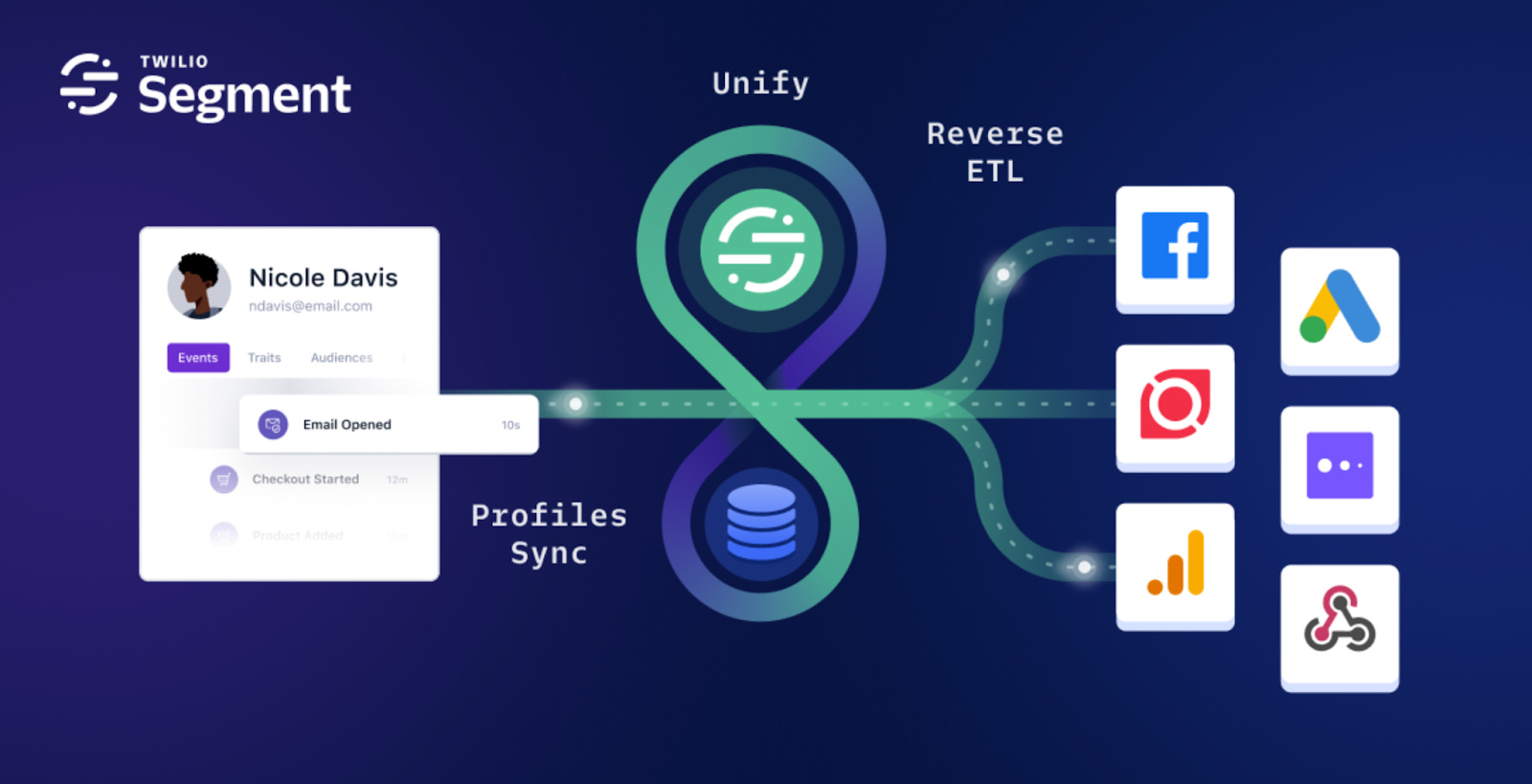

Track every customer interaction in real-time and gain a deep understanding of your customers’ behavior


Segment Unify allows you to unite online and offline customer data in real-time across every platform and channel. Use Segment Profiles Sync to send identity resolved customer profiles to your data warehouse, where they can be used for advanced analytics and enhanced with valuable data-at-rest. Then use Segment Reverse ETL to immediately activate your ‘golden’ profiles across your CX tools of choice.

Want to sponsor the newsletter? Email us for details --> team@datascienceweekly.org

Why did Google Brain exist?

This essay was originally written in December 2022 as I pondered the future of my job. I sat on it because I wasn’t sure of the optics of posting such an essay while employed by Google Brain. But then Google made my decision easier by laying me off in January. My severance check cleared, and last week, Brain and DeepMind merged into one new unit, killing the Brain brand in favor of “Google DeepMind”. As somebody with a unique perspective and the unique freedom to share it, I hope I can shed some light on the question of Brain’s existence. I’ll lay out the many reasons for Brain’s existence and assess their continued validity in today’s economic conditions…

List of accepted papers at the Machine Learning for Drug Discovery workshop at #ICLR2023

"DOG: Discriminator-only Generation", "Multi-scale Sinusoidal Embeddings Enable Learning on High Resolution Mass Spectrometry Data", "Graph Generation with Destination-Driven Diffusion Mixture", "Exploring Chemical Space with Score-based Out-of-distribution Generation", "Do Deep Learning Methods Really Perform Better in Molecular Conformation Generation?", "Holographic-(V)AE: an end-to-end SO(3)-Equivariant (Variational) Autoencoder in Fourier Space", "Differentiable Multi-Target Causal Bayesian Experimental Design", "Do deep learning models really outperform traditional approaches in molecular docking?", and more...

Chart Chat #40: Data Visualization in the Age of Automation

Join Chart Chat live with hosts Steve Wexler, Jeff Shaffer, Andy Cotgreave, and Amanda Makulec to talk about the good, bad, and scaredy cats of data visualization. This episode, Elijah Meeks (Chief Innovation Officer and Co-Founder of Noteable) joins us to talk about what automation and AI mean for data visualization, from automated recommendation engines for charts to ChatGPT….

Zeno: An Interactive Framework for Behavioral Evaluation of Machine Learning

Machine learning models with high accuracy on test data can still produce systematic failures, such as harmful biases and safety issues, when deployed in the real world. To detect and mitigate such failures, practitioners run behavioral evaluation of their models, checking model outputs for specific types of inputs. Behavioral evaluation is important but challenging, requiring that practitioners discover real-world patterns and validate systematic failures….we designed Zeno, a general-purpose framework for visualizing and testing AI systems across diverse use cases. In four case studies with participants using Zeno on real-world models, we found that practitioners were able to reproduce previous manual analyses and discover new systematic failures…
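At its core, behavioral evaluation means slicing inputs by a property and checking outputs per slice, so that failures hidden in the overall score become visible. The hand-rolled sketch below illustrates that idea only. It is not Zeno's API. The records, the `lighting` field and the 0.2 margin are all hypothetical.

```python
from collections import defaultdict

def accuracy_by_slice(examples, slice_fn):
    """Group examples by slice_fn(example) and compute accuracy within each group."""
    hits, totals = defaultdict(int), defaultdict(int)
    for ex in examples:
        key = slice_fn(ex)
        totals[key] += 1
        hits[key] += ex["prediction"] == ex["label"]  # True counts as 1
    return {key: hits[key] / totals[key] for key in totals}

def flag_slices(per_slice, overall, margin=0.2):
    """Return slices whose accuracy falls more than `margin` below the overall accuracy."""
    return sorted(key for key, acc in per_slice.items() if acc < overall - margin)

# Hypothetical predictions from an image classifier, tagged with capture conditions.
examples = [
    {"label": "cat", "prediction": "cat", "lighting": "day"},
    {"label": "dog", "prediction": "dog", "lighting": "day"},
    {"label": "cat", "prediction": "cat", "lighting": "day"},
    {"label": "dog", "prediction": "cat", "lighting": "night"},
    {"label": "cat", "prediction": "dog", "lighting": "night"},
    {"label": "dog", "prediction": "dog", "lighting": "night"},
]
overall = sum(ex["prediction"] == ex["label"] for ex in examples) / len(examples)
per_slice = accuracy_by_slice(examples, lambda ex: ex["lighting"])
print(overall, per_slice, flag_slices(per_slice, overall))
```

Here a respectable overall accuracy hides a model that is much worse on night-time images. This is the kind of systematic failure the paper describes.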

Reinforcement Learning for Language Models

I was puzzled for a while as to why we need RL for LM training, rather than just using supervised instruct tuning. I now have a convincing argument, which is also reflected in a recent talk by John Schulman. I summarize it in this post…
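To make the contrast concrete, here are the two objectives in their standard textbook form. This is the usual KL-regularized, InstructGPT-style RLHF setup and is not copied from the post. In the notation, $\pi_\theta$ is the model being trained, $\pi_{\text{ref}}$ is the starting (supervised) model, $r_\phi$ is a learned reward model, and $\beta$ is a penalty weight.

```latex
% Supervised instruction tuning: maximize likelihood of demonstration answers y
\mathcal{L}_{\text{SFT}}(\theta) = -\,\mathbb{E}_{(x,y)\sim\mathcal{D}}
  \Big[\sum_{t=1}^{|y|} \log \pi_\theta(y_t \mid x, y_{<t})\Big]

% RLHF: maximize reward on the model's own samples, stay close to the reference model
\max_\theta \; \mathbb{E}_{x\sim\mathcal{D}}\Big[
  \mathbb{E}_{y\sim\pi_\theta(\cdot\mid x)}\big[r_\phi(x,y)\big]
  - \beta\,\mathrm{KL}\big(\pi_\theta(\cdot\mid x)\,\|\,\pi_{\text{ref}}(\cdot\mid x)\big)\Big]
```

The key difference is where $y$ comes from. Supervised tuning only ever scores answers written by someone else. The RL objective scores answers the model itself produces, so it can reward or penalize the model's own behavior, including answers it cannot actually back up.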

Reinforcement Learning from Human Feedback: Progress and Challenges

John Schulman received a Ph.D. from Berkeley EECS in 2016, advised by Pieter Abbeel. He now leads a team working on ChatGPT and RL from Human Feedback at OpenAI, where he was a co-founder. His previous work includes foundational algorithms of deep RL (TRPO, PPO), generalization in RL (ProcGen), mathematical reasoning by language models (GSM8K), combining RL with retrieval (WebGPT) and studying scaling laws of RL and alignment…

Why do tree-based models still outperform deep learning on typical tabular data?

We define a standard set of 45 datasets from varied domains with clear characteristics of tabular data and a benchmarking methodology accounting for both fitting models and finding good hyperparameters. Results show that tree-based models remain state-of-the-art on medium-sized data (∼10K samples) even without accounting for their superior speed. To understand this gap, we conduct an empirical investigation into the differing inductive biases of tree-based models and neural networks….

The charismatic megafauna of climate change map

Stephanie May talked with cartographer Jeffrey Linn about his fascinating series of sea level rise maps and about the concept of “speculative cartography”. Maps that show familiar coastal cities flooded by water are viscerally terrifying and visually compelling, but as cartographers we often struggle with the limitations of maps like these to tell the full picture of the coming impacts of climate change, or to accurately communicate to the public the complexities and uncertainties of when the seas will rise this much and where the impacts will be most felt…

Transformers from Scratch

I procrastinated a deep dive into transformers for a few years. Finally the discomfort of not knowing what makes them tick grew too great for me. Here is that dive….Before we start, just a heads-up. We're going to be talking a lot about matrix multiplications and touching on backpropagation (the algorithm for training the model), but you don't need to know any of it beforehand. We'll add the concepts we need one at a time, with explanation…This isn't a short journey, but I hope you'll be glad you came…
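The centerpiece of the transformer is attention, which turns out to be just a few matrix multiplications and a softmax. The sketch below is the standard scaled dot-product attention, softmax(QKᵀ/√d_k)V, written with plain Python lists so every step is visible. It is our own illustration and is not code from the post. It covers a single head, with no masking and no learned projections.

```python
import math

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Numerically stable softmax: subtract the max before exponentiating."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention: each query takes a weighted average of the value rows."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # query-key similarity for every pair
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# One query that resembles the first key more than the second.
q = [[1.0, 0.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0]]
out, weights = attention(q, k, v)
print(weights, out)  # more weight (and more of the output) comes from the first value row
```

Dividing by √d_k keeps the scores from growing with the vector size, which would otherwise push the softmax toward one-hot weights.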

Observable Plot

The JavaScript library for exploratory data visualization…Create expressive charts with concise code…Observable Plot is a free, open-source, JavaScript library for visualizing tabular data, focused on accelerating exploratory data analysis. It has a concise, memorable, yet expressive interface, featuring scales and layered marks in the grammar of graphics style popularized by Leland Wilkinson and Hadley Wickham and inspired by the earlier ideas of Jacques Bertin. And there are plenty of examples to learn from and copy-paste…

Resources for deepening knowledge of Transformers [Reddit Discussion]

I'm wondering if anyone has a good list of papers and resources that build up on this to improved architectures and/or intuitions as to why they work. Two parallels in CNNs, in each of those directions respectively, would be the ResNet paper building on top of AlexNet/VGG and the paper that examined what convolutional filters learn (edge filters, the hierarchical feature representation etc.). For example, I have a vague idea about variants like GPT, BERT, ViT and about phenomena such as in-context learning. Does anyone have a list for getting up to speed as much as possible, given the rapidly shifting field?…

Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond

This paper presents a comprehensive and practical guide for practitioners and end-users working with Large Language Models (LLMs) in their downstream natural language processing (NLP) tasks. We provide discussions and insights into the usage of LLMs from the perspectives of models, data, and downstream tasks…

We’re looking for engineers with a background in machine learning & artificial intelligence to improve our products and build new capabilities. You'll be driving fundamental and applied research in this area. You’ll be combining industry best practices and a first-principles approach to design and build ML models and infrastructure that will improve Figma’s design and collaboration tool.

What you’ll do at Figma:

Drive fundamental and applied research in ML/AI, with Figma product use cases in mind

Formulate and implement new modeling approaches both to improve the effectiveness of Figma’s current models as well as enable the launch of entirely new AI-powered product features

Work in concert with other ML researchers, as well as product and infrastructure engineers to productionize new models and systems to power features in Figma’s design and collaboration tool

Explore the boundaries of what is possible with the current technology set and experiment with novel ideas.

Apply here

Want to post a job here? Email us for details --> team@datascienceweekly.org

Building reproducible analytical pipelines with R

The aim of this book is to teach you how to use some of the best practices from software engineering and DevOps to make your projects robust, reliable and reproducible. It doesn’t matter if you work alone or in a small or big team. It doesn’t matter if your work gets (peer-)reviewed or audited: the techniques presented in this book will make your projects more reliable and save you a lot of frustration!…

ChatGPT Prompt Engineering for Developers

In ChatGPT Prompt Engineering for Developers, you will learn how to use a large language model (LLM) to quickly build new and powerful applications. Using the OpenAI API, you’ll be able to quickly build capabilities that learn to innovate and create value in ways that were cost-prohibitive, highly technical, or simply impossible before now. This short course taught by Isa Fulford (OpenAI) and Andrew Ng (DeepLearning.AI) will describe how LLMs work, provide best practices for prompt engineering, and show how LLM APIs can be used in applications for a variety of tasks…
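Two widely recommended prompting tactics are fencing the input text off with delimiters and asking for structured output that code can parse. The sketch below shows both without calling any API. The reply strings stand in for whatever a model might return, and the helper names are hypothetical. This is not code from the course.

```python
import json

def build_summary_prompt(text):
    """Wrap the input in delimiters so the model can tell instructions apart from data."""
    return (
        "Summarize the text inside the <text> tags in one sentence.\n"
        'Respond only with JSON of the form {"summary": "..."}.\n'
        f"<text>{text}</text>"
    )

def parse_summary(reply):
    """Parse the model's reply; return None instead of crashing on malformed output."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    summary = data.get("summary")
    return summary if isinstance(summary, str) else None

print(build_summary_prompt("Issue #491 covers LLMs, A/B testing and dataviz."))
print(parse_summary('{"summary": "A roundup of data science links."}'))
print(parse_summary("Sure! Here is your summary: ..."))  # not JSON, so None
```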

Prompt engineering techniques from Microsoft

This guide will walk you through some advanced techniques in prompt design and prompt engineering…The techniques in this guide will teach you strategies for increasing the accuracy and grounding of responses you generate with a Large Language Model (LLM). It is, however, important to remember that even when using prompt engineering effectively you still need to validate the responses the models generate. Just because a carefully crafted prompt worked well for a particular scenario doesn't necessarily mean it will generalize more broadly to certain use cases…
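As an illustration of two points from the guide, the sketch below pairs few-shot prompting with validation of the model's answer. The few-shot part works by showing worked examples before the real query. It uses the role/content chat-message layout common to OpenAI-style chat APIs, but it makes no API call. The sentiment task, labels and examples are hypothetical.

```python
ALLOWED_LABELS = {"positive", "negative", "neutral"}

def few_shot_messages(examples, query):
    """Build a chat-style message list: system instruction, worked examples, then the query."""
    messages = [{"role": "system",
                 "content": "Classify the sentiment of each review as positive, negative, "
                            "or neutral. Answer with the label only."}]
    for review, label in examples:
        messages.append({"role": "user", "content": review})
        messages.append({"role": "assistant", "content": label})
    messages.append({"role": "user", "content": query})
    return messages

def validate_label(reply):
    """Normalize the model's reply and reject anything outside the allowed label set."""
    label = reply.strip().lower().rstrip(".")
    return label if label in ALLOWED_LABELS else None

msgs = few_shot_messages([("Loved it", "positive"), ("Broke in a day", "negative")],
                         "It was fine")
print(len(msgs), msgs[-1])
print(validate_label(" Positive.\n"), validate_label("I think it's positive"))
```

Rejecting off-format replies is a cheap first line of validation. It does not check whether the label is actually correct, which is the guide's broader warning.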


Find last week's issue #490 here.

Thanks for joining us this week :)

All our best,

Hannah & Sebastian

P.S.,

Let’s build great Data/ML products, drive results, and accelerate your career - join the subscriber-only Slack community where we'll tackle learning the latest tools, keeping up with the latest techniques, career entry & growth, and anything else that's stressing you out at the office.

Become a paid subscriber here: https://datascienceweekly.substack.com/subscribe

:)

Copyright © 2013-2023 DataScienceWeekly.org, All rights reserved.