How you want me to cover artificial intelligence

Here’s a second free edition of Platformer this week: a follow-up to last week’s essay on how hard it is to cover AI, featuring your suggestions about how I can do a better job. I’m making every edition free this week because I want to maximize the number of people who engage with issues around AI and journalism. But it’s paid subscribers who make this work possible — and they also get all our scoops first. Ready to contribute something to the community? Subscribe now and we’ll send you the link to join us in our chatty Discord server.

Programming note: Barring major news, Platformer will be off Thursday as I attend an on-site meeting of the New York Times’ audio teams in New York. Hard Fork will publish as usual on Friday.

Last week, I told you all about some of the difficulties I’ve been having in covering this moment in artificial intelligence. Perspectives on the subject vary so widely, even among experts, that I often have trouble trying to ground my understanding of which risks seem worth writing about and which are just hype.

At the same time, I’ve been struck by how many executives and researchers in the space are calling for an industrywide slowdown. The people in this camp deeply believe that the risk of a major societal disruption, or even a deadly outcome, is high enough that the tech industry should essentially drop everything and address it.

There are some hopeful signs that the US government, typically late to any party related to the regulation of the tech industry, will indeed meet the moment. Today OpenAI CEO Sam Altman appeared before Congress for the first time, telling lawmakers that large language models are in urgent need of regulation. Here’s Cecilia Kang in the New York Times:

Altman got a fairly friendly reception from lawmakers, but it’s clear they’ll be giving heightened scrutiny to the industry’s next moves. And as AI becomes topic one at most of the companies we cover here, I’m committed to keeping a close eye on it myself.

One of my favorite parts of writing a newsletter is the way it can serve as a dialogue between writer and readers. Last week, I asked you what you want to see out of AI coverage. And you responded wonderfully, sending dozens of messages to my email inbox, Substack comments, Discord server, Twitter DMs, and replies on Bluesky and Mastodon.

I’ve spent the past few days going through them and organizing them into a set of principles for AI coverage in the future. These are largely not new ideas, and plenty of reporters, researchers and tech workers have been hewing much more closely to them than I have. But I found it useful to have all this in one place, and I hope you do too.

Thanks to everyone who wrote in with ideas, and feel free to keep the conversation going in your messaging app of choice. And so, without further ado: here’s how you told me to cover AI.

Be rigorous with your definitions. There’s an old joke that says “AI” is what we call anything a computer can’t do yet. (Or perhaps has only just started doing.) But the tendency to collapse large language models, text-to-image generators, autonomous vehicles and other tools into “AI” can lead to muddy, confusing discussions.

“Each one of those has different properties, risks and benefits associated with them,” commenter Matthew Varley wrote. “And even then, treating classes of AI & [machine learning] tech super broadly hurts the conversation around specific usage. The tech that does things like background noise suppression on a video call is the same exact tech that enables deepfake voices. One is so benign as to be almost invisible (it has been present in calling applications for years!), the other is a major societal issue.”

The lesson is to be specific when discussing different technologies. If I’m writing about a large language model, I’ll make sure I say that — and will try not to conflate it with other forms of machine learning.

Predict less, explain more. One of my chief anxieties in covering AI is that I will wind up getting the actual risks posed totally wrong: that I’ll either way overhype what turns out to be a fairly manageable set of policy challenges, or that I’ll significantly underrate the possibility of huge social change.

Anyway, I am grateful to those of you who told me to knock it off and just tell you what’s happening.

“I don’t really get your intense concern with ‘getting it right’ (retrospectively),” a reader who asked to stay anonymous wrote. “That’s not what I look to journalism for. … If you surface interesting people/facts/stories/angles and seed the conversation, great. Put another way, if the experts in the field disagree, why would I expect a tech journalist to adjudicate? It’s just not where you add value.”

Similarly, reader Sahil Shah told me to focus on actual daily developments in the field over speculation about the future.

“I strongly feel that the people who focus on immediate applications of a technology will have greater influence compared to those who focus on existential threats or ways to wield the fear or positivity of the technology to achieve their personal, professional and political aims,” he said.

Point taken. Look for fewer anxious predictions in this space, and more writing about what’s actually happening today.

Don’t hype things up. If there is consensus among readers on any subject, it’s on this one. You really don’t want wide-eyed speculation about what the world might look like years in the future — particularly not if it obscures harms that are happening today around bias, misinformation, and job losses due to automation.

“Consider the way some people bloviate about the hypothetical moral harms of colonizing distant galaxies, when we know for a fact that under current physical knowledge it is not something that can happen for millennia in real time,” commenter Heather wrote. “There is certainly marginal benefit in considering such far future actions, but it’s irrelevant to daily life.”

Reader James Lanyon put it another way.

“Just because the various fathers and godfathers of AI are talking about the implications of artificial super intelligence doesn’t mean we’ve gotten close to solving for the fact that machine vision applications can be confounded by adversarial attacks a 5-year-old could think up,” he wrote.

Focus on the people building AI systems — and the people affected by their release. AI is still (mostly) not developing itself — people are. You encouraged me to spend time telling those stories, which should help us understand what values these systems are being infused with, and what impact they’re having in the real world.

“If there is one thing I would like to see, it would be you dedicating some articles interviewing different people that wouldn’t normally be interviewed regarding the impact AI is having on them, or what they are doing about AI, and promoting individuals who have thought more on certain topics,” one reader wrote. “I think focusing on unique cases would have a profound impact that covering the latest news [or offering] takes cannot achieve.”

Similarly, you want me to pay close attention to the companies doing the building.

“If the same 4 or 5 companies hold the future of AI in their hands, especially the companies that authored the era of surveillance capitalism (especially Google), that bit needs to be discussed thoroughly and without mercy,” commenter Rachel Parker wrote.

Offer strategic takes on products. You told me that you’re not only interested in risk. You want to know who’s likely to win and lose in this world as well, and how various products could affect the landscape.

“I think there is an intuition you have towards tech product strategy, and I would love to hear more of that as well with AI products,” reader Shyam Sandilya wrote.

I really like this idea. One reason it resonates with me: I think critiquing individual products can often generate more insight than high-level news stories or analysis.

Emphasize the tradeoffs involved. A focus on the compromises that platforms have to make in developing policies has, I think, been a hallmark of this newsletter. You encouraged me to bring a similar focus to policy related to AI.

“I think it’s vital with AI to explore and be excited by the potential, and still talk about the potential risks,” one reader wrote. “Be balanced and nuanced about the tradeoffs, and which tradeoffs make sense and which don’t.”

And finally:

Remember that nothing is inevitable. There’s a tendency among AI executives to discuss superhuman AI as if its arrival is guaranteed. In fact, we still have time to build and shape the next generation of tech and tech policy to our liking. You told me to resist narratives that AI can’t be meaningfully regulated, or any variation on the idea that the horses are already out of the barn.

To the extent that we build the AI that boosters are talking about, all of it will be built by individual people and companies making decisions. You told me to keep that as my north star.

I’ll try. Thanks so much for helping me build this roadmap — and if you have any amendments, please let me know.
On the podcast this week: Kevin and I talk about OpenAI’s first big appearance in Congress. Plus: a new chance to hear my recent story about Yoel Roth and Twitter from This American Life, and … perhaps a game of this?

Apple | Spotify | Stitcher | Amazon | Google

Governing

Industry

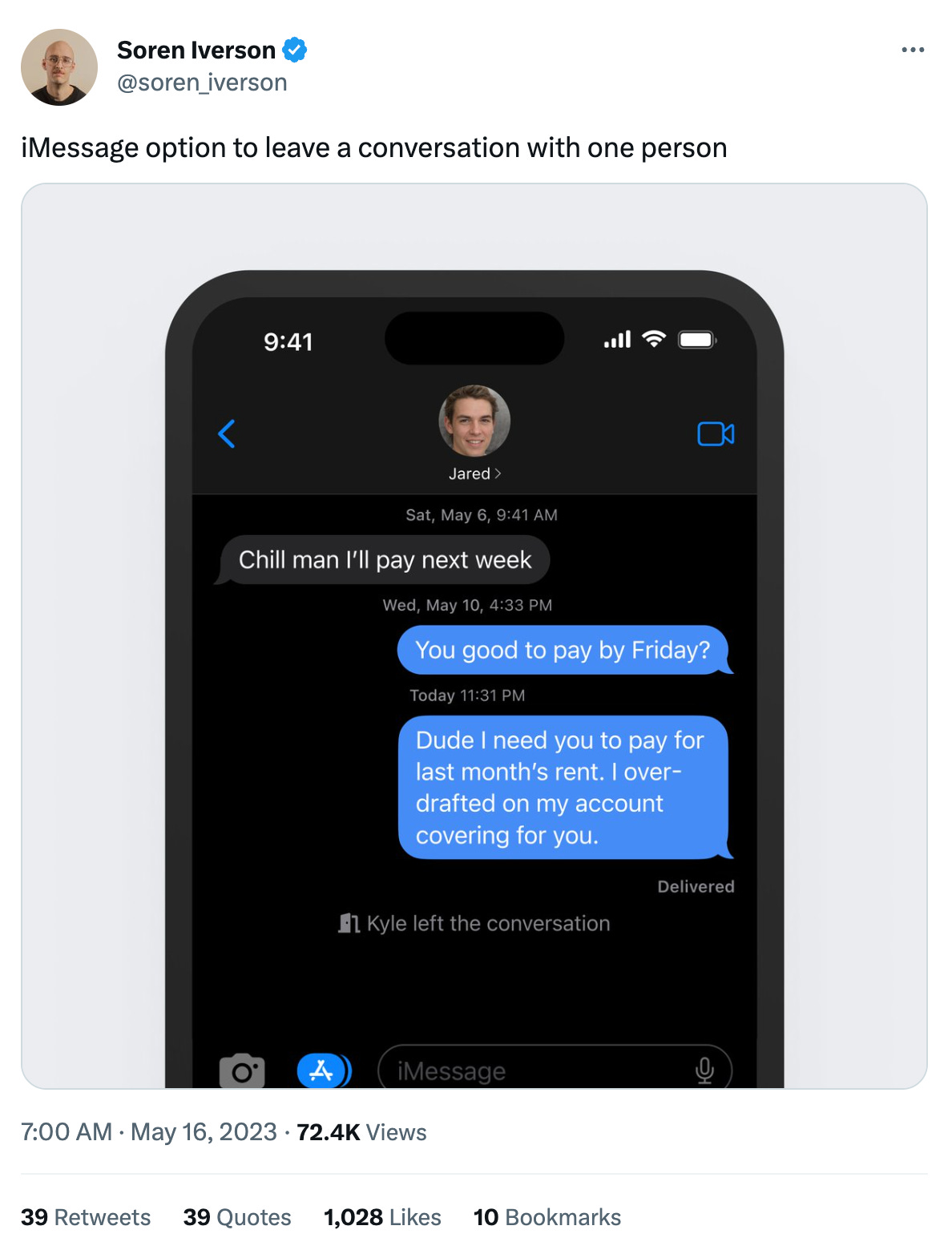

Those good tweets

For more good tweets every day, follow Casey’s Instagram stories.

Talk to us

Send us tips, comments, questions, and examples of good AI coverage: casey@platformer.news and zoe@platformer.news.

By design, the vast majority of Platformer readers never pay anything for the journalism it provides. But you made it all the way to the end of this week’s edition — maybe not for the first time. Want to support more journalism like what you read today?
