Ten strategies for replacing Twitter from people who used to work there

Here’s your second free column of the week, a wide-ranging set of interviews with former Twitter employees a year after the acquisition, all designed to answer one question: how would you build its replacement? We think the answers will surprise you. Sometimes you ask us how you can support Platformer without buying a subscription. Today we have an amazing answer for you: you can pre-order Zoë’s rollicking new book about Twitter! We think you’re going to love it. Just click here. Of course, your paid support of this newsletter helps, too. Upgrade your subscription today and we’ll email you first with all our scoops, like our recent interview with an AI executive who quit his job to support creators’ rights.

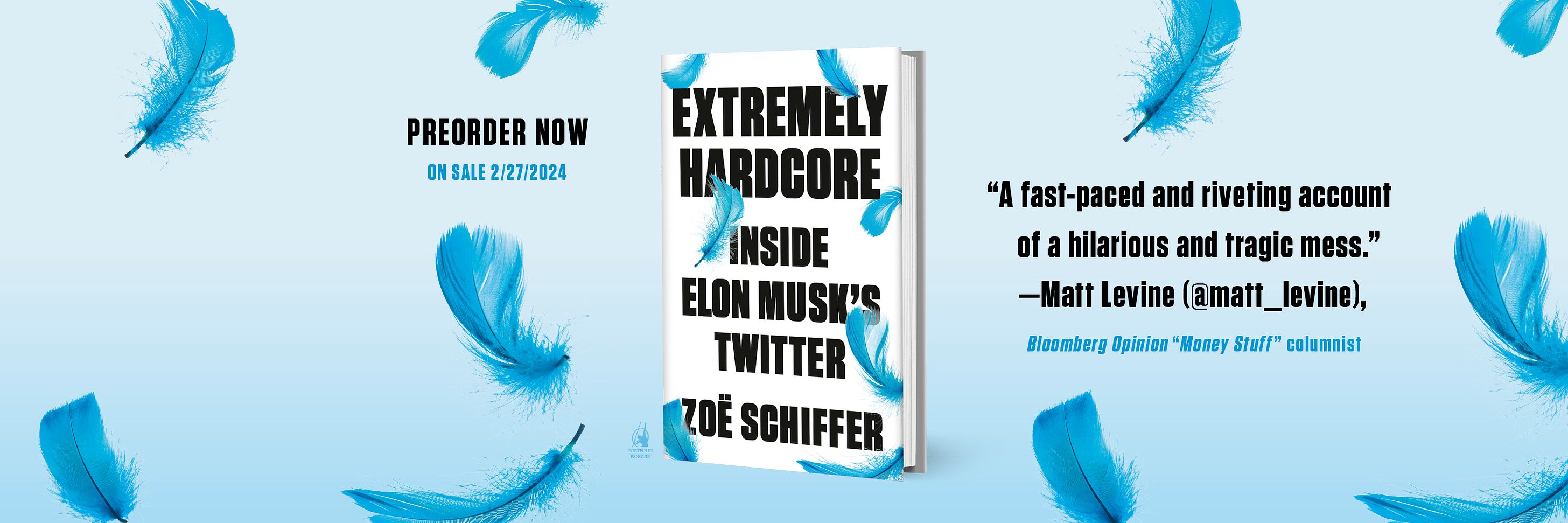

Announcing 'Extremely Hardcore,' Zoë Schiffer’s book on Musk’s Twitter takeover

Today, we’re thrilled to share an announcement that has been in the works all year. Zoë’s book about Elon Musk’s takeover of Twitter, Extremely Hardcore, comes out February 27, 2024, and you can pre-order it today.

Extremely Hardcore is the culmination of more than a year of reporting from Platformer on what was perhaps the wildest corporate takeover in the history of Silicon Valley. We think you’ll really like it, but don’t take it from us. Legendary Bloomberg columnist Matt Levine called it “the definitive book on perhaps the weirdest business story of our time. A fast-paced and riveting account of a hilarious and tragic mess.” And Vanity Fair’s Nick Bilton said, “Zoë Schiffer’s incredibly written and astonishingly reported book about the Musk era of the world’s craziest company tells the story of a man who took the clown car, strapped a rocket to the back of it, and then slammed it into a wall at 100,000 miles an hour. You simply won’t be able to put this book down.”

Extremely Hardcore is scoopy, juicy, and full of fresh details from the workers left to pick up the pieces after Musk let that sink in. If you’re so inclined, we invite you to snag a hardback copy, which we’re told is helpful in claiming a place on the bestseller lists. We’ll have more to say about the book in the weeks to come, but in the meantime, that pre-order link can be found right here. If you want a signed copy (an exclusive for Platformer subscribers), fill out this form once you’ve pre-ordered.

To celebrate the book’s announcement, today we reached out to a wide range of people who used to work at (and, in one case, study) Twitter for their thoughts on how to build its replacement. Threads, Mastodon, Bluesky and others have all gained momentum to varying degrees, but all have a long way to go to reach their full potential.
Where to go from here? Here’s what they told us, lightly edited for clarity and length.

You have to constantly innovate and experiment. Speed of innovation is critical in the early stages. In the early years of Twitter, one of the things I loved seeing was new features being rolled out every day. It was fast. So talk to your engineers and ask whether they have the tools and space to experiment and innovate. You need to establish a culture where good ideas can come from everywhere: from any engineer, any employee, in any line of business. Ideas get filtered out and lost if they pass through a rigid hierarchy. What I loved about Twitter was seeing a junior engineer or intern go up at Tea Time (Twitter’s old weekly all-hands meeting) and demonstrate a feature they’d developed. Another source of innovation and ideas was Hack Week projects. Do not under-invest in this area. Countless features that Twitter implemented over the years originated as Hack Week projects. The corporate development team used to run a “TweetTank” competition in which Tweeps (Twitter employees) pitched, “Shark Tank” style, a partnership or acquisition that Twitter should pursue. One of the winners of that competition wound up being one of Twitter’s most successful acquisitions, Gnip.

History has shown that a well-supported API provides a lot of opportunity and value for content creators, developers, and the platform itself. It's critical at any scale, and it helps new use cases emerge from experimentation. It made customer service possible. And it allowed for all sorts of wacky automated accounts that gave Twitter a lot of flavor. I worked on Twitter’s API for two years, specifically on security and privacy features. Once you open up the API there’s the potential for abuse, but it’s not all or nothing. From the beginning, try to establish a system based on developer reputation. That way, developers can earn their way to higher limits or greater capabilities. This means disallowing most things by default, and then gradually granting functionality to developers who prove they’re good players, rather than opening it up to everyone and trying to knock down those who display bad behavior. Also, the more closely your API mirrors the application’s features, the better. Twitter played catch-up, launching a feature in the mobile app and then having developers ask, “When is this coming to the API?” When polls came out, for example, it took forever to get them into the API. Every time you take a feature to general availability, you also need to have it available in the API. Conceptually it’s like, “yeah, of course,” but it’s not an easy thing to do.
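To make the default-deny, earn-your-way-up model concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how Twitter's API actually worked: the tier thresholds, capability names, per-window rate limits, and point values for good behavior and violations are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical tiers, most trusted first: (minimum reputation, granted capabilities,
# requests allowed per rate-limit window). All values are illustrative assumptions.
TIERS = [
    (80, frozenset({"read", "post", "dm_read", "dm_write"}), 3000),
    (50, frozenset({"read", "post"}), 900),
    (0, frozenset({"read"}), 100),
]


@dataclass
class Developer:
    name: str
    reputation: int = 0  # new developers start at the bottom tier
    violations: list[str] = field(default_factory=list)


def tier_for(dev: Developer) -> tuple[frozenset, int]:
    """Return (capabilities, rate_limit) for the highest tier the developer has earned."""
    for min_score, caps, limit in TIERS:
        if dev.reputation >= min_score:
            return caps, limit
    return frozenset(), 0  # reputation below zero: nothing is granted


def is_allowed(dev: Developer, capability: str) -> bool:
    """Default-deny: allowed only if the developer's current tier explicitly grants it."""
    caps, _ = tier_for(dev)
    return capability in caps


def record_good_behavior(dev: Developer, points: int = 10) -> None:
    """Reward a clean period of API use; reputation is capped at 100."""
    dev.reputation = min(100, dev.reputation + points)


def record_violation(dev: Developer, reason: str, penalty: int = 30) -> None:
    """Log abuse and drop the developer's reputation, possibly into a lower tier."""
    dev.violations.append(reason)
    dev.reputation -= penalty


if __name__ == "__main__":
    app = Developer("new_app")
    print(is_allowed(app, "read"), is_allowed(app, "post"))  # True False
    for _ in range(5):
        record_good_behavior(app)  # reputation climbs to 50
    print(is_allowed(app, "post"))  # True
    record_violation(app, "spam")  # reputation falls to 20
    print(is_allowed(app, "post"), tier_for(app)[1])  # False 100
```

Because the check is an explicit allow-list lookup, any capability that no tier names is refused, and a developer who misbehaves simply falls back to a lower tier rather than being banned outright.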

I think creators of every size have realized just how much value they’re bringing to these platforms, how much their content drives engagement and profit, and how little they’re getting in return. To keep creators, platforms need to build with this in mind and think of long-term ways for creators to monetize (i.e., not just launching a splashy creator fund for the headlines and then letting the money run out). Create features that allow creators to own the audience they’ve built there. And build safety features, because often the folks driving the most engagement are also subject to the most harassment.

You can draw a line that prioritizes safety over speech. Or you can draw a line the way Twitter historically drew it and say, “look, we’re going to prioritize having context, and we’ll have reports, and if you see something, say something, and sorry, tough luck.” You can draw the line anywhere you want! But if you look at expectations of social platforms from 2018 onward, it’s clear that most people believe platforms should moderate proactively, not just reactively. A key part of being a public, real-time speech platform is that, for the most part, you’re not moderating one-to-one interactions. Nor are you even moderating interactions within small, closed groups of folks who’ve chosen to interact with each other. It's a free-for-all. You have to recognize that you lack context on these interactions, and that different people can have different expectations of the same conversation. One person could interpret an interaction as horrible and offensive. They might be right, or they might be wrong. And you as the platform have only a tiny slice of visibility. Every platform to date has gotten completely stuck on this. At Twitter, for many years, the operating mindset was “we as a company lack context on these interactions, and consequently need to be pretty hands off.” The result was a reporting practice that required a first-person report for an abusive post, which was slanted toward getting the company more context. Like, if the person who was the topic of the post says it was abusive, it’s not just friends fucking around. That’s really hard to do because it puts a lot of burden on the victim of abuse, and it’s out of touch with expectations of social platforms now.

Social-impact programs bring people together and reduce polarization. Twitter used to invest heavily in this area, bringing company and community together in the spirit of healthy conversation. It paid off in so many ways: building climate emergency response tools, finding missing kids, preserving aboriginal languages, and teaching internet safety and media literacy. When you lead with positivity and goodwill, the world opens up in beautiful and unexpected ways.

In August, OpenAI published a blog post about using GPT-4 for content moderation. It’s well suited to this sort of work. Policies change frequently, and it can take time for human moderators to learn, understand, and fully implement those changes. Large language models can read the policy and adapt more quickly. What we’re seeing with AI is that it scales, and humans don’t. And I’m so saddened to say this, but the scale of hatred in this world is unimaginable, and it has to be met with tools that can match the scale of hate. Since the war in Israel started, I have seen things that I cannot unsee. I, like every other Israeli and Palestinian, am going to need deep therapy to get over this. It’s traumatizing and horrific. As a parent of three kids, I can’t even speak about the things I have seen. So I'm afraid of what we are doing to our human moderators. We are exposing people to things that no one should ever be exposed to. Can we, from an ethical standpoint, afford to put people through this trauma in order to create a healthier discourse? I feel very torn, but I don’t think so.

Before the 2020 election, our labels on COVID misinformation read “Get the facts from health officials about the science behind COVID-19 vaccines.” The language wasn’t structured, it was hard to localize, and, to conservative users, it reinforced the perception that Twitter was biased. We also tested the word “disputed,” and everyone responded negatively. Finally we landed on the label “misleading” with a bold caution icon, followed by: “Learn why health officials say vaccines are safe for most people.” These labels were easier for our teams to implement and showed a 17% increase in click-through rate, meaning that millions and millions more people were trusting us to give them more context on potentially misleading tweets. This project reinforced how important it is to test language, and showed that structured content is essential for creating a scalable, quick-response product.

When you remove a post outright, you make it easier for the person who posted it to play the victim. Community Notes, user-written clarifications that aim to add neutral, nonpartisan, fact-based context to popular posts, offer a powerful alternative to that approach. Elon Musk’s own tweets are regularly flagged by the community. It’s self-regulating in a way. That said, I don’t know that community moderation is enough. As much as my engineer’s mind wishes most problems could be solved with technology, I’m not naive enough to think algorithms can do 100 percent of this job in a social network. The community can help debunk fake stories, but many other content moderation challenges need humans, at least for now.

I want to be able to track NBA reporters, political reporters, and cultivate my specific interests like I did on Twitter, without having to follow those accounts and always see them in my main timeline. Like, I’m a Warriors fan, and when there’s a game on I want to see what people are talking about. But I don’t want all those tweets in my timeline all the time.

Monetizing social networks is a challenge. From a revenue-product perspective, find something that feels as organic to your platform as possible. Your ad products will need to drive results. Advertisers need to see real performance from their campaigns; no one owes you their spend. You will have to work hard for each sale, because you are up against companies whose products perform exceptionally well. A focus on brand safety is key, since an advertiser's reputation is everything. Keep that in the back of your mind as you design advertiser tools and safety measures.

And here’s a bonus tip that every would-be Twitter replacement should keep in mind, from one of our favorite thinkers in the space, who didn’t work at Twitter.

For a long time these platforms were unregulated. But in the last couple of years we’ve seen a raft of new regulatory requirements arrive, like the Digital Services Act in the EU and the Online Safety Act in the UK. A bunch of US states are passing their own bills, and some of those laws are now before the Supreme Court. The writing on the wall is clear: governments everywhere are getting far more active in regulating these technologies, and new platforms are going to have to deal with that much more than they did in the past.

On the podcast this week: Kevin and I sort through a week’s worth of news about OpenAI. Then, the Times’ David Yaffe-Bellany joins to remind us why CZ is going to prison. And finally, the latest news about how AI sludge is taking over web search.


Talk to us

Send us tips, comments, questions, and posts: casey@platformer.news and zoe@platformer.news.

By design, the vast majority of Platformer readers never pay anything for the journalism it provides. But you made it all the way to the end of this week’s edition, maybe not for the first time. Want to support more journalism like what you read today? If so, consider upgrading to a paid subscription.
