Here's this week's free edition of Platformer: another scoop about Meta's hard-right turn in the United States. This time around, Meta is killing off its sophisticated program that automatically detected misinformation and prevented it from spreading. Do you value independent reporting on Meta in the Trump era? If so, consider upgrading your subscription today. We'll email you all our scoops first, like our recent one about Meta's dehumanizing new speech guidelines for trans people. Plus you'll be able to discuss each day's edition with us in our chatty Discord server, and we’ll send you a link to read subscriber-only columns in the RSS reader of your choice.

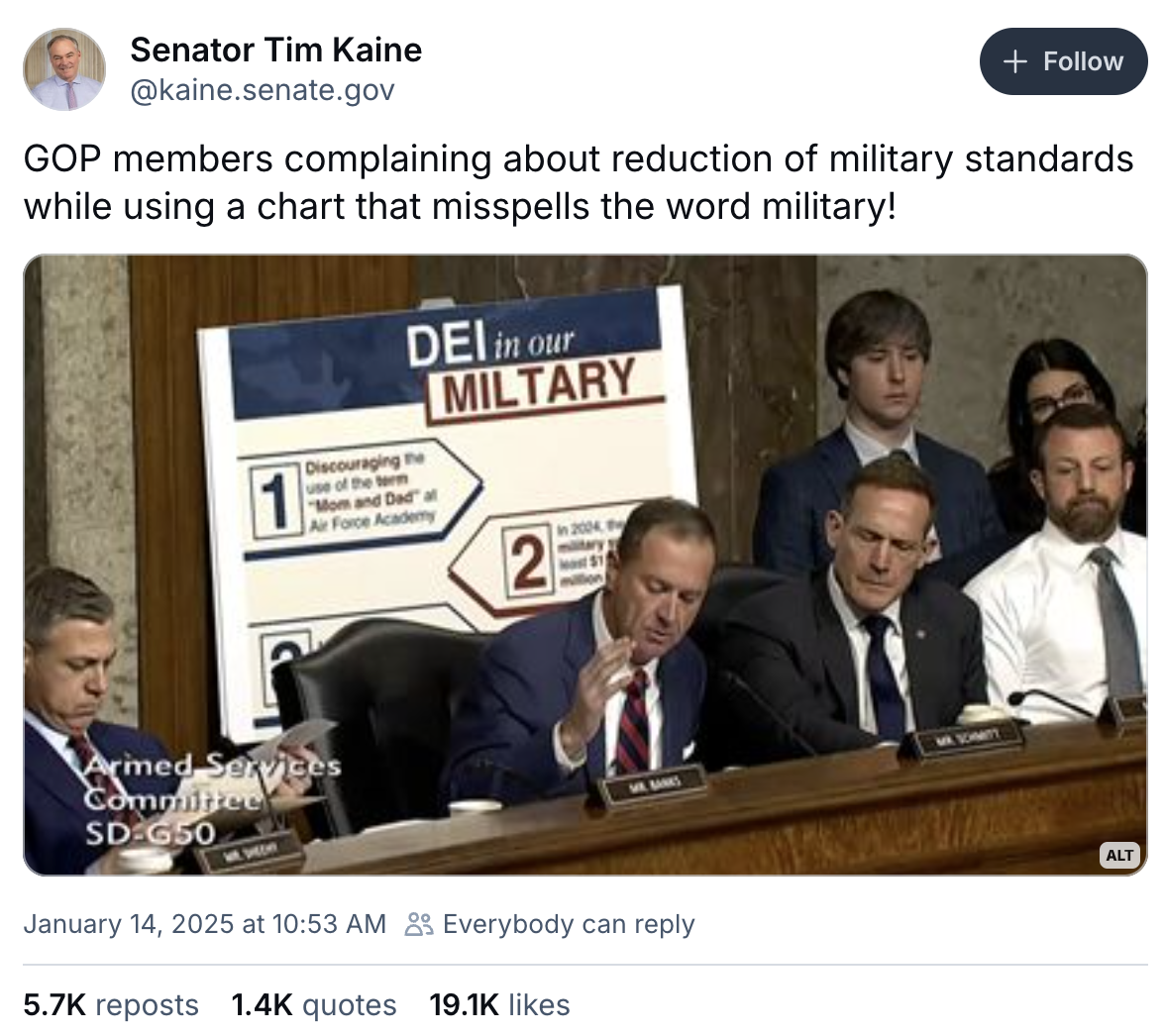

I.

Last week, Meta announced a series of changes to its content moderation policies and enforcement strategies designed to curry favor with the incoming Trump administration. The company ended its fact-checking program in the United States, stopped scanning new posts for most policy violations, and created carve-outs in its community standards to allow dehumanizing speech about transgender people and immigrants. The company also killed its diversity, equity and inclusion program.

Behind the scenes, the company was also quietly dismantling a system to prevent the spread of misinformation. When Meta announced on Jan. 7 that it would end its fact-checking partnerships, it also instructed teams responsible for ranking content in its apps to stop penalizing misinformation, according to sources and an internal document obtained by Platformer.

The result is that the sort of viral hoaxes that ran roughshod over the platform during the 2016 US presidential election (“Pope Francis endorses Trump,” Pizzagate, and all the rest) are now just as eligible for free amplification on Facebook, Instagram, and Threads as true stories.

In 2016, of course, Meta hadn’t yet invested huge sums in machine-learning classifiers that can spot when a piece of viral content is likely a hoax. But nine years later, after the company’s own analyses found that these classifiers could reduce the reach of these hoaxes by more than 90 percent, Meta is shutting them off.

Meta declined to comment on the changes. Instead, it pointed me to a letter and a blog post in which it had hinted that this change was coming.

The letter was sent in August by Zuckerberg to Rep. Jim Jordan, the chairman of the House Judiciary Committee. In it, Zuckerberg expressed his discomfort with the Biden Administration’s efforts to pressure the company to remove certain posts about COVID-19.
Zuckerberg also expressed regret that the company had temporarily reduced the distribution of stories about Hunter Biden’s laptop, which Meta and Twitter had both done out of fear that the stories had resulted from a Russian hack-and-leak operation. The few hours that the story’s distribution was limited would go on to become a Republican cause célèbre.

As a kind of retroactive apology for bowing to censorship requests in the past, and for the company’s own actions in the Hunter Biden case, Zuckerberg said that going forward, the company would no longer reduce the reach of posts that had been sent to fact checkers but not yet evaluated. Once they had been evaluated, Meta would continue to reduce the reach of posts that had been designated as false.

In hindsight, this turned out to be the first step toward killing off Meta’s misinformation efforts: granting hoaxes a temporary window for expanded reach while they awaited fact checking.

That brings us to the blog post: Joel Kaplan’s “More speech, fewer mistakes,” which was published last Tuesday and among other things announced the end of the company’s US fact-checking partnerships. Buried toward the bottom were these two sentences:

> We also demote too much content that our systems predict might violate our standards. We are in the process of getting rid of most of these demotions and requiring greater confidence that the content violates for the rest.

At the time, Kaplan did not elaborate on which of these demotions the company planned to get rid of. Platformer can now confirm that misinformation-related demotions have been eliminated at the company.

The company plans to replace its professional fact-checking program with volunteers from the user base appending information to posts in a system modeled after X’s community notes. But Meta has offered few details on how the program will work, and has not said when it will become available.

As a result, Meta has chosen to open a window for viral hoaxes to spread unchecked on its properties while it works to build a new system to mitigate their impact.

II.

Meta’s efforts to detect misinformation had their roots in the aftermath of the 2016 US presidential election. In response to outrage over the spread of “Pope endorses Trump” and other hoaxes in the run-up to Trump’s election, Meta promised lawmakers and regulators around the world that it would take steps to improve the quality of information on its platforms.

From the start of that initiative, Meta’s integrity teams worked hand-in-hand with the third-party fact checkers it hired after the election. In a 2018 blog post, the company explained the variety of signals it used to determine whether its ranking algorithms might be showing you something false:

> Today, in the US, the signals for false news include things like whether the post is being shared by a Page that’s spread a lot of false news before, whether the comments on the post include phrases that indicate readers don’t believe the content is true, or whether someone in the community has marked the post as false news.

> Facebook uses a machine learning classifier to compile all of those misinformation signals and, by comparing a given post to past examples of false news, make a prediction: “How likely is it that a third-party fact-checker would say this post is false?” (Facebook uses classifiers for a lot of different things, like predicting whether a post is clickbait or contains nudity; you can read more in this roundtable interview from Wired). The classifier’s predictions are then used to determine whether a given piece of content should be sent to third-party fact-checkers. If a fact-checker rates the content as false, it will get shown lower in people’s News Feeds and additional reporting from fact-checkers will be provided.
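For readers who want to see the shape of that pipeline, here is a rough sketch in Python. It is a toy illustration under loud assumptions, not Meta's code: the field names, weights, threshold, and demotion factor are all invented for this example, and a real classifier would learn its parameters from past fact-checker ratings rather than use hand-picked numbers.

```python
import math
from dataclasses import dataclass


@dataclass
class PostSignals:
    # The three signals named in the 2018 post; the field names are hypothetical.
    page_prior_false_rate: float   # share of the Page's past posts rated false (0..1)
    disbelief_comment_rate: float  # share of comments expressing disbelief (0..1)
    user_false_reports: int        # number of "false news" reports from users


# Illustrative values only (assumptions); nothing here comes from Meta.
WEIGHTS = {"page": 3.0, "comments": 2.5, "reports": 0.4}
BIAS = -4.0
REVIEW_THRESHOLD = 0.5   # assumed cutoff for sending a post to fact-checkers
DEMOTION_FACTOR = 0.1    # assumed ranking multiplier for posts rated false


def predicted_false_probability(s: PostSignals) -> float:
    """Combine the signals with a logistic function into a score in (0, 1)."""
    z = (BIAS
         + WEIGHTS["page"] * s.page_prior_false_rate
         + WEIGHTS["comments"] * s.disbelief_comment_rate
         + WEIGHTS["reports"] * min(s.user_false_reports, 10))  # cap report count
    return 1.0 / (1.0 + math.exp(-z))


def should_send_to_fact_checkers(s: PostSignals) -> bool:
    """Route a post to human fact-checkers when the predicted score is high."""
    return predicted_false_probability(s) >= REVIEW_THRESHOLD


def ranked_score(base_score: float, rated_false: bool) -> float:
    """Demote a post only after a fact-checker has rated it false."""
    return base_score * DEMOTION_FACTOR if rated_false else base_score
```

In this sketch the classifier only decides what gets reviewed; the demotion itself is tied to the human rating, which matches the order of steps in the quoted post.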

Notably, none of these changes actually eliminated anyone’s freedom of expression. Instead, they restricted what the technologist Aza Raskin has termed “freedom of reach.” Fact-checking and down-ranking obviously false stories allowed liars and hoaxsters to express themselves but sought to limit the negative effects of those lies on Meta’s customers.

In May 2020, at the height of the pandemic, Meta boasted about how well this effort was working. In a blog post, the company said that 95 percent of people who saw labels warning that a post contained misinformation declined to view it. The company said that in March 2020 alone, it displayed warnings related to COVID-19 on 40 million posts.

Going forward, Meta will instead ask users to add their own annotations to these and other posts.

Academics, journalists, lawmakers, and other interested parties will undoubtedly still conduct their own reviews of viral posts on Meta platforms in the coming months to assess the misinformation landscape. But they’ll be doing it without a critical tool they used in the past: CrowdTangle, a free service that exposed what posts on Facebook and Instagram were most popular via searchable real-time dashboards. Meta killed off CrowdTangle in August, directing users instead to an alternative that lacks many of CrowdTangle’s core features.

In publishing this story, I realize that I may be giving ammo to those who believe that misinformation is a root cause of our polarization in the United States. As Joe Bernstein wrote in Harper’s in 2021, discussions of misinformation sometimes lend too much credence to the idea that algorithms are all-powerful shapers of reality, and fail to reckon with the fact that people will always seek out information that aligns with their worldview. Their demand for that information, whether it is true or false, may be the most important factor leading to its creation and its spread.
But it’s possible to believe that a total fixation on misinformation is counterproductive and also believe in the idea of harm reduction: that sophisticated systems that accurately identify hoaxes and then show them to fewer people ought to be left in place, at least until their replacement has been rolled out and evaluated for its effectiveness.

For now, these changes only apply to the United States, though you get the sense that Zuckerberg would happily roll them out anywhere else he’s allowed to.

The question now is what other “demotions” Meta is in the process of killing off. A company spokesman wouldn’t tell me. If you know, Signal me at caseynewton.01.

Stray thoughts

- At the time of Zuckerberg’s letter to Jordan, I thought it might represent a canny bid on Zuckerberg’s part to remain in the political center by tossing some red meat to Republicans. After the events of the past week, though, it looks much more like an early sign of the CEO’s authentic lurch to the right.

- Yesterday I linked to Max Read’s take on the labor angle in Zuckerberg’s recent changes. In part, Read wrote, killing off DEI efforts and related initiatives should be viewed as an effort to purge the company of vocal internal critics and attract an audience of more pliant worker-drones. Like clockwork, Zuckerberg announced today that he would cut another 5 percent of his workforce, or around 3,600 people, targeting “low performers.” Most layoffs inspire a significant number of demoralized employees to quit; this move should be taken as another push toward the door for employees who believe in newly taboo ideas such as that Meta should employ men and women in equal numbers.

- Whenever anyone brings up Meta, Twitter, and the Hunter Biden laptop, it’s important to remind people that Elon Musk similarly blocked links to a dossier of information about Vice President-elect JD Vance in September. Unlike Meta or Twitter in the Biden case, Musk also banned a journalist from X for posting it, and called it “one of the most egregious, evil doxing actions we’ve ever seen.” No Republican member of Congress ever said one word about the hypocrisy.

- Always read Daphne Keller on everything, but especially read her on Meta's weakness in standing up to government pressure relative to the average newsroom.

- Seriously: what other demotions is Meta now killing off?

Governing

- A bill has been introduced that could extend TikTok’s deadline to divest by 270 days, if approved by Congress. (Lauren Feiner / The Verge)

- While US fact-checkers say they were blindsided by Meta’s policy changes, global fact-checking organizations say they saw it coming. (Ananya Bhattacharya / Rest of World)

- New documents in a copyright lawsuit reveal Meta’s plans to train Llama on pirated material, including content from book piracy site LibGen. It's giving masculine energy. (Emma Roth and Kylie Robison / The Verge)

- The SEC sued Elon Musk for allegedly failing to disclose a growing stake in Twitter and ignoring a disclosure deadline in 2022. This is an open-and-shut case, as Bloomberg's Matt Levine has often noted, that could have been filed at almost any point in the past three years. (Megan Howard / Bloomberg)

- Microsoft and OpenAI asked a federal judge to dismiss the various copyright lawsuits by the New York Times, the New York Daily News, and others. They claimed the statute of limitations has expired. (Josh Russell / Courthouse News Service)

- The Open Network, a blockchain with ties to Telegram, announced plans to expand in the US as the regulatory landscape is poised to change under Donald Trump. (Ryan Weeks / Bloomberg)

- The Justice Department and FBI said they deleted the data-stealing malware planted by a China-linked hacking group, which was in millions of computers, as part of a multi-year campaign. (Carly Page / TechCrunch)

- The FTC and Justice Department wrote that Reid Hoffman’s simultaneous participation on the OpenAI and Microsoft boards could violate antitrust law. (Lauren Feiner / The Verge)

- The Biden administration finalized a rule that would effectively ban new, personal smart cars from China and Russia, and may expand the restriction to commercial vehicles. (Josh Wingrove and MacKenzie Hawkins / Bloomberg)

- The UK’s Competition and Markets Authority is probing Google to assess whether it has “strategic market status” under the new DMCC law, which will allow the government to impose rules to prevent anticompetitive behavior. (Ryan Browne / CNBC)

- The EU Commission is reportedly reconsidering its investigations into tech companies, including Apple, Meta and Google amid calls for Donald Trump to intervene. (Javier Espinoza and Henry Foy / Financial Times)

- An investigation into how Barcelona became a hub for spyware startups like Palm Beach Networks and Paradigm Shift, raising concerns about surveillance. (Lorenzo Franceschi-Bicchierai / TechCrunch)

Industry

Those good posts

For more good posts every day, follow Casey’s Instagram stories.

Talk to us

Send us tips, comments, questions, and Facebook's current integrity ranking config: casey@platformer.news. Read our ethics policy here.
