Platformer - Meta seeks to hide harms from teens


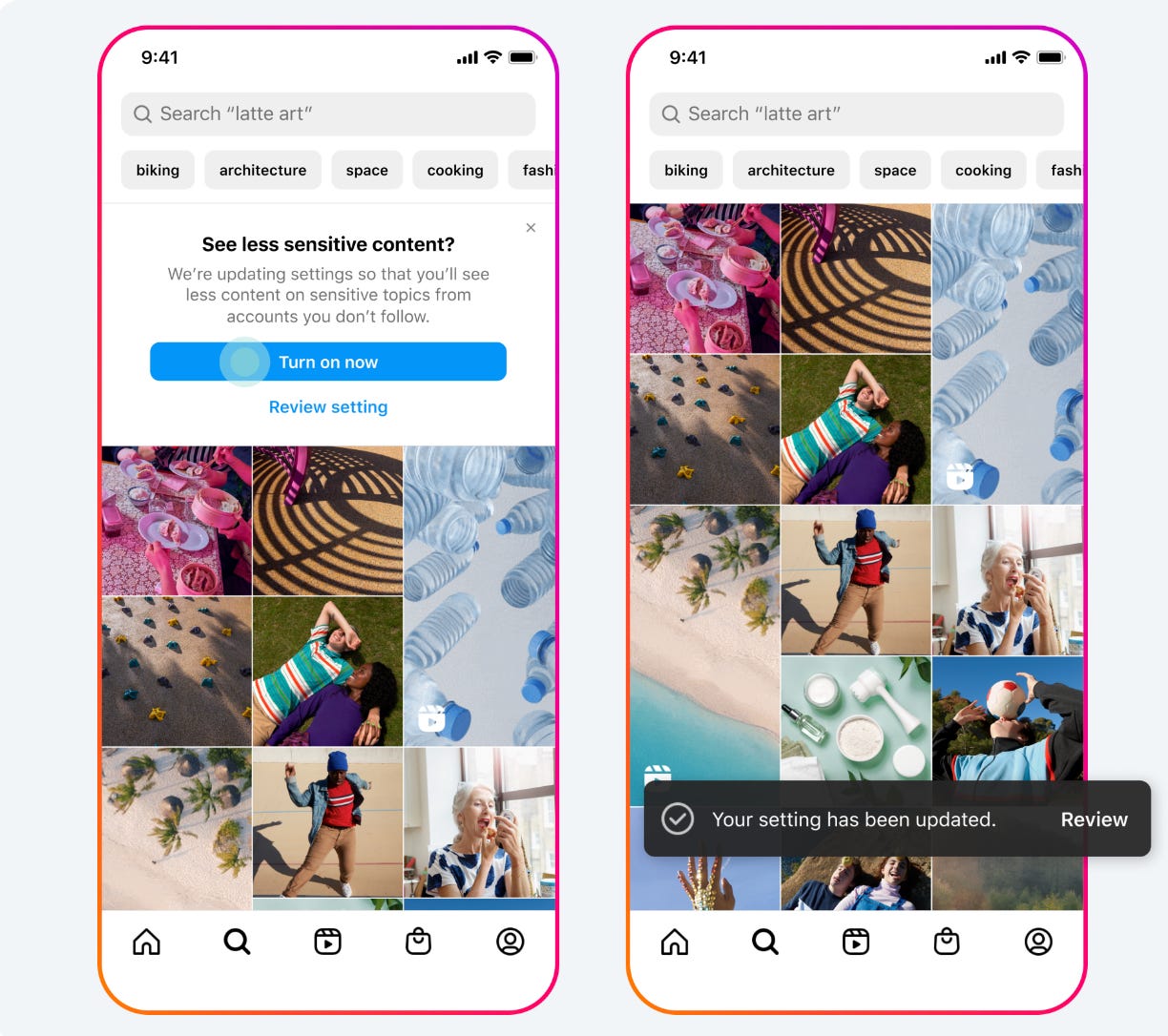

Meta seeks to hide harms from teens

But to change the conversation, the company will have to do more than tweak its settings

Today, for a change of pace, let’s talk about something other than the fate of Substack and all those who dwell upon it. Instead, let’s look at the mounting pressure on social networks to make their apps safer for young people, and the degree to which both platforms and regulators continue to talk past one another.

Let’s start with the news. Today, Meta said it would take additional steps to prevent users under the age of 18 from seeing content involving self-harm, graphic violence and eating disorders, among other harms. Here’s Julie Jargon at the Wall Street Journal:

The changes announced today fall broadly into a category of platform tweaks that could be labeled “They weren’t doing that already?” But the fact that Meta made these changes, which the Journal calls “the biggest change the tech giant has made to ensure younger users have a more age-appropriate experience on its social-media sites,” underscores the degree to which child safety has become the most important dimension along which social networks are being judged in 2024.

How did that become the case? The most consequential shift in attempts to regulate social media over the past year has been in the theory of harm. In the period after the 2016 US presidential election, regulators focused heavily on issues related to speech. Democrats focused on the way platforms can amplify lawful but harmful material, including hate speech and misinformation. Republicans took the opposite position, protesting against platforms’ right to moderate content and calling for an end to account removals and other restrictions in most cases. The result was an enervating stalemate in efforts to regulate US tech companies; Congress has not passed a single meaningful new tech regulation since Donald Trump was elected president.

But after the better part of a decade waiting for lawmakers to act, states took matters into their own hands. And in some cases, the focus remained on speech, as in the laws passed by Florida and Texas aimed at making content moderation illegal. But over the past year, states have adopted a new approach to reducing the growth and power of social networks: making it increasingly difficult for children to use them. Utah and Arkansas have both passed laws seeking to ban minors from using some social networks without their parents’ permission. Montana sought to ban TikTok entirely.

Laws like these have struggled so far in courts.
A lawsuit is seeking to block the Utah legislation from being implemented, and federal judges blocked both the Arkansas law and the Montana law from taking effect.

At the same time, pressure for lawmakers to act in these cases has only grown. Last year, the US surgeon general issued an advisory warning that social networks can be harmful to teens. Some critics dismissed the report as political posturing, but on the whole I thought it offered a fairly balanced portrait of both the potential benefits and harms that can come with extended use of social networks.

Meanwhile, a series of investigations over the past year has continued to shine a light on ways that social networks continue to be unsafe for young people. The Wall Street Journal documented how Instagram is used to connect buyers and sellers of child sexual abuse material, and the Stanford Internet Observatory found that similar issues related to CSAM affect X, Telegram, Mastodon, and other decentralized networks. More recently, the Journal reported that Meta’s systems are unwittingly connecting pedophiles with one another. And on Snapchat, a scam targeting teenage boys has resulted in more than a dozen children taking their lives after being blackmailed over their nudes.

Frustration with platforms culminated in the lawsuit filed against Meta in October by 41 states and the District of Columbia, alleging that the company misled young people about the potential harms they face on the company’s apps. An unredacted version of the suit that came out later documented the extent to which Meta was aware of huge numbers of under-13 users.

All of that feels like necessary context for the changes Meta announced today. It also feels like an explanation of why, despite the changes it has made over the past year, Meta remains on the defensive.

Last July, after he signed the Utah legislation, Gov. Spencer Cox described his rationale in an interview with the New York Times’ Jane Coaston.
“If you look at the increased rates of depression, anxiety, self-harm since about 2012, across the board but especially with young women, we have just seen exponential increases in those mental health concerns,” Cox told her. “Again, the research is telling us over and over and over again that it is not just correlated, but it’s being caused, at least in part, by the social media platforms.”

That view is much disputed by the social networks, which argue that the data is more muddled and that any causal effects are small to nonexistent. But Cox’s belief is widely shared, and not just among Republican governors but among millions of families who have complicated relationships with Facebook, Instagram, YouTube, TikTok, Snapchat, and other apps.

If you believe that at least some teens experience harm on social media, it seems unlikely that a parental permission slip will solve it. Neither, I’m afraid, will a few tweaks to where content about self-harm can be shared.

Not for the first time, lawmakers and social networks are talking past each other. To make real progress on teen mental health in 2024, both sides are going to have to find some common ground.

Talk about this edition with us in Discord: This link will get you in for the next week.

Governing

Industry

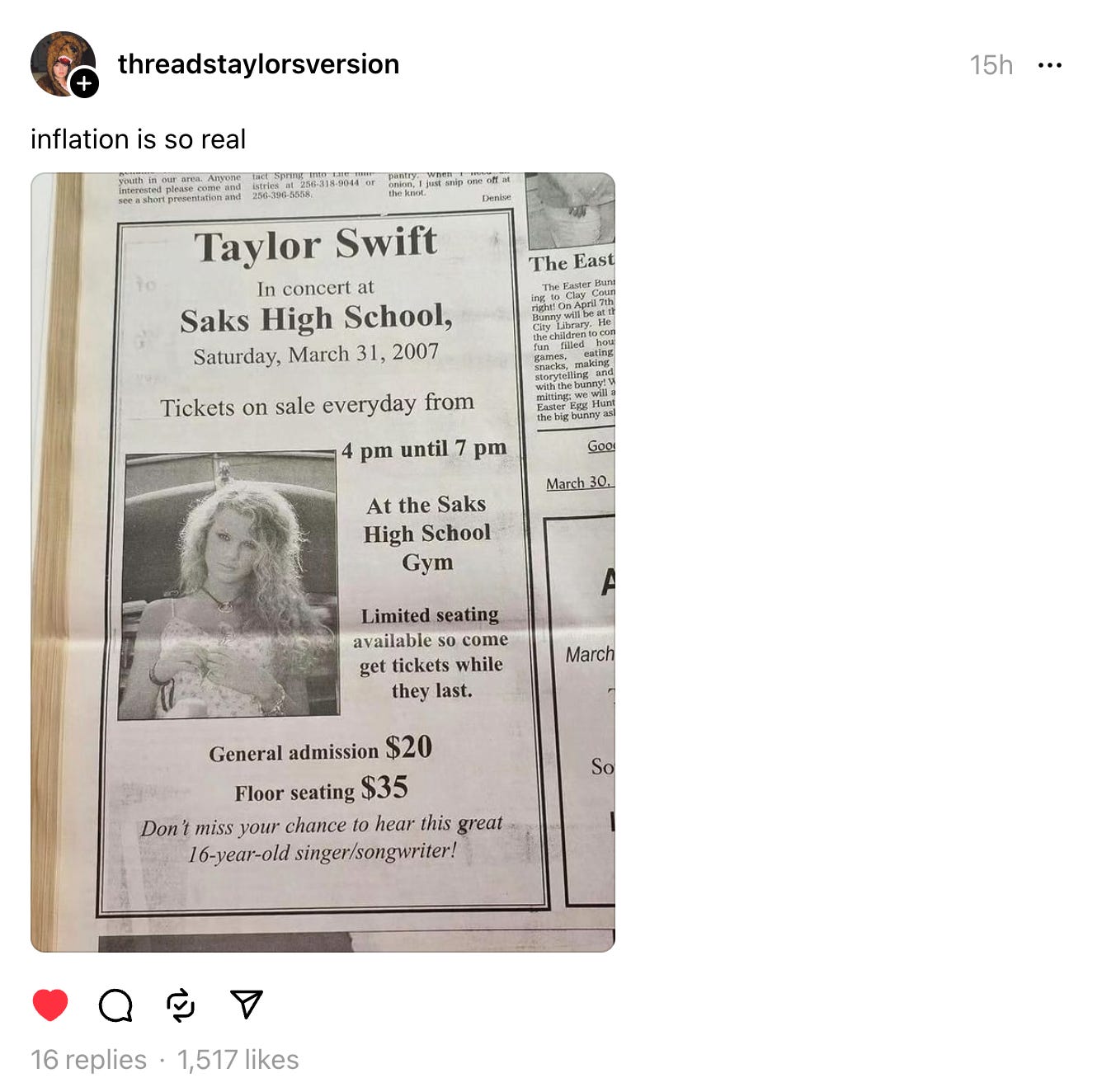

Those good posts

For more good posts every day, follow Casey’s Instagram stories.

Talk to us

Send us tips, comments, questions, and teen mental health solutions: casey@platformer.news and zoe@platformer.news.

By design, the vast majority of Platformer readers never pay anything for the journalism it provides. But you made it all the way to the end of this week’s edition, maybe not for the first time. Want to support more journalism like what you read today? If so, click here:
