Making your system observability predictable

Welcome to the next week! I aim to show you how to do observability predictably, making it a quality parameter of our system. It’s not just a bunch of fancy logs.

Speaking about logs, let’s quickly recap what we’ve learnt so far. Logs give us granular details about what’s happening in the system at a specific time. They allow you to read the story of what has happened. Yet, even if they’re structured, they’re fragmented. We often have to stitch them together manually to understand how a task moves through the system. Again, this is not the most informed way to troubleshoot.

Predefined dashboards showing metrics are great for tracking known unknowns: scenarios we don’t want but know can happen. They give us an aggregated view of how the system is performing right now. If the queue size grows or the wait time for acquiring connections increases, it’s a red flag that something’s wrong. However, these metrics are often too high-level to diagnose the root cause. We know what happened and need to take action, but we don’t understand why. We can guess, but guessing is not a predictable way to handle production issues. Your boss wouldn’t like to hear that you do Guess Driven Development.

The alternative is modern observability tooling based on the OpenTelemetry standard. It gives us both logical and physical tools. Logical:

Instrumenting Observability

To understand what’s happening in our system, we need to instrument it, specify what we need, and describe how we’d like to see it. For instance, we’d like to see how long connection acquisition took or how long the connection was open.

OpenTelemetry gives you automatic instrumentation for standard tools like database connectors, web frameworks, and messaging systems. Yet the automatic instrumentation lacks context. Your context. If you want to know why certain queries are slow or which specific task is holding a connection for too long, you need manual instrumentation. This means adding custom spans to your code to record business-specific actions, like when a query on the "orders" table starts, how many rows it returns, and how long it takes to execute.

Without manual instrumentation, you’re left guessing when things go wrong. It takes more effort, but it’s crucial for getting detailed insights that allow you to diagnose and fix issues quickly. You’re not just tracking the surface-level “what happened,” but also the deeper “why and how,” which is essential in complex systems like connection pooling.

Still, we’re now building the connection pool, so we’re kind of providing the automated instrumentation for others. That’s a challenge, as we cannot guess what people may need. That also means we should provide as much information as possible, allowing people to filter out what’s not useful.

Practical Instrumentation

If we’d like to provide both traces and metrics, we need to define providers accordingly: TracerProvider and MeterProvider. The TracerProvider is the central entity keeping the general configuration of how we’d like to handle tracing. Like:

In most setups, you have a single global TracerProvider. The TracerProvider can create and manage multiple tracers. Each tracer can be customised for different system parts, allowing you to trace specific services or components. You can have different tracers for each module. Each module and team may have different needs, so it’s worth allowing them to diverge. Still, having a central place where you set up basic conventions is a good practice.

Accordingly, MeterProvider centralises the metrics handling. It lets you:

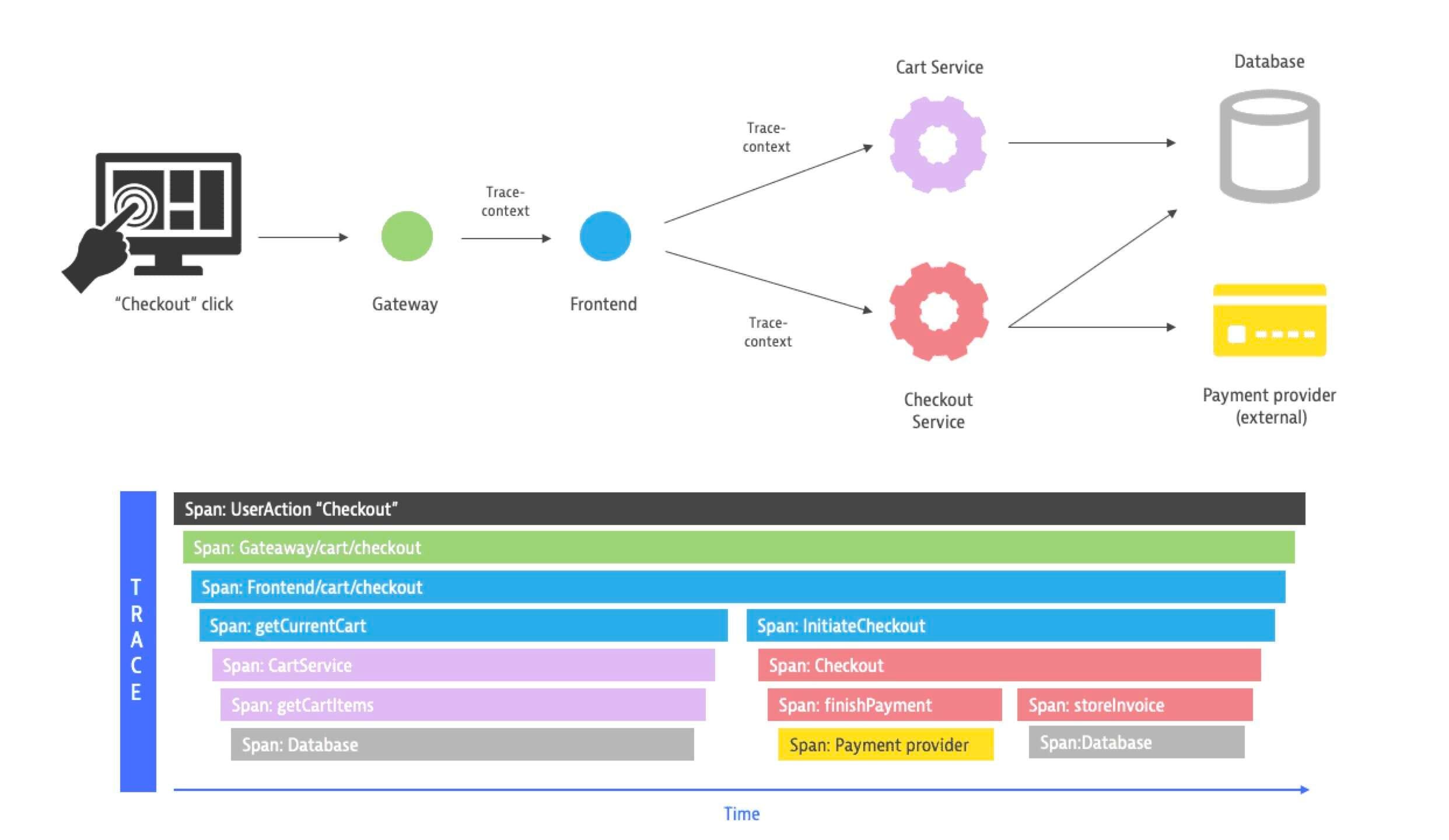
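To make the "one provider, many tracers" idea tangible, here is a minimal sketch that does not use the OpenTelemetry SDK at all. `CentralTracerProvider`, `ModuleTracer`, and the attribute and module names are hypothetical stand-ins I made up for illustration. The only point is to show shared conventions living in one place while each module gets its own tracer.

```typescript
import { strict as assert } from 'node:assert';

interface SpanRecord {
  scope: string; // which tracer (module) produced the span
  name: string;
  attributes: Record<string, string>; // shared conventions plus span-specific data
}

// Hypothetical stand-in for a tracer: every span it records is tagged with its module.
class ModuleTracer {
  private readonly scope: string;
  private readonly shared: Record<string, string>;
  private readonly sink: SpanRecord[];

  constructor(scope: string, shared: Record<string, string>, sink: SpanRecord[]) {
    this.scope = scope;
    this.shared = shared;
    this.sink = sink;
  }

  record(name: string, attributes: Record<string, string> = {}): void {
    // Span-specific attributes are merged on top of the shared ones.
    this.sink.push({ scope: this.scope, name, attributes: { ...this.shared, ...attributes } });
  }
}

// Hypothetical stand-in for a provider: one place for shared configuration, many tracers.
class CentralTracerProvider {
  readonly spans: SpanRecord[] = [];
  private readonly tracers = new Map<string, ModuleTracer>();
  private readonly shared: Record<string, string>;

  constructor(shared: Record<string, string>) {
    this.shared = shared;
  }

  // Returns the same tracer for the same module name, creating it on first use.
  getTracer(moduleName: string): ModuleTracer {
    let tracer = this.tracers.get(moduleName);
    if (!tracer) {
      tracer = new ModuleTracer(moduleName, this.shared, this.spans);
      this.tracers.set(moduleName, tracer);
    }
    return tracer;
  }
}

const provider = new CentralTracerProvider({ 'service.name': 'orders-api' });
provider.getTracer('connection-pool').record('connection.acquire', { 'task.id': 'task-1' });
provider.getTracer('checkout').record('order.place');

// Both spans carry the shared 'service.name', but each keeps its own module scope.
assert.equal(provider.spans.length, 2);
```

A real provider does much more (sampling, processors, exporters), so treat this only as a picture of the responsibilities, not as a replacement for it.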

Some metrics can be highly contextual to the business features (e.g., the number of orders processed per minute), while others can be the same for the whole system (e.g., the number of Web API requests handled per second).

In Node.js and TypeScript, the simplest setup for them could look as follows:

And yes, you need to install the following packages:

Cool stuff, but what’s next?

Predictable Observability

My initial plan for this article was to show you the full Grafana Docker Compose setup, ask you to run it, and show some colourful spans. Something like this from Dynatrace docs:

That’d be cool. I hear you when you say, “Those articles are nice, but it’d be great if there were more diagrams.” No worries, we’ll get there. But you can already see that stuff in the vendor blogs. Google it, and you’ll see a lot of results.

Today, I want to show you a bit harder but surer way, inspired by the excellent talk from Martin Thwaites (btw. he’s the person you should follow to learn about observability).

I was sitting in a dark room in Oslo when Martin gave this talk. When I left, I was a bit brainwashed. TLDW: Martin showed that we should treat spans (and other telemetry data) as our system’s output. In other words, we should verify whether we produce the expected telemetry data.

I wasn’t fully ready for that when I saw the talk, but I already knew that I needed to process it and that I’d get back to this idea in the future. It took me a while, but the idea kept circling in my head.

It’s sad, but many of us haven’t seen a real production system. After seven years of my career, I did a retrospective and realised that I had put only three systems on prod in that span, which is not a significant number. The startup and venture capital culture of placing high bets is one of the key factors. People are taught to deliver something and sell it as fast as they can or pivot. Many products don’t even reach that phase, running out of money.
That’s also why we don’t learn how to operate production predictably. We spend most of our time pushing features or discussing whether we should use var, const, or strongly typed variables. We don’t have the incentive to focus on observability because we won’t need it without going to production.

We already discussed how to answer the question, “Is it production ready?”. And I think that one of the most important features is observability. It can be reflected in database data and events stored or published, but it should also be reflected in telemetry. That should be a part of our acceptance criteria. That’s what Martin was postulating in his talk.

Becoming production-ready is a process. We cannot magically flip the switch and be responsible. We cannot postpone thinking about observability until two weeks before going to production. We cannot just sprinkle the system with auto-instrumentation. Or actually, we can, but we’ll fail then.

How can we do it practically, then? Provide the automated setup to ensure that we produce the expected telemetry data.

Test-Driven Instrumentation

Let’s try to instrument our Connection Pool in a Test-Driven Way. We’ll use Node.js and TypeScript, but you can do the same in other environments.

Let’s start with the basic telemetry setup for our tests:

Btw. it sucks that Substack doesn’t have code highlighting. But I know that I have some blind readers, so images are not an option…

I’m using the simple in-memory setup here. For now, I don’t want to export it outside (e.g., to Grafana Tempo, Jaeger, Honeycomb, etc.). I just want to have the setup that allows me to check if certain spans and metrics were registered.

For in-memory metrics, I had to steal the code from the official repo:

Nothing fancy. The goal of this class is to collect and export metrics data using a provided exporter. Metrics in OpenTelemetry are push-based, so we need to have a way to collect and export them.
Essentially, the reader just waits for all metrics to be pushed to the exporter. The exporter is responsible for processing and sending metrics data to a backend or another storage.

Having that, we can set up our tests:...

Unlock this post for free, courtesy of Oskar Dudycz.
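The idea of treating spans as the system’s output can be sketched without any dependencies. The code below is not the OpenTelemetry in-memory exporter and not the setup from this article. `InMemorySpanRecorder`, `ConnectionPool`, and the span and attribute names are hypothetical, and acquisition is synchronous only to keep the sketch short. What matters is the shape of the test: run a scenario, then assert on the telemetry it produced.

```typescript
import { strict as assert } from 'node:assert';

type AttributeValue = string | number | boolean;

interface RecordedSpan {
  name: string;
  attributes: Record<string, AttributeValue>;
  status: 'ok' | 'error';
  durationMs: number;
}

// Hypothetical stand-in for an in-memory span exporter: it only keeps finished spans.
class InMemorySpanRecorder {
  private readonly finished: RecordedSpan[] = [];

  // Runs `work` inside a span and stores the span once the work returns or throws.
  withSpan<T>(name: string, work: (set: (key: string, value: AttributeValue) => void) => T): T {
    const attributes: Record<string, AttributeValue> = {};
    const startedAt = Date.now();
    let status: RecordedSpan['status'] = 'ok';
    try {
      return work((key, value) => {
        attributes[key] = value;
      });
    } catch (error) {
      status = 'error';
      throw error;
    } finally {
      this.finished.push({ name, attributes, status, durationMs: Date.now() - startedAt });
    }
  }

  getFinishedSpans(): readonly RecordedSpan[] {
    return this.finished;
  }
}

// Toy pool that reports every acquisition attempt as a span.
class ConnectionPool {
  private inUse = 0;
  private readonly maxSize: number;
  private readonly telemetry: InMemorySpanRecorder;

  constructor(maxSize: number, telemetry: InMemorySpanRecorder) {
    this.maxSize = maxSize;
    this.telemetry = telemetry;
  }

  acquire(taskId: string): { release: () => void } {
    return this.telemetry.withSpan('connection.acquire', (set) => {
      set('task.id', taskId);
      if (this.inUse >= this.maxSize) {
        throw new Error('Connection pool exhausted');
      }
      this.inUse += 1;
      set('pool.in_use', this.inUse);
      return { release: () => { this.inUse -= 1; } };
    });
  }
}

// The scenario: one successful acquisition and one rejected because the pool is full.
function runScenario(): readonly RecordedSpan[] {
  const telemetry = new InMemorySpanRecorder();
  const pool = new ConnectionPool(1, telemetry);

  const connection = pool.acquire('task-1');
  assert.throws(() => pool.acquire('task-2'), /exhausted/);
  connection.release();

  return telemetry.getFinishedSpans();
}

// The test: telemetry is part of the acceptance criteria, so we assert on it.
function acquisitionIsVisibleInTelemetry(): void {
  const [succeeded, rejected] = runScenario();
  assert.equal(succeeded.status, 'ok');
  assert.equal(succeeded.attributes['pool.in_use'], 1);
  assert.equal(rejected.status, 'error');
  assert.equal(rejected.attributes['task.id'], 'task-2');
}

acquisitionIsVisibleInTelemetry();
```

With a real SDK, the recorder would be replaced by an in-memory exporter registered in the test setup, but the assertions would keep the same spirit: if the span for a failed acquisition disappears or loses its task id, the test fails before production does.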

