
Hello! Once a week, we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

And now…let's dive into some interesting links from this week.

Facial Detection — Understanding Viola Jones’ Algorithm

From my time researching this topic, I have come to realize that a lot of people don’t actually understand it, or understand it only partly. Many tutorials also do a poor job of explaining in layman’s terms what exactly it is doing, or leave out steps that would otherwise clear up some confusion. So I’m going to explain it from start to finish in the simplest way possible. There are many approaches to implementing facial detection, and they can be separated into the following categories…
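The Viola-Jones detector is usually described in terms of two core ingredients: Haar-like rectangle features, and the integral image (summed-area table) that lets any rectangle sum be computed with four lookups. As a minimal pure-Python sketch of just those two pieces (not the article's code; the function names are hypothetical, and AdaBoost feature selection and the attentional cascade are left out):

```python
def integral_image(img):
    """Return an (h+1) x (w+1) summed-area table with a zero top row and left column.

    ii[y][x] holds the sum of all pixels above and to the left of (x, y), exclusive.
    """
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0  # running sum of row y up to column x
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle with top-left corner (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Horizontal two-rectangle Haar-like feature: left half sum minus right half sum.

    Assumes w is even so the two halves have equal width.
    """
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

Because the table is built once per image, every feature costs a constant number of lookups regardless of its size, which is what makes scanning many windows and scales affordable.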

How to train a model on 10k H100 GPUs?

My friend Francois Fleuret asked the above…I quickly jotted down what I think is fairly common knowledge among engineers working on large-scale training…There are three parts: fitting as large a network and as large a batch size as possible onto the 10k H100s (parallelizing and using memory-saving tricks); communicating state between these GPUs as quickly as possible; and recovering from failures (hardware, software, etc.) as quickly as possible…
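To make the first part (fitting the model and batch onto the GPUs) concrete, here is a back-of-the-envelope sketch that is not taken from the post. It assumes the common mixed-precision Adam accounting of about 16 bytes of model state per parameter (bf16 weights and gradients plus fp32 master weights and two Adam moments), ignores activations entirely, and uses hypothetical helper names and illustrative parallelism degrees.

```python
# Back-of-the-envelope sizing sketch; helper names and numbers are illustrative assumptions.

# Assumed model-state cost per parameter for mixed-precision Adam:
# 2 (bf16 weights) + 2 (bf16 grads) + 4 (fp32 master weights) + 4 (Adam m) + 4 (Adam v).
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4


def model_state_gb_per_gpu(n_params, shard_degree=1):
    """Model-state memory (GB) per GPU if states are sharded evenly over shard_degree GPUs.

    Activations, communication buffers, and memory fragmentation are ignored.
    """
    return n_params * BYTES_PER_PARAM / shard_degree / 1e9


def data_parallel_degree(n_gpus, tensor_parallel, pipeline_parallel):
    """Data-parallel replicas left once tensor- and pipeline-parallel groups are formed."""
    group_size = tensor_parallel * pipeline_parallel
    if n_gpus % group_size:
        raise ValueError("n_gpus must be divisible by tensor_parallel * pipeline_parallel")
    return n_gpus // group_size


def global_batch_size(dp, micro_batch, grad_accum_steps):
    """Sequences per optimizer step summed over all data-parallel replicas."""
    return dp * micro_batch * grad_accum_steps
```

Under these assumptions a hypothetical 70B-parameter model needs about 1,120 GB of model state unsharded, far beyond a single 80 GB H100, while sharding it over 64 GPUs brings it to 17.5 GB per GPU. Splitting 10,000 GPUs into tensor-parallel groups of 8 and 5 pipeline stages leaves 250 data-parallel replicas, so a micro-batch of 4 with 2 accumulation steps gives a global batch of 2,000 sequences.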

Steal like a generative artist

I’ve done lots of plotting of simulated data before, which I guess technically counts as generative art, but this is my first attempt at making plots for purely *aesthetic* purposes…One of the main takeaways for me from Nicola’s talk was to steal like an artist…In a nutshell, a great way to learn any new creative skill is to copy and riff on what other people have done already…When developing generative art skills it can be helpful to: start by using tools that other people have made to create generative art, recreate or adapt those tools for yourself, take inspiration for generative designs from more traditional art forms, recreate designs or patterns from objects in the world around you.

In that spirit, I’ll be recreating and adapting one of her title slide artworks…

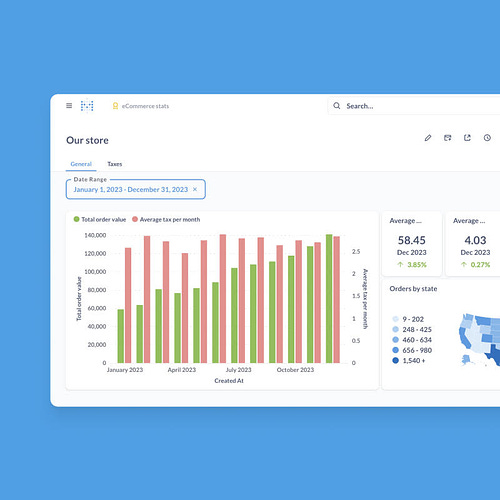

Metabase’s intuitive BI tools empower your team to effortlessly report and derive insights from your data. Compatible with your existing data stack, Metabase offers both self-hosted and cloud-hosted (SOC 2 Type II compliant) options. In just minutes, most teams connect to their database or data warehouse and start building dashboards, with no SQL required. With a free trial and super affordable plans, it's the go-to choice for venture-backed startups and over 50,000 organizations of all sizes. Empower your entire team with Metabase. Read more. * Want to sponsor the newsletter? Email us for details --> team@datascienceweekly.org

"Quick data vis" - an 8-part quick course in data visualization using R

This repository has 8 activities: very basics of R coding; introduction to tidy data frames; introduction to data visualization using ggplot; introduction to mean separation; introduction to proportional data; introduction to heatmaps; introduction to relationship data and networks; and introduction to plot composition/assembly…

Database Building Blocks Seminar Series – Fall 2024

Like a hobo putting together a sandwich in a parking lot using discarded foods from multiple restaurants, many modern database management systems are composed of composable building blocks…This modular approach enables system builders to spend less time building the banal but necessary components and instead leverage off-the-shelf software…This shift towards modularity also enhances maintainability and simplifies integration with other systems. Given this, the Carnegie Mellon University Database Research Group is exploring this recent trend with the Building Blocks Seminar Series. Each speaker will present the implementation details of their respective systems and examples of the technical challenges they faced when working with real-world customers…

How many of you are building bespoke/custom time series models these days? [Reddit Discussion]

Time series forecasting seems to have been the next area of modeling to get “auto-MLed”, so to speak, in every company. It’s like, “we have some existing forecasting models we already use, they are good enough, we don’t need a data scientist to go in and build a new time series model.” It seems rare to find jobs that actually involve building custom time series models in Stan, or thinking more rigorously about the problem. Is everything just “throw it into Prophet”, or are there people here who are actually building custom/bespoke time series models…

Table format interoperability, future or fantasy?

In the world of open table formats (Apache Iceberg, Delta Lake, Apache Hudi, Apache Paimon, etc.), an emerging trend is to provide interoperability between table formats by cross-publishing metadata. This allows a table to be written in table format X but read in format Y or Z…Cross-publishing seems to promise seamless interoperability between table formats, but the truth is that the features of the table formats vary, and you can't support them all while writing the data once…What are the alternatives? Here are some thoughts…

The 5 Data Quality Rules You Should Never Write Again

Curious how rule automation can transform your data quality workflows? Here are five rules across common dimensions of data quality—including validity, uniqueness, accuracy, timeliness, and completeness—that data analysts never need to write again…
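The excerpt doesn't list the five rules themselves. As a hedged illustration of what generic, reusable checks for four of the named dimensions (completeness, uniqueness, validity, timeliness) can look like, here is a small sketch over rows represented as dicts; the function names, the sample rows, and the simple email pattern are all hypothetical. Accuracy is left out because it needs a trusted reference source to compare against.

```python
import re
from datetime import datetime, timedelta


def completeness(rows, col):
    """Completeness: fraction of rows where col is present and not None."""
    if not rows:
        return 1.0
    return sum(r.get(col) is not None for r in rows) / len(rows)


def is_unique(rows, col):
    """Uniqueness: True if no non-null value of col appears more than once."""
    values = [r[col] for r in rows if r.get(col) is not None]
    return len(values) == len(set(values))


def validity(rows, col, pattern):
    """Validity: fraction of non-null values of col that fully match a regex."""
    values = [str(r[col]) for r in rows if r.get(col) is not None]
    if not values:
        return 1.0
    return sum(re.fullmatch(pattern, v) is not None for v in values) / len(values)


def is_fresh(rows, col, max_age, now):
    """Timeliness: True if the newest timestamp in col is no older than max_age."""
    stamps = [r[col] for r in rows if r.get(col) is not None]
    return bool(stamps) and now - max(stamps) <= max_age


# Hypothetical sample data: a duplicated id, a malformed email, and a missing email.
EMAIL_PATTERN = r"[^@\s]+@[^@\s]+\.[^@\s]+"
rows = [
    {"id": 1, "email": "a@example.com", "updated": datetime(2024, 9, 1, 12)},
    {"id": 2, "email": "not-an-email", "updated": datetime(2024, 9, 2, 12)},
    {"id": 2, "email": None, "updated": datetime(2024, 9, 3, 12)},
]
```

On the sample rows, email completeness is 2/3, `id` is not unique, half of the non-null emails match the pattern, and the data counts as fresh at midnight on 2024-09-04 for a one-day threshold but not for a six-hour one. Writing each rule once, parameterized by column, is the kind of reuse that makes per-table hand-written checks unnecessary.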

A gentle introduction to matrix calculus

Matrix calculus is an important tool when we wish to optimize functions involving matrices or perform sensitivity analyses. This tutorial is designed to make matrix calculus more accessible to graduate students and young researchers. It contains the theory that would suffice in most applications, many fully worked-out exercises and examples, and presents some of the ‘tacit knowledge’ that is prevalent in this field…
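As a small worked example of the kind of result such a tutorial covers (chosen here for illustration, not taken from the tutorial), the gradient of the quadratic form $f(\mathbf{x}) = \mathbf{x}^\top A \mathbf{x}$ for a square matrix $A$ follows from writing it out in index notation, with the gradient taken as a column vector:

```latex
\begin{aligned}
f(\mathbf{x}) &= \mathbf{x}^\top A \mathbf{x} = \sum_{i}\sum_{j} x_i A_{ij} x_j \\
\frac{\partial f}{\partial x_k} &= \sum_{j} A_{kj} x_j + \sum_{i} x_i A_{ik}
  = (A\mathbf{x})_k + (A^\top \mathbf{x})_k \\
\nabla_{\mathbf{x}} f &= (A + A^\top)\,\mathbf{x}
\end{aligned}
```

When $A$ is symmetric this reduces to $2A\mathbf{x}$. Whether the result is written as a column or a row vector depends on the layout convention, which is exactly the sort of tacit knowledge that trips people up.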

Splink: transforming data linking through open source collaboration

This is the story of how a small team of analysts at the UK’s Ministry of Justice built data linking software that’s used by governments across the world…

Debunking 6 common pgvector myths

Pgvector is Postgres' highly popular extension for storing, indexing and querying vectors. Vectors have been a useful data type for a long time, but recently they've seen a rise in popularity due to their usefulness in the Retrieval-Augmented Generation (RAG) architectures of AI applications. Vectors typically power the retrieval part: using vector similarity search and nearest-neighbor algorithms, one can find the most relevant documents for a given user question…From conversations with our users and with the pgvector community, it became clear that there are some common misconceptions and misunderstandings around its best practices and use. As a result of these misunderstandings, some people avoid pgvector completely or use it less effectively than they otherwise would. So, let's fix this!…
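To make the retrieval step concrete, the sketch below does exact (brute-force) nearest-neighbor search by cosine distance in plain Python. Cosine distance is the quantity pgvector's `<=>` operator returns; pgvector also offers `<->` (L2 distance) and `<#>` (negative inner product), and its HNSW and IVFFlat indexes trade exactness for speed. The function names and toy vectors are hypothetical.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query, docs, k):
    """Exact k-nearest neighbours: docs maps id -> vector; returns the k closest ids."""
    return sorted(docs, key=lambda doc_id: cosine_distance(query, docs[doc_id]))[:k]


# Hypothetical toy "embeddings".
docs = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
```

Here `nearest([1.0, 0.1], docs, 2)` returns `["a", "c"]`. Inside Postgres, the equivalent exact query would look like `SELECT id FROM items ORDER BY embedding <=> '[1,0.1]' LIMIT 2;`, where the `items` table and `embedding` column are hypothetical.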

Diffusion Models - bit by bit

I’m going to cover diffusion models from scratch: building intuition, diving into the mathematics and ideas, and ending with implementation. This is **Part 1** of the series. In this part, I’ve covered GANs and VAEs from the very beginning…
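Since Part 1 covers VAEs, here is a minimal sketch of two pieces usually derived early on: the reparameterization trick and the closed-form KL divergence between a diagonal Gaussian and a standard normal. It uses plain Python lists rather than a tensor library, so no gradients are computed; in a framework with autodiff, the same formula is what lets gradients flow through `mu` and `log_var`. The function names are hypothetical and not taken from the post.

```python
import math
import random


def reparameterize(mu, log_var, rng=random):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1) and sigma = exp(log_var / 2).

    Moving the randomness into eps is what lets an autodiff framework
    backpropagate through mu and log_var; plain Python computes no gradients.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) ), the VAE regularizer."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))
```

The KL term is zero exactly when the encoder outputs `mu = 0` and `log_var = 0`, and it grows as the approximate posterior drifts away from the prior; the full VAE loss adds it to a reconstruction term.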

Phrase Matching in Marginalia Search

Marginalia Search now properly supports phrase matching. This not only permits a more robust implementation of quoted search queries, but also helps promote results where the search terms occur in the document exactly in the same order as they do in the query. This is a write-up about implementing this change. This is going to be a relatively long post, as it represents about 4 months of work…
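The excerpt doesn't show Marginalia's actual implementation. The classic way to support exact phrase queries is a positional inverted index, which records where each term occurs so that a phrase matches when its terms appear at consecutive positions. A minimal sketch, assuming lowercase whitespace tokenization and hypothetical function names:

```python
from collections import defaultdict


def build_positional_index(docs):
    """Map term -> {doc_id: [positions]} for docs given as {doc_id: text}."""
    index = defaultdict(lambda: defaultdict(list))
    for doc_id, text in docs.items():
        for pos, term in enumerate(text.lower().split()):
            index[term][doc_id].append(pos)
    return index


def phrase_match(index, phrase):
    """Return the ids of docs in which the phrase's terms occur consecutively, in order."""
    terms = phrase.lower().split()
    if not terms:
        return set()
    # Candidate docs must contain every term (dict.get avoids inserting missing keys).
    candidates = set(index.get(terms[0], {}))
    for term in terms[1:]:
        candidates &= set(index.get(term, {}))
    hits = set()
    for doc_id in candidates:
        position_sets = [set(index[term][doc_id]) for term in terms]
        # A start position p matches if term i occurs at position p + i for every i.
        for p in index[terms[0]][doc_id]:
            if all(p + i in position_sets[i] for i in range(len(terms))):
                hits.add(doc_id)
                break
    return hits
```

For example, with `{1: "the quick brown fox", 2: "brown quick the fox", 3: "a quick brown dog"}`, the query `"quick brown"` matches documents 1 and 3 but not 2, even though all three contain both words. The same position data can also be used to rank documents higher when terms appear in query order, which is the second benefit the post mentions.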

Andy Barto - In the Beginning ML was RL

Video of Andy Barto at the 2024 Reinforcement Learning Conference, where he gives an overview of the intertwined history of Machine Learning and Reinforcement Learning…

Transformers Inference Optimization Toolset

The idea of this post is not just to discuss transformer-specific optimizations, since there are plenty of resources where one can examine every inch of the transformer to make it faster…The main goal is to lower the entry barrier for curious researchers who currently can't piece together the huge number of articles and papers into one picture…Many optimization techniques will be left out, for example quantization methods, which are quite diverse and deserve a separate post. We’ll also mostly discuss transformer inference and won’t cover training tricks such as mixed-precision training, gradient checkpointing or sequence packing. Even so, many optimizations from this post could be applied to training as well…
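One widely used inference optimization (named here as an illustration; the excerpt doesn't say how the post treats it) is the key-value cache, which stores each layer's attention keys and values for past tokens so they aren't recomputed at every decoding step. Its memory cost is simple arithmetic. The sketch below assumes one key and one value vector per layer, KV head and token, with 2-byte (fp16/bf16) elements by default; the configuration in the note after it is hypothetical.

```python
GIB = 1024 ** 3


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size, bytes_per_elem=2):
    """Bytes needed to cache keys and values for every layer, KV head and token.

    The leading 2 counts one key vector plus one value vector per position;
    bytes_per_elem=2 assumes fp16/bf16 storage.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem
```

With 32 layers, 32 KV heads of dimension 128, a 4,096-token context, batch size 1 and 2-byte elements, this gives exactly 2 GiB per sequence; grouped-query attention with 8 KV heads cuts it to 512 MiB. Because the cost scales linearly with batch size and context length, the cache often limits how many requests can be served at once.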

* Based on unique clicks.

** Find last week's issue #566 here.

Looking to get a job? Check out our “Get A Data Science Job” Course

It is a comprehensive course that teaches you everything related to getting a data science job based on answers to thousands of emails from readers like you. The course has 3 sections: Section 1 covers how to get started, Section 2 covers how to assemble a portfolio to showcase your experience (even if you don’t have any), and Section 3 covers how to write your resume.

Promote yourself/organization to ~63,400 subscribers by sponsoring this newsletter. 35-45% weekly open rate.

Thank you for joining us this week! :) Stay Data Science-y! All our best,

Hannah & Sebastian
