Humanity Redefined - Cracks in the Scaling Laws - Sync #493

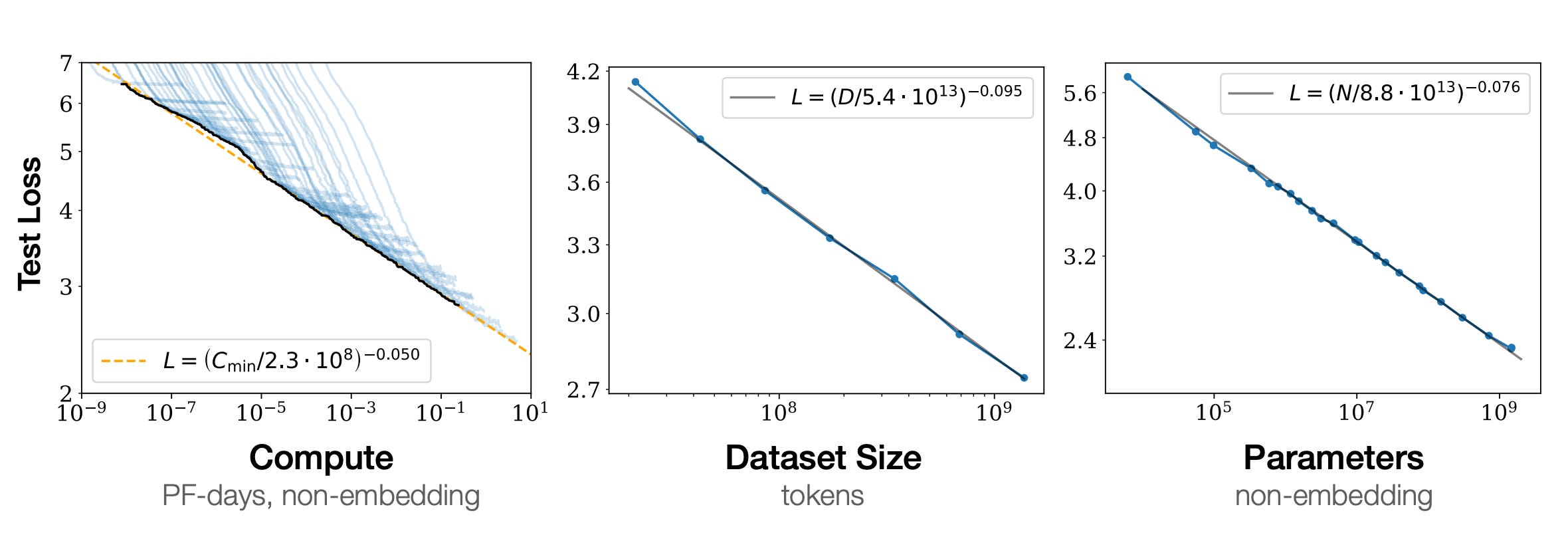

I hope you enjoy this free post. If you do, please like ❤️ or share it, for example by forwarding this email to a friend or colleague. This post took around eight hours to write. Liking or sharing it takes less than eight seconds and makes a huge difference. Thank you!

Cracks in the Scaling Laws - Sync #493

Plus: OpenAI's new AI agent; AlphaFold3 is open-source... kind of; Amazon releases its new AI chip; Waymo One is available for everyone in LA; how can humanity become a Kardashev Type 1 civilization

Hello and welcome to Sync #493!

This week, we will take a closer look at the leaks emerging from leading AI labs, which suggest that researchers are hitting a wall, and explore what can be done to overcome it.

Elsewhere in AI, OpenAI is reportedly planning to release a new AI agent capable of controlling users’ computers. Meanwhile, Anthropic has teamed up with Palantir and AWS to sell its AI models to defence customers, X is testing a free version of its AI chatbot Grok, and AI Granny is wasting scammers’ time.

In robotics, Waymo One is now available to everyone in LA, Agility Robotics’ Digit has found a new job, and a drone has successfully delivered blood samples between two hospitals in London.

Additionally, AlphaFold3 is now open source (at least the code is), one researcher has demonstrated how a cluster of human brain cells learned to control a virtual butterfly, and YouTube’s chief mad biologist has found a way to turn shrimp into fabric.

Enjoy!

Cracks in the Scaling Laws

If someone were to write a history book about generative AI and large language models, two milestones in AI research would probably get their own chapters. The first milestone happened in 2017, when eight researchers at Google published a paper titled Attention Is All You Need. It was a landmark paper that introduced the transformer architecture, the bedrock of all modern large language models.
The second chapter would be dedicated to a pair of events: the launch of ChatGPT in November 2022 and the release of GPT-4 in March 2023. That’s when large language models entered the global scene and kickstarted the AI revolution we are in today.

However, between these two milestones, there was another event that bridged them: the publication in January 2020 of an OpenAI paper titled Scaling Laws for Neural Language Models. Essentially, the researchers found that the performance of a large language model improves predictably, following a power-law relationship, as the training compute, the size of the training dataset, or the number of model parameters increases. Later follow-up papers from OpenAI and DeepMind confirmed the original results and expanded upon them.

For OpenAI, the discovery of scaling laws was a green light for fully committing to large language models. If the scaling laws hold, then with enough computing power, a big enough training dataset and a big enough model, a highly capable AI model nearing human-level performance is within reach. And OpenAI went all in on large language models. Four months after the Scaling Laws for Neural Language Models paper was published, in May 2020, Microsoft announced that it had built what was at that time one of the largest supercomputers, containing 10,000 GPUs, just for OpenAI to train massive models. That set the AI industry on the path it is on now: building more and more powerful supercomputers, fed with ever-increasing amounts of data, to train larger and more capable models.

That trend of throwing more resources at models and getting better results has held. We’ve seen significant leaps in performance with each new generation of large language models. This fueled optimism and raised expectations for the next generation of AI models (GPT-5, Gemini 2, and Claude 4), which are now anticipated to reach entirely new levels of performance, massively surpassing their predecessors.
That, however, seems not to be the case. According to a report from The Information, OpenAI’s new model, codenamed Orion, performs better than GPT-4 but not by as much as expected. Reportedly, the jump in performance is smaller than the one from GPT-3 to GPT-4. And OpenAI is not the only company experiencing this slowdown in the rate of improvement. The Verge reports that a similar thing is happening at Google DeepMind, too, saying that Gemini 2 “isn’t showing the performance gains the Demis Hassabis-led team had hoped for.”

That puts the leading AI companies (OpenAI, Google DeepMind, Anthropic) in a tricky spot. Over the last two years, these companies have consistently delivered groundbreaking performance with each new model release. However, the reported gains are now said to be smaller than before. If the leaks are accurate, GPT-5 and Gemini 2 will be better than their predecessors, but they won’t live up to the hype that comes with being the next major upgrade.

The report from The Information suggests that OpenAI is looking for new ways to improve the performance of its next-generation model in the face of a dwindling supply of new training data. These include using synthetic data produced by AI models and new ways to improve models in post-training.

But there is also another path forward: adding new functionality instead of massively improving the performance of next-generation models. That way, AI companies can deliver new value to their customers without delivering a new level of raw performance. We already see this strategy in action. In September, OpenAI released o1, its first reasoning model. In October, Anthropic revealed Computer use, which allows Claude to take control of a user’s computer and perform actions on their behalf. OpenAI and Google are rumoured to be introducing a similar feature very soon.

Either way, the leading AI companies now have to justify the billions of dollars poured into them.
One way or another, they have to find a way to overcome the wall they are facing.

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work? Consider becoming a paying subscriber to support it.

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is generous support for the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🔮 Future visions

▶️ How Can Humanity Become a Kardashev Type 1 Civilization? (22:18)
The Kardashev Scale is a theoretical framework that measures a civilisation's technological advancement based on its ability to harness and utilise energy, ranging from planetary (Type I) to stellar (Type II) and galactic (Type III) levels. In this video, Matt O'Dowd from PBS Space Time explains the Kardashev Scale, explores where humanity currently stands on it, and discusses how we can become a Type I civilisation.

🧠 Artificial Intelligence

OpenAI Nears Launch of AI Agent Tool to Automate Tasks for Users

Amazon ready to use its own AI chips, reduce its dependence on Nvidia

OpenAI to present plans for U.S. AI strategy and an alliance to compete with China

X is testing a free version of AI chatbot Grok

Anthropic teams up with Palantir and AWS to sell AI to defense customers

Even Microsoft Notepad is getting AI text editing now

Newest Google and Nvidia Chips Speed AI Training

Meta is reportedly working on its own AI-powered search engine, too

EU AI Act: Draft guidance for general purpose AIs shows first steps for Big AI to comply

‘AI Granny’ is happy to talk with phone scammers all day
This is one of the most beautiful uses of AI I’ve seen in recent months. O2 has released dAIsy, an AI Granny with infinite patience whose only job is to waste scammers’ time by being the most polite, and for scammers the most annoying, artificial grandma it can be.
It would be interesting to see a follow-up in a few months to check whether dAIsy has been effective or whether this is simply a PR stunt to raise awareness about AI-powered phone scams.

Google DeepMind has a new way to look inside an AI’s “mind”

Oasis: A Universe in a Transformer

If you're enjoying the insights and perspectives shared in the Humanity Redefined newsletter, why not spread the word?

🤖 Robotics

Waymo One is now open to all in Los Angeles

Schaeffler plans global use of Agility Robotics’ Digit humanoid

▶️ Drone delivery of blood samples between London hospitals (4:49)
In this video, Wing, Alphabet’s drone delivery company, and Apian, a UK drone delivery startup specialising in medical deliveries, demonstrate how drones are used to transport blood samples between two hospitals in London. The deliveries are part of a six-month trial to assess the feasibility of using drones for medical logistics in London and across the UK.

Researchers use imitation learning to train surgical robots

This Is a Glimpse of the Future of AI Robots

🧬 Biotechnology

AI protein-prediction tool AlphaFold3 is now open source

Lab-grown human brain cells drive virtual butterfly in simulation
Using FinalSpark’s Neuroplatform, a software engineer created a virtual butterfly controlled by human brain organoids, which are clusters of lab-grown human brain cells. The project demonstrates the potential of biological neural networks (BNNs), which promise to consume far less energy than artificial neural networks (ANNs) while being more adaptive and better at zero-shot learning and pattern recognition.

Stem Cell Transplant 'Black Box' Unveiled in 31-Year Study of Blood Cells

▶️ Turning Shrimp into Woven Fabric (16:04)
The Thought Emporium, YouTube’s chief mad biologist, shows in this video how to turn shrimp into fabric. It sounds like an insane idea, but it’s less far-fetched than it seems.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.
Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"

