Against The Generalized Anti-Caution Argument

Suppose something important will happen at a certain unknown point. As someone approaches that point, you might be tempted to warn that the thing will happen. If you’re being appropriately cautious, you’ll warn about it before it happens. Then your warning will be wrong. As things continue to progress, you may continue your warnings, and you’ll be wrong each time. Then people will laugh at you and dismiss your predictions, since you were always wrong before. Then the thing will happen and they’ll be unprepared.

Toy example: suppose you’re a doctor. Your patient wants to try a new experimental drug, 100 mg. You say “Don’t do it, we don’t know if it’s safe”. They do it anyway and it’s fine. You say “I guess 100 mg was safe, but don’t go above that.” They try 250 mg and it’s fine. You say “I guess 250 mg was safe, but don’t go above that.” They try 500 mg and it’s fine. You say “I guess 500 mg was safe, but don’t go above that.”

They say “Haha, as if I would listen to you! First you said it might not be safe at all, but you were wrong. Then you said it might not be safe at 250 mg, but you were wrong. Then you said it might not be safe at 500 mg, but you were wrong. At this point I know you’re a fraud! Stop lecturing me!” Then they try 1000 mg and they die.

The lesson is: “maybe this thing that will happen eventually will happen now” doesn’t count as a failed prediction.

I’ve noticed this in a few places recently.

First, in discussion of the Ukraine War, some people have worried that Putin will escalate (to tactical nukes? to WWIII?) if the US gives Ukraine too many new weapons. Lately there’s a genre of commentary (1, 2, 3, 4, 5, 6, 7) that says “Well, Putin didn’t start WWIII when we gave Ukraine HIMARS. He didn’t start WWIII when we gave Ukraine ATACMS. He didn’t start WWIII when we gave Ukraine F-16s. So the people who believe Putin might start WWIII have been proven wrong, and we should escalate as much as possible.”

There’s obviously some level of escalation that would start WWIII (example: nuking Moscow). So we’re just debating where the line is. Since nobody (except Putin?) knows where the line is, it’s always reasonable to be cautious. I don’t actually know anything about Ukraine, but a warning about HIMARS causing WWIII seems less like “this will definitely be what does it” and more like “there’s a 2% chance this is the straw that breaks the camel’s back”. Suppose we have two theories, Escalatory-Putin and Non-Escalatory-Putin. EP says that for each new weapon we give, there’s a 2% chance Putin launches a tactical nuke. NEP says there’s a 0% chance. If we start out with even odds on both theories, then after three new weapons with no nukes, our credence in EP should only fall to about 48.5% (vs. 51.5% for NEP), because three quiet rounds would still happen 0.98³ ≈ 94% of the time even if EP were true. (yes, this is another version of the generalized argument against updating on dramatic events)

Second, I talked before about getting Biden’s dementia wrong. My internal argument against him being demented was something like “They said he was demented in 2020, but he had a good debate and proved them wrong. They said he was demented in 2022, but he gave a good State Of The Union and proved them wrong. Now they’re saying he’s demented in 2024, but they’ve already discredited themselves, so who cares?” I think this was broadly right about the Republican political machine, which was just throwing the same allegation out every election and seeing if it would stick. But regardless of the Republicans’ personal virtue, the odds of an old guy becoming newly demented are about 4% per year. If it had been two years since I last paid attention to this question, there was roughly an 8% chance it had happened while I wasn’t looking.
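A quick check of the two calculations above, as a minimal Python sketch. The function names are illustrative, and it assumes exactly the two-theory setup described (EP: 2% per new weapon; NEP: 0%) plus a constant 4% yearly dementia risk. Each quiet round multiplies EP’s weight by 0.98, so three rounds only move even odds to about 48.5 : 51.5. For dementia, the 8% figure is the simple sum of two 4% years; compounding gives 1 - 0.96² ≈ 7.8%, which is close enough.

```python
def posterior_after_quiet_rounds(prior_risky: float, p_event: float, rounds: int) -> float:
    """Posterior credence in the 'risky' theory after `rounds` rounds with no event.

    The risky theory gives each round a `p_event` chance of the event; the safe
    theory gives it 0, so a quiet round has likelihood (1 - p_event) vs. 1.
    """
    risky = prior_risky * (1 - p_event) ** rounds
    safe = (1 - prior_risky) * 1.0
    return risky / (risky + safe)


def cumulative_risk(p_per_period: float, periods: int) -> float:
    """Chance of at least one event over `periods` independent periods."""
    return 1 - (1 - p_per_period) ** periods


# Escalatory-Putin vs. Non-Escalatory-Putin: even prior odds, 2% per weapon, three weapons.
ep = posterior_after_quiet_rounds(prior_risky=0.5, p_event=0.02, rounds=3)
print(f"EP {ep:.1%} vs. NEP {1 - ep:.1%}")

# Dementia at roughly 4% per year, over two years of not paying attention.
print(f"two-year risk: {cumulative_risk(0.04, 2):.1%}")
```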
Like the other examples, dementia is something that happens eventually (this isn’t strictly true, since some people reach their 100s without dementia, but I think it’s a fair idealized assumption that if someone survives long enough, their risk of cognitive decline eventually becomes very high). It is reasonable to be worried about the President of the United States being demented, so reasonable that people will start raising the alarm about it long before it happens. Even if some Republicans had ulterior motives for harping on it, plenty of smart, well-meaning people were also raising the alarm. Here I failed by letting the multiple false alarms lull me into a false sense of security, where I figured the non-demented side had “won” the “argument”, rather than treating it as a constant problem we needed to stay vigilant for.

Third, this is obviously what’s going on with AI right now. The SB1047 AI safety bill tried to mandate that any AI bigger than 10^26 FLOPs (ie a little bigger than the biggest existing AIs) be exhaustively tested for safety. Some people argued: the AI safety folks freaked out about how AIs of 10^24 FLOPs might be unsafe, but they turned out to be safe. Then they freaked out about how AIs of 10^25 FLOPs might be unsafe, but they turned out to be safe. Now they’re freaking out about AIs of 10^26 FLOPs! Haven’t we already figured out that they’re dumb and oversensitive?

No. I think of this as equivalent to the doctor who says “We haven’t confirmed that 100 mg of the experimental drug is safe”, then “I guess your foolhardy decision to ingest it anyway confirms 100 mg is safe, but we haven’t confirmed that 250 mg is safe, so don’t take that dose,” and so on up to the dose that kills the patient.

It would be surprising if AI never became dangerous: if, in 2500 AD, AI still couldn’t hack important systems, help terrorists commit attacks, or anything like that. So we’re arguing about when we reach that threshold.
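The doctor analogy can be made concrete with a toy model of an unknown threshold. This is a sketch under a purely hypothetical prior: the first lethal dose is equally likely to be 100, 250, 500, or 1000 mg, or none of them. Surviving a dose rules out every possibility up to and including that dose, so each “failed” warning makes the next step riskier, not safer.

```python
from fractions import Fraction

# Hypothetical dose ladder from the doctor example.
DOSES_MG = [100, 250, 500, 1000]

# Hypothetical prior: outcome i means "DOSES_MG[i] is the first lethal dose";
# the final extra outcome means "none of these doses is lethal". All equally likely.
PRIOR = [Fraction(1, len(DOSES_MG) + 1)] * (len(DOSES_MG) + 1)


def risk_of_dose(step: int) -> Fraction:
    """P(DOSES_MG[step] is lethal | every smaller dose on the ladder was survived).

    Surviving the earlier doses rules out outcomes 0..step-1, so we renormalize
    the prior over the outcomes that are still possible.
    """
    still_possible = PRIOR[step:]
    return PRIOR[step] / sum(still_possible)


for i, dose in enumerate(DOSES_MG):
    print(f"{dose} mg: chance this dose is lethal = {risk_of_dose(i)}")
```

With these made-up numbers, the chance that the next dose is lethal climbs from 1/5 at 100 mg to 1/2 at 1000 mg, even though every earlier warning “failed”.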
It’s true and important to say “well, we don’t know, so it might be worth checking whether the answer is right now.” It probably won’t be right now the first few times we check! But that doesn’t make caution retroactively stupid and unjustified, or mean it’s not worth checking the tenth time.

Can we take this insight too far? Suppose Penny Panic says “If you elect the Republicans, they’ll cancel elections and rule as dictators!” Then they elect Republicans and it doesn’t happen. The next election cycle: “If you elect the Republicans, they’ll cancel elections and rule as dictators!” Then they elect Republicans again and it still doesn’t happen. After her saying this every election cycle, and being wrong every election cycle, shouldn’t we stop treating her words as meaningful?

I think we have to be careful to distinguish this from the useful cases above. It’s not true that the chance of Republicans becoming dictators increases each election until eventually it’s certain. This is different from our examples above:

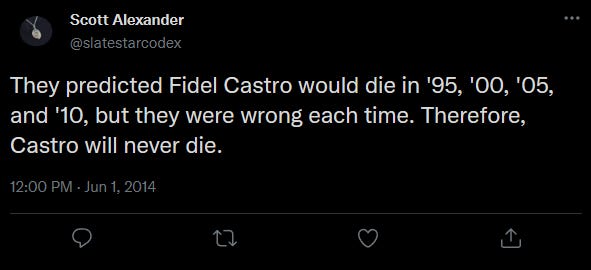

But it’s not true that at some point the Republicans have to overthrow democracy, with the chance getting higher each election. You should start with some fixed chance that the Republicans overthrow democracy per term (even if it’s 0.00001%). Then you shouldn’t change that number unless you get some new evidence. If Penny claims to have some special knowledge that the chance was higher than you thought, and you trust her, you might want to update to some higher number. Then, if she discredits herself by claiming very high chances of things that don’t happen, you might want to stop trusting her and downdate back to your original number.

You should do all of this in a Bayesian way, which means that if Penny gives a very low chance (eg 2% chance per term that the Republicans start a dictatorship) you should lose trust in her slowly, but if she gives a high chance (98% chance) you should lose trust in her quickly. Likewise, if your own previous estimate of dictatorship per administration was 0.00001%, then you should barely change it after a few good terms, but if it was 90%, then you should update it a lot. (if you thought the chance was 0.00001%, Penny thought it was 90%, and you previously thought you and Penny were about equally likely to be right and Aumann-updated to 45%, then after three safe elections, you should update from 45% to 0.09%. On the other hand, if Penny thought the chance was 2%, you agreed with her, and your carefree friend thought it was 0.0001%, then after the same three safe elections you’re still only at 49-51 between you and your friend)

Compare this to the situation with Castro. Your probability that he dies in any given year should be the actuarial table. If some pundit says he’ll die immediately and gets proven wrong, you should go back to the actuarial table. If Castro seems to be in about average health for his age, nothing short of discovering the Fountain of Youth should make you update away from the actuarial table.
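The two parenthetical calculations above can be reproduced with a small sketch of Bayesian model averaging. Assumptions: each person’s view is a hypothesis with a fixed per-term chance of dictatorship, trust starts out equal, and “three safe elections” means three quiet terms. The `Hypothesis` class and function names are illustrative only. It gives 45% falling to about 0.09% in the first case, and roughly 48.5 : 51.5 (the “49-51” above) in the second.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    name: str
    weight: float      # prior credence that this person is right
    p_per_term: float  # their chance of a dictatorship in any given term


def posterior_weights(hyps: list[Hypothesis], safe_terms: int) -> list[float]:
    """Bayes' rule: reweight each hypothesis by P(`safe_terms` quiet terms | hypothesis)."""
    unnormalized = [h.weight * (1 - h.p_per_term) ** safe_terms for h in hyps]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def predicted_risk(hyps: list[Hypothesis], weights: list[float]) -> float:
    """Credence-weighted chance of a dictatorship next term."""
    return sum(w * h.p_per_term for h, w in zip(hyps, weights))


# Case 1: you say 0.00001%, Penny says 90%, equal trust.
case1 = [Hypothesis("you", 0.5, 0.00001 / 100), Hypothesis("Penny", 0.5, 0.90)]
prior1 = predicted_risk(case1, [h.weight for h in case1])
after1 = predicted_risk(case1, posterior_weights(case1, safe_terms=3))
print(f"next-term risk: {prior1:.1%} before, {after1:.2%} after three safe terms")

# Case 2: you (agreeing with Penny) say 2%, your carefree friend says 0.0001%.
case2 = [Hypothesis("you", 0.5, 0.02), Hypothesis("friend", 0.5, 0.0001 / 100)]
w2 = posterior_weights(case2, safe_terms=3)
print(f"trust after three safe terms: you {w2[0]:.1%}, friend {w2[1]:.1%}")
```

The asymmetry falls out of the likelihoods: three quiet terms have probability 0.1³ = 0.001 under a 90% hypothesis but about 0.94 under a 2% one.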
I worry that people aren’t starting with some kind of rapidly rising graph for Putin’s level of response to various provocations, for elderly politicians’ dementia risk per year (hey, isn’t Trump 78?), or for AI getting more powerful over time. I think you should start with a graph like that, and then you’ll be able to take warnings of caution for what they are (a reminder of a risk which is low-probability at any given time, but adds up to a high probability eventually) rather than letting them toss your probability distribution around in random ways.

If you don’t do this, then “They said it would happen N years ago, they said it would happen N-1 years ago, they said it would happen N-2 years ago […] and it didn’t happen!” becomes a general argument against caution, one that you can always use to dismiss any warnings. Of course smart people who have your best interest in mind will warn you about a dangerous outcome before the moment when it is 100% guaranteed to happen! Don’t close off your ability to listen to them!
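To see how “low-probability at any given time” adds up, here is a sketch with an entirely made-up hazard: a 1% chance in the first period, growing 30% per period and capped at 100%. Each period’s risk is conditional on nothing having happened yet, so a string of quiet periods does not lower the next one, while the cumulative chance still climbs toward certainty. The function names and parameters are hypothetical, not a model of any of the specific risks above.

```python
def hazard(period: int, base: float = 0.01, growth: float = 1.3) -> float:
    """Hypothetical chance the event happens in `period`, given it hasn't happened yet."""
    return min(1.0, base * growth ** period)


def survival_curve(periods: int, base: float = 0.01, growth: float = 1.3) -> list[float]:
    """Probability that the event has NOT happened by the end of each period."""
    alive, curve = 1.0, []
    for t in range(periods):
        alive *= 1 - hazard(t, base, growth)
        curve.append(alive)
    return curve


curve = survival_curve(20)
for t in (0, 4, 9, 14, 19):
    print(f"period {t:2d}: risk this period {hazard(t):6.1%}, "
          f"happened by now {1 - curve[t]:6.1%}")
```

With these invented numbers the first five periods together carry under a 10% chance, which is exactly the stretch where the warnings look “wrong”; by period 18 the capped hazard reaches 100%.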
